Types of Network Solutions in Kubernetes

utkarsh srivastava

Kubernetes is built to run distributed systems across a cluster of machines. Kubernetes networking lets the parts of a cluster communicate with each other and with the outside world: containers with containers, pods with pods, pods with Services, and Services with external clients. This makes networking a core part of any Kubernetes deployment, and understanding the Kubernetes networking model is what lets us run, monitor, and troubleshoot applications on it.

In this blog, we will cover the following topics:

- Container-to-Container Communication
- Pod-to-Pod Communication
  - Intra-node pod network communication
  - Inter-node pod network communication
- Pod-to-Service Communication
- Internet-to-Service Communication
- ClusterIP (cluster-internal, kube-dns)
- NodePort (worker node IP)
- LoadBalancer (cloud provider load balancer, e.g. ELB)
- Ingress Controller (HAProxy, NGINX)

Container-to-Container Networking :-

Container-to-container communication within the same pod is straightforward because all containers in a pod share the same network namespace. This means they can communicate with each other over localhost, as long as each container listens on a different port.
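To make the shared-namespace idea concrete, here is a minimal Python sketch that *simulates* two containers with two threads in one process. A single process has a single network stack, so this mimics containers that share a pod's network namespace: the "app" listens on 127.0.0.1 and the "sidecar" reaches it via localhost. The function names and the use of port 0 (let the OS choose a free port) are illustrative assumptions, not anything Kubernetes defines.

```python
import socket
import threading


def run_app_container(server: socket.socket) -> None:
    """Simulated 'app' container: accept one connection and echo the bytes back."""
    conn, _ = server.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"echo:" + data)


def sidecar_request(port: int, payload: bytes) -> bytes:
    """Simulated 'sidecar' container: reach the app over localhost."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(payload)
        return client.recv(1024)


def demo() -> bytes:
    # Both "containers" share one network stack, so 127.0.0.1 reaches the same listener.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
    server.listen(1)
    port = server.getsockname()[1]

    app = threading.Thread(target=run_app_container, args=(server,))
    app.start()
    try:
        return sidecar_request(port, b"hello")
    finally:
        app.join()
        server.close()


if __name__ == "__main__":
    print(demo())
```

In a real pod the two sides would be separate containers, but the address they use (localhost plus a port) is the same.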

Pod-to-Pod Networking :-

Pod-to-Pod Communication within the Same Node (Intra-Node) :- Each pod has its own network namespace and its own IP address, so pods on the same node do not share localhost; they reach each other through their pod IP addresses. Each pod's namespace is connected to the node by a virtual Ethernet (veth) pair, and the node-side ends are typically attached to a Linux bridge (or routed directly, depending on the CNI plugin). Because the traffic never leaves the node, these interactions are fast and low-latency.

Pod-to-Pod Communication across Nodes (Inter-Node) :- When pods need to communicate across different nodes in the cluster, Kubernetes relies on a network plugin that implements the Container Network Interface (CNI) specification. Depending on the plugin, cross-node traffic is either encapsulated in an overlay network (for example VXLAN) that spans the entire cluster, or routed natively between nodes (for example with BGP). Some popular CNI plugins include Calico, Flannel, Weave, and Cilium. Whichever plugin is used, it must satisfy the Kubernetes networking model: every pod's IP address is reachable from every other pod without NAT, regardless of which node the pod runs on.

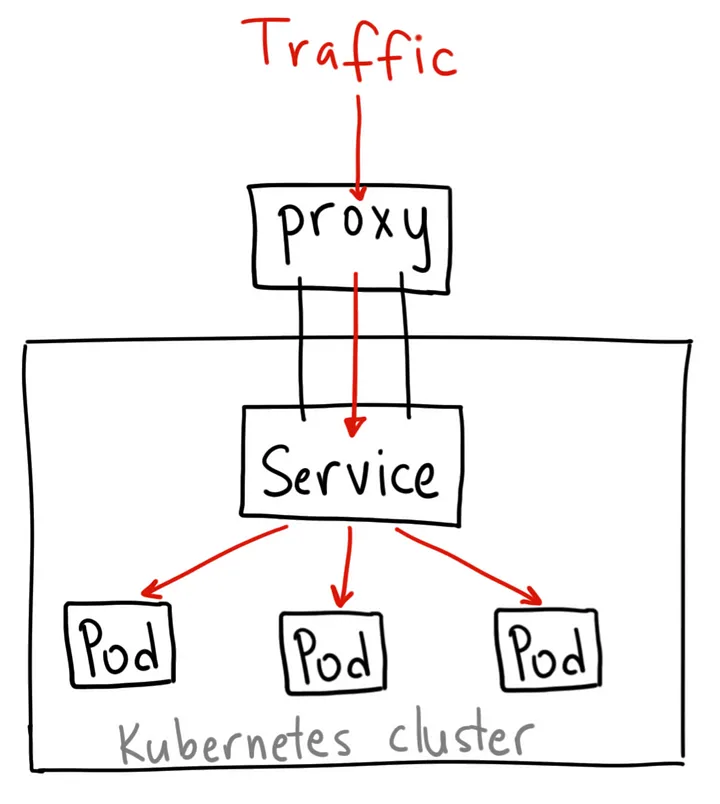
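The difference between the intra-node and inter-node cases comes down to where the destination pod IP lives. Many CNI plugins (Flannel, for example) give each node its own pod CIDR, and the Python sketch below uses the standard `ipaddress` module to show that lookup under this assumption. The node names and CIDRs are hypothetical, and real plugins make this decision in the kernel (routes, bridges, eBPF), not in application code.

```python
import ipaddress

# Hypothetical per-node pod CIDR allocation (comparable to each Node's spec.podCIDR).
NODE_POD_CIDRS = {
    "node-a": ipaddress.ip_network("10.244.0.0/24"),
    "node-b": ipaddress.ip_network("10.244.1.0/24"),
}


def node_for_pod_ip(pod_ip: str) -> str:
    """Return the node whose pod CIDR contains pod_ip."""
    addr = ipaddress.ip_address(pod_ip)
    for node, cidr in NODE_POD_CIDRS.items():
        if addr in cidr:
            return node
    raise LookupError(f"{pod_ip} is not in any known pod CIDR")


def path_between(src_ip: str, dst_ip: str) -> str:
    """Classify traffic as intra-node (local veth/bridge) or inter-node (via the CNI plugin)."""
    src_node = node_for_pod_ip(src_ip)
    dst_node = node_for_pod_ip(dst_ip)
    if src_node == dst_node:
        return f"intra-node: delivered locally on {src_node} via veth pair/bridge"
    return f"inter-node: {src_node} -> {dst_node} via the CNI plugin (overlay or native routing)"


print(path_between("10.244.0.5", "10.244.0.9"))
print(path_between("10.244.0.5", "10.244.1.7"))
```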

Pod-to-Service Networking :-

Kubernetes is designed to allow pods to be replaced dynamically, as needed. This means that pod IP addresses are not durable. To address this issue and ensure that communication with and between pods is maintained, Kubernetes uses services.

Kubernetes Services solve this by giving a group of pods a stable address. A Service is assigned a single virtual IP (its cluster IP), and Kubernetes keeps track of the IP addresses of the pods behind it as they come and go (in EndpointSlice objects). Any traffic sent to the virtual IP is then distributed to the associated pods, typically by kube-proxy on each node. A Service selects its pods based on the label selector defined in the Service.

Because the Service IP stays the same, pods behind it can be created and destroyed as needed without breaking communication. It also lets Kubernetes Services act as in-cluster load balancers, distributing traffic among the associated pods.
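The selector-plus-virtual-IP behaviour can be sketched in a few lines of Python. The sketch assumes equality-based selectors (every key/value in the selector must appear in the pod's labels, which is how a Service's `spec.selector` matches) and uses simple round-robin to pick a backend. In a real cluster kube-proxy programs iptables or IPVS rules instead, and its default iptables mode picks backends at random rather than round-robin. `ServiceSketch`, the pod names, labels, and IPs are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based match: every selector key/value must be present in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


class ServiceSketch:
    """Toy model of a Service: a stable virtual IP in front of pods chosen by label selector."""

    def __init__(self, cluster_ip: str, selector: dict):
        self.cluster_ip = cluster_ip
        self.selector = selector
        self._counter = 0  # round-robin position

    def endpoints(self, pods: list) -> list:
        # Only ready pods that match the selector receive traffic.
        return [p.ip for p in pods if p.ready and matches(self.selector, p.labels)]

    def pick_backend(self, pods: list) -> str:
        eps = self.endpoints(pods)
        if not eps:
            raise RuntimeError(f"no ready endpoints behind {self.cluster_ip}")
        backend = eps[self._counter % len(eps)]
        self._counter += 1
        return backend


pods = [
    Pod("backend-1", "10.244.0.5", {"app": "backend"}),
    Pod("backend-2", "10.244.1.7", {"app": "backend"}),
    Pod("frontend-1", "10.244.0.9", {"app": "frontend"}),
]
svc = ServiceSketch("10.96.0.20", {"app": "backend"})
print([svc.pick_backend(pods) for _ in range(4)])

# Replace a pod: the Service IP stays the same, only the endpoint list changes.
pods[0] = Pod("backend-1b", "10.244.2.3", {"app": "backend"})
print(svc.cluster_ip, svc.endpoints(pods))
```

Clients only ever address `10.96.0.20`; which pod IPs sit behind it can change freely.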

Internet-to-Service Networking :-

Whether you are using Kubernetes for internal or external applications, you generally need Internet connectivity. Inbound connectivity lets users reach your Services, which is what the NodePort, LoadBalancer, and Ingress options below provide; outbound connectivity lets pods reach resources outside the Kubernetes cluster, such as external services or databases. Whichever entry point an inbound request uses, it ends up at a Service, which selects all Pods matching its label selector, chooses one of them, and forwards the request to it.

ClusterIP :-

ClusterIP is the default Service type in Kubernetes and is used if you don't specify a type for a Service. Kubernetes assigns the Service a stable virtual IP address that is reachable only from inside the cluster (from pods and nodes), not from the outside world. The main use case is connecting frontend Pods to backend Pods: the backend is not exposed to the outside world for security reasons, while the frontend can still reach it through the Service's IP or its DNS name (resolved by the cluster DNS, kube-dns).

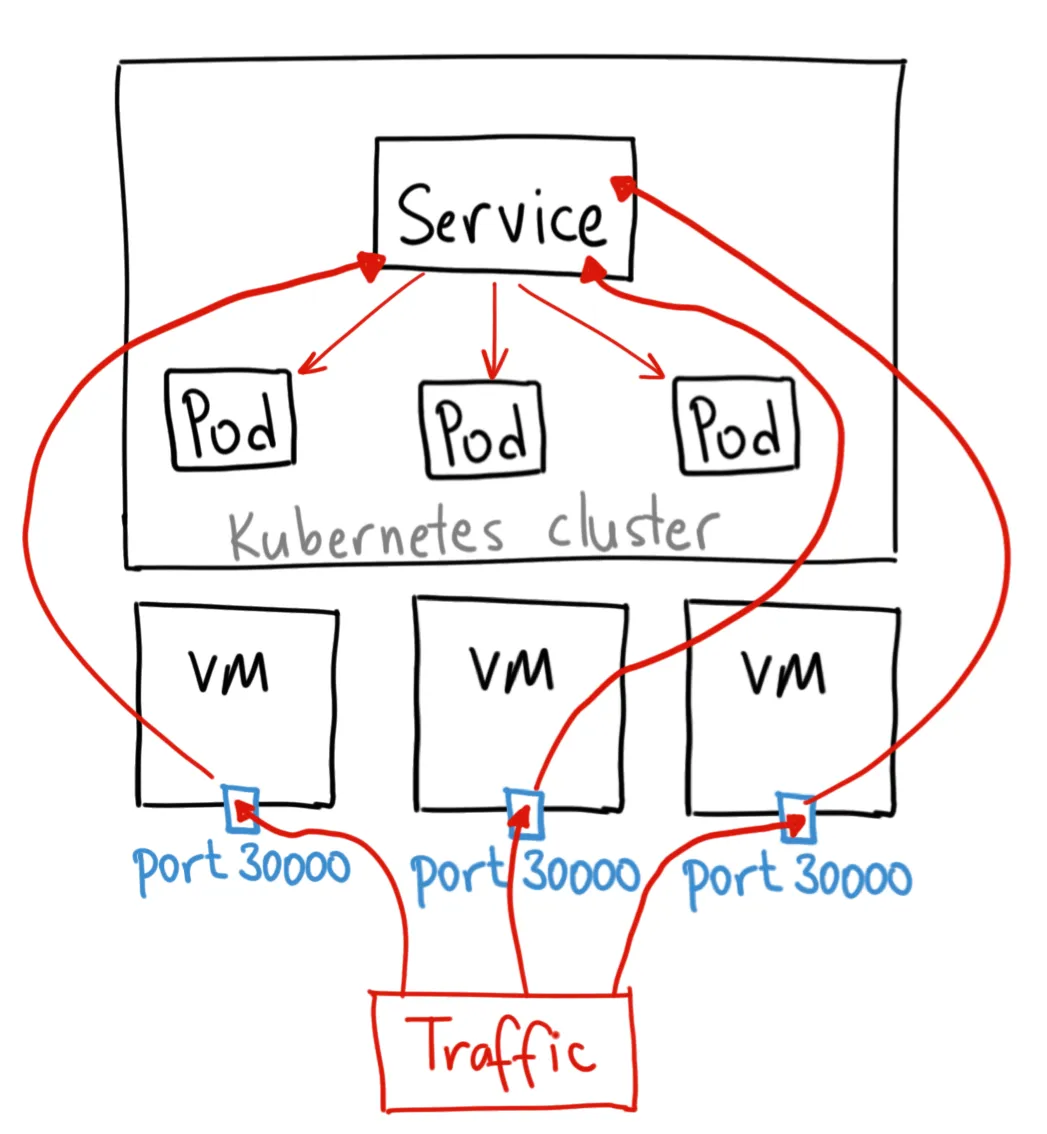
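Kubernetes accepts manifests in JSON as well as YAML, so a ClusterIP Service for the backend can be sketched as a plain Python dictionary and printed as JSON for `kubectl apply -f`. The Service name `backend`, the label `app: backend`, and the port numbers are assumptions for illustration. The sketch also builds the in-cluster DNS name the cluster DNS serves for the Service, assuming the default `cluster.local` cluster domain.

```python
import json


def cluster_ip_service(name: str, namespace: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a ClusterIP Service manifest (omitting "type" would also default to ClusterIP)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "ClusterIP",  # the default; shown explicitly for clarity
            "selector": {"app": app_label},  # pods carrying this label become endpoints
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """In-cluster DNS name of a Service, assuming the default cluster domain."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


manifest = cluster_ip_service("backend", "default", "backend", port=80, target_port=8080)
print(json.dumps(manifest, indent=2))  # save to a file, then: kubectl apply -f <file>
print(service_dns_name("backend", "default"))  # the name frontend pods would call
```

Frontend pods in the same namespace can usually use the short name `backend`, since the pod's DNS search domains expand it to the full name.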

NodePort :-

A NodePort service is the most primitive way to get external traffic directly to your service. NodePort, as the name implies, opens a specific port on all the Nodes (the VMs), and any traffic that is sent to this port is forwarded to the service.

Use Cases:

External Access:

- NodePort is commonly used when you need to expose a service to the external world, such as for testing or when LoadBalancer is not available.

Development and Testing:

- Useful during development and testing phases when a more complex LoadBalancer setup is not required.

Important Considerations:

Port Range:

- NodePort values range from 30000 to 32767 by default; the range can be changed with the kube-apiserver flag `--service-node-port-range`.

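Node IPs and Security:

- Clients connect to node IPs directly, so if your Node/VM IP addresses change, you need to deal with that yourself. Because the port is opened on every node, restrict access to it (for example with firewall rules) if the service should not be public.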

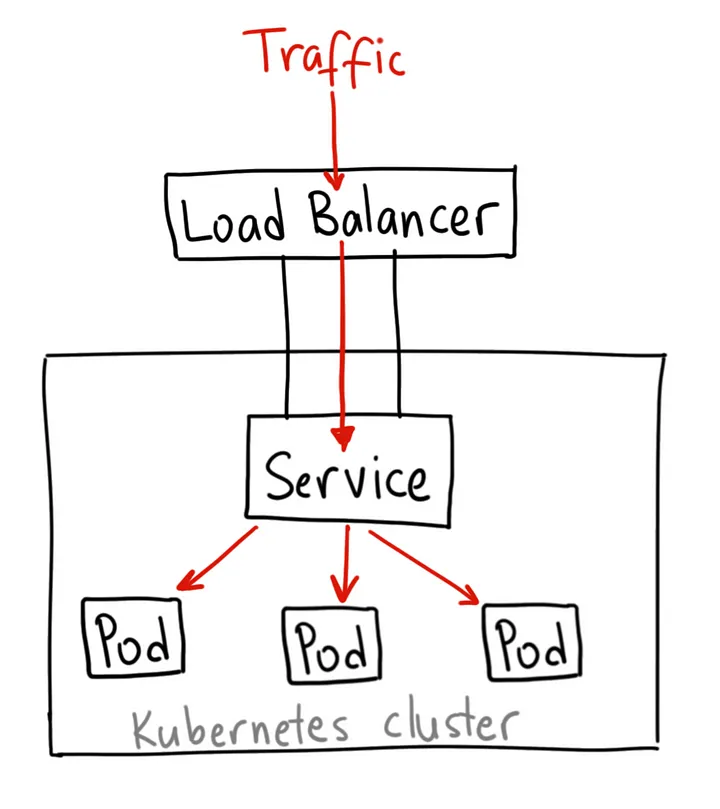
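A NodePort Service is a ClusterIP Service plus a port opened on every node. The Python sketch below builds such a manifest and checks an explicitly requested `nodePort` against the default 30000-32767 range; if your cluster's kube-apiserver uses a different `--service-node-port-range`, adjust the constants. Leaving `nodePort` out lets Kubernetes allocate one. The Service name, label, ports, and worker IPs are illustrative assumptions.

```python
import json
from typing import Optional

# Default NodePort range; kube-apiserver's --service-node-port-range can change it.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def node_port_service(name: str, app_label: str, port: int, target_port: int,
                      node_port: Optional[int] = None) -> dict:
    """Build a NodePort Service manifest; omit node_port to let Kubernetes pick one."""
    port_spec = {"protocol": "TCP", "port": port, "targetPort": target_port}
    if node_port is not None:
        if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
            raise ValueError(f"nodePort {node_port} outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "NodePort", "selector": {"app": app_label}, "ports": [port_spec]},
    }


def external_urls(node_ips: list, node_port: int) -> list:
    """Every node answers on the same port, so clients may use any node IP."""
    return [f"http://{ip}:{node_port}" for ip in node_ips]


svc = node_port_service("web", "web", port=80, target_port=8080, node_port=30080)
print(json.dumps(svc, indent=2))
print(external_urls(["192.168.1.10", "192.168.1.11"], 30080))  # hypothetical worker IPs
```

The `external_urls` helper also shows the IP-change concern above: those URLs embed node addresses, so they break if a node's IP changes.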

LoadBalancer :-

The LoadBalancer Service is the standard way to expose a Service to the outside world or the internet. It acts as a traffic manager: incoming requests are spread across the available instances to optimize performance and prevent overload on any single instance, providing high availability and scalability.

In Kubernetes, the load balancer itself is provisioned by the cloud provider, for example Azure Load Balancer or AWS Elastic Load Balancing (a Classic Load Balancer or a Network Load Balancer (NLB)), and typically operates at Layer 4 (the transport layer) of the OSI model. Each LoadBalancer Service gets its own external load balancer.
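Declaring a LoadBalancer Service looks almost identical to the other types; the cloud controller then provisions the provider's load balancer and records its address in the Service's `status.loadBalancer.ingress` list (an `ip` on some providers, a `hostname` on AWS). The Python sketch below builds the manifest and reads that address back. The Service name, ports, and the sample status with its hostname are made up for illustration.

```python
import json
from typing import Optional


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a LoadBalancer Service manifest; the cloud provider fills in the external address."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def external_address(service: dict) -> Optional[str]:
    """Return the provisioned IP or hostname, or None while provisioning is still pending."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not ingress:
        return None
    return ingress[0].get("ip") or ingress[0].get("hostname")


svc = load_balancer_service("web", "web", port=80, target_port=8080)
print(json.dumps(svc, indent=2))
print(external_address(svc))  # None: nothing provisioned yet

# Hypothetical status, as it might look after AWS provisions a load balancer.
svc["status"] = {"loadBalancer": {"ingress": [{"hostname": "example-lb.elb.amazonaws.com"}]}}
print(external_address(svc))
```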

Ingress Controller :-

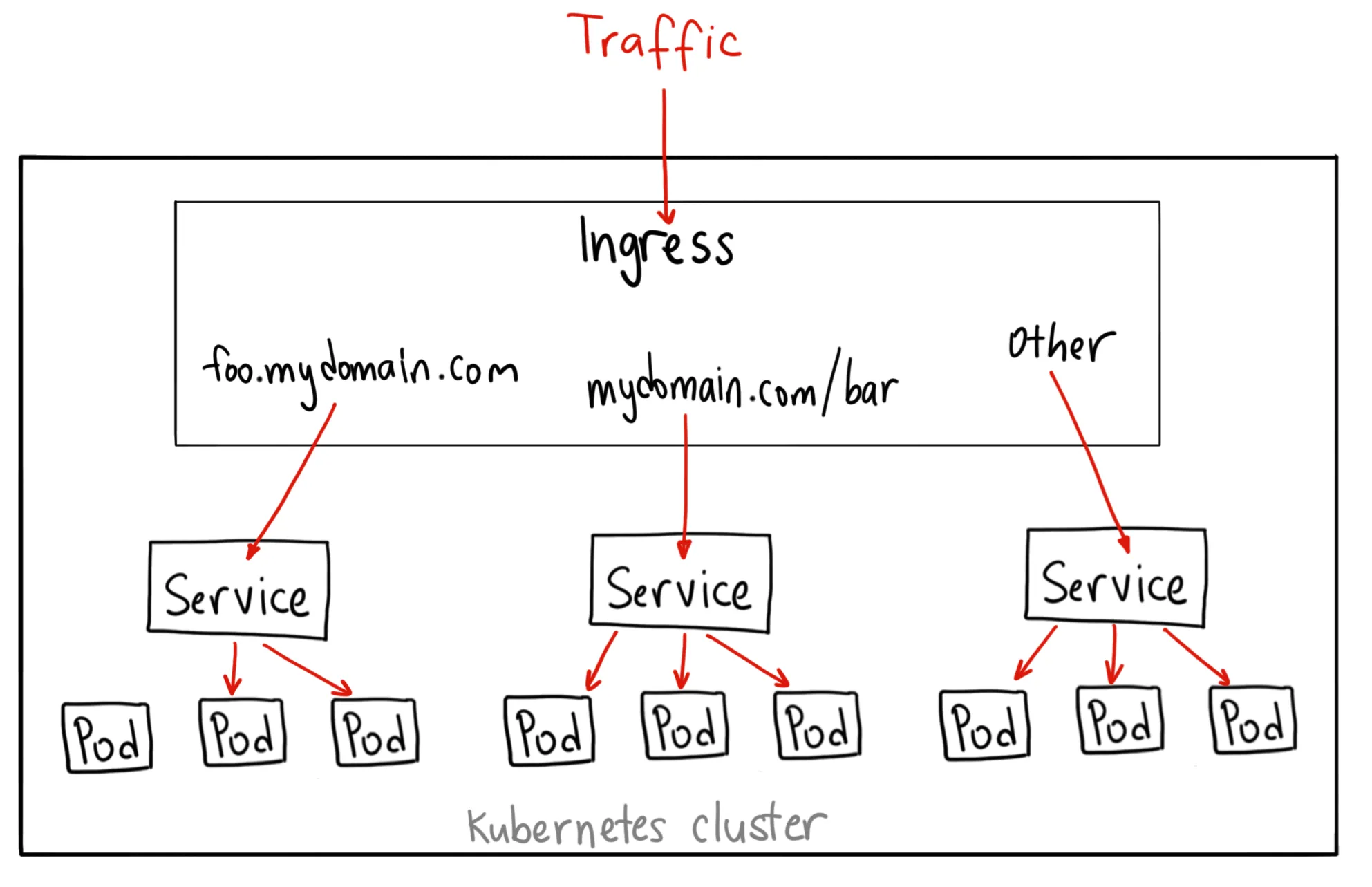

Unlike all the above examples, Ingress is actually NOT a type of Service. Instead, it sits in front of multiple Services and acts as a “smart router” or entry point into your cluster. An Ingress resource does nothing on its own: it is implemented by an Ingress controller, typically built on a reverse proxy such as NGINX or HAProxy. The result is a Layer 7 (HTTP/HTTPS) load balancer.

You can do a lot of different things with an Ingress, and different Ingress controllers have different capabilities. Most support both path-based and subdomain-based (host-based) routing to backend Services. For example, you can send everything on foo.yourdomain.com to the foo service, and everything under the yourdomain.com/bar/ path to the bar service.
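The routing in that example can be modelled with a small Python sketch: each rule pairs a host with a path prefix and a backend Service, and the longest matching prefix wins. This is a simplification of the Ingress `Prefix` path type, which matches whole path segments (so `/bar` matches `/bar/x` but not `/barn`); real controllers also handle exact matches, wildcard hosts, and default backends. The hosts and service names come from the example above, while `Rule`, `prefix_matches`, and `route` are hypothetical helpers.

```python
from typing import NamedTuple, Optional


class Rule(NamedTuple):
    host: Optional[str]  # None matches any host
    path_prefix: str     # matched segment by segment, like pathType: Prefix
    service: str


RULES = [
    Rule("foo.yourdomain.com", "/", "foo"),
    Rule("yourdomain.com", "/bar", "bar"),
]


def segments(path: str) -> list:
    """Split a URL path into non-empty segments ('/bar/' -> ['bar'])."""
    return [part for part in path.split("/") if part]


def prefix_matches(prefix: str, path: str) -> bool:
    """Segment-wise prefix match: '/bar' matches '/bar' and '/bar/x' but not '/barn'."""
    prefix_parts = segments(prefix)
    return segments(path)[:len(prefix_parts)] == prefix_parts


def route(host: str, path: str, rules=RULES) -> Optional[str]:
    """Pick the backend Service for a request; the longest matching prefix wins."""
    candidates = [r for r in rules
                  if (r.host is None or r.host == host) and prefix_matches(r.path_prefix, path)]
    if not candidates:
        return None  # a real controller would fall back to its default backend
    return max(candidates, key=lambda r: len(segments(r.path_prefix))).service


print(route("foo.yourdomain.com", "/anything"))
print(route("yourdomain.com", "/bar/page"))
print(route("yourdomain.com", "/barn"))
```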

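Because a single Ingress can front many Services, you pay for only one cloud load balancer (for example with the native AWS integration) instead of one per LoadBalancer Service. And because Ingress is “smart”, you get many features out of the box, such as SSL/TLS termination, authentication, and routing (the exact set depends on the controller).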
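For reference, the same host and path routing expressed as a `networking.k8s.io/v1` Ingress might look like the Python-built manifest below, including a `tls` section for SSL/TLS termination. The Ingress name, the `ingressClassName` value `nginx`, the Secret name `yourdomain-tls`, and port 80 are assumptions; use the class your controller registers and a TLS Secret that actually exists in the namespace.

```python
import json


def backend(service: str, port: int) -> dict:
    """Backend block pointing at a Service by name and port number."""
    return {"service": {"name": service, "port": {"number": port}}}


def ingress_manifest() -> dict:
    """One Ingress (and so one external load balancer) fronting two Services."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "yourdomain"},
        "spec": {
            "ingressClassName": "nginx",  # assumption: an NGINX-based controller is installed
            "tls": [{"hosts": ["foo.yourdomain.com", "yourdomain.com"],
                     "secretName": "yourdomain-tls"}],  # hypothetical TLS Secret
            "rules": [
                {"host": "foo.yourdomain.com",
                 "http": {"paths": [{"path": "/", "pathType": "Prefix",
                                     "backend": backend("foo", 80)}]}},
                {"host": "yourdomain.com",
                 "http": {"paths": [{"path": "/bar", "pathType": "Prefix",
                                     "backend": backend("bar", 80)}]}},
            ],
        },
    }


print(json.dumps(ingress_manifest(), indent=2))  # apply with: kubectl apply -f <file>
```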

Conclusion:-

These network solutions collectively contribute to the effective and reliable communication infrastructure within Kubernetes clusters, supporting the deployment and scaling of containerized applications. The choice of a specific solution depends on factors such as use case, performance requirements, and security considerations.

Feel free to ask questions related to this topic. I will be happy to help you.

Connect with me:- utkarshsri0701@gmail.com / serv-ar-tistry Studio

Written by

utkarsh srivastava

A seasoned IT professional with over 10 years of experience, crafting innovative solutions at serv-ar-tistry Studio. I am a passionate leader with a proven track record of success in the cloud, infrastructure, and DevOps space. After gaining extensive experience working for various multinational corporations across diverse industries, I took the leap to pursue my entrepreneurial dream and founded serv-ar-tistry Studio. At serv-ar-tistry Studio, we are dedicated to empowering businesses with cutting-edge cloud infrastructure and DevOps solutions. Our team of skilled professionals leverages their expertise to deliver: Scalable and secure cloud solutions: We help businesses migrate and optimize their infrastructure on leading cloud platforms, ensuring efficient resource utilization and cost-effectiveness. Robust infrastructure management: We design, implement, and manage robust infrastructure solutions that are reliable, secure, and adaptable to evolving business needs. Streamlined DevOps workflows: We automate and optimize development and deployment processes, enabling businesses to deliver software faster and with higher quality.