GANs 101: Unraveling the Wonders of Generative Adversarial Networks 🌌

Sahil Madhyan

Motivation 🚀

In the vast landscape of artificial intelligence, GANs emerge as a solution to a crucial problem: learning to generate data without explicitly modelling its distribution. Traditional methods for tasks like classification or clustering learn to label or group existing data rather than to produce new examples, so they often fall short in capturing the nuanced diversity of the data. GANs, as generative models, need no labels. They start from random noise and learn to produce novel, realistic data across various domains without predefined rules.

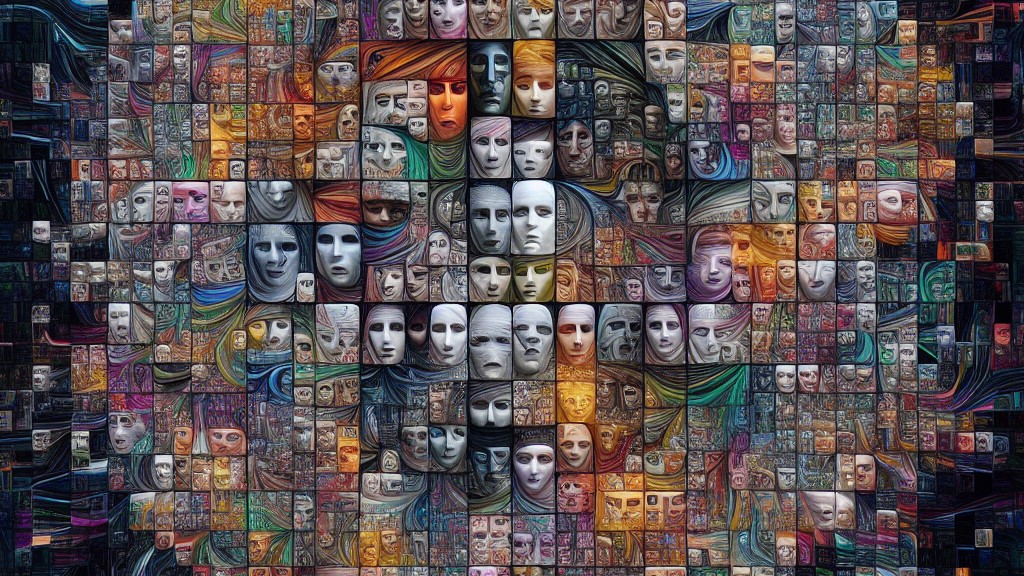

Introduction 🎭

So, how do GANs weave their magic? At their core, GANs set up a captivating duel between two players: the generator and the discriminator. The generator crafts fake data mimicking the real, while the discriminator distinguishes between real and fake. This adversarial dance unfolds as both entities refine their strategies simultaneously, leading to a balance where the discriminator can no longer discern reality from fabrication.

Working 🧠

Pic Credit: Introduction to generative adversarial network

The working of GANs revolves around two key components: the generator network (G) and the discriminator network (D). The generator crafts fake data from random noise, attempting to emulate the real data distribution. Meanwhile, the discriminator evaluates the authenticity of its input, outputting the probability that the input is real. Each network is trained by minimizing its own loss function, which gauges its performance. For the generator, this means maximizing the probability of fooling the discriminator; for the discriminator, it means maximizing its accuracy in distinguishing real from fake.
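
To make the two roles concrete, here is a minimal sketch of a single forward pass. It is only an illustration under strong assumptions: the generator is a hypothetical one-dimensional affine map and the discriminator is a hypothetical logistic function, standing in for the neural networks a real GAN would use. All names and parameter values are made up for the example.

```python
import math
import random

def generator(z, scale=2.0, shift=1.0):
    """Toy generator G: maps a noise value z to a fake sample (hypothetical affine map)."""
    return scale * z + shift

def discriminator(x, weight=0.5, bias=-0.5):
    """Toy discriminator D: returns an estimated probability that x is real."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

rng = random.Random(0)                                   # fixed seed for repeatability
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]          # z sampled from N(0, 1)
fake_samples = [generator(z) for z in noise]             # G(z)
fake_scores = [discriminator(x) for x in fake_samples]   # D(G(z)), each strictly between 0 and 1

print(fake_scores)
```

In a real GAN, training would adjust the parameters of both functions; this sketch only shows how noise flows through G and then into D.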

Loss Function (L): Measures how well the generator and discriminator perform their tasks. Each network has its own loss, defined below.

Generator Loss (L_G): Measures how well the generator fools the discriminator.

L_G = -E_z[log(D(G(z)))]

z is a random noise vector sampled from a distribution.

G(z) is the generated fake sample.

D(G(z)) is the discriminator's output for the generated fake sample, that is, its estimated probability that the sample is real.

log(D(G(z))) is the log of the probability that the discriminator assigns to the generated fake sample being real.

E_z represents the expectation over the noise distribution.

Discriminator Loss (L_D): Measures how well the discriminator distinguishes between real and fake.

L_D = -E_x[log(D(x))] - E_z[log(1-D(G(z)))]

x is a real sample from the true data distribution.

D(x) is the discriminator's output for the real sample, that is, its estimated probability that the sample is real.

log(D(x)) is the log of the probability that the discriminator assigns to the real sample being real.

G(z) is the generated fake sample.

D(G(z)) is the discriminator's output for the generated fake sample.

log(1−D(G(z))) is the log probability that the discriminator assigns to the generated fake sample being fake.

E_x and E_z represent expectations over the real data distribution and the noise distribution, respectively.
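
As a rough illustration, the two losses above can be estimated from a batch of discriminator outputs by replacing each expectation with an average. The scores below are made-up numbers rather than the output of a trained model, and the function names are chosen just for this sketch.

```python
import math

def generator_loss(fake_scores):
    """L_G = -E_z[log(D(G(z)))], estimated as a batch average."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

def discriminator_loss(real_scores, fake_scores):
    """L_D = -E_x[log(D(x))] - E_z[log(1-D(G(z)))], estimated as batch averages."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

# Hypothetical discriminator outputs: it is confident on both real and fake samples.
real_scores = [0.9, 0.8, 0.95]   # D(x) for real samples
fake_scores = [0.1, 0.2, 0.05]   # D(G(z)) for generated samples

loss_g = generator_loss(fake_scores)
loss_d = discriminator_loss(real_scores, fake_scores)
print(loss_g, loss_d)
```

With these scores the discriminator is winning, so its loss is small while the generator's loss is large. If the generator improves and D(G(z)) rises, the two values move in opposite directions.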

The generator strives to minimize its loss by maximizing the probability that the discriminator assigns to its fake samples being real. Meanwhile, the discriminator aims to minimize its loss by maximizing the probability assigned to real samples and minimizing the probability assigned to fake samples. This adversarial dance continues until, ideally, both networks reach a Nash equilibrium, where neither can improve its own loss by changing only its own parameters.
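
For a sense of what that balance looks like numerically, consider the idealized case in which the discriminator can no longer tell real from fake and outputs 0.5 for every input. This is an assumption about the ideal equilibrium, not something training is guaranteed to reach. Plugging 0.5 into the two loss formulas gives:

```python
import math

d_out = 0.5  # assumed discriminator output for every sample, real or fake

loss_g_eq = -math.log(d_out)                          # L_G = log 2
loss_d_eq = -math.log(d_out) - math.log(1.0 - d_out)  # L_D = 2 log 2 = log 4

print(loss_g_eq, loss_d_eq)
```

In practice, losses hovering near these values can hint that the two networks are roughly balanced, although they do not by themselves show that the generated samples are good.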

Challenges and Limitations 🤔

While GANs boast impressive capabilities, they grapple with challenges:

Mode collapse: The generator may produce limited variations of data, missing the full diversity of the distribution.

Vanishing gradients: The gradients used to update a network, typically the generator when the discriminator becomes too confident, can become extremely small, hindering parameter updates.

Evaluation difficulties: Measuring the quality and diversity of generated data lacks a clear, objective metric, introducing subjectivity and inconsistency.

Ethical and social concerns: GANs can be a double-edged sword, with potential positive impacts on fields like art and medicine but also raising ethical issues regarding privacy and trust.
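
The vanishing gradient issue listed above can be seen in a small calculation. The sketch assumes the discriminator's output is a sigmoid of a raw score a (often called a logit), so that D(G(z)) = sigmoid(a). It compares the gradient with respect to a of two possible generator objectives: log(1-D(G(z))), the term that appears in L_D, and -log(D(G(z))), the L_G used in this article. The function names are made up for the example.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def grad_log_one_minus_d(a):
    """Derivative of log(1 - sigmoid(a)) with respect to a, which equals -sigmoid(a)."""
    return -sigmoid(a)

def grad_neg_log_d(a):
    """Derivative of -log(sigmoid(a)) with respect to a, which equals -(1 - sigmoid(a))."""
    return -(1.0 - sigmoid(a))

# A very negative logit: the discriminator is confident the sample is fake.
logit = -8.0
g_saturating = grad_log_one_minus_d(logit)   # magnitude close to 0
g_non_saturating = grad_neg_log_d(logit)     # magnitude close to 1

print(g_saturating, g_non_saturating)
```

When the discriminator confidently rejects a fake sample, the first objective passes almost no gradient back to the generator, while the second still provides a useful signal. That is one reason the -log(D(G(z))) form is commonly preferred for the generator.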

Types of GANs 🔄

Various GAN iterations have evolved since their inception:

DCGAN (Deep Convolutional GAN): Utilizes convolutional neural networks for enhanced stability and performance.

WGAN (Wasserstein GAN): Introduces the Wasserstein distance for a more robust measurement of data distribution distance.

CGAN (Conditional GAN): Empowers control over the generator's output by introducing conditional variables.

CycleGAN: Facilitates style or domain transfer between images without paired data.
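
As one small example from the list above, a common way to introduce a conditional variable in a CGAN is to append a one-hot class label to the noise vector before it enters the generator. The sketch below shows only that input construction, under the assumption of three classes and a four-dimensional noise vector. The helper names are hypothetical and the generator network itself is omitted.

```python
import random

def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def conditional_generator_input(noise, label, num_classes):
    """Concatenate the noise vector with the one-hot label (hypothetical helper)."""
    return noise + one_hot(label, num_classes)

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]   # z, 4-dimensional
g_input = conditional_generator_input(noise, label=2, num_classes=3)

print(len(g_input))   # 4 noise values followed by 3 label values
```

The discriminator would typically receive the same label alongside each sample, so both networks learn what "class 2" is supposed to look like.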

Applications of GANs 🎨

GANs have found applications in a multitude of domains:

Image synthesis, editing, enhancement, translation, and super-resolution 📸✨

- Example: StyleGAN for creating hyper-realistic faces.

Video generation, prediction, synthesis, editing, enhancement, and translation 🎥🚀

- Example: DeepFake technology for realistic video face swaps.

Future of GANs 🔮

The future of GANs holds promise but demands overcoming challenges:

Enhancing stability and convergence: A fundamental challenge in ensuring GANs converge to optimal solutions without mode collapse or vanishing gradients.

Theoretical analysis and empirical studies: A deeper understanding of GAN dynamics and properties, exploring why certain architectures or hyperparameters outshine others.

Conclusion 🚀✨

As we journey through the captivating landscape of GANs, envision a future where these neural artisans shape an AI-driven realm bursting with creativity and innovation. 🚀✨ Join the adventure and witness the evolution of GANs, painting a canvas of possibilities limited only by our imagination! 🎨🌐

Embark on your exploration of GANs, where every discovery opens a new door to the vast horizons of artificial intelligence. Dive into the world of generative magic, and let your curiosity be the guiding star in this exciting journey of innovation and possibilities! 🚀🌟 Share your thoughts on the future of GANs, and together, let's shape the next chapter in this fascinating realm of technology! 💬🤖

If you enjoyed reading this blog, please take a moment to leave a comment and let me know your thoughts. Your feedback is essential to help me improve my writing and create better content that meets your needs and interests. As a beginner, I value your opinion and would be grateful for any insights you may have. Thank you for taking the time to share your feedback!💫

Written by

Sahil Madhyan

A tech enthusiast with a keen interest in AI/ML. Beyond the digital realm, exploring diverse passions and striving for dynamic integration. 🚀💡