How do I convert a Django QuerySet into a Polars Dataframe?

ayoub arahmat

ayoub arahmat

The Problem:

In my software engineering journey, I faced a problem while working on a Django project when I had to grab a bunch of data from a PostgreSQL server and mess around with it to do some data analysis. The usual way to do this is by running a fancy SELECT SQL query on a big table (more than 8 gigabytes in my case) and turning the results into a dataframe. But here's the catch: this method has its downsides

Loading data from PostgreSQL to a variable and then transforming it into a dataframe carries a potential risk of memory allocation errors, particularly on machines with limited memory capacity. Additionally, This approach may impact memory and significantly influence the speed of data loading. so how can we solve this?

The short answer is Polars 🐻❄️.

Polars is a highly performant DataFrame library for manipulating structured data. The core is written in Rust, but the library is also in Python. Polars is very fast, and in fact, is one of the best-performing solutions available

in this article, I will show you step-by-step how to convert a huge table to a Polars dataframe to perform exploratory data analysis and apply analytical techniques ...etc

you will find

Project Requirements:

[packages]

django = "==4.2"

connectorx = "==0.2.3"

django-environ = "==0.11.2"

polars = "==0.19.19"

psycopg = "==3.1.8"

ConnectorX package enables you to load data from databases into Python in the fastest and most memory-efficient way.

Note: connectorx requires pyarrow package to be installed: pipenv install pyarrow

Download the Django project from this link and follow the steps in the README.md to set up the project

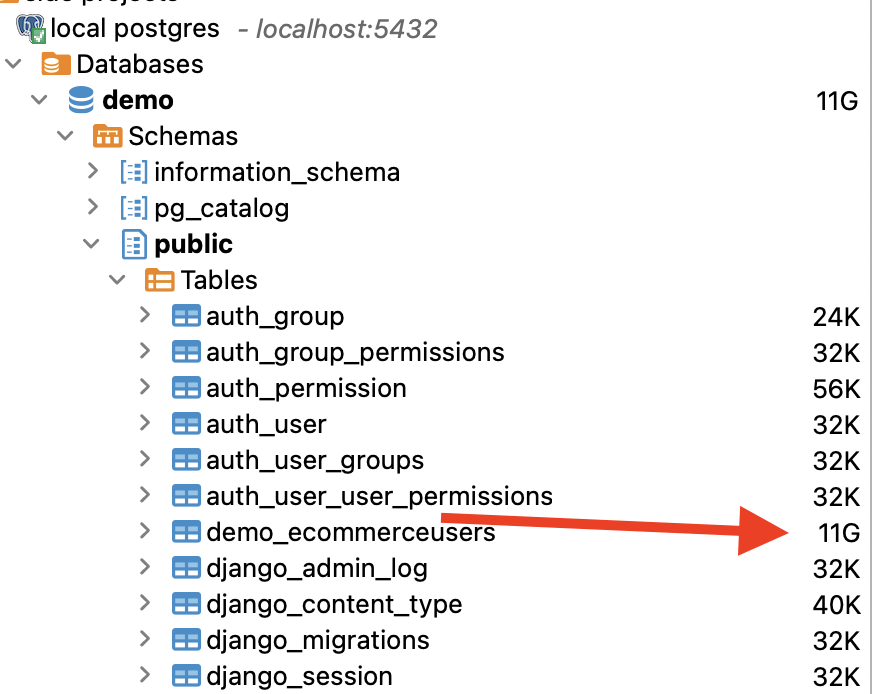

We have a table in our Django project ECommerceUsers that contains over 67 million rows (~11GB).

These are the attributes of our table (Django Model) :

from django.db import models

class ECommerceUsers(models.Model):

event_time = models.DateTimeField(auto_now=False, auto_now_add=False)

event_type = models.CharField(max_length=50)

product_id = models.IntegerField()

category_id = models.BigIntegerField()

category_code = models.CharField(max_length=255, null=True)

brand = models.CharField(max_length=50, null=True)

price = models.DecimalField(max_digits=8, decimal_places=2)

user_id = models.BigIntegerField()

user_session = models.CharField(max_length=50, null=True)

Now Polars has by default a function that reads the results of a SQL query into a DataFrame, given a connection object.

Example :

import polars as pl

df = pl.read_database(

query="SELECT * FROM test_data",

connection=user_conn,

schema_overrides={"normalised_score": pl.UInt8},

)

To take advantage of this Polars's function, we therefore need a mechanism that allows us to convert any set of queries we write in our project into a Polars data-frame.

The optimal method for accomplishing this involves adding a Manager to our specific model by extending the base Manager class. Instantiate your custom Manager in your model, and subsequently, incorporate additional Manager methods. This is the recommended approach for introducing 'table-level' functionality to our models. It enables us to execute the query as follows :

ECommerceUsers.objects.all().to_polars() # select all

# OR

ECommerceUsers.objects.all().[:10].to_polars() # first 10 users

# OR

from django.db.models.functions import Round

ECommerceUsers.objects

.annotate(price_rounded=Round('price'))

.values('price_rounded', 'event_type')

.to_polars()

# ... etc

There are two reasons you might want to customize a

Manager: to add extraManagermethods, and/or to modify the initialQuerySettheManagerreturns.

now let's create the Manager class but first, we have to create an instantiated connection (or cursor/client object) that the query can be executed against that we will pass to the connection params in read_database polar's function, let's do it ⌛️

We have created a demo/utils.py in which we add this function

from django.conf import settings

def database_uri(using: str = "default") -> str:

"""

Generate A PostgreSQL database URI based on Django settings

:param using (str, optional): the database alias to use. Defaults to "default".

"""

db_config = settings.DATABASES[using]

db_name = db_config["NAME"]

db_user = db_config["USER"]

db_password = db_config["PASSWORD"]

db_host = db_config["HOST"]

db_port = db_config["PORT"]

return f"postgresql://{db_user}:{db_password}@{db_host}:{db_port}/{db_name}"

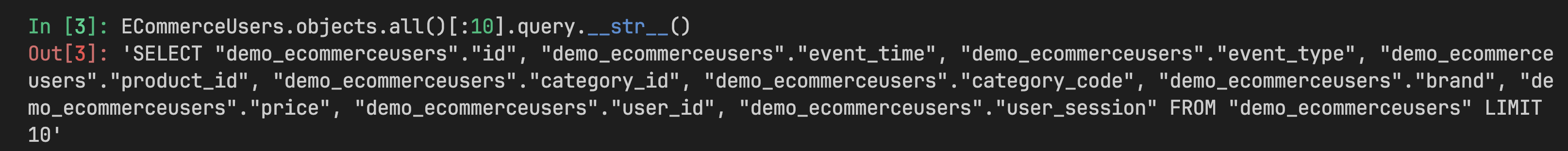

Next, because the polar function takes the query as a parameter, we need to convert our QuerySet set into an SQL statement. but how 🤨?

➡️ to convert a Django QuerySet into an SQL statement in Django, we need to call the.query.__str__() look at the image below ⬇️

The Solution :

A Manager is the interface through which database query operations are provided to Django models. At least one Manager exists for every model in a Django application.

from django.db import models

from django.db.models.functions import Round

from demo.utils import database_uri

import polars as pl

class ECommerceQuerySet(models.query.QuerySet):

def to_polars(self):

queryset = self

connection = database_uri() # connection object

sql_query = queryset.query.__str__() # sql statement

return pl.read_database(query=sql_query, connection=connection)

class ECommerceUsersManager(models.Manager):

def get_queryset(self):

return ECommerceQuerySet(self.model, using=self._db)

class ECommerceUsers(models.Model):

# ...

objects = ECommerceUsersManager()

🔥 Now let's try to load the 11 GB of data once and calculate how long it will take 🔥.

To do this, I'm going to create a temporary command called load_ecommerce_users_data.py in demo/management/commands/load_ecommerce_users_data.py.

and use the time build-in package to calculate loading_time

I am using a Macbook M1 Pro with 32 GB of RAM.

import time

from django.core.management.base import BaseCommand

from demo.models import ECommerceUsers

class Command(BaseCommand):

help = "Data Loading command."

def handle(self, *args, **options):

start_time = time.time()

df = ECommerceUsers.objects.all().to_polars()

loading_time = time.time() - start_time

print(f"data loading time is : {loading_time//60} minutes")

Conclusion

In summary, when converting Django query sets to Polars DataFrames, make sure to carefully follow the provided instructions and documentation for effective use. To enhance compatibility with different databases like MySQL, SQL Server, and Oracle, further improvements are needed. It's crucial to find the right balance between efficiency and adaptability during implementation. Stay informed and consider these aspects to get the most out of the conversion process.

Subscribe to my newsletter

Read articles from ayoub arahmat directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by