Understanding the Learning Curves in ML

Juan Carlos Olamendy

Have you ever wondered how to interpret learning curves in Machine Learning?

What do those curves really mean?

Do you have a hard time making sense of them?

I've got you. Don't worry. I will explain those concepts in simple terms.

This article will dig into the mysteries of learning curves in Machine Learning (ML), guiding you through a real-world example of training a neural network.

Introduction to the Learning Process

Imagine you're teaching a child to ride a bike.

At first, they're wobbly and unsure, much like a neural network that's just started learning.

Over time, with practice (or more training), they get steadier.

This process is akin to the evolving learning curves of a neural network.

Initial Training

The initial step is to look at the model's training loss.

High training loss?

That's a sign of struggle.

It usually means the network is underfitting. In other words, it's too simple, or hasn't trained long enough, to capture the patterns in the data.

Much like a child staring at a bicycle, not understanding how to ride it.

Growth and Learning

To address underfitting, we enhance the model's capacity, allowing it to learn more complex patterns.

It's like giving the child training wheels.

The result? A lower training loss.
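Here is a minimal sketch of that effect. It is not a neural network: it assumes NumPy, a made-up noisy sine-wave dataset, and uses polynomial degree as a stand-in for model capacity. The names `x_train`, `y_train`, and `training_loss` are hypothetical and only serve the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: a noisy sine wave standing in for a real training set.
x_train = np.linspace(-1.0, 1.0, 30)
y_train = np.sin(3.0 * x_train) + rng.normal(0.0, 0.1, size=x_train.shape)

def training_loss(degree):
    """Fit a polynomial of the given degree and return its training MSE."""
    coeffs = np.polyfit(x_train, y_train, degree)
    predictions = np.polyval(coeffs, x_train)
    return float(np.mean((predictions - y_train) ** 2))

# Polynomial degree plays the role of model capacity here.
losses = {degree: training_loss(degree) for degree in (1, 3, 5)}
for degree, loss in losses.items():
    print(f"degree={degree}  training MSE={loss:.4f}")
```

The straight line (degree 1) can't follow the curve, so its training loss stays high. The higher-degree fits have more room to bend, and their training loss drops.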

The model isn't underfitting anymore. But is it learning correctly, or just memorizing?

That's a critical question. We need to evaluate this model.

Validation

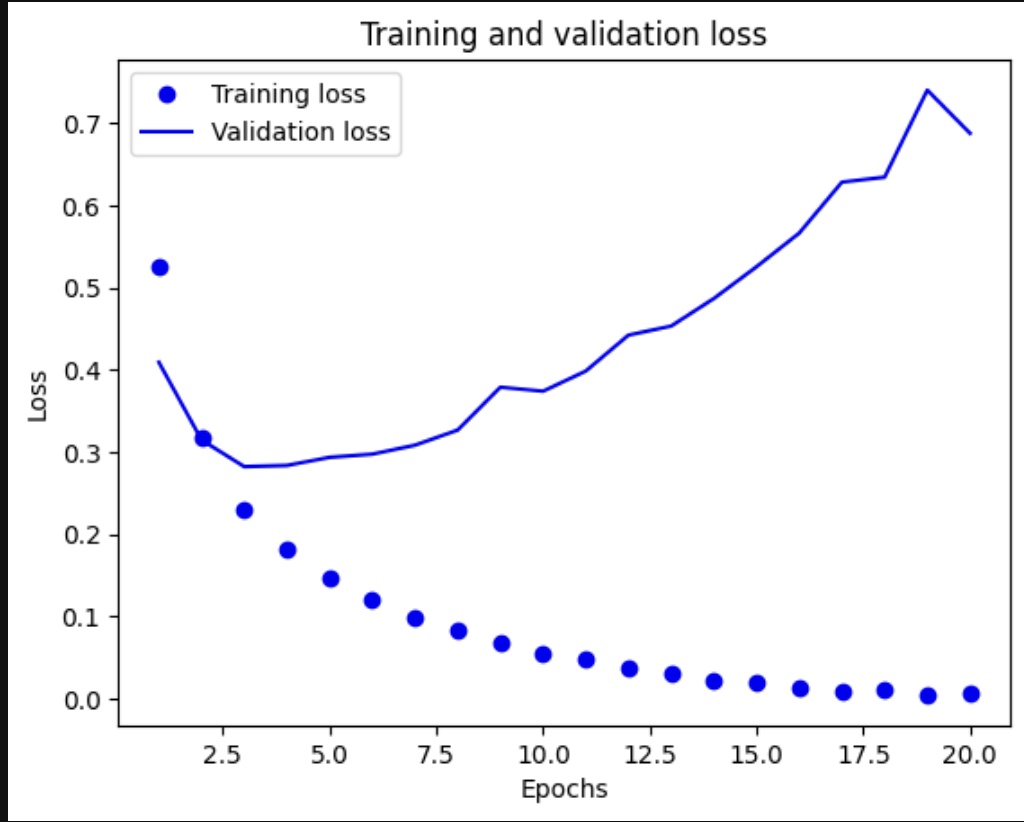

Now, it's time to evaluate against the validation set.

We test the model and observe the validation loss.

If the training loss is low but the validation loss is high, it signals overfitting.

The model is memorizing the training data instead of learning patterns that generalize. Like a child who's only memorized a route but can't adapt to new paths.

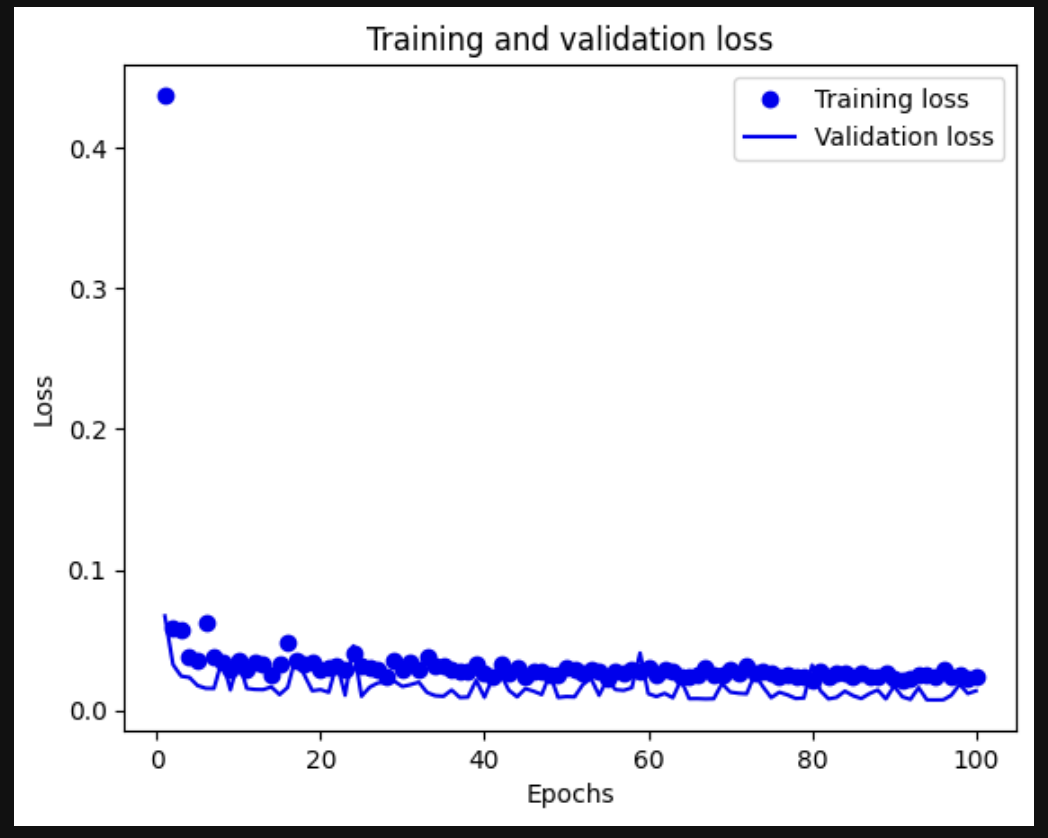
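As a rough sketch, the rules of thumb so far (high training loss suggests underfitting; low training loss with a much higher validation loss suggests overfitting) can be written as a small helper. The function name `diagnose` and the thresholds `high_loss` and `gap_ratio` are assumptions made up for this example; sensible values depend on your loss function and data.

```python
def diagnose(train_loss, val_loss, high_loss=0.5, gap_ratio=1.5):
    """Return a rough label for a (training loss, validation loss) pair.

    high_loss and gap_ratio are hypothetical thresholds chosen for
    illustration, not standard values.
    """
    if train_loss > high_loss:
        # The model struggles even on data it has already seen.
        return "underfitting"
    if val_loss > gap_ratio * train_loss:
        # Good on seen data, much worse on unseen data.
        return "overfitting"
    return "reasonable fit"

print(diagnose(0.90, 0.95))
print(diagnose(0.05, 0.60))
print(diagnose(0.20, 0.24))
```

In practice you would look at the whole curve rather than a single pair of numbers, but the same two comparisons drive the reading.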

Implementing Regularization: Finding Balance

To combat overfitting, we introduce regularization.

It's like teaching the child to adjust to different terrains and obstacles.

The result?

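The validation loss goes down, and the gap between training and validation loss narrows. The training loss may even rise a little, and that's fine.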

This balance indicates a well-trained model, capable of generalizing from what it has learned.
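The article doesn't name a specific regularization technique, so the sketch below assumes one common choice: an L2 penalty (ridge regression) on a small polynomial model, using NumPy and made-up data. The helper names `features`, `fit_ridge`, and `mse`, and the penalty strength `lam`, are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def features(x, degree=9):
    """Polynomial features 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

def fit_ridge(X, y, lam):
    """Closed-form ridge regression: solve (X^T X + lam * I) w = X^T y.

    For simplicity the penalty also applies to the intercept term.
    """
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

# Hypothetical toy data: few training points, so a degree-9 model can overfit.
x_train = np.linspace(-1.0, 1.0, 15)
x_val = np.linspace(-0.95, 0.95, 40)
y_train = np.sin(3.0 * x_train) + rng.normal(0.0, 0.2, size=x_train.shape)
y_val = np.sin(3.0 * x_val) + rng.normal(0.0, 0.2, size=x_val.shape)

X_train, X_val = features(x_train), features(x_val)
results = {}
for lam in (0.0, 0.1):
    w = fit_ridge(X_train, y_train, lam)
    results[lam] = {
        "train": mse(X_train, y_train, w),
        "val": mse(X_val, y_val, w),
        "weight_norm": float(np.linalg.norm(w)),
    }
    print(lam, results[lam])
```

The penalty shrinks the weights, which keeps the model from bending to fit every noisy point. The training loss cannot get better under the penalty; whether the validation loss improves depends on the data and on `lam`, so it's worth trying a few values.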

The Concept of Early Stopping

There's a critical moment in training: both training and validation losses have been decreasing, but then the validation loss stops improving and starts to climb while the training loss keeps falling.

It's like the child who starts to ride too fast, becoming overconfident.

This is where you need to stop the training. It's the concept of early stopping, crucial for preventing overfitting.
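One common way to implement early stopping is a "patience" rule on the validation loss: stop once it hasn't improved for a few epochs in a row, and keep the model from the best epoch. Below is a minimal sketch in plain Python. The function name `early_stopping_epoch`, the `patience` and `min_delta` parameters, and the loss values in `history` are all made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best validation loss: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Ran out of epochs without triggering the patience rule.
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses, one per epoch (epochs counted from 0).
history = [0.90, 0.70, 0.55, 0.48, 0.45, 0.46, 0.47, 0.50, 0.55]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
print(f"stop at epoch {stop_epoch}, keep the model from epoch {best_epoch}")
```

With these numbers the validation loss bottoms out at epoch 4, and the rule stops training at epoch 7 after three epochs without improvement. In a real training loop you would also save the model's weights at the best epoch so you can restore them afterward.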

Learning Curves: The Mirror of a Model's Soul

Learning curves are not just graphs; they are stories.

They tell us about the struggles and triumphs of a neural network.

From underfitting to overfitting and finally to a balanced model, these curves provide a comprehensive view of the model's learning journey.

Conclusion

In conclusion, interpreting learning curves in ML is like understanding the growth of a learner.

It requires patience, insight, and a keen eye for the subtleties of progress and setbacks.

As we've seen, the journey from a naive model to a wise, well-adjusted one is fascinating and full of learning opportunities for both the model and the machine learning practitioner.

Remember, learning curves are more than just lines on a graph. They are reflections of a model's learning process, each twist and turn indicating a new phase in its development.

If you like this article, share it with others ♻️

It would help a lot ❤️

And feel free to follow me for more articles like this.
