Day-01 of Docker: Introduction to Containers

Dilip Khunti

Introduction

We'll start with containers and introduce Docker, then cover Buildah, a tool for building container images. It's gaining popularity alongside related tools like Skopeo and Podman.

Before we proceed, make sure you've grasped virtual machine concepts, as containers are an advancement over virtual machines, and virtual machines are an advancement over physical servers.

Evolution of server utilization and virtual machines.

Imagine you have a physical server – it could be from IBM, HP, or even your laptop. The issue is, most of the time, these servers don't utilize their resources fully. 😕 Even your laptop's resources often go underutilized.

Now, this becomes a significant challenge when you scale up to an organizational level with thousands of servers. 🌐 Paying for all these resources can be quite hefty.

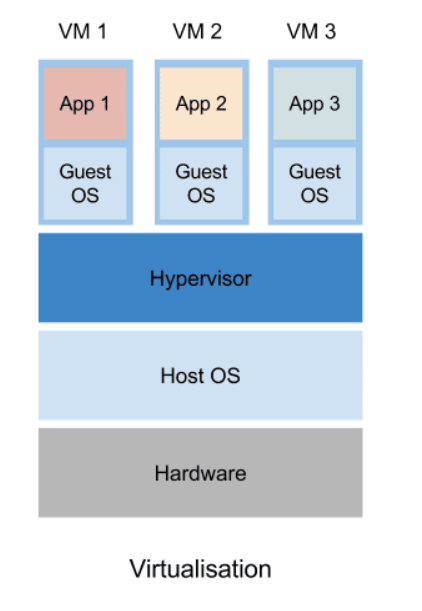

To tackle this problem, an ingenious solution emerged – virtualization! 🚀 This brought about the concept of a hypervisor, leveraging virtualization to create multiple virtual machines (VMs) on top of a physical server.

Virtualization allows logical separation, creating VMs with their individual operating systems. 🖥️ Each VM can run its own set of applications. Despite sharing the same physical server, the applications are securely isolated due to their independent operating systems.

This concept optimizes resource usage effectively, allowing multiple applications to run on a single physical server. 🔄 The result? Enhanced security and efficient resource utilization for organizations.

If virtualization works so well, why should we move towards containers?

Evolution of containerization

Let's dive into the "why" behind the move towards containerization. Imagine you have a physical server (it could be from IBM, HP, or even your laptop), and on top of it you've installed a hypervisor. The hypervisor uses virtualization to create multiple virtual machines (VMs) on your physical server. 🌐💻

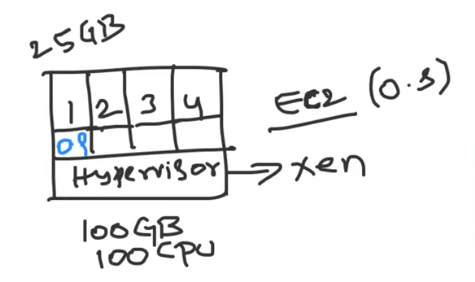

Now, let's say you've got a physical server with 100 GB RAM and 100 CPUs. To optimize usage, you use a hypervisor to create four virtual machines (vm1, vm2, vm3, vm4), each with its own operating system, 25 GB RAM, and 25 CPUs. 🔄

While this virtualization approach works well, organizations started realizing a significant drawback. Even after moving from physical servers to virtual machines, they observed that the VMs weren't utilizing resources to their fullest capacity. For instance, an application on a VM might only use 10 GB RAM and 6 CPUs on its busiest day, leaving a significant portion of resources untapped. 🤔💡
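To put numbers on that gap, here is a small sketch that works through the arithmetic for one VM, using only the figures from the example above (25 GB RAM and 25 CPUs allocated, 10 GB RAM and 6 CPUs used at peak). The variable names are just illustrative.

```shell
# Figures from the example: what one VM is given vs. what the app uses at peak.
vm_ram_gb=25
vm_cpus=25
peak_ram_gb=10
peak_cpus=6

# Share of the allocation actually used on the busiest day (integer percent).
ram_used_pct=$(( peak_ram_gb * 100 / vm_ram_gb ))
cpu_used_pct=$(( peak_cpus * 100 / vm_cpus ))

# Resources that sit idle even at peak.
idle_ram_gb=$(( vm_ram_gb - peak_ram_gb ))
idle_cpus=$(( vm_cpus - peak_cpus ))

echo "RAM used at peak: ${ram_used_pct}% (${idle_ram_gb} GB idle)"
echo "CPU used at peak: ${cpu_used_pct}% (${idle_cpus} CPUs idle)"
```

In this example the VM uses 40% of its RAM and 24% of its CPUs on its busiest day, so more than half of what was paid for is never touched.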

This underutilization led to wasted resources and higher costs, especially for organizations managing a large number (millions) of VMs.

Containers emerged to make better use of virtual machines. However, it's essential to note that containers still have some drawbacks. Virtual machines remain more secure because each one runs its own complete operating system, which provides complete isolation. In contrast, containers offer logical isolation with certain limitations: they share the host operating system's kernel, so containers and the host can interact with each other.

In essence, virtual machines addressed issues with physical servers, and containers resolved some challenges associated with virtual machines. 🔄🐳💻

Architecture of containers

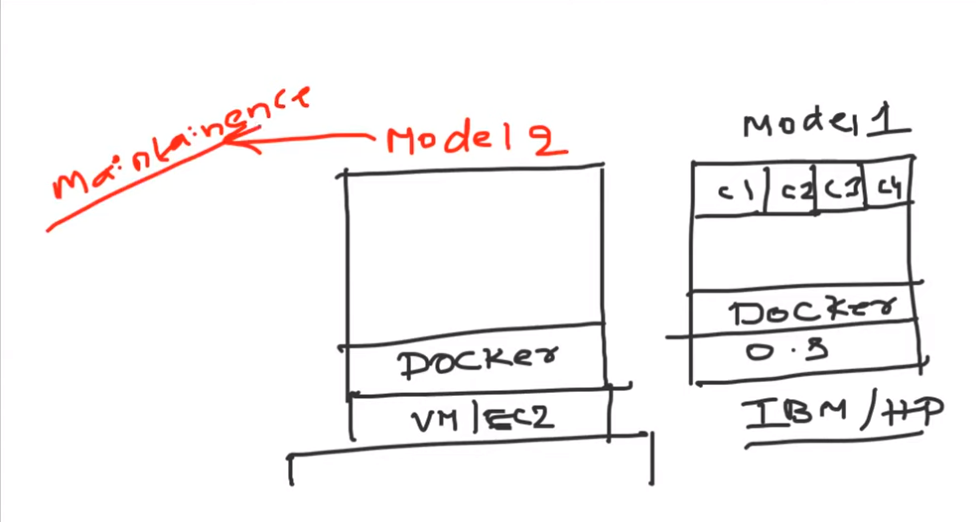

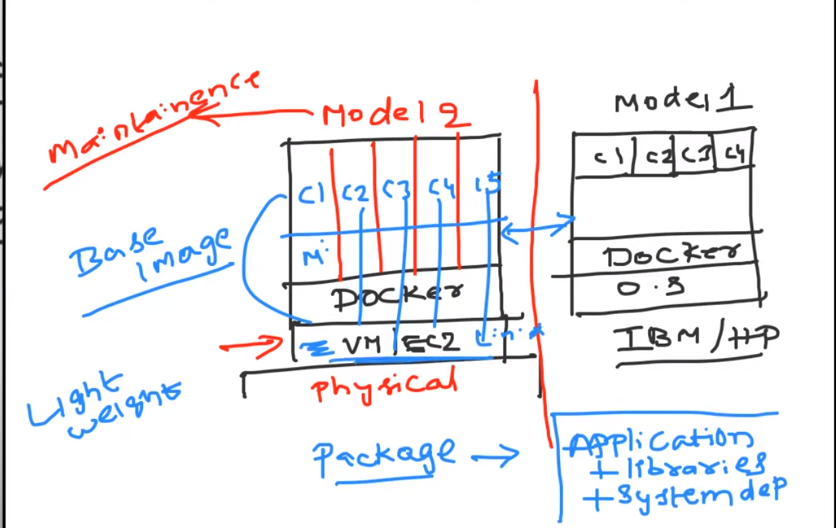

Containers can be created in two ways. In Model 1, a DevOps engineer uses physical servers in the organization's data center, installing an operating system and then Docker or another containerization platform. Model 2 involves creating a virtual machine on top of a physical server or a cloud provider's infrastructure, then implementing Docker or a similar platform to generate containers. Model 2 is becoming more popular due to reduced maintenance overhead, especially with the rise of cloud providers.

Containers are lightweight because they don't have a complete operating system. Unlike virtual machines, containers share resources with the base operating system or virtual machine they run on. They contain a minimal operating system or a base image, bundling the application, application libraries, and system dependencies. This design makes Docker containers highly efficient and lightweight.🚀💡🐳
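One way to see this sharing for yourself is to compare the kernel version reported by the host with the one reported inside a container. The sketch below assumes a Linux host with Docker installed and uses the public `alpine` image; the function name `compare_kernels` is made up for this example. On Docker Desktop (macOS or Windows), containers run inside a helper VM, so the two values will likely differ.

```shell
# Compare the kernel release seen by the host and by a container.
compare_kernels() {
  host_kernel=$(uname -r)
  # Start a throwaway Alpine container and ask it for its kernel release.
  container_kernel=$(docker run --rm alpine uname -r) || return 1

  echo "host:      $host_kernel"
  echo "container: $container_kernel"

  if [ "$host_kernel" = "$container_kernel" ]; then
    echo "Same kernel: the container has no kernel of its own."
  else
    echo "Different kernels: Docker is probably running inside a VM here."
  fi
}
```

Call it with `compare_kernels` once Docker is running.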

Advantages of containers

Example 1)

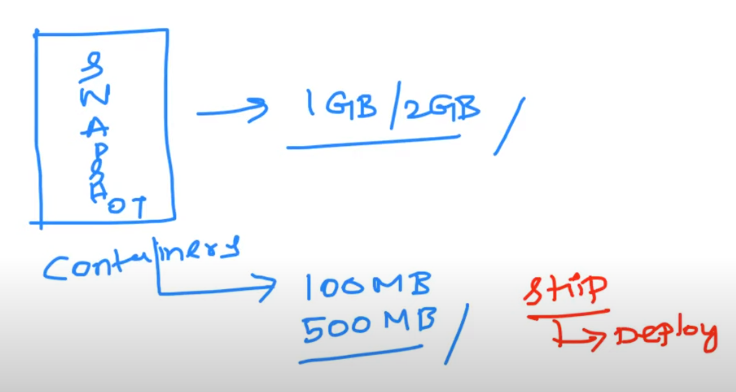

For instance, with a virtual machine, you'd create a snapshot to back it up or create an image. Even in a basic example, these snapshots typically run to 1 GB, 2 GB, or 3 GB. In contrast, container image sizes vary but tend to be much smaller, potentially as low as 100 MB, depending on dependencies and usage. This significant reduction in image size makes containers lightweight and easy to transport or "ship" from one environment to another. 🚢💡🐳

Example 2)

Another advantage of Docker containers is that they are incredibly lightweight. Why? Because they don't carry a complete operating system. Containers use resources from the base operating system they run on, which makes them efficient and easy to transport. 🐳💡

Consider a scenario: You have a virtual machine, and on top of it, you want to run various containers—each with different dependencies like Python, Node.js, or Java. In Docker, you create images using a base image containing all necessary system dependencies along with your application and libraries. This forms the lightweight Docker container.

Why Docker is popular in containerization

Let's look at why Docker has become immensely popular. Docker, as a containerization platform, streamlined how the community creates container images. It significantly improved the user experience by introducing intuitive commands, making containerization accessible. 🐳✨

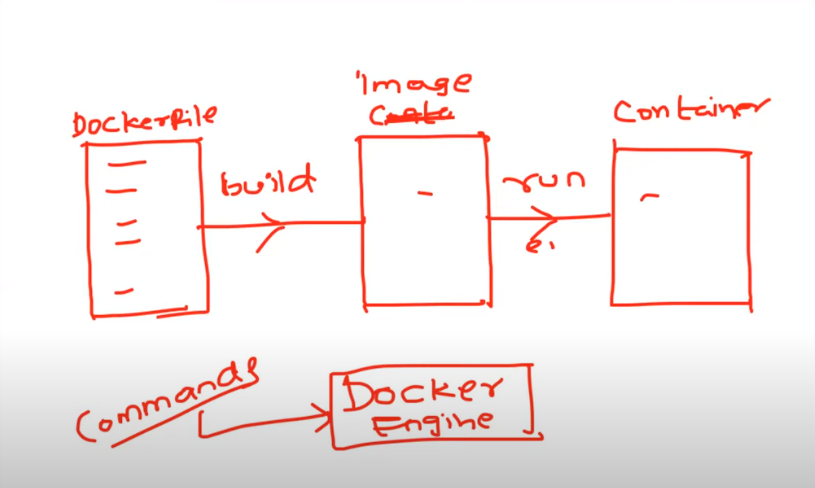

To utilize Docker, you simply need to write a Dockerfile, akin to scripting files like Jenkinsfiles. Submit this Dockerfile to the Docker engine using specific commands, and Docker will seamlessly convert it into a container image. This image can then be further transformed into a container using additional Docker commands. The lifecycle of Docker involves writing a Dockerfile, building an image, and finally running a container. 🔄💡
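As a rough illustration of the first step, the sketch below creates a tiny project with a Dockerfile in it. Everything specific here is an assumption made for the example: the `hello-app` directory, the one-line `app.py`, and the choice of `python:3.12-slim` as the base image.

```shell
# Create a small project directory (the name hello-app is hypothetical).
mkdir -p hello-app

# A one-line application to package.
cat > hello-app/app.py <<'EOF'
print("Hello from inside a container")
EOF

# The Dockerfile: base image, application code, and start command.
cat > hello-app/Dockerfile <<'EOF'
# Base image that supplies the system dependencies and the Python runtime
FROM python:3.12-slim
# Working directory inside the image
WORKDIR /app
# Copy the application into the image
COPY app.py .
# Command the container runs when it starts
CMD ["python", "app.py"]
EOF
```

Each instruction in the Dockerfile maps to one piece of the description above: the base image, the application, and the command that starts it.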

In the Docker platform, Docker engine handles these transitions, allowing you to interact with Docker commands such as "docker build" to convert Dockerfile to Docker image and "docker run" to create a container from the image. This straightforward process has contributed significantly to Docker's popularity. 🚀📦
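The two commands can be wrapped in a small helper to make the Dockerfile → image → container flow explicit. This is only a sketch: `build_and_run` is a made-up function name, and it assumes you already have a directory containing a Dockerfile.

```shell
# Build an image from the Dockerfile in a directory, then start a container from it.
build_and_run() {
  context_dir="$1"   # directory containing the Dockerfile
  image_tag="$2"     # name:tag to give the resulting image

  # Dockerfile -> image
  docker build -t "$image_tag" "$context_dir" || return 1

  # image -> container (--rm removes the container once it exits)
  docker run --rm "$image_tag"
}
```

For example, `build_and_run ./hello-app hello-app:v1` would build an image tagged `hello-app:v1` from the `./hello-app` directory and then run it (both names are placeholders).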

Problem with Docker and how Buildah solves it

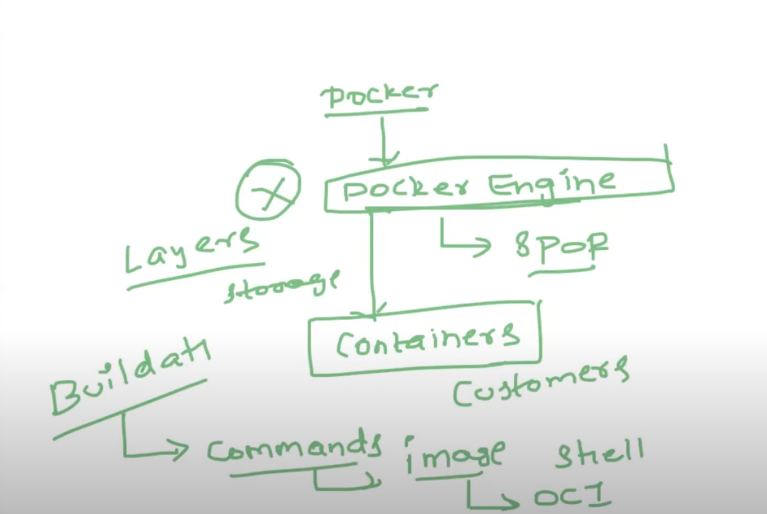

Let's break it down! Docker, although widely used, has a single point of failure: the Docker engine, a daemon that every container depends on. If it goes down, containers can stop functioning, which affects users and customers. To address this, the open-source community introduced Buildah (the project was started at Red Hat, not by Docker), a daemonless tool that tackles issues like image layers and the single point of failure, and that works well with tools like Skopeo and Podman.

Buildah simplifies the process by letting you use its commands in ordinary shell scripts, so a Dockerfile is not required (though Buildah can also build from one). It works with Docker images and other OCI-compliant images. Learning Buildah is worthwhile because it addresses these Docker limitations.
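To give a feel for the shell-script style, here is a minimal sketch of an image build done with Buildah commands instead of a Dockerfile. The function name `build_with_buildah`, the `app.py` file, and the `python:3.12-slim` base image are assumptions chosen for illustration, not something taken from the video.

```shell
# Build an image step by step with Buildah commands.
build_with_buildah() {
  app_file="$1"    # application file to package
  image_tag="$2"   # name:tag for the finished image

  # Start a working container from a base image; Buildah prints its name.
  ctr=$(buildah from docker.io/library/python:3.12-slim) || return 1

  # Copy the application into the working container.
  buildah copy "$ctr" "$app_file" /app/app.py || return 1

  # Set the working directory and the default command.
  buildah config --workingdir /app --cmd "python app.py" "$ctr" || return 1

  # Save the working container as an image, then clean up.
  buildah commit "$ctr" "$image_tag" || return 1
  buildah rm "$ctr"
}
```

A call such as `build_with_buildah app.py hello-app:v1` would produce an OCI image without any daemon running in the background.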

Video Reference: https://www.youtube.com/watch?v=7JZP345yVjw&list=PLdpzxOOAlwvIKMhk8WhzN1pYoJ1YU8Csa&index=26

Hope you found this helpful. Do connect/ follow for more such content.

~Dilip Khunti

Written by

Dilip Khunti

Hello World! I am Dilip, a DevOps Engineer skilled in Kubernetes, Jenkins, Terraform, AWS, and Azure. With a background in web development, I am currently pursuing a B.Tech in Information Technology. In the past year, I've actively explored and developed my skills in cloud and DevOps. I've worked on tasks such as automating infrastructure, working with containers, navigating cloud platforms (AWS and Azure), and setting up continuous integration and deployment (CI/CD), gaining hands-on experience along the way.