Dhaka Stock Market Data Analysis With Python

MOHAMMAD ULLAH

MOHAMMAD ULLAHTable of contents

- Introduction

- Dataset Overview

- Let's proceed to the analysis.

- Import the necessary libraries

- Loading the dataset with pandas

- Check the data types

- Calculate basic summary statistics for each column (mean, median, standard deviation, etc.)

- Get the top 5 companies with the highest total volume:

- Explore the distribution of the 'Close' prices over time

- Identify and analyze any outliers (if any) in the dataset of volume column

- Create a line chart to visualize the 'Close' prices over time

- Calculate and plot the daily percentage change in closing prices

- Investigate the presence of any trends or seasonality in the stock prices

- Apply moving averages to smooth the time series data in 15/30 day intervals against the original graph

- Calculate the average closing price for each stock

- Identify the top 5 and bottom 5 stocks based on average closing price.

- Calculate and plot the rolling standard deviation of the 'Close' prices

- Create a new column for daily price change

- Analyze the distribution of daily price changes

- Identify days with the largest price increases and decreases

- Identify stocks with unusually high trading volume on certain days

- Explore the relationship between trading volume and volatility

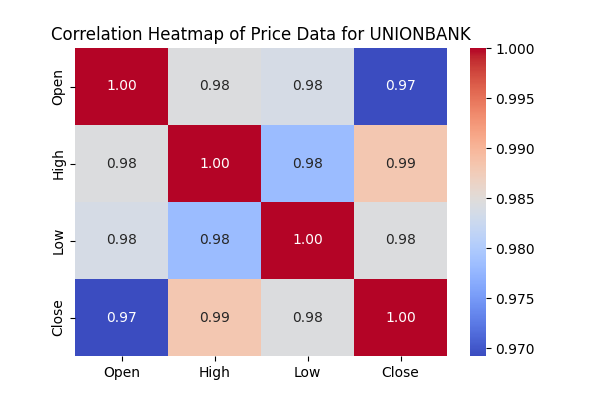

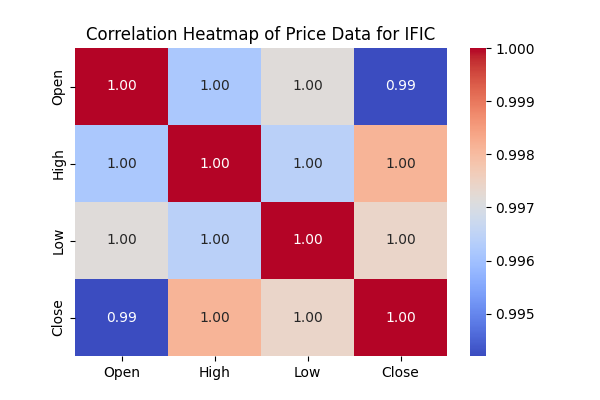

- Correlation matrix between the 'Open' & 'High', 'Low' &'Close' prices

- Create heatmap to visualize the correlations using the seaborn package.

- Acknowledgments

Introduction

Welcome to a journey through the Dhaka stock market of 2022!

This blog is your gateway to decoding market trends, deciphering data patterns, and discovering the specifics of Bangladesh's financial landscape. Through Python-powered analysis and visualizations, we'll unveil the underlying narratives behind stock movements. Join me on this data-driven journey as we unravel the Dhaka stock market's story, offering valuable insights for traders, investors, and enthusiasts seeking a deeper understanding of market dynamics.

Dataset Overview

Within this analysis, we harnessed a CSV file containing a substantial dataset for exploration. The dataset comprises 49,159 rows and 7 columns, encapsulating a wealth of information related to the Dhaka stock market. It spans the time period from January 2022 to June 2022, encompassing a diverse array of 412 companies. Key features in this dataset include columns such as Date, Name, Open, High, Low, Close, Volume. This dataset offers a comprehensive perspective on the dynamics of the Dhaka stock market, allowing for in-depth analysis and insights into this sector.

Let's proceed to the analysis.

Import the necessary libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

These libraries are essential components for our analysis.

NumPy: Utilized for numerical operations and array manipulations.

Pandas: Essential for data manipulation and analysis, offering powerful data structures and tools.

Matplotlib.pyplot: Enables the creation of visualizations, including plots, charts, and graphs.

Seaborn: A robust library for statistical data visualization, enhancing the aesthetics and overall appeal of visual representations.

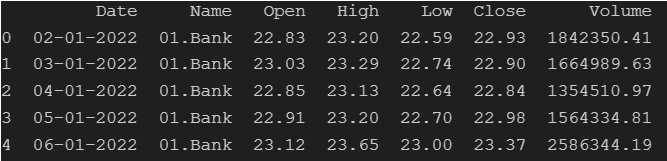

Loading the dataset with pandas

In this section, the stock market data is imported using the read_csv() function from the pandas library within a .py file in VS Code. Following this, the head() function is employed to showcase the first 5 rows of the dataset, providing an initial overview of the structure and contents of the Dhaka stock market data.

df = pd.read_csv('Stock_Market_Data.csv')

print(df.head())

Output:

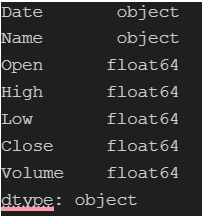

Check the data types

Verify the data types of all columns using the following command. Ensuring correct data formatting is crucial before analysis. Analyzing data in the wrong format can lead to inaccurate insights or results.

print(df.dtypes)

Output:

We notice an issue with the data type of the Date column—it appears as an object type when it should be in a DateTime format. Therefore, we need to convert the object type to datetime. Let’s proceed with that conversion.

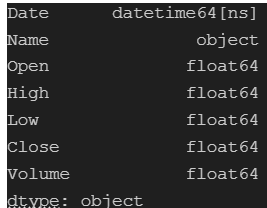

# Convert 'Date' column to datetime format

df['Date'] = pd.to_datetime(df['Date'],dayfirst=True)

Utilizing pd.to_datetime(), we perform the conversion. Additionally, we set dayfirst=True since the date format is %d%m%y. Let's recheck the data types to confirm the successful conversion

print(df.dtypes)

Output:

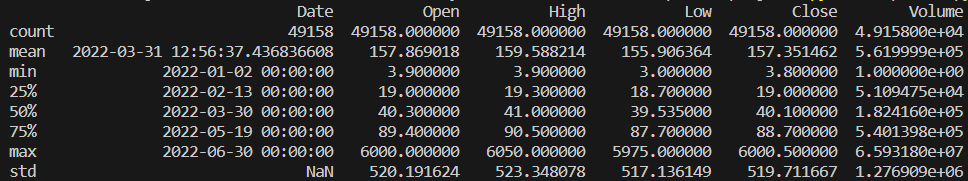

Calculate basic summary statistics for each column (mean, median, standard deviation, etc.)

The code generates essential statistical measures for the

dfDataFrame using thedescribe()function. This function delivers crucial metrics including count, mean, standard deviation, as well as minimum and maximum values for each numerical column in the dataset. These statistics offer a comprehensive overview of the data's central tendencies and variability.

print(df.describe())

Output:

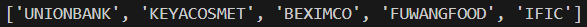

Get the top 5 companies with the highest total volume:

Handpicking key companies from the dataset allows for a deeper analysis of their market impact. The code snippet identifies the top 5 companies by their total trading volume.

# Calculate total volume for each company

volume_per_company = df.groupby('Name')['Volume'].sum()

# Get the top 5 companies with the highest total volume

top_5_companies = volume_per_company.nlargest(5).index

print(top_5_companies.to_list())

Output:

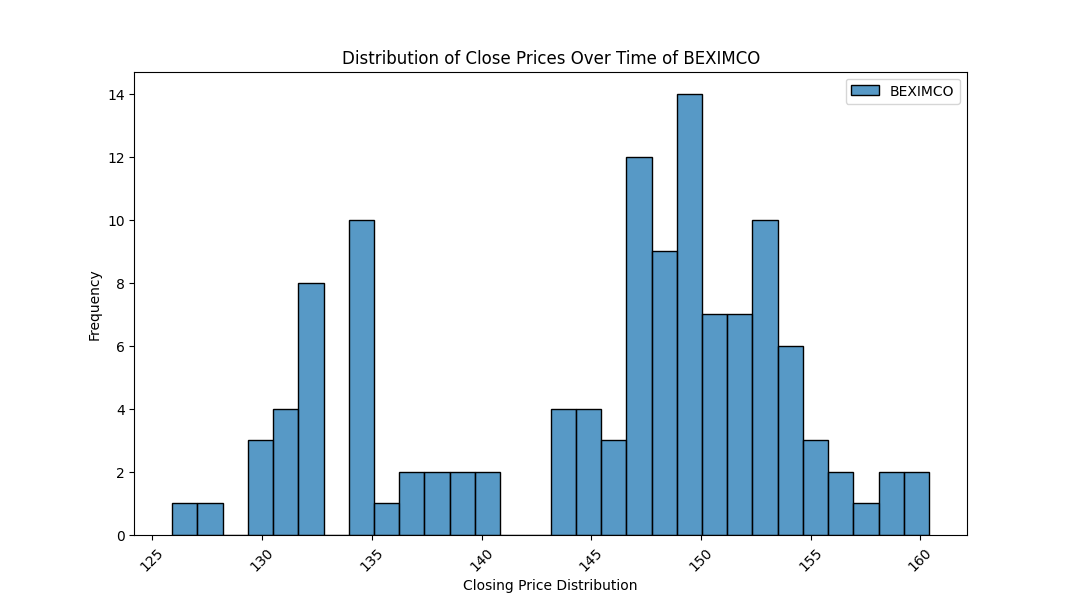

Explore the distribution of the 'Close' prices over time

Analyzing the 'Close' price distribution over time for the top 5 companies, this code generates histograms for each company. The visualizations provide insights into the variations in closing prices, facilitating a comparative assessment of their stock performance.

for name in top_5_companies:

company_data = df[df['Name'] == name]

plt.figure(figsize=(15, 5))

sns.histplot(data=company_data, x="Close", bins=30, label=name)

plt.xlabel("Closing Price Distribution")

plt.ylabel("Frequency")

plt.title("Distribution of Close Prices Over Time of {}".format(name))

plt.legend()

plt.xticks(rotation=45)

plt.show()

(2 selected plots from the 5 output plots are provided for reference.)

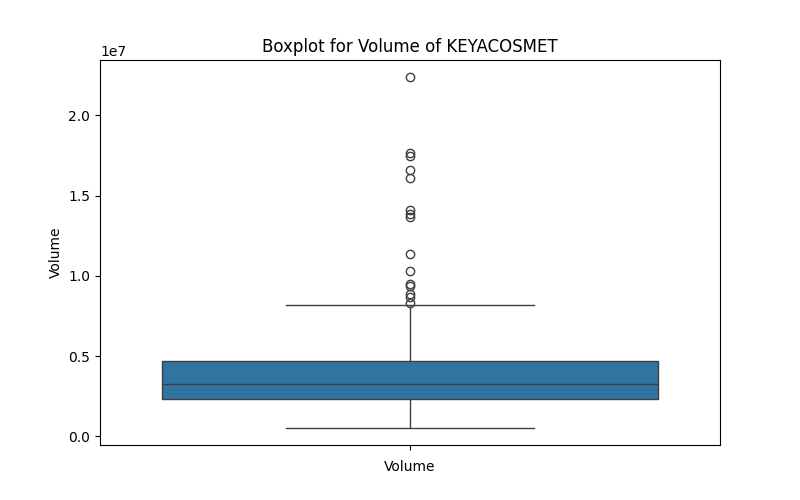

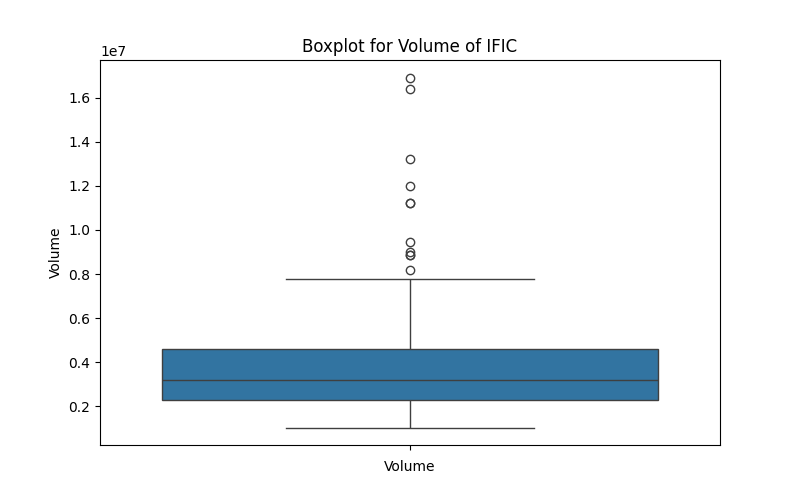

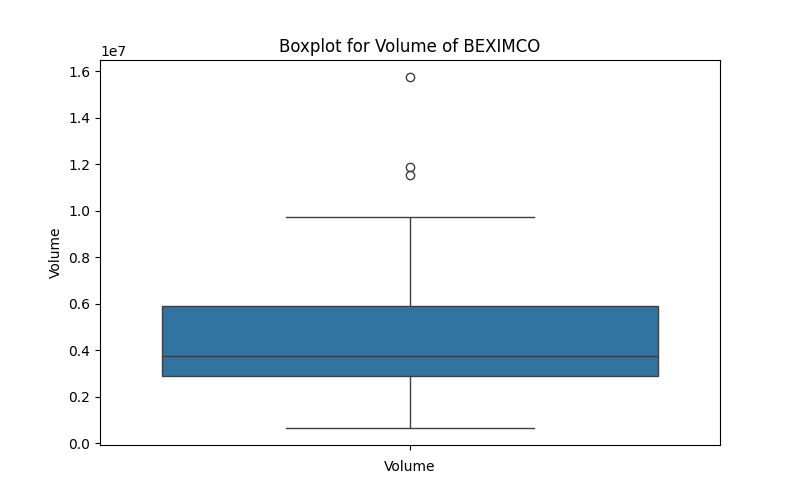

Identify and analyze any outliers (if any) in the dataset of

volumecolumn

Outliers beyond the maximum quartile in the 'Volume' boxplots indicate:

Unprecedented Extremes: Representing values extraordinarily higher than the upper quartile (Q3) and even beyond the maximum observed values.

Exceptional Market Activity: Signifying unparalleled and exceptionally rare trading volumes for these companies, often unique and unmatched in the dataset.

Possible Data Anomalies: Needing scrutiny for accuracy, as these extreme values might sometimes arise from errors or anomalies in the data.

Unmatched Market Events: Reflecting extraordinary market occurrences or exceptional trading interests that significantly deviate from typical market behavior.

Potential Market Impact: While confirming the accuracy, highlighting moments of extreme market activity that could have impacted short-term price movements or market perceptions.

(3 selected plots from the 5 output plots are provided for reference.)

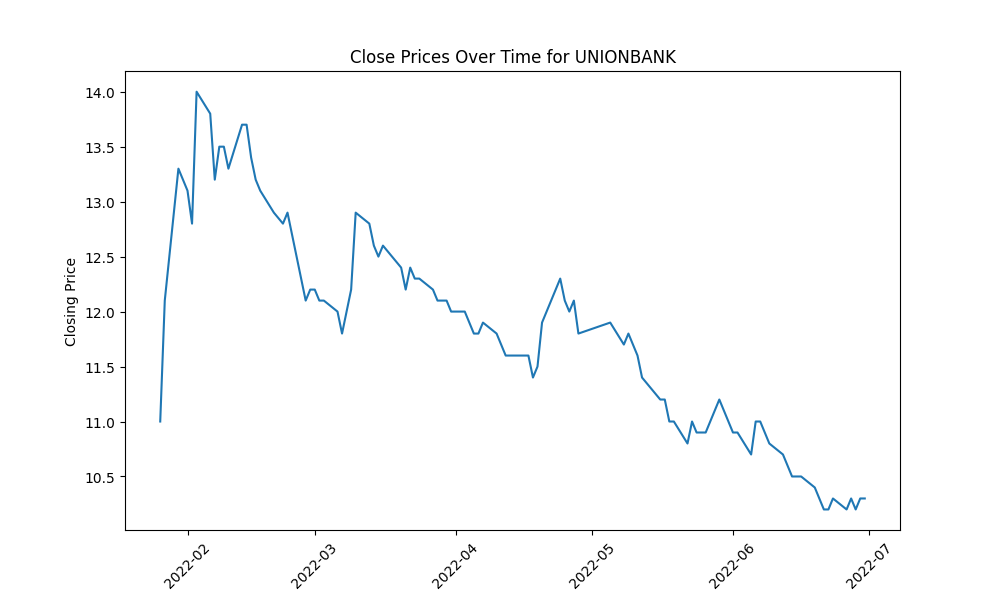

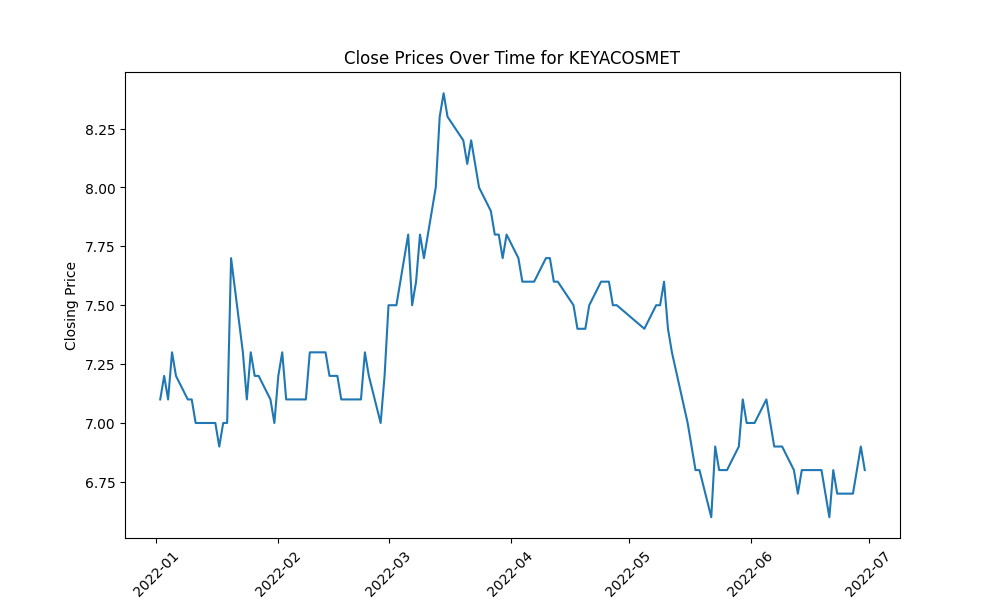

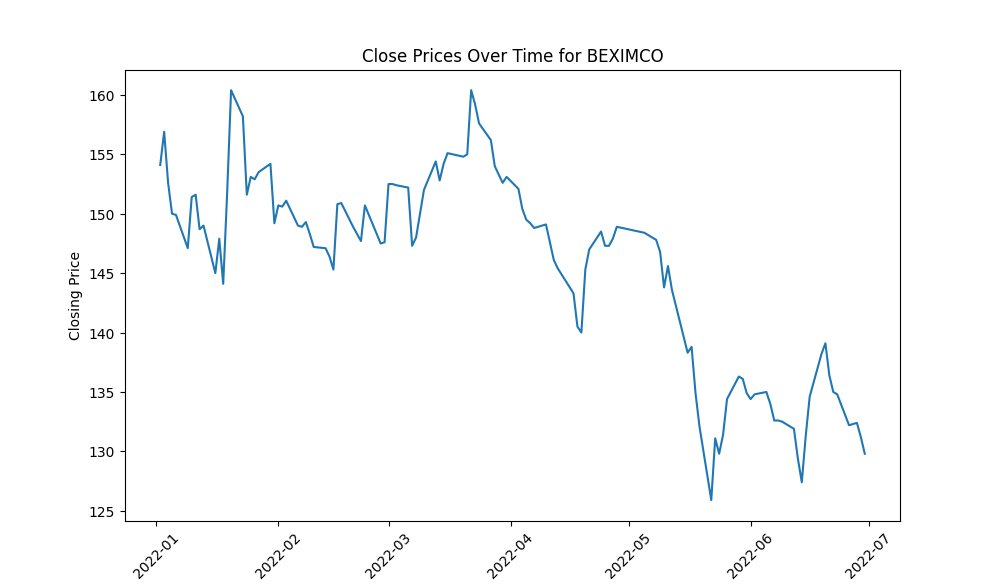

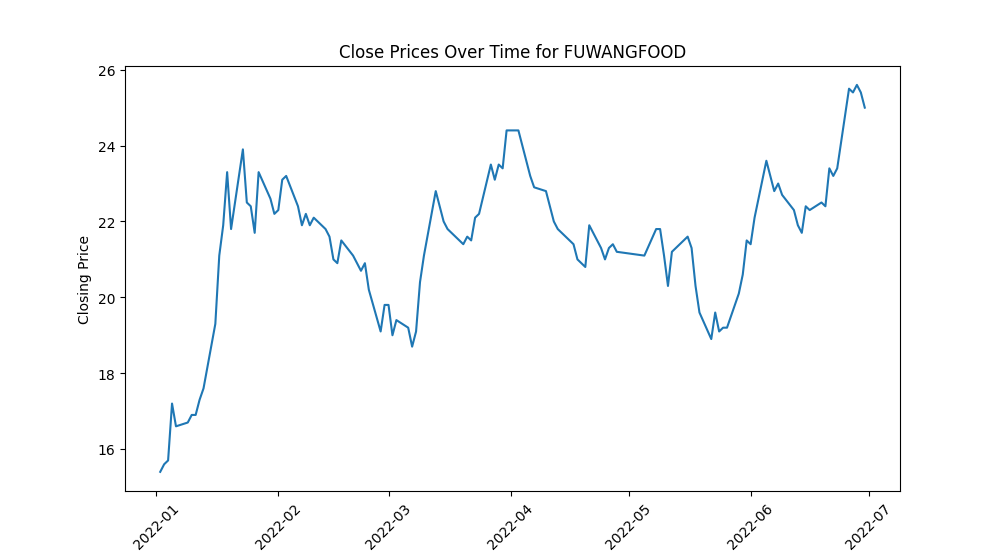

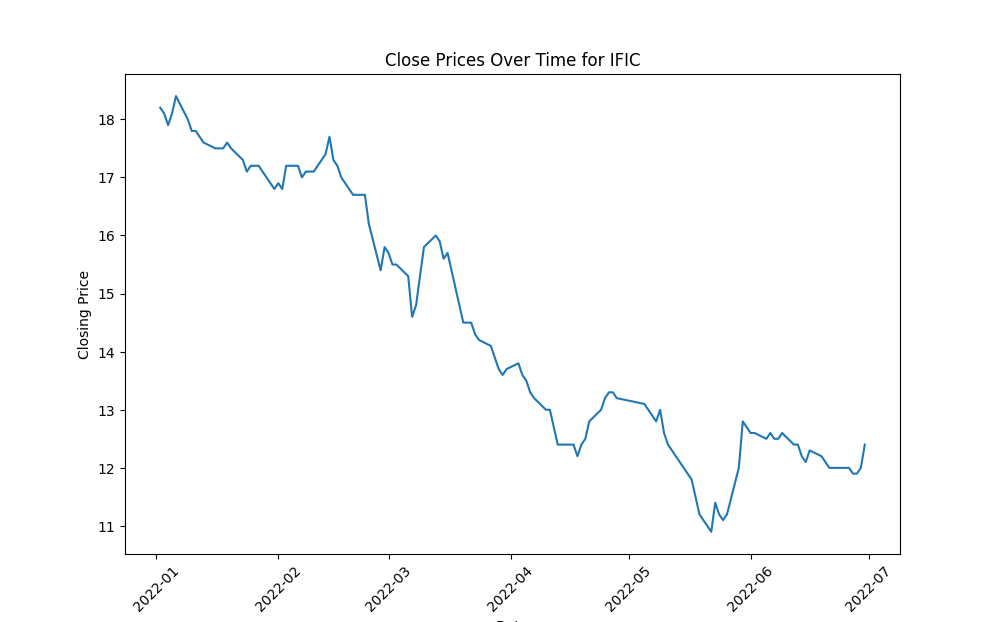

Create a line chart to visualize the 'Close' prices over time

Generate line charts depicting the 'Close' prices over time for each selected company, unraveling the historical trends and patterns in their stock performance.

# Loop through the top 5 companies based on volume

for name in top_5_companies:

# Filter data for each specific company

company_data = df[df['Name'] == name]

# Create a separate line chart for each company's 'Close' prices over time

plt.figure(figsize=(10, 4))

plt.plot(company_data['Date'], company_data['Close'])

# Set labels and title for the plot

plt.xlabel('Date')

plt.ylabel('Closing Price')

plt.title(f'Close Prices Over Time for {name}')

plt.xticks(rotation=45)

# Show the plot for each company

plt.show()

- IFIC and UNIONBANK: These companies experienced a rapid decline in their 'Close' prices over the observed period, indicating a consistent downward trend in their stock prices.

FUWANGFOOD: In contrast, FUWANGFOOD exhibited an increasing trend in its 'Close' prices from January 2022 to July 2022, suggesting a rise in stock prices over this period.

These observations can provide insights into the performance of these companies within the given timeframe. It's important to analyze the reasons behind these trends—whether they are influenced by company-specific factors (like earnings reports, market news, or company performance) or broader market conditions—to better understand the drivers behind the stock price movements.

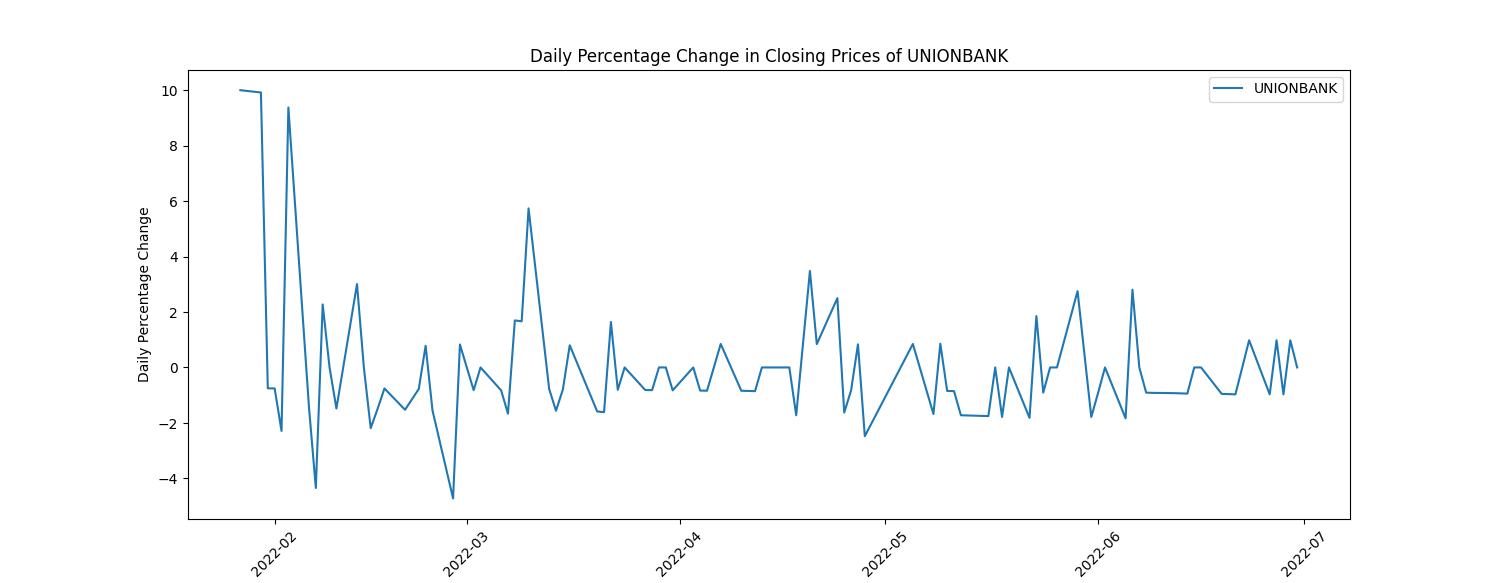

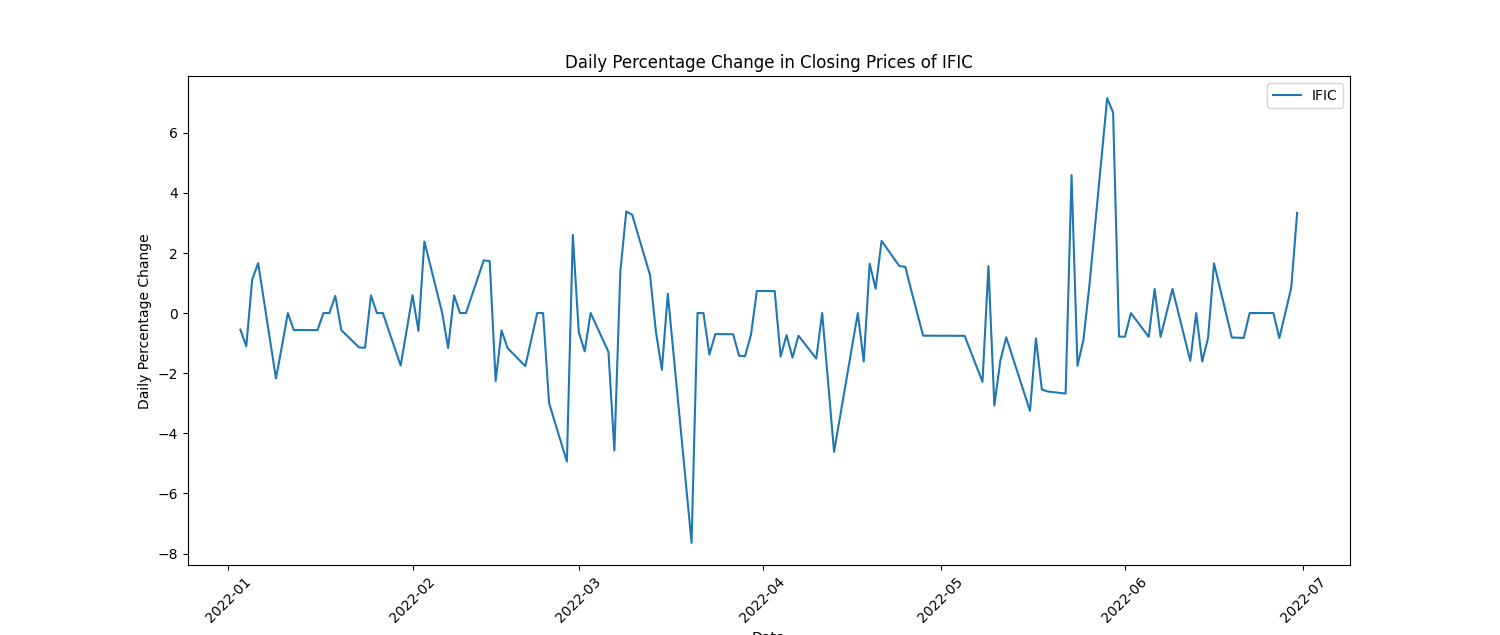

Calculate and plot the daily percentage change in closing prices

Here's a more concise breakdown of insights from daily percentage change in closing prices:

Volatility Identification: Higher fluctuations suggest more volatile stock behavior, potentially indicating greater risk.

Trend Recognition: Consistent positive or negative changes signal potential upward or downward trends in stock prices.

Event Impact: Sharp spikes or drops may align with significant company or market events, influencing stock prices.

Comparative Analysis: Comparing changes among companies highlights differing market sensitivities or strengths.

Trading Signals: Useful for short-term strategies, indicating potential buy/sell opportunities based on price movements.

Risk Evaluation: Assessing fluctuations aids in adjusting risk management strategies for investments.

plt.figure(figsize=(15, 4)) # Calculate daily percentage change for each company and plot individually for name in top_5_companies: plt.figure(figsize=(15, 4)) company_data = df[df['Name'] == name] company_data['Daily_PCT_Change'] = company_data['Close'].pct_change() * 100 # Plot the daily percentage change for each company plt.plot(company_data['Date'], company_data['Daily_PCT_Change'], label=name) # Set labels and title for the plot plt.xlabel('Date') plt.ylabel('Daily Percentage Change') plt.title(f'Daily Percentage Change in Closing Prices of {name}') plt.legend() plt.xticks(rotation=45) plt.show()

(2 selected plots from the 5 output plots are provided for reference.)

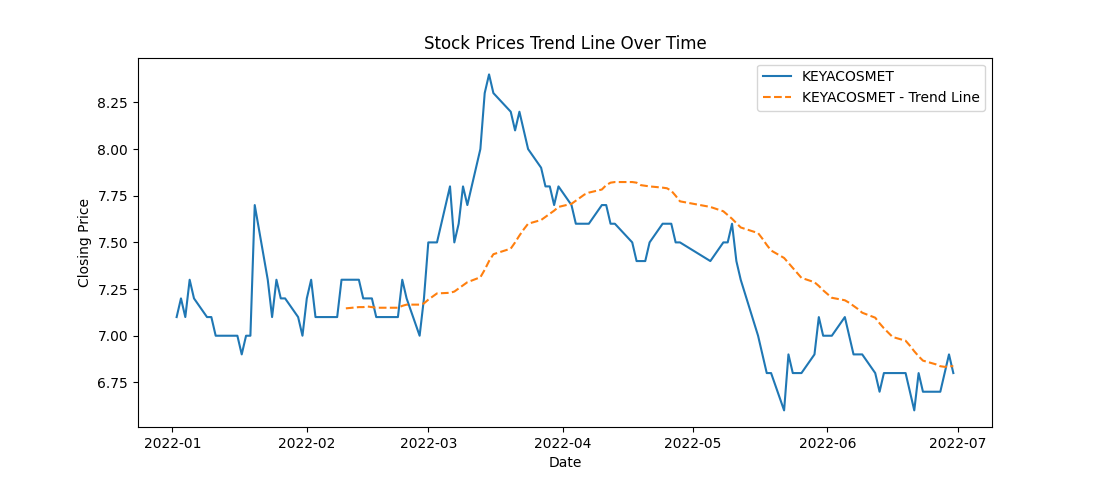

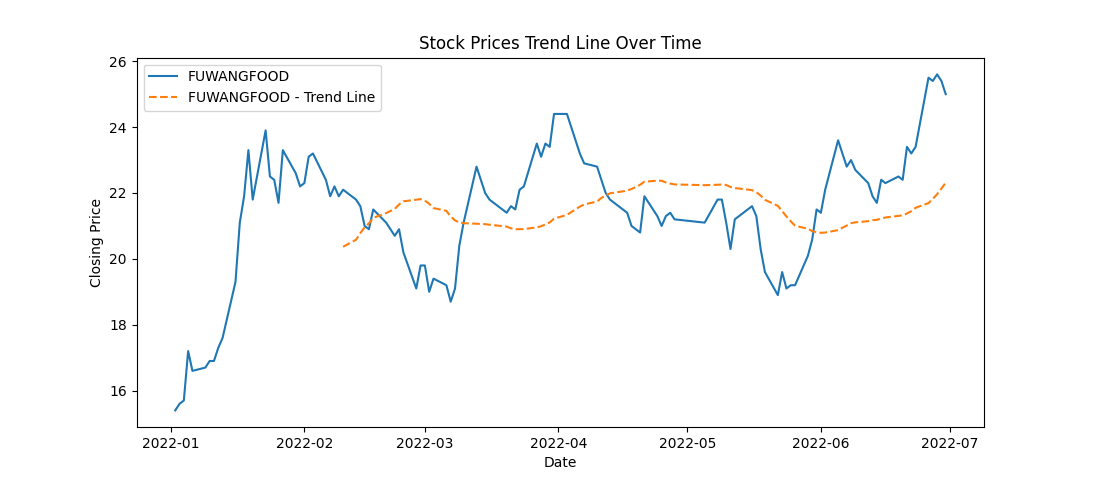

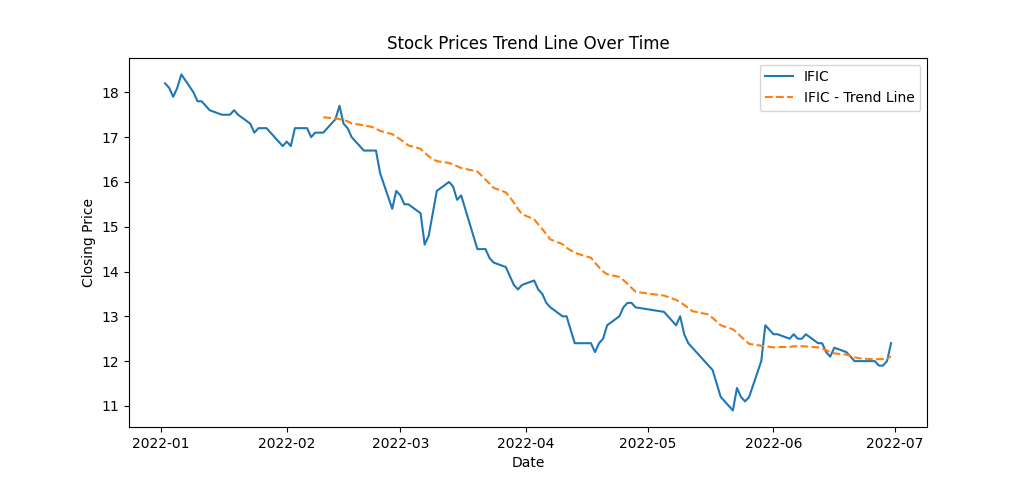

Investigate the presence of any trends or seasonality in the stock prices

This code snippet creates line charts displaying the stock prices over time for each of the top 5 companies, along with a rolling average trend line for a smoother representation of the trends. Here's what the code accomplishes:

Data Plotting: It generates individual line charts for each company's 'Close' prices over time.

Trend Line: Adds a rolling average line (e.g., 30-day) to visualize the trend in stock prices. This line represents the average closing price over a specified window (30 days in this case).

Visualization: Titles, labels, and legends are set for clarity in the visualization.

This code provides a visual representation of both the actual closing prices and a smoothed trend line, aiding in identifying the general trend in stock price movements over time for each company. Adjustments to the rolling window size or other visualization aspects can be made as needed for better analysis and presentation.

for name in top_5_companies:

company_data = df[df['Name'] == name]

plt.plot(company_data['Date'],company_data['Close'], label=name)

# Plotting a rolling average (e.g., 30 days) for trend visualizations

rolling_avg = company_data['Close'].rolling(window=30).mean()

plt.plot(company_data['Date'],rolling_avg, label=f'{name} - Trend Line', linestyle='--')

plt.title('Stock Prices Trend Line Over Time')

plt.xlabel('Date')

plt.ylabel('Closing Price')

plt.legend()

plt.show()

(3 selected plots from the 5 output plots are provided for reference.)

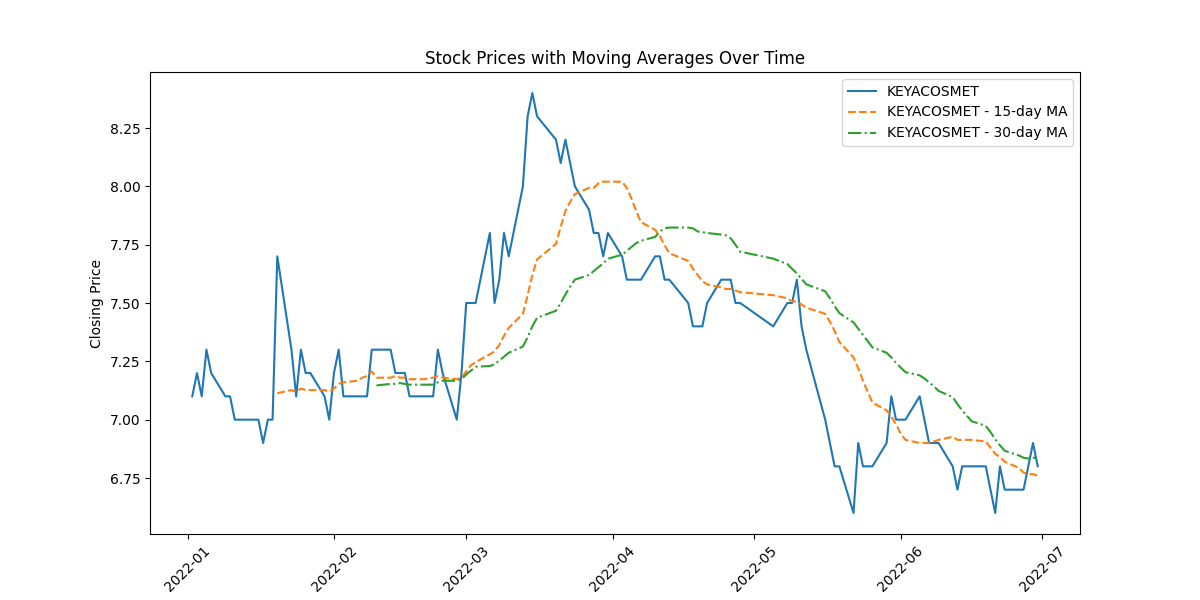

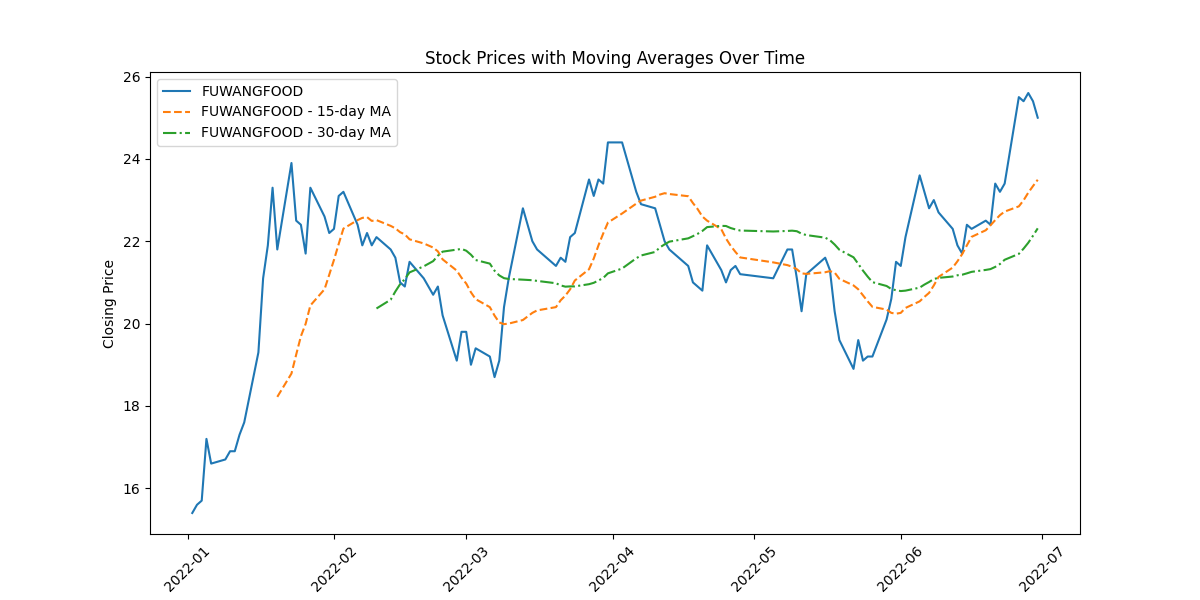

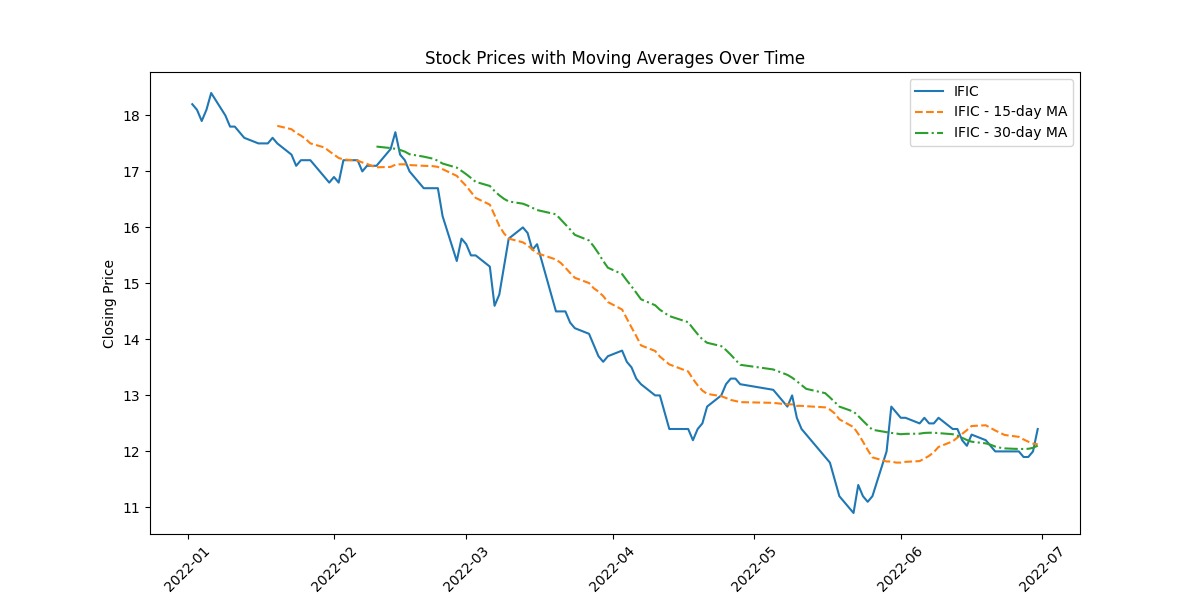

Apply moving averages to smooth the time series data in 15/30 day intervals against the original graph

The code depicting original closing prices alongside 15-day and 30-day moving averages offers these insights:

Trend Visualization: Shows long-term trends by smoothing price data, aiding in trend identification.

Signal Analysis: Crosses between moving averages indicate potential shifts in trends, guiding short-term decision-making.

Volatility Indication: The gap between prices and moving averages highlights market volatility.

Support/Resistance Levels: Interaction between prices and moving averages identifies potential support or resistance levels.

Momentum Confirmation: Consistent alignment between prices and moving averages confirms momentum in price movement.

# Loop through the top 5 companies based on volume

for name in top_5_companies:

plt.figure(figsize=(12, 6))

company_data = df[df['Name'] == name]

# Plotting original closing prices

plt.plot(company_data['Date'], company_data['Close'], label=name)

# Calculate and plot moving averages (15-day and 30-day)

moving_avg_15 = company_data['Close'].rolling(window=15).mean()

moving_avg_30 = company_data['Close'].rolling(window=30).mean()

plt.plot(company_data['Date'], moving_avg_15, label=f'{name} - 15-day MA', linestyle='--')

plt.plot(company_data['Date'], moving_avg_30, label=f'{name} - 30-day MA', linestyle='-.')

# Set labels, title, and legend

plt.title('Stock Prices with Moving Averages Over Time')

plt.xlabel('Date')

plt.ylabel('Closing Price')

plt.legend()

plt.xticks(rotation=45)

# Show the plot

plt.show()

(3 selected plots from the 5 output plots are provided for reference.)

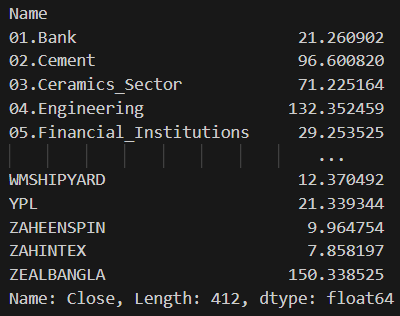

Calculate the average closing price for each stock

This code groups the DataFrame by the 'Name' column and then calculates the mean of the 'Close' prices for each group, resulting in the average closing price for each stock using the

'groupby()'function. The output will display the average closing price for all the stocks available in the dataset.

# Calculate average closing price for each stock

average_closing_price = df.groupby('Name')['Close'].mean()

# Display the average closing prices

print(average_closing_price)

Output:

Identify the top 5 and bottom 5 stocks based on average closing price.

# Calculate average closing price for each stock

average_closing_price = df.groupby('Name')['Close'].mean()

# Sort stocks based on average closing price

sorted_stocks = average_closing_price.sort_values()

# Display top and bottom stocks

print("Top 5 Stocks based on Average Closing Price:")

print(sorted_stocks.tail(5))

print("\nBottom 5 Stocks based on Average Closing Price:")

print(sorted_stocks.head(5))

Output:

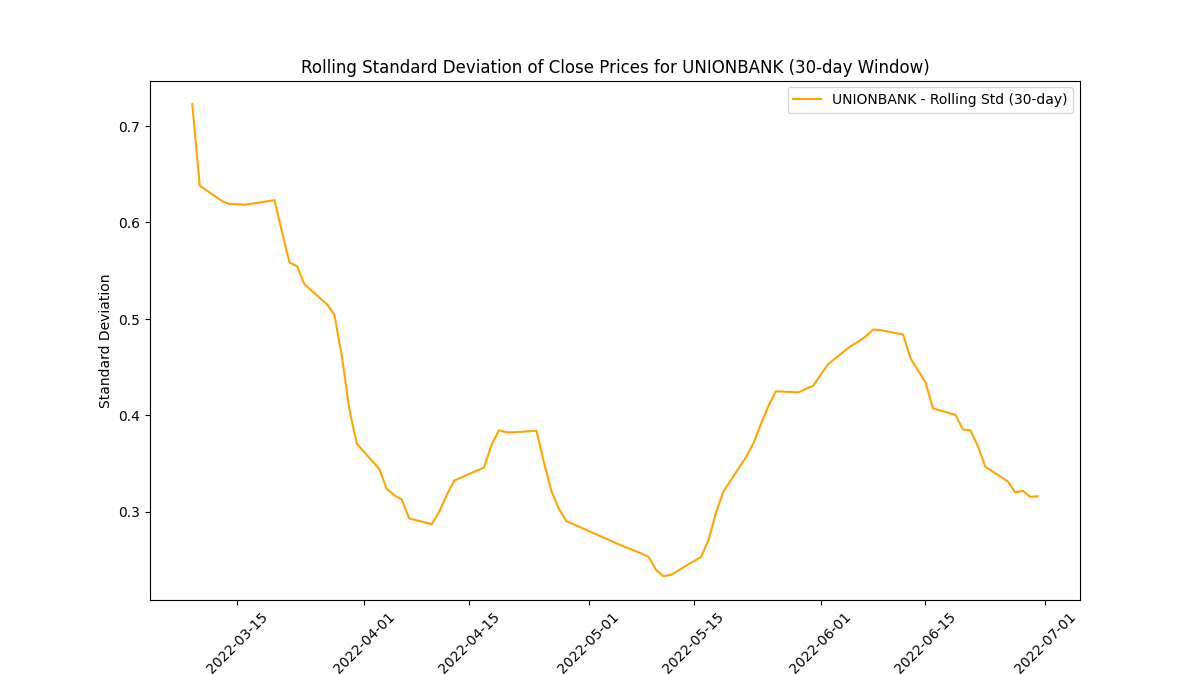

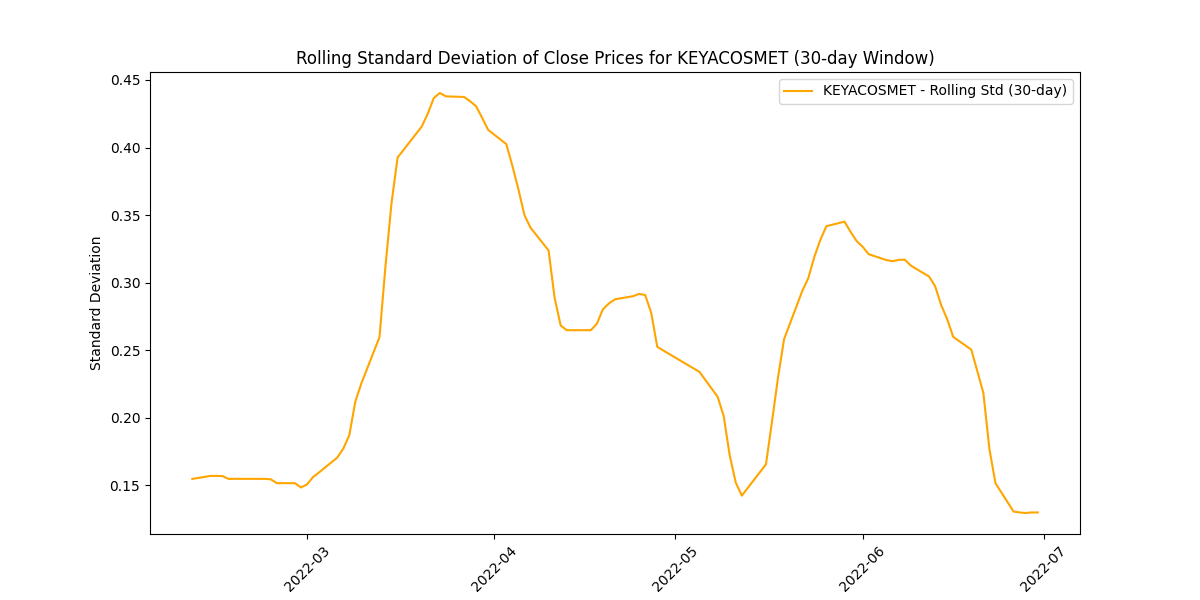

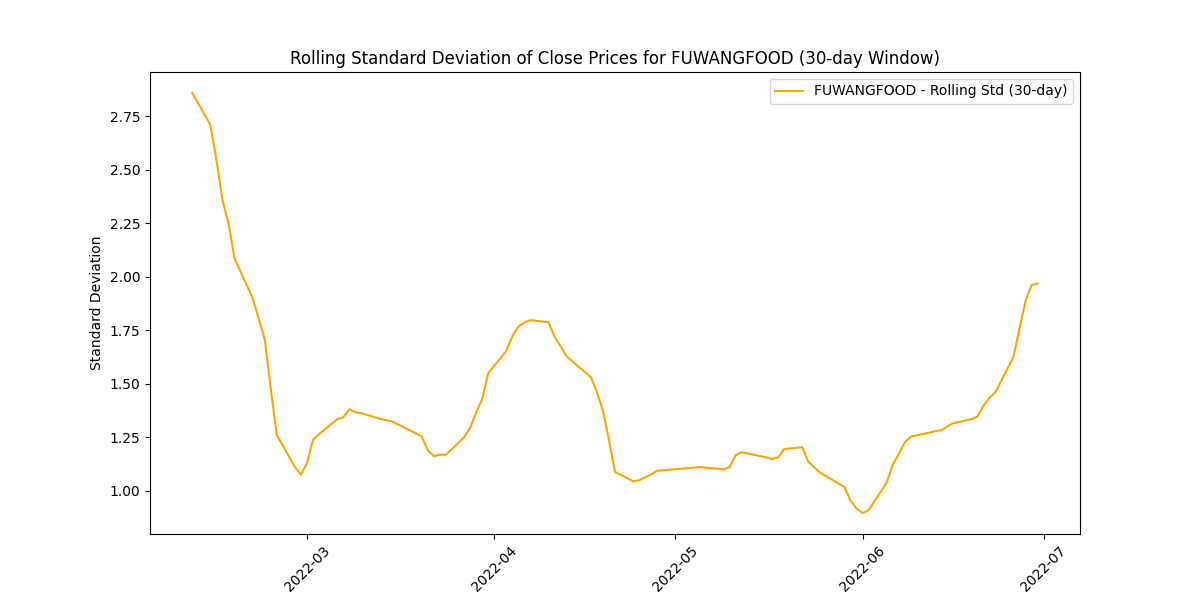

Calculate and plot the rolling standard deviation of the 'Close' prices

Analyzing the rolling standard deviation provides insights into

Volatility Levels: Comparing standard deviations helps gauge volatility differences among companies.

Market Uncertainty: Peaks in standard deviation may indicate uncertain market periods or impactful events.

Risk Perception: Higher standard deviations suggest higher risk due to greater price variability.

Stability Indicator: Lower standard deviations imply more stable prices, appealing to risk-averse investors.

Trading Insights: Fluctuations guide risk management strategies and identify potential trading opportunities.

# Calculate and plot rolling standard deviation for each of the top 5 companies for name in top_5_companies: company_data = df[df['Name'] == name] rolling_std = company_data['Close'].rolling(window=30).std() plt.figure(figsize=(12, 6)) plt.plot(company_data['Date'], rolling_std, label=f'{name} - Rolling Std (30-day)', color='orange') plt.title(f'Rolling Standard Deviation of Close Prices for {name} (30-day Window)') plt.xlabel('Date') plt.ylabel('Standard Deviation') plt.legend() plt.xticks(rotation=45) plt.show()

(3 selected plots from the 5 output plots are provided for reference.)

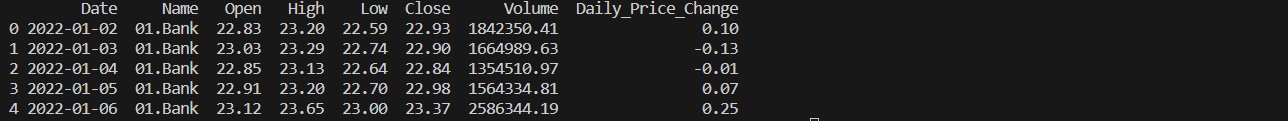

Create a new column for daily price change

# Create a new column for daily price change

df['Daily_Price_Change'] = df['Close'] - df['Open']

# Display the updated DataFrame with the new column

print(df.head())

Output:

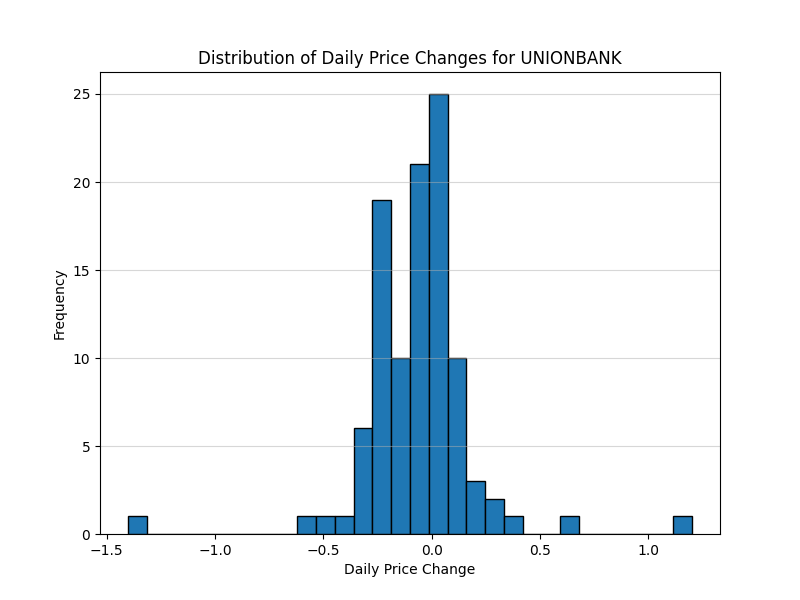

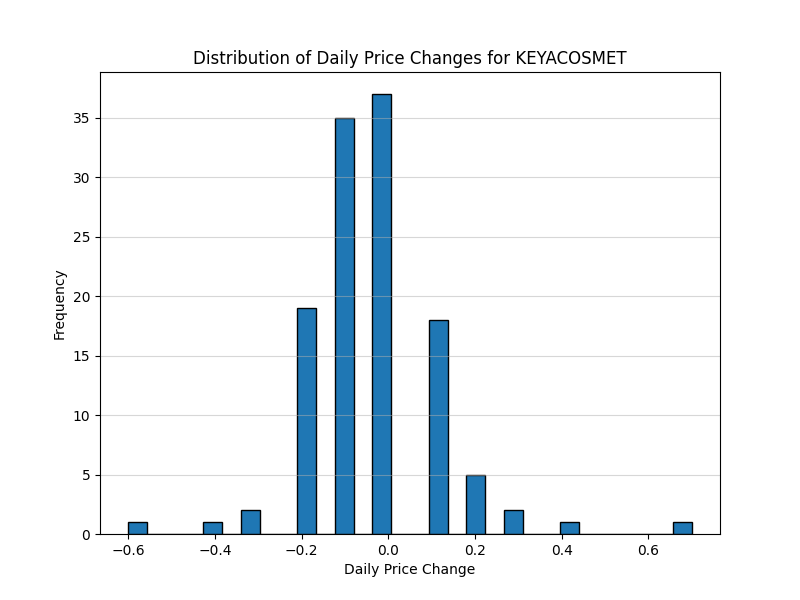

Analyze the distribution of daily price changes

# Analyze distribution of daily price changes for top 5 companies

for name in top_5_companies:

company_data = df[df['Name'] == name]

plt.figure(figsize=(8, 6))

plt.hist(company_data['Daily_Price_Change'], bins=30, edgecolor='black')

plt.title(f'Distribution of Daily Price Changes for {name}')

plt.xlabel('Daily Price Change')

plt.ylabel('Frequency')

plt.grid(axis='y', alpha=0.5)

plt.show()

(2 selected plots from the 5 output plots are provided for reference.)

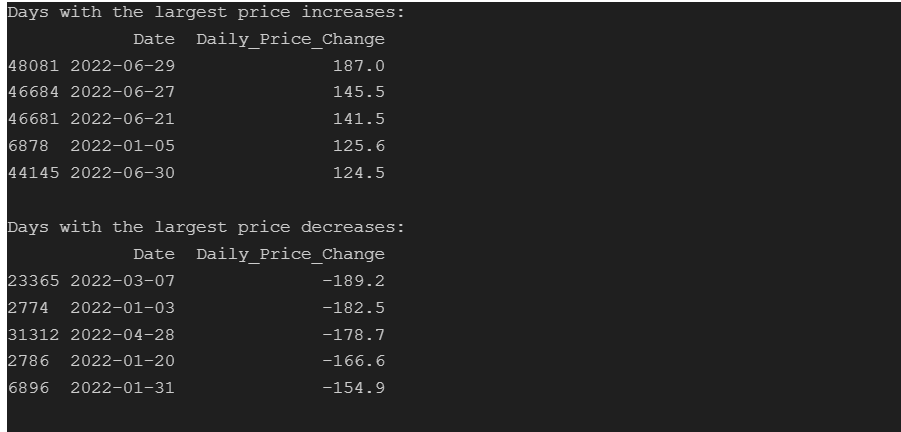

Identify days with the largest price increases and decreases

# Identify days with the largest price increases

largest_increase = df.nlargest(5, 'Daily_Price_Change')

print("Days with the largest price increases:")

print(largest_increase[['Date', 'Daily_Price_Change']])

# Identify days with the largest price decreases

largest_decrease = df.nsmallest(5, 'Daily_Price_Change')

print("\nDays with the largest price decreases:")

print(largest_decrease[['Date', 'Daily_Price_Change']])

Output:

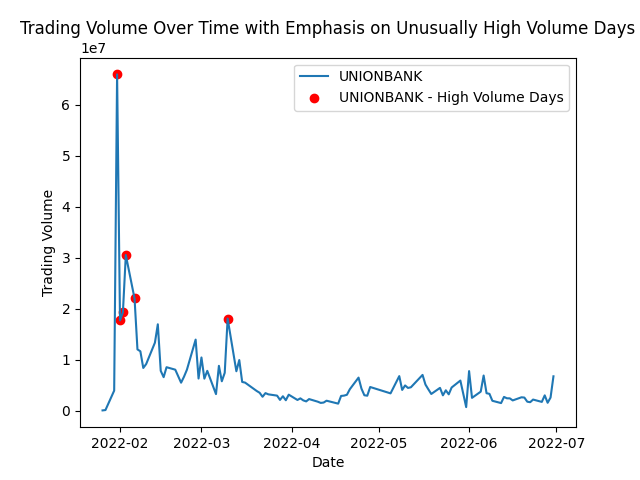

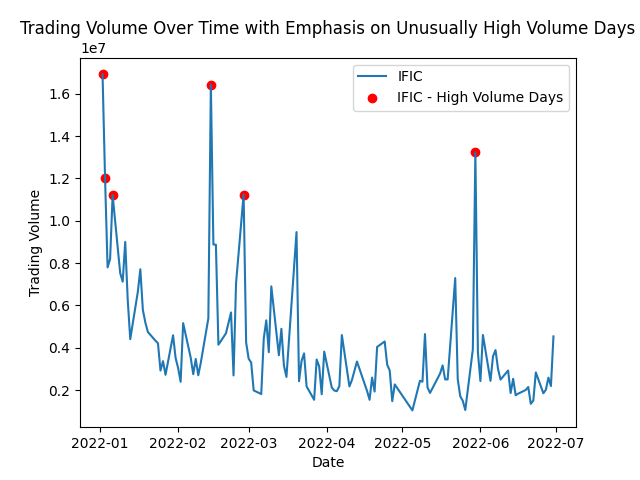

Identify stocks with unusually high trading volume on certain days

This analysis helps spot peaks in trading activity, indicating potential market events or investor interest. It shows patterns, events, or irregularities that might impact specific stocks or the market as a whole.

for name in top_5_companies:

company_data = df[df['Name'] == name]

plt.plot(company_data['Date'],company_data['Volume'],label=name)

threshold = company_data['Volume'].quantile(0.95)

high_volume_data = company_data[company_data['Volume'] > threshold]

plt.scatter(high_volume_data['Date'],high_volume_data['Volume'],color="red",marker='o',label="{} - High Volume Days".format(name))

plt.title('Trading Volume Over Time with Emphasis on Unusually High Volume Days')

plt.xlabel('Date')

plt.ylabel('Trading Volume')

plt.legend()

plt.show()

(2 selected plots from the 5 output plots are provided for reference.)

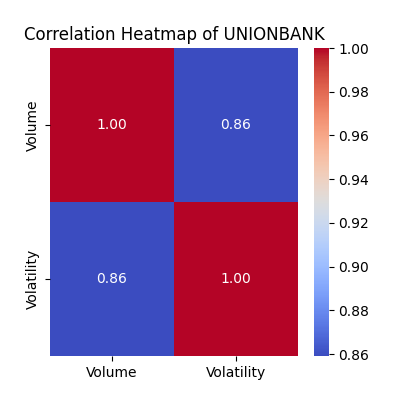

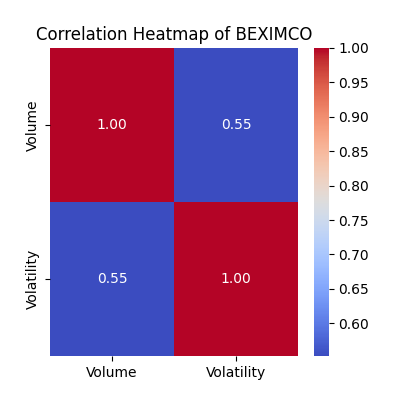

Explore the relationship between trading volume and volatility

# Calculate volatility (daily price range) for each company

df['Volatility'] = df['High'] - df['Low']

# Plot individual correlation heatmaps for each company

for name in top_5_companies:

company_data = df[df['Name'] == name]

correlation_matrix = company_data[['Volume', 'Volatility']].corr()

plt.figure(figsize=(4, 4))

sns.heatmap(correlation_matrix, annot=True, cmap='coolwarm', fmt='.2f')

plt.title(f'Correlation Heatmap of {name}')

plt.show()

The correlation heatmaps show how trading volume relates to price volatility:

Strength of Relationship: The color intensity and the value annotated within each cell represent the correlation coefficient. A value close to

1signifies a strongpositivecorrelation, while a value near-1indicates a strongnegativecorrelation. Values closer to0suggest a weaker orno linear relationship.Volume-Volatility Link: Look for patterns where higher trading volume aligns with increased volatility. Strong positive correlations indicate they often move together.

Comparing Companies: Differences in correlations among companies highlight unique trading behaviors. Higher correlations suggest more synchronized volume-volatility movements.

Trading Insights: Identify potential periods where increased trading volume predicts higher volatility, guiding potential trading strategies.

Watch for Exceptions: Notice any unexpected relationships or instances where volume and volatility don't align as anticipated, which might warrant further investigation into market behavior.

(2 selected plots from the 5 output plots are provided for reference.)

Correlation matrix between the

'Open'&'High','Low'&'Close'prices

# Iterate over each top company

for company in top_5_companies:

# Filter data for the current company

company_data = df[df['Name'] == company]

# Select columns for correlation analysis

price_data = company_data[['Open', 'High', 'Low', 'Close']]

# Calculate correlation matrix

price_correlation = price_data.corr()

Create heatmap to visualize the correlations using the

seabornpackage.

# Create heatmap

plt.figure(figsize=(6, 4))

sns.heatmap(price_correlation, annot=True, cmap='coolwarm', fmt='.2f')

plt.title(f'Correlation Heatmap of Price Data for {company}')

plt.show()

(2 selected plots from the 5 output plots are provided for reference.)

Acknowledgments

I express my sincere appreciation to Bohubrihi for their invaluable contributions to this analysis, offering guidance and resources that significantly enriched the project.

Subscribe to my newsletter

Read articles from MOHAMMAD ULLAH directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

MOHAMMAD ULLAH

MOHAMMAD ULLAH

Hey Visionary Professionals, This is me passionate professional with a track record in customer service, front desk management, account management, design, and social media to Data Analytics. I'm not just a collector of experiences, but a seeker of knowledge and innovation. While I was working in various roles over the previous 4 years, I've come to realize the immense power of data in our tech-driven world. This realization inspired me to transition my career into the data-driven domain of 𝐃𝐚𝐭𝐚 𝐀𝐧𝐚𝐥𝐲𝐭𝐢𝐜𝐬. 𝐈 𝐭𝐫𝐮𝐥𝐲 𝐛𝐞𝐥𝐢𝐞𝐯𝐞 𝐭𝐡𝐚𝐭 '𝐃𝐚𝐭𝐚 𝐢𝐬 𝐭𝐡𝐞 𝐡𝐞𝐚𝐫𝐭𝐛𝐞𝐚𝐭 𝐨𝐟 𝐢𝐧𝐧𝐨𝐯𝐚𝐭𝐢𝐨𝐧.' As an aspiring data enthusiast, I'm diving into data analytics and programming, ready to unlock valuable insights and drive change. My aim is to merge my past experience with my tech-driven future, and I'm on a mission to contribute to the success of tech-powered organizations.