S3 Programmatic access with AWS-CLI 💻 📁

Unnati Gupta

What is storage called in AWS?

AWS groups its storage services into three categories: block storage, file storage, and object storage.

Types of Storage:

Five of the main storage services are covered here.

a) Simple Storage Service (S3)

b) Elastic File System (EFS)

c) Elastic Block Store (EBS)

d) Glacier

e) Snowball

Simple Storage Service (S3):

It is object-level storage. You can store any object or file and access it from anywhere, with the option to make it public or private.

It is typically used to store unstructured data.

EFS (Elastic File System):

- EFS is a shared file system. Multiple EC2 instances, including instances in different Availability Zones of the same Region, can mount it at the same time. You can keep software update files and patch files in this storage. It is meant for Linux-based systems.

EBS (Elastic Block Store):

It is attached to an EC2-Instance as a volume, and you can access it only through the instance it is attached to.

It is block-level storage.

Glacier:

Glacier is now offered as a set of storage classes within S3 rather than only as a separate service. It is very cheap but highly reliable.

If you want to store data for a long time, such as 50 years, at the lowest price, you can use Glacier.
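As a minimal sketch of how long-term archival might look from the command line: `aws s3 cp` accepts a `--storage-class` option, so a file can be uploaded straight into a Glacier storage class. The function name `archive_to_glacier` and the file and bucket names in the usage comment are hypothetical, and the AWS CLI is assumed to be installed and configured.

```shell
# Upload a file to S3 using a Glacier storage class.
# Usage: archive_to_glacier <local_file> <bucket_name>
archive_to_glacier() {
    file="$1"
    bucket="$2"
    # DEEP_ARCHIVE and GLACIER_IR are other accepted values for --storage-class.
    aws s3 cp "$file" "s3://$bucket/$(basename "$file")" --storage-class GLACIER
}

# Example (hypothetical names):
# archive_to_glacier ./backup-2023.tar.gz my-archive-bucket
```

Objects stored this way have to be restored before they can be downloaded again, so this suits data you rarely read.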

Snowball:

- Snowball is a portable storage device. Suppose an organization has a very large amount of data to migrate to the AWS Cloud, so much that transferring it over the network is impractical. In that case, AWS ships a Snowball device to the organization. The organization copies its data onto the device and sends it back to an AWS data center, where the data is uploaded to the cloud.

❄Tasks:

Task-01

Launch an EC2 instance using the AWS Management Console and connect to it using Secure Shell (SSH). Create an S3 bucket and upload a file to it using the AWS Management Console. Access the file from the EC2 instance using the AWS Command Line Interface (AWS CLI).

Step 1: Log in to AWS as the root user (for everyday work, AWS recommends an IAM user with the required permissions instead).

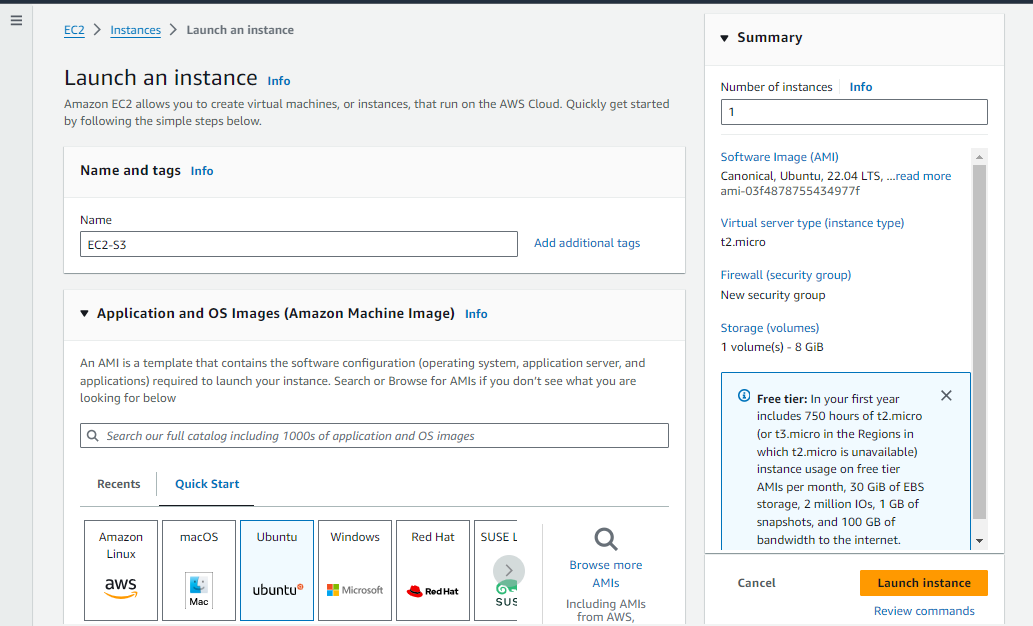

Step 2: Launch an EC2-Instance on AWS.

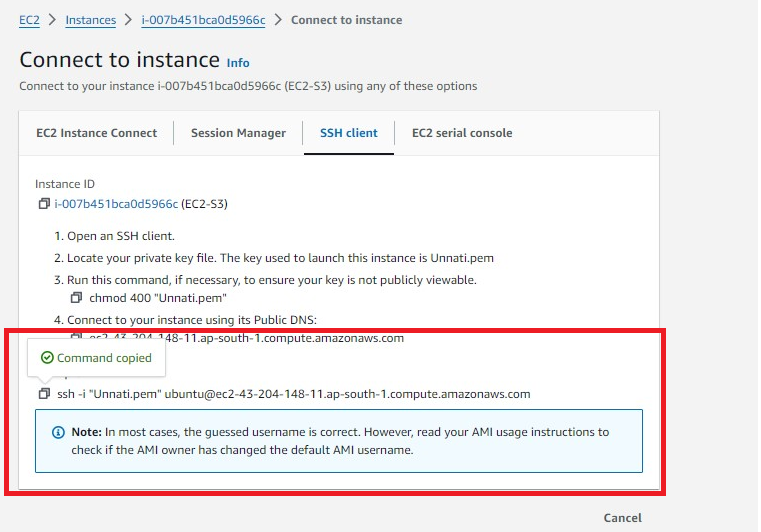

Step 3: Copy the SSH Command to access the EC2-Instance.

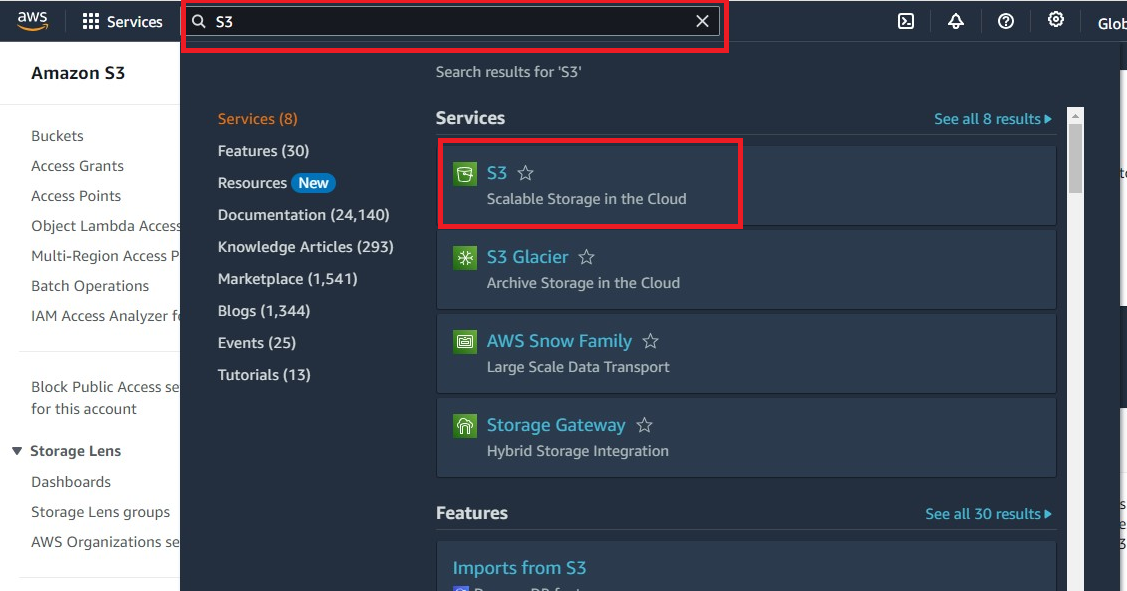

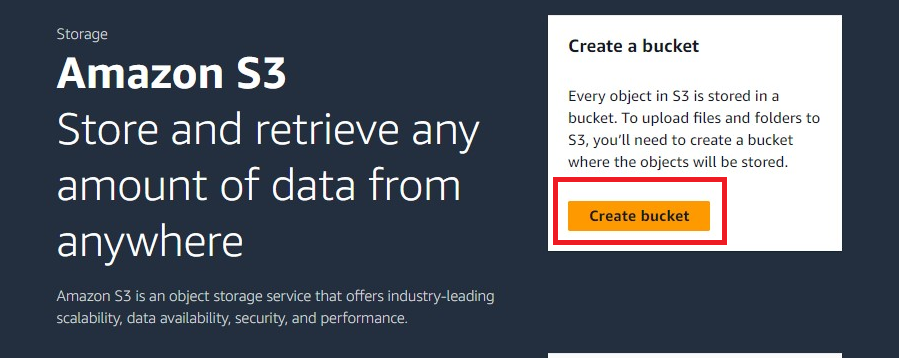
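The SSH command copied from the console usually has the shape sketched below. The function name `connect_ec2` is made up for illustration. The user name depends on the AMI (for example `ubuntu` on Ubuntu images and `ec2-user` on Amazon Linux), and the key file and host name are placeholders for your own values.

```shell
# Connect to an EC2 instance over SSH.
# Usage: connect_ec2 <key_file> <user> <public_dns>
connect_ec2() {
    key="$1"
    user="$2"
    host="$3"
    # SSH rejects private keys that other users can read, so restrict it first.
    chmod 400 "$key" || return 1
    ssh -i "$key" "$user@$host"
}

# Example (placeholder values):
# connect_ec2 ./my-key.pem ubuntu <public_dns_of_your_instance>
```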

Step 4: Go to the search bar on the AWS dashboard and type S3.

Step 5: Click on the "Create Bucket" option to create storage.

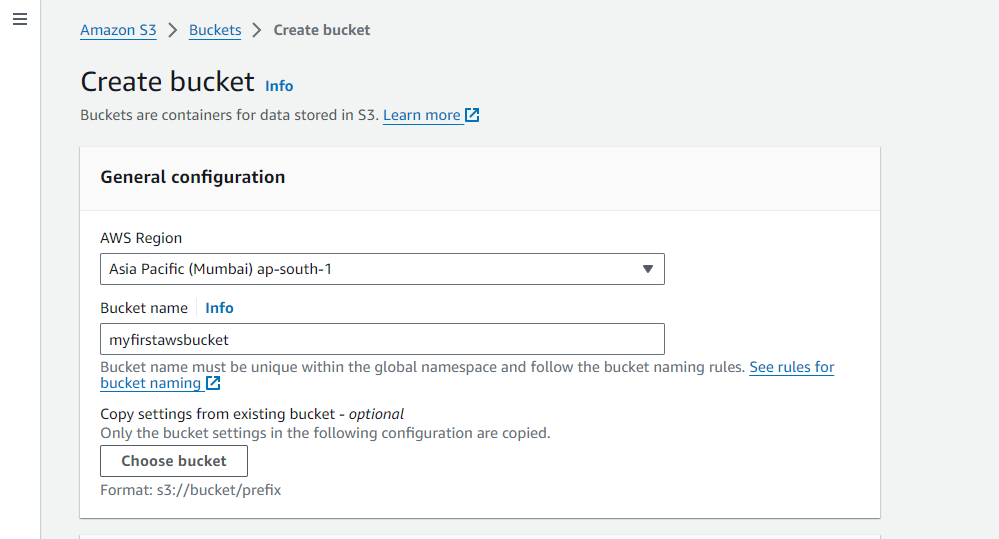

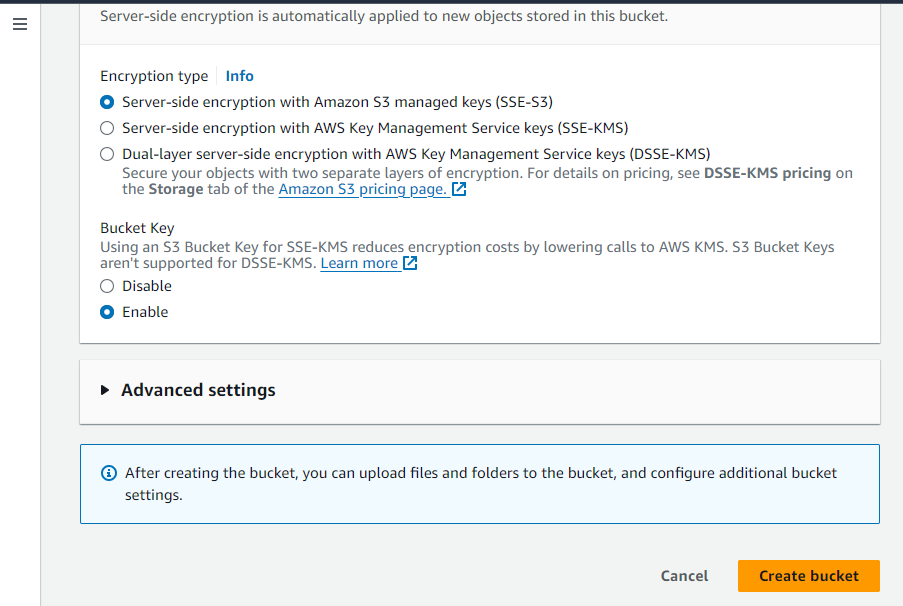

Give the bucket a name. Select the same Region you used to create your EC2-Instance, then click on the "Create Bucket" button.

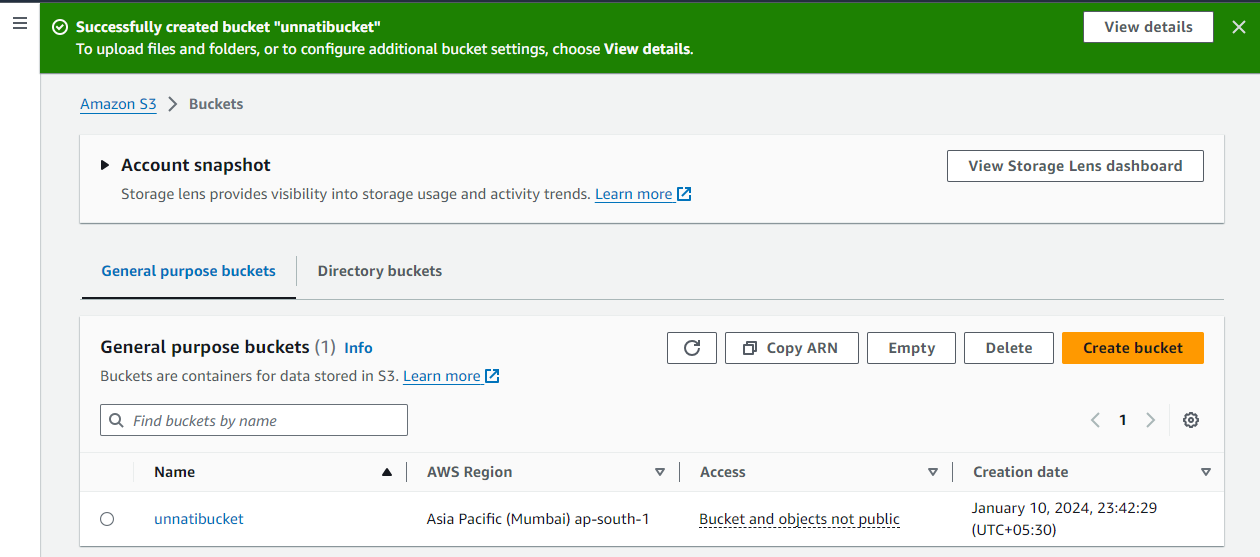

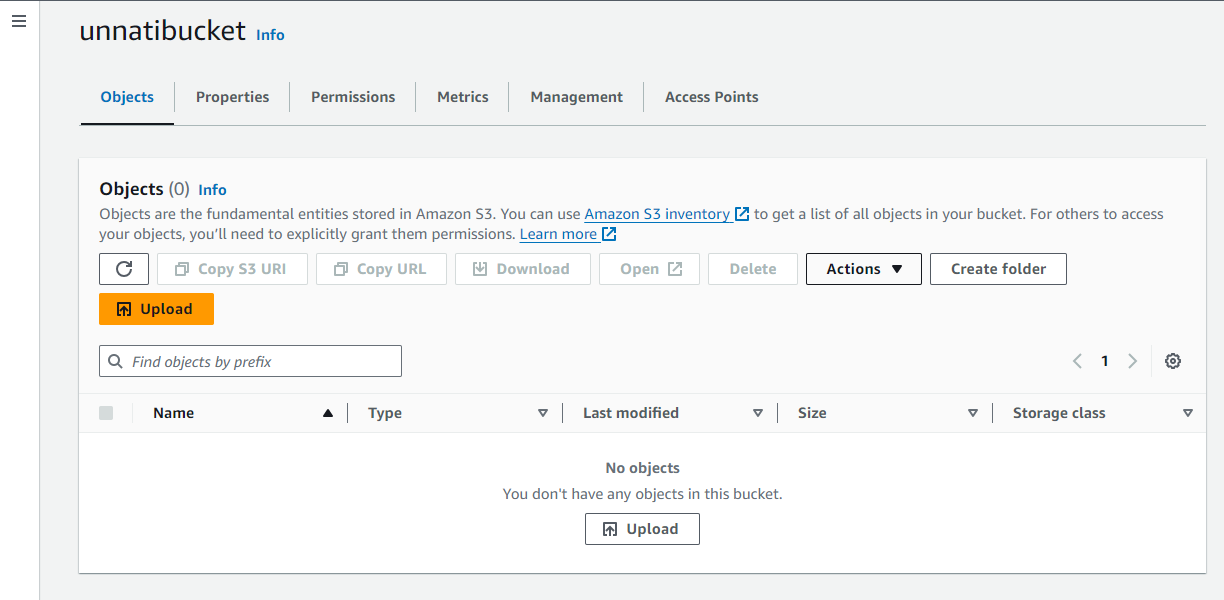

Step 6: Verify that your bucket was created successfully.

Congratulations!! Your bucket was created successfully.

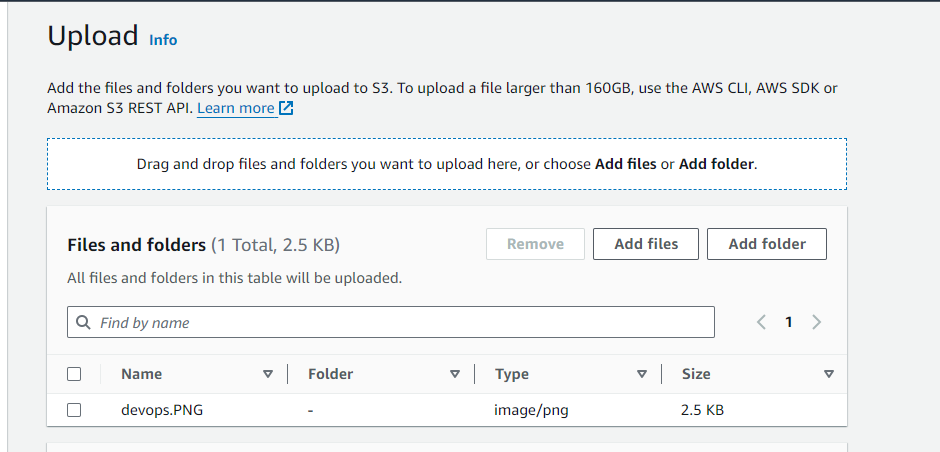
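The same bucket could also be created from the command line once the AWS CLI is installed and configured. This is only a sketch: `create_bucket` is a hypothetical helper, and the bucket name and Region in the usage comment are placeholders.

```shell
# Create a bucket in a given Region, then confirm it shows up in the bucket list.
# Usage: create_bucket <bucket_name> <region>
create_bucket() {
    bucket="$1"
    region="$2"
    aws s3 mb "s3://$bucket" --region "$region" || return 1
    # `aws s3 ls` prints "<date> <time> <bucket_name>" per bucket;
    # succeed only if the third column matches the name exactly.
    aws s3 ls | awk -v b="$bucket" '$3 == b { found = 1 } END { exit !found }'
}

# Example (placeholder values):
# create_bucket my-demo-bucket us-east-1
```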

Step 7: To upload a document to the bucket, click on the "Upload" button.

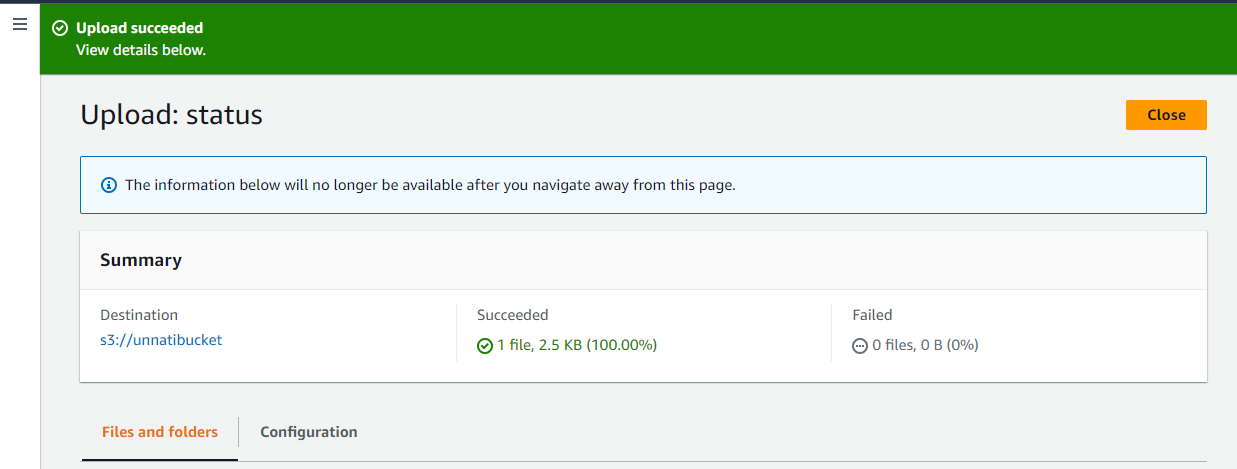

Now check whether it was uploaded successfully.

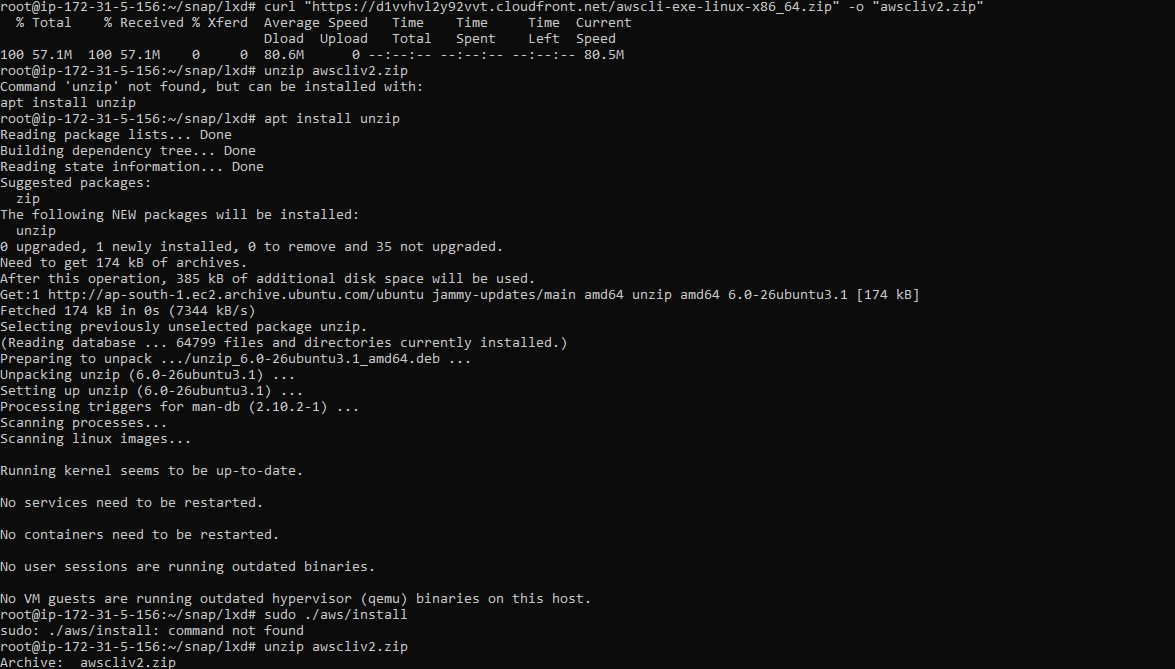

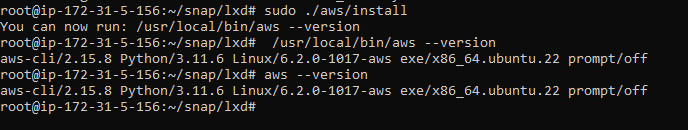

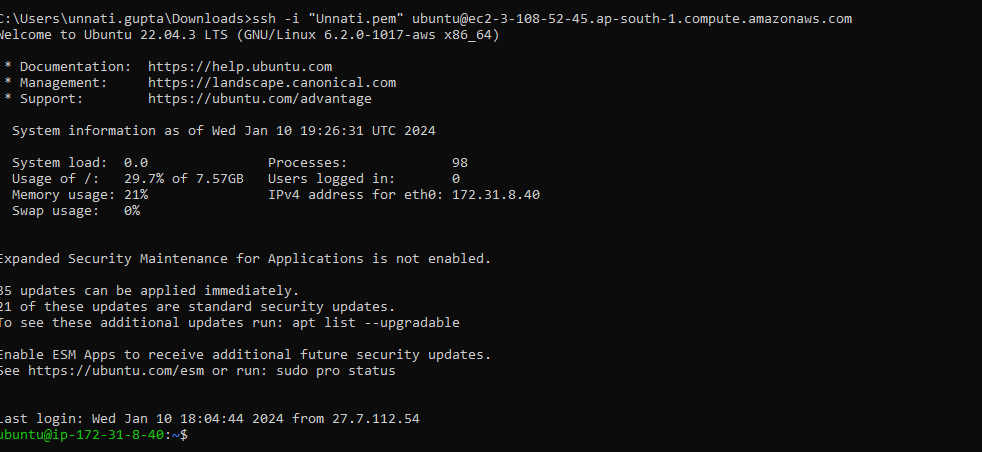
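For comparison, a console upload like the one above corresponds roughly to `aws s3 cp` with a local source and an S3 destination. The helper name `upload_to_bucket` is hypothetical, and the file and bucket names are placeholders.

```shell
# Upload a local file to the top level of a bucket, then list the bucket.
# Usage: upload_to_bucket <local_file> <bucket_name>
upload_to_bucket() {
    # Fail early with a clear message if the file does not exist.
    [ -f "$1" ] || { echo "no such file: $1" >&2; return 1; }
    aws s3 cp "$1" "s3://$2/" &&
        aws s3 ls "s3://$2/"
}

# Example (placeholder values):
# upload_to_bucket ./notes.txt my-demo-bucket
```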

Step 8: To perform the task from the CLI, you need to install the AWS CLI on the EC2-Instance.

Verify whether it was installed successfully.

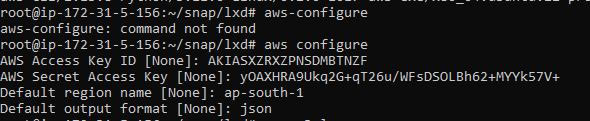
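The screenshot does not show the install commands, so here is one possible way to do it, assuming a 64-bit x86 Linux instance with `curl`, `unzip`, and `sudo` available. The function name `ensure_aws_cli` is made up for this sketch; it installs AWS CLI v2 with the official installer only when `aws` is not already on the path.

```shell
# Install AWS CLI v2 if it is missing, then print the installed version.
ensure_aws_cli() {
    if command -v aws >/dev/null 2>&1; then
        aws --version
        return 0
    fi
    # Official installer bundle for 64-bit x86 Linux.
    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o awscliv2.zip &&
        unzip -q awscliv2.zip &&
        sudo ./aws/install &&
        aws --version
}

# Example:
# ensure_aws_cli
```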

Configure the AWS CLI on your EC2-Instance, then confirm which identity the CLI is using.

aws configure

aws sts get-caller-identity

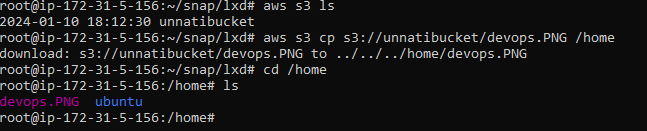

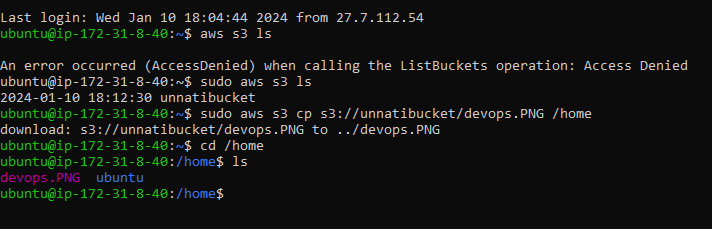
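`aws configure` asks its questions interactively. If you prefer a scripted setup, individual settings can be written with `aws configure set`. The sketch below sets only the Region and output format and assumes credentials are already available (for example from an earlier `aws configure` run or an IAM role attached to the instance). `configure_cli` is a hypothetical name.

```shell
# Set Region and output format without prompts, then print the account ID
# of the identity the CLI is using.
# Usage: configure_cli <region>
configure_cli() {
    region="$1"
    aws configure set region "$region" &&
        aws configure set output json &&
        aws sts get-caller-identity --query Account --output text
}

# Example (placeholder Region):
# configure_cli us-east-1
```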

Step 9: After installing successfully, access the storage by using the AWS CLI and copy the file locally (to the EC2-Instance).

# List all buckets

aws s3 ls

# Copy a file from the bucket to the local machine

aws s3 cp s3://<bucket_name>/<file_name> <path_to_local_machine>

Finally, the S3 storage and the uploaded files are accessible from the AWS CLI.

Task-02

Create a snapshot of the EC2 instance and use it to launch a new EC2 instance. Download a file from the S3 bucket using the AWS CLI. Verify that the contents of the file are the same on both EC2 instances.

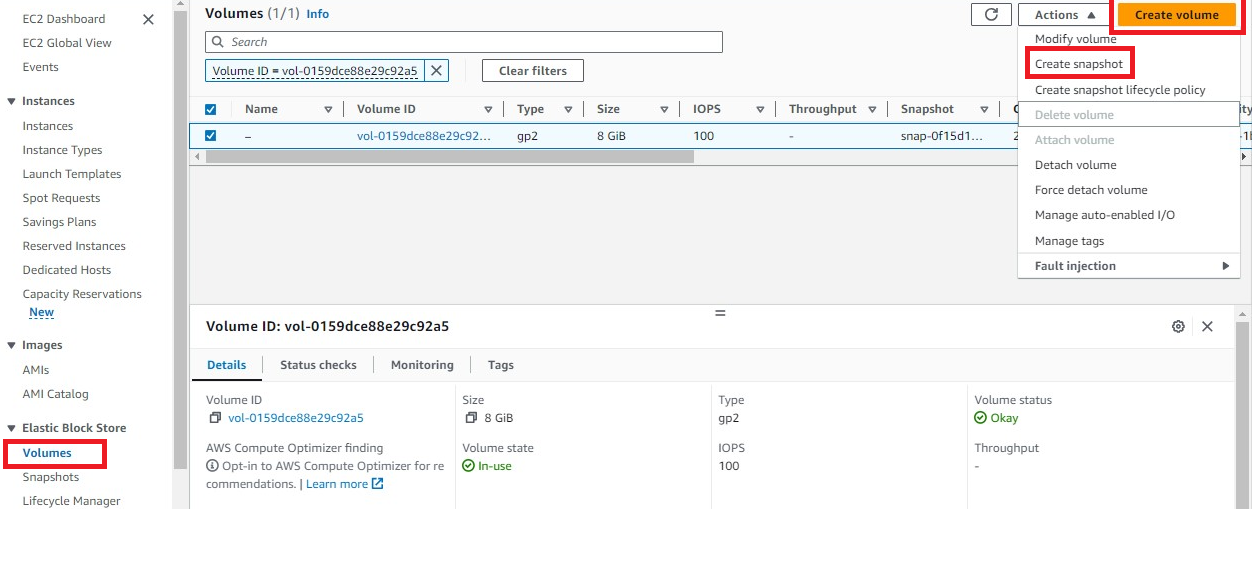

Step 1: To create a snapshot, go to the Volumes section, select the volume, then click on "Create Snapshot".

Step 2: Give your snapshot a name, then click on "Create Snapshot".

Step 3: The snapshot is created successfully.

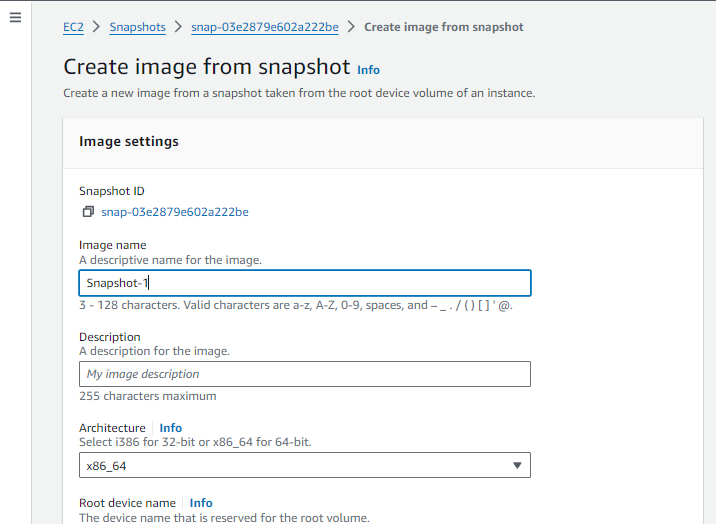

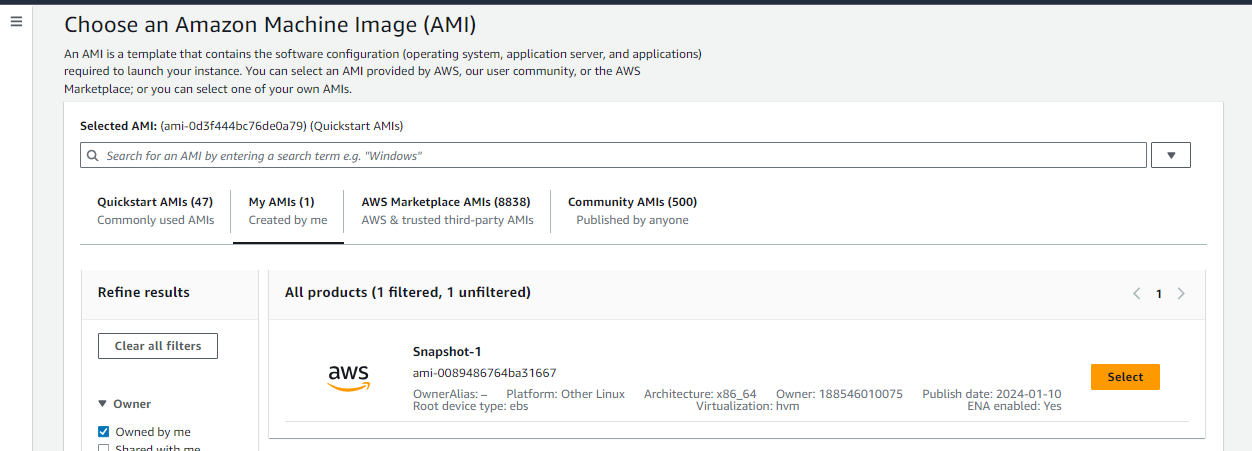

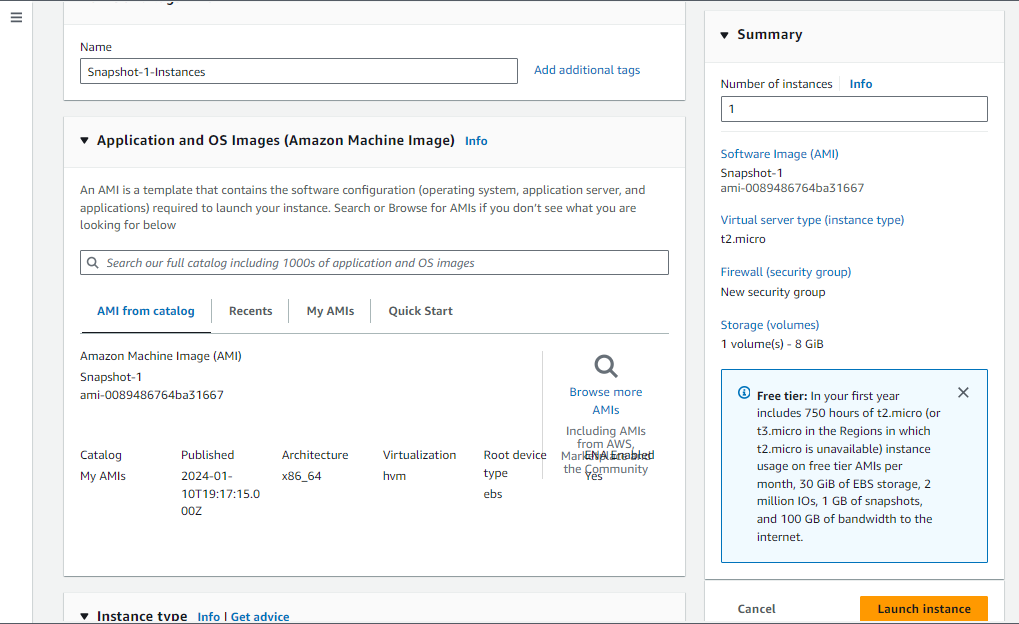
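The snapshot steps above can be approximated from the CLI as well. This is a sketch under assumptions: `snapshot_volume` is a hypothetical helper, and the volume ID in the usage comment is a placeholder for the root volume of your instance.

```shell
# Snapshot an EBS volume and wait until the snapshot is complete.
# Usage: snapshot_volume <volume_id> <description>   (prints the snapshot ID)
snapshot_volume() {
    snap_id=$(aws ec2 create-snapshot \
        --volume-id "$1" \
        --description "$2" \
        --query SnapshotId --output text) || return 1
    # Block until the snapshot reaches the "completed" state.
    aws ec2 wait snapshot-completed --snapshot-ids "$snap_id" || return 1
    echo "$snap_id"
}

# Example (placeholder volume ID):
# snapshot_volume <volume_id> "snapshot of my EC2 root volume"
```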

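Step 4: Create a new EC2-Instance by using the above snapshot.

To select the snapshot, click on "Browse more AMIs", then select it. If it is not listed there, you may first need to create an image from the snapshot (Snapshots, Actions, "Create image from snapshot") and then pick that image under "My AMIs".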

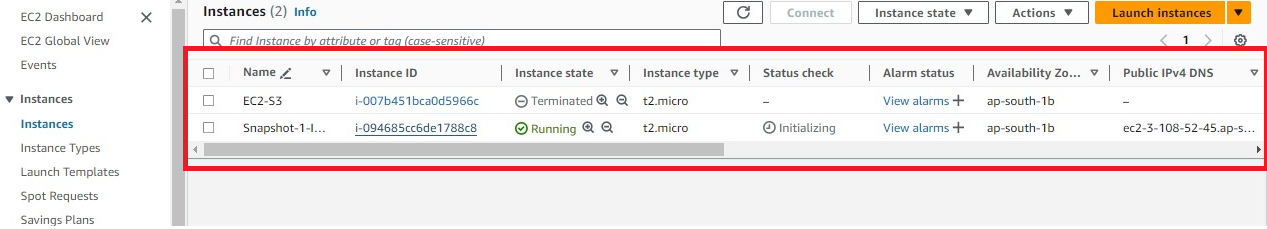
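On the CLI, launching from a snapshot is a two-step process: register an image (AMI) backed by the snapshot, then launch an instance from that image. The sketch below is not the author's method, just one way to express it. `launch_from_snapshot` is a hypothetical name, the `t2.micro` instance type and `x86_64` architecture are assumptions, and the root device name must match the original instance (commonly `/dev/xvda` or `/dev/sda1`).

```shell
# Register an AMI from a snapshot, then launch one instance from it.
# Usage: launch_from_snapshot <snapshot_id> <root_device> <key_name>
# Prints the ID of the new instance.
launch_from_snapshot() {
    image_id=$(aws ec2 register-image \
        --name "from-$1" \
        --architecture x86_64 \
        --virtualization-type hvm \
        --root-device-name "$2" \
        --block-device-mappings "DeviceName=$2,Ebs={SnapshotId=$1}" \
        --query ImageId --output text) || return 1
    # Instance type is an assumption; the default security group is used.
    aws ec2 run-instances \
        --image-id "$image_id" \
        --instance-type t2.micro \
        --key-name "$3" \
        --query 'Instances[0].InstanceId' --output text
}

# Example (placeholder values):
# launch_from_snapshot <snapshot_id> /dev/xvda <key_pair_name>
```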

Step 5: Verify that the EC2-Instance was created successfully.

Step 6: Access the EC2-Instance by using the SSH key to perform tasks on the CLI.

Step 7: Access the storage and check whether the uploaded file exists.

# List all buckets

aws s3 ls

# Copy a file from the bucket to the local machine

aws s3 cp s3://<bucket_name>/<file_name> <path_to_local_machine>
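To check that the file contents are the same on both EC2 instances, one simple approach is to compare checksums. The helpers below are a sketch with made-up names: run `file_digest` on the downloaded file on each instance and compare the two values, or use `same_contents` when both copies are on one machine. `sha256sum` is assumed to be available, as it is on most Linux AMIs.

```shell
# Print the SHA-256 digest of a file (digest only, without the file name).
file_digest() {
    sha256sum "$1" | cut -d' ' -f1
}

# Succeed only if two files have identical contents.
same_contents() {
    [ "$(file_digest "$1")" = "$(file_digest "$2")" ]
}

# Example (placeholder paths):
# file_digest <path_to_downloaded_file>
# same_contents <first_copy> <second_copy> && echo "files match"
```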

Thank you for giving your precious time to read this blog/article. If you have any suggestions or improvements for my blogs, feel free to connect on LinkedIn: Unnati Gupta. Happy Learning 💥🙌!!!

