Automated Backup and Rotation Script

Kunal Maurya


This Bash script automates the backup, rotation, and deletion of backups for a specified GitHub repository. It supports daily, weekly, and monthly backups and uses Google Drive for backup storage.

Prerequisites:

- gdrive installation
- Google Drive account
- Webhook ID (from https://webhook.site/, used for notifications)
- Google Drive API credentials (authentication)

Google Drive Integration

gdrive

Installing gdrive on Linux:

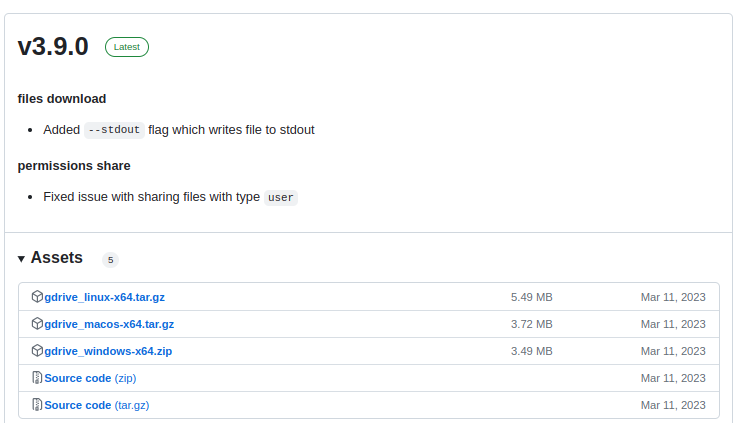

Download the latest binary from https://github.com/glotlabs/gdrive/releases

Unpack the archive and put the binary somewhere in your PATH (e.g. /usr/local/bin on Linux and macOS):

# Make it executable
chmod +x gdrive
# Move it to a directory in your PATH (e.g., /usr/local/bin/)
sudo mv gdrive /usr/local/bin/

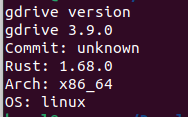

Check the installed version:

gdrive version

Create Google API credentials:

Follow the steps from https://github.com/glotlabs/gdrive/blob/main/docs/create_google_api_credentials.md

1. Go to the Google Cloud Console.
2. Create a new project (or select an existing one) from the menu.
3. Search for "drive api" in the search bar and select "Google Drive API" under the Marketplace section.
4. Click the button to enable the Google Drive API.
5. Click the "Credentials" menu item.
6. Click the "Configure Consent Screen" button.
7. Select the "External" user type ("Internal" is only available to Workspace subscribers).
8. Click the "Create" button.
9. Fill out the "App name", "User support email", and "Developer contact information" fields with your information; put the Project ID into the app name (keep the other fields empty).
10. Click the "Save and continue" button. If you get "An error saving your app has occurred", try changing the project name to something unique.
11. Click the "Add or remove scopes" button.
12. Search for "google drive api".
13. Select the scopes ".../auth/drive" and ".../auth/drive.metadata.readonly".
14. Click the "Update" button.
15. Click the "Save and continue" button.
16. Click the "Add users" button.
17. Add the email of the user you will use with gdrive.
18. Click the "Add" button until the sidebar disappears.
19. Click the "Save and continue" button.
20. Click the "Credentials" menu item again.
21. Click the "Create credentials" button in the top bar and select "OAuth client ID".
22. Select the application type "Desktop app" and give it a name.
23. Click the "Create" button.
24. You should be presented with a Client ID and Client Secret. If you don't copy or download them now, you can find them again later.
25. Click "OK".
26. Click the "OAuth consent screen" menu item.
27. Click "Publish app" (to prevent the token from expiring after 7 days).
28. Click "Confirm" in the dialog.

That's it!

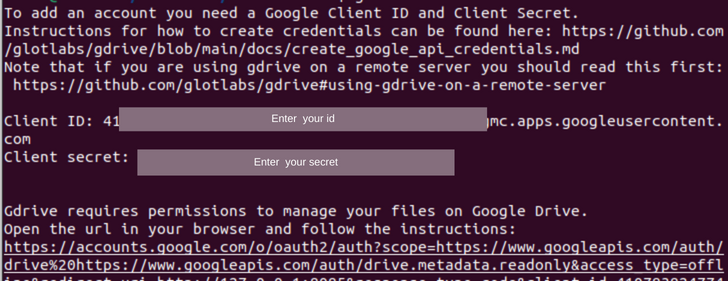

Add a Google account to gdrive

1. Run gdrive account add. This will prompt you for your Google Client ID and Client Secret (created in the previous section).
2. Next, you will be presented with a URL.
3. Follow the URL and give gdrive approval to access your Drive.
4. You will be redirected to http://localhost:8085 (gdrive starts a temporary web server), which completes the setup.

gdrive is now ready to use!

Automated Backup and Rotation Script

#!/bin/bash

# GitHub repository URL to clone

GITHUB_REPO="https://github.com/KUNAL-MAURYA1470/Redis_cluster_k8s.git" # Replace with your GitHub repository URL

# Destination folder for backups

BACKUP_DIR="/media/kunal/Storage6/backup"

# Project name

PROJECT_NAME="project"

# Google Drive folder ID for backups

GOOGLE_DRIVE_FOLDER_ID="Enter your"

# Number of daily, weekly, and monthly backups to retain

BACKUP_RETENTION_DAILY=1

BACKUP_RETENTION_WEEKLY=4

BACKUP_RETENTION_MONTHLY=3

# cURL request URL for notifications

CURL_REQUEST_URL="https://webhook.site/your_unique_id"

DISABLE_CURL_REQUEST=false # Set to true to disable cURL request for testing

# Get the current day of the month and day of the week

DAYMONTH=$(date +%d)

DAYWEEK=$(date +%u)

# Determine the backup frequency based on the current day

if [[ $DAYMONTH -eq 1 ]]; then

FN='monthly'

echo "Monthly task"

elif [[ $DAYWEEK -eq 7 ]]; then

FN='weekly'

echo "Weekly task"

elif [[ $DAYWEEK -lt 7 ]]; then

FN='daily'

echo "Daily task"

fi

# Create a timestamp for the backup

DATE=$FN-$(date +"%Y%m%d")

# Function to display help text

function show_help {

echo "BackupRotation available options are"

echo

echo "-s Source directory to be backed up"

echo "-b Destination folder for the backups"

echo "-n Name of the project being backed up"

echo "-d Number of Daily backups to keep, negative numbers will disable"

echo "-w Number of Weekly backups to keep, negative numbers will disable"

echo "-m Number of Monthly backups to keep, negative numbers will disable"

echo "-h show this help text"

}

# Function to perform the backup

function backup {

temp_folder=$(mktemp -d)

git clone "$GITHUB_REPO" "$temp_folder" || { echo "Error: Could not clone GitHub repository."; exit 1; }

# Create timestamped backup directory

timestamp=$(date +%Y%m%d_%H%M%S)

mkdir -p "$BACKUP_DIR/$timestamp" || { echo "Error: Could not create backup directory."; exit 1; }

# Create a zip archive of the cloned project

zip -r "$BACKUP_DIR/$PROJECT_NAME-$DATE.zip" "$temp_folder" || { echo "Error: Could not create zip archive."; exit 1; }

# Upload to Google Drive using gdrive

gdrive files upload --recursive --parent "$GOOGLE_DRIVE_FOLDER_ID" "$BACKUP_DIR/$PROJECT_NAME-$DATE.zip" || { echo "Error: Could not upload to Google Drive."; exit 1; }

# Log success message

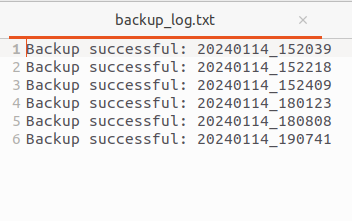

echo "Backup successful: $timestamp" >> "$BACKUP_DIR/backup_log.txt"

# Send cURL request on success

if [ "$DISABLE_CURL_REQUEST" = false ]; then

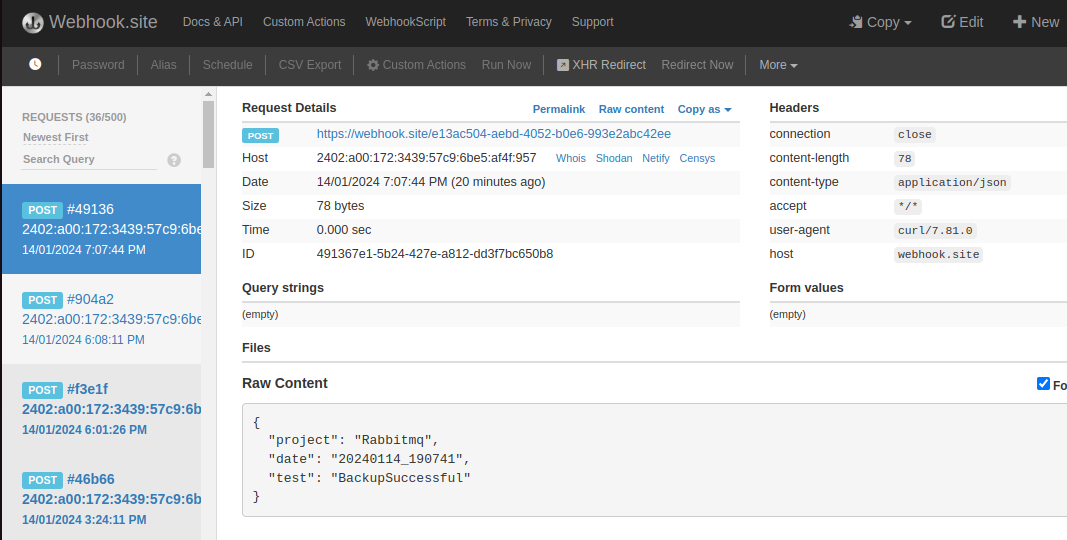

curl -X POST -H "Content-Type: application/json" -d '{"project": "'"$PROJECT_NAME"'", "date": "'"$timestamp"'", "test": "BackupSuccessful"}' "$CURL_REQUEST_URL"

fi

# Clean up temporary folder

rm -rf "$temp_folder"

# Rotate old backups

rotatebackup

}

function rotatebackup {

echo "Rotating old backups started.."

# Delete backups older than RETENTION_DAYS days from local file system

find "$BACKUP_DIR" -maxdepth 1 -type d -mtime +"$((RETENTION_DAYS * 24 * 60 * 60))" -exec rm -r {} \;

# Delete backups older than RETENTION_WEEKS weeks from local file system

find "$BACKUP_DIR" -maxdepth 1 -type d -ctime +"$((RETENTION_WEEKS * 7 * 24 * 60 * 60))" -exec rm -r {} \;

# Delete backups older than RETENTION_MONTHS months from local file system

find "$BACKUP_DIR" -maxdepth 1 -type d -ctime +"$((RETENTION_MONTHS * 30 * 24 * 60 * 60))" -exec rm -r {} \;

# Code to identify and delete old backups from Google Drive based on RETENTION_DAYS days.

OLD_BACKUPS_DAILY=$(

gdrive files list --parent $GOOGLE_DRIVE_FOLDER_ID | # List files in the specified Google Drive folder

grep "daily" | # Filter lines containing the word 'docker-daily'

awk '{print $1, $2}' | # Extract file ID and filename

while read -r FILE_ID FILE_NAME; do

# Extract the date from the filename (assuming the date format is YYYYMMDD)

FILE_DATE=$(echo "$FILE_NAME" | awk -F'[-.]' '{print $3}')

# Convert the file date to a format that can be compared with the current date

FILE_DATE_FORMATTED=$(date -d "$FILE_DATE" +"%Y%m%d")

# Get the current date in the same format

CURRENT_DATE=$(date +"%Y%m%d")

# Calculate the difference in days

DAYS_DIFFERENCE=$(( ( $(date -d "$CURRENT_DATE" +%s) - $(date -d "$FILE_DATE_FORMATTED" +%s) ) / 86400 ))

# Check if the file is older than the retention period

if [ $DAYS_DIFFERENCE -gt "$BACKUP_RETENTION_DAILY" ]; then

# Print the file ID if it's older than the retention period

echo "$FILE_ID"

fi

done

)

# Code to identify and delete old backups from Google Drive based on RETENTION_WEEKS weeks.

OLD_BACKUPS_WEEKLY=$(

gdrive files list --parent $GOOGLE_DRIVE_FOLDER_ID | # List files in the specified Google Drive folder

grep "weekly" | # Filter lines containing the word 'weekly'

awk '{print $1, $2}' | # Extract file ID and filename

while read -r FILE_ID FILE_NAME; do

# Extract the date from the filename (assuming the date format is YYYYMMDD)

FILE_DATE=$(echo "$FILE_NAME" | awk -F'[-.]' '{print $3}')

# Convert the file date to a format that can be compared with the current date

FILE_DATE_FORMATTED=$(date -d "$FILE_DATE" +"%Y%m%d")

# Get the current date in the same format

CURRENT_DATE=$(date +"%Y%m%d")

# Calculate the difference in weeks

WEEKS_DIFFERENCE=$(( ( $(date -d "$CURRENT_DATE" +%s) - $(date -d "$FILE_DATE_FORMATTED" +%s) ) / 604800 )) # 604800 seconds in a week

# Check if the file is older than 4 weeks

if [ $WEEKS_DIFFERENCE -gt $BACKUP_RETENTION_WEEKLY ]; then

# Print the file ID if it's older than 4 weeks

echo "$FILE_ID"

fi

done

)

# Code to identify and delete old backups from Google Drive based on RETENTION_MONTHS months.

OLD_BACKUPS_MONTHLY=$(

gdrive files list --parent $GOOGLE_DRIVE_FOLDER_ID | # List files in the specified Google Drive folder

grep "monthly" | # Filter lines containing the word 'docker-monthly'

awk '{print $1, $2}' | # Extract file ID and filename

while read -r FILE_ID FILE_NAME; do

# Extract the date from the filename (assuming the date format is YYYYMMDD)

FILE_DATE=$(echo "$FILE_NAME" | awk -F'[-.]' '{print $3}')

# Convert the file date to a format that can be compared with the current date

FILE_DATE_FORMATTED=$(date -d "$FILE_DATE" +"%Y%m%d")

# Get the current date in the same format

CURRENT_DATE=$(date +"%Y%m%d")

# Calculate the difference in months

MONTHS_DIFFERENCE=$(( ( $(date -d "$CURRENT_DATE" +%Y) - $(date -d "$FILE_DATE_FORMATTED" +%Y) ) * 12 + \

$(date -d "$CURRENT_DATE" +%m) - $(date -d "$FILE_DATE_FORMATTED" +%m) ))

# Check if the file is older than 4 months

if [ $MONTHS_DIFFERENCE -gt $BACKUP_RETENTION_MONTHLY ]; then

# Print the file ID if it's older than 4 months

echo "$FILE_ID"

fi

done

)

# Delete old backups

for FILE_ID in $OLD_BACKUPS_DAILY $OLD_BACKUPS_WEEKLY $OLD_BACKUPS_MONTHLY; do

gdrive files delete "$FILE_ID" || { echo "Error: Could not delete old files from Google Drive."; exit 1; }

done

echo "Rotating old backups completed"

}

# Parse command-line options

while getopts s:b:n:d:w:m:y:h option; do

case "${option}" in

s) SRC_DIR=${OPTARG} ;;

b) BACKUP_DIR=${OPTARG} ;;

n) PROJECT_NAME=${OPTARG} ;;

d) BACKUP_RETENTION_DAILY=${OPTARG} ;;

w) BACKUP_RETENTION_WEEKLY=${OPTARG} ;;

m) BACKUP_RETENTION_MONTHLY=${OPTARG} ;;

h) show_help

exit 0 ;;

esac

done

# Check conditions for daily, weekly, and monthly backups and run the backup function accordingly

if [[ $BACKUP_RETENTION_DAILY -gt 0 && ! -z "$BACKUP_RETENTION_DAILY" && $BACKUP_RETENTION_DAILY -ne 0 && $FN == daily ]]; then

echo "Daily Backup Run"

backup

fi

if [[ $BACKUP_RETENTION_WEEKLY -gt 0 && ! -z "$BACKUP_RETENTION_WEEKLY" && $BACKUP_RETENTION_WEEKLY -ne 0 && $FN == weekly ]]; then

echo "Weekly Backup Run"

backup

fi

if [[ $BACKUP_RETENTION_MONTHLY -gt 0 && ! -z "$BACKUP_RETENTION_MONTHLY" && $BACKUP_RETENTION_MONTHLY -ne 0 && $FN == monthly ]]; then

echo "Monthly Backup Run"

backup

fi

Let's understand the script

Here's a breakdown of the script's functionality:

Configuration Variables:

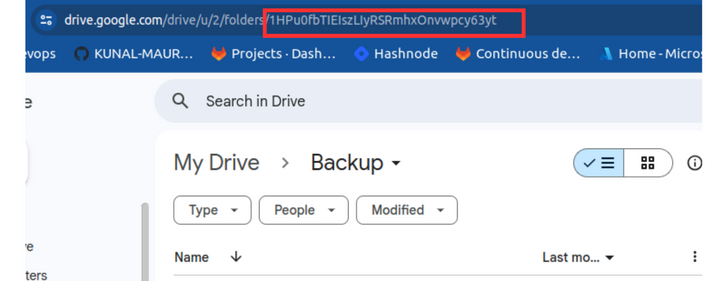

- GITHUB_REPO: URL of the GitHub repository to clone.
- BACKUP_DIR: Destination folder for local backups.
- PROJECT_NAME: Name of the project, used as the prefix of the backup file name.
- GOOGLE_DRIVE_FOLDER_ID: ID of the Google Drive folder that stores the backups. After creating the folder, you can find the ID as the last part of the folder's URL.

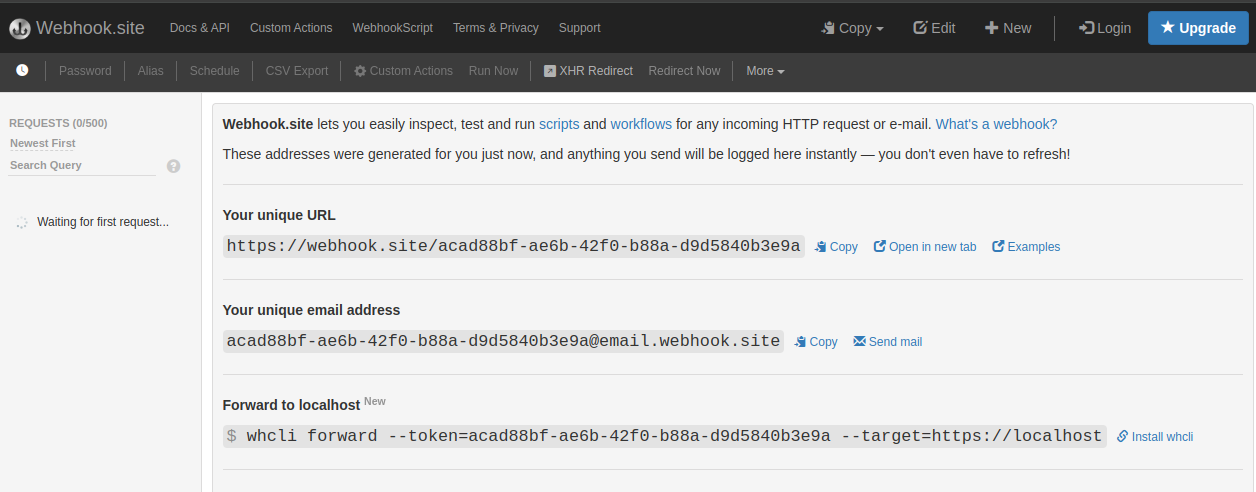

- BACKUP_RETENTION_DAILY, BACKUP_RETENTION_WEEKLY, BACKUP_RETENTION_MONTHLY: Retention periods for daily (in days), weekly (in weeks), and monthly (in months) backups; older backups are deleted during rotation.
- CURL_REQUEST_URL: URL that receives a cURL notification after each successful backup. To create one, go to https://webhook.site/; you will get a unique ID and URL. Copy your unique URL and paste it here.
- DISABLE_CURL_REQUEST: Flag to disable cURL requests while testing.

Date and Frequency Calculation:

Calculate the current day of the month (DAYMONTH) and day of the week (DAYWEEK).

# Get the current day of the month and day of the week
DAYMONTH=$(date +%-d) # %-d drops the leading zero, so "08" and "09" are not parsed as invalid octal numbers
DAYWEEK=$(date +%u)   # 1 = Monday ... 7 = Sunday

Determine the backup frequency (FN) based on the current day: monthly on the 1st of the month, weekly on Sundays, and daily on every other day.

# Determine the backup frequency based on the current day
if [[ $DAYMONTH -eq 1 ]]; then
    FN='monthly'
    echo "Monthly task"
elif [[ $DAYWEEK -eq 7 ]]; then
    FN='weekly'
    echo "Weekly task"
elif [[ $DAYWEEK -lt 7 ]]; then
    FN='daily'
    echo "Daily task"
fi

# Create a timestamp for the backup file name, e.g. weekly-20240114
DATE=$FN-$(date +"%Y%m%d")
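The frequency rule can be checked in isolation. The sketch below is not part of the original script: backup_frequency is a hypothetical helper that takes the day of the month and the ISO day of the week (1 = Monday ... 7 = Sunday) and prints the label the script stores in FN. The 10# prefix forces base-10 arithmetic, so zero-padded days such as 08 and 09 are accepted.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not in the original script): prints the backup frequency
# for a day of the month ($1) and an ISO day of the week ($2, 7 = Sunday).
backup_frequency() {
    local day_of_month=$((10#$1)) # 10# forces base 10, so "08" is not read as octal
    local day_of_week=$((10#$2))

    if (( day_of_month == 1 )); then
        echo "monthly" # The 1st of the month wins, even when it is a Sunday
    elif (( day_of_week == 7 )); then
        echo "weekly"  # Sundays
    else
        echo "daily"   # Every other day
    fi
}

# Usage with today's date
backup_frequency "$(date +%d)" "$(date +%u)"
```

For example, backup_frequency 14 7 (Sunday, 14 January 2024) prints weekly.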

Help Function:

show_help: Displays help information about the available options.

# Function to display help text
function show_help {
    echo "BackupRotation available options are"
    echo
    echo "-s GitHub repository URL to be backed up"
    echo "-b Destination folder for the backups"
    echo "-n Name of the project being backed up"
    echo "-d Number of Daily backups to keep, zero or negative numbers will disable"
    echo "-w Number of Weekly backups to keep, zero or negative numbers will disable"
    echo "-m Number of Monthly backups to keep, zero or negative numbers will disable"
    echo "-h show this help text"
}

Command-Line Options Parsing:

Parses command-line options using

getopts.# Parse command-line options while getopts s:b:n:d:w:m:y:h option; do case "${option}" in s) GITHUB_REPO=${OPTARG} ;; b) BACKUP_DIR=${OPTARG} ;; n) PROJECT_NAME=${OPTARG} ;; d) BACKUP_RETENTION_DAILY=${OPTARG} ;; w) BACKUP_RETENTION_WEEKLY=${OPTARG} ;; m) BACKUP_RETENTION_MONTHLY=${OPTARG} ;; h) show_help exit 0 ;; esac doneOptions include source github url (

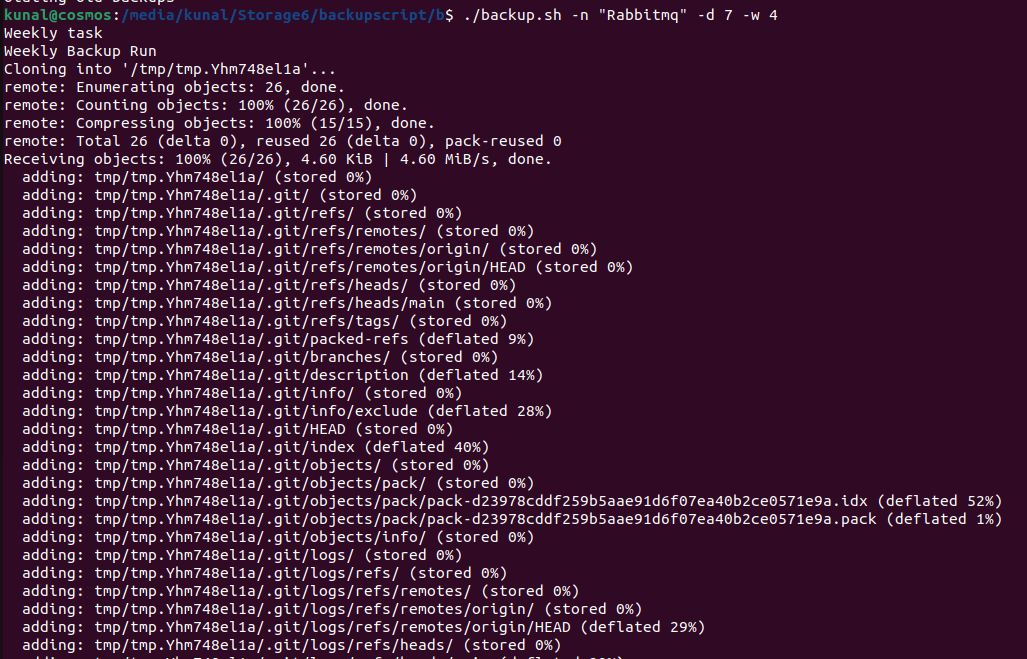

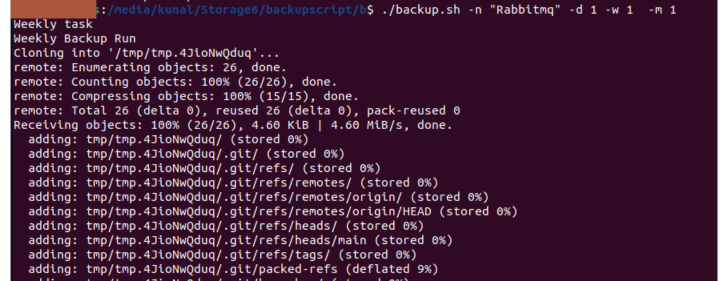

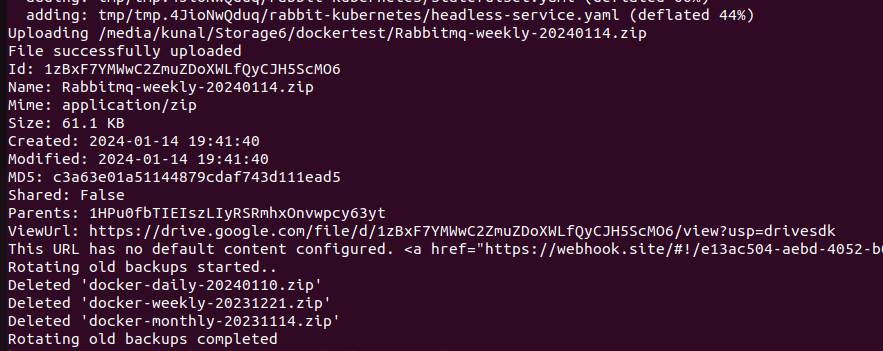

-s), backup directory (-b), project name (-n), daily retention (-d), weekly retention (-w), monthly retention (-m), and help (-h)../backup.sh -n "Rabbitmq" -d 7 -w 4
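As written, the parser accepts any string for -d, -w, and -m; a typo such as -d seven is treated as 0 by the later [[ ... -gt 0 ]] test, which silently disables that backup. Below is a minimal sketch of a stricter parser, assuming the same flags and defaults as the script. The names parse_options and is_integer are hypothetical, and OPTIND is declared local so the function can be called more than once.

```shell
#!/usr/bin/env bash
# Hypothetical variant of the script's option parsing (not the original code):
# same flags, but retention values must be whole numbers.

# Defaults, mirroring the script's configuration variables
GITHUB_REPO="https://github.com/KUNAL-MAURYA1470/Redis_cluster_k8s.git"
BACKUP_DIR="/media/kunal/Storage6/backup"
PROJECT_NAME="project"
BACKUP_RETENTION_DAILY=1
BACKUP_RETENTION_WEEKLY=4
BACKUP_RETENTION_MONTHLY=3

# Succeeds if $1 is a whole number, optionally negative
is_integer() {
    [[ $1 =~ ^-?[0-9]+$ ]]
}

parse_options() {
    local option OPTIND=1 # Local OPTIND resets getopts on every call
    while getopts s:b:n:d:w:m:h option; do
        case "$option" in
            s) GITHUB_REPO=$OPTARG ;;
            b) BACKUP_DIR=$OPTARG ;;
            n) PROJECT_NAME=$OPTARG ;;
            d|w|m)
                if ! is_integer "$OPTARG"; then
                    echo "Error: -$option expects a whole number, got '$OPTARG'" >&2
                    return 1
                fi
                case "$option" in
                    d) BACKUP_RETENTION_DAILY=$OPTARG ;;
                    w) BACKUP_RETENTION_WEEKLY=$OPTARG ;;
                    m) BACKUP_RETENTION_MONTHLY=$OPTARG ;;
                esac ;;
            h) echo "Usage: $0 [-s repo_url] [-b backup_dir] [-n name] [-d days] [-w weeks] [-m months]"
               exit 0 ;;
            *) return 1 ;; # getopts has already printed an error message
        esac
    done
}
```

In the script, the while getopts loop would then become a single call: parse_options "$@" || exit 1.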

Backup Trigger Conditions:

Checks the retention values against the current backup frequency (FN) and runs the backup function only for the frequency that matches today. A zero or negative retention value disables that frequency.

# Run the backup that matches today's frequency, unless its retention is zero or negative
if [[ $FN == daily && $BACKUP_RETENTION_DAILY -gt 0 ]]; then
    echo "Daily Backup Run"
    backup
fi

if [[ $FN == weekly && $BACKUP_RETENTION_WEEKLY -gt 0 ]]; then
    echo "Weekly Backup Run"
    backup
fi

if [[ $FN == monthly && $BACKUP_RETENTION_MONTHLY -gt 0 ]]; then
    echo "Monthly Backup Run"
    backup
fi
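Each trigger check asks two questions: is today this frequency's day, and is its retention positive? A hypothetical should_backup helper (not in the original script) expresses both as one function that returns a shell status. It also treats an empty or non-numeric retention as disabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not in the original script): succeeds only when the
# frequency being checked ($1) matches today's frequency ($2, i.e. FN)
# and its retention ($3) is a positive whole number.
should_backup() {
    local frequency=$1 today_fn=$2 retention=$3
    [[ $frequency == "$today_fn" ]] || return 1 # Not this frequency's day
    [[ $retention =~ ^-?[0-9]+$ ]] || return 1  # Empty or non-numeric: disabled
    (( retention > 0 ))                         # Zero or negative: disabled
}

# Usage, mirroring the script's three checks:
# should_backup daily "$FN" "$BACKUP_RETENTION_DAILY" && backup
```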

Backup Function (backup):

It creates a temporary folder and clones the specified GitHub repository into it.

temp_folder=$(mktemp -d)
git clone "$GITHUB_REPO" "$temp_folder" || { echo "Error: Could not clone GitHub repository."; exit 1; }

Here, I am running the script on a Sunday, so it runs the weekly backup; on other days it runs the daily backup.

Creates a timestamped backup directory within mentioned BACKUP_DIR.

# Create timestamped backup directory timestamp=$(date +%Y%m%d_%H%M%S) mkdir -p "$BACKUP_DIR/$timestamp" || { echo "Error: Could not create backup directory."; exit 1; }Creates a zip archive of the cloned project with name of Projectname-backup_method-date.

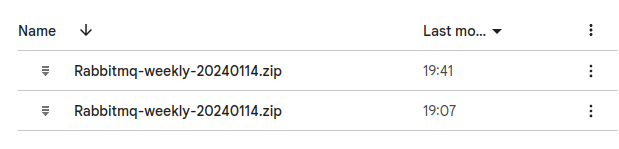

# Create a zip archive of the cloned project zip -r "$BACKUP_DIR/$PROJECT_NAME-$DATE.zip" "$temp_folder" || { echo "Error: Could not create zip archive."; exit 1; }Example:

Uploads the archive to Google Drive using gdrive. The --parent option places "$BACKUP_DIR/$PROJECT_NAME-$DATE.zip" inside the Drive folder identified by $GOOGLE_DRIVE_FOLDER_ID; --recursive is meant for uploading directories, so it is not strictly needed for a single zip file.

gdrive files upload --recursive --parent "$GOOGLE_DRIVE_FOLDER_ID" "$BACKUP_DIR/$PROJECT_NAME-$DATE.zip" || { echo "Error: Could not upload to Google Drive."; exit 1; }

Logs a success message to backup_log.txt in BACKUP_DIR.

Sends a cURL request on success (if enabled).

Cleans up the temporary folder.

Calls the rotatebackup function.

Rotation Function (rotatebackup):

- Deletes local backups older than the specified retention periods for daily, weekly, and monthly backups.
- Identifies old backups on Google Drive based on the retention periods and deletes them.
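The Drive-side rotation rests on three date calculations. The helpers below are a sketch, not the script's own code: backup_date, days_between, months_between, and is_expired are hypothetical names. They assume GNU date (for -d) and file names of the form PROJECT-frequency-YYYYMMDD.zip with no extra hyphens in PROJECT, the same assumption the script's awk -F'[-.]' split makes. Dates are read in UTC so that a daylight-saving change cannot shorten a day.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not in the original script) mirroring the age checks
# in rotatebackup. Assumes GNU date and names like PROJECT-frequency-YYYYMMDD.zip.

# Print the YYYYMMDD part of a backup file name
backup_date() {
    echo "$1" | awk -F'[-.]' '{print $3}'
}

# Whole days from $1 to $2 (both YYYYMMDD); UTC avoids daylight-saving drift
days_between() {
    echo $(( ( $(date -u -d "$2" +%s) - $(date -u -d "$1" +%s) ) / 86400 ))
}

# Calendar months from $1 to $2, ignoring the day of the month like the script does.
# %-m drops the leading zero so months 08 and 09 are not parsed as octal.
months_between() {
    local years=$(( $(date -u -d "$2" +%Y) - $(date -u -d "$1" +%Y) ))
    local months=$(( $(date -u -d "$2" +%-m) - $(date -u -d "$1" +%-m) ))
    echo $(( years * 12 + months ))
}

# Succeed if backup $1 is older than its retention period on day $2.
# $3, $4, $5 are the daily (days), weekly (weeks), and monthly (months) retentions.
is_expired() {
    local name=$1 today=$2 daily=$3 weekly=$4 monthly=$5
    local file_date
    file_date=$(backup_date "$name")
    case "$name" in
        *-daily-*)   (( $(days_between "$file_date" "$today") > daily )) ;;
        *-weekly-*)  (( $(days_between "$file_date" "$today") / 7 > weekly )) ;;
        *-monthly-*) (( $(months_between "$file_date" "$today") > monthly )) ;;
        *)           return 1 ;; # Not a rotated backup
    esac
}
```

With 20240114 as today and every retention set to 1, is_expired succeeds for docker-daily-20240110.zip (4 days), docker-weekly-20231221.zip (24 days, 3 whole weeks), and docker-monthly-20231114.zip (2 months), and fails for a backup dated 20240114.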


Testing Backup and Rotation:

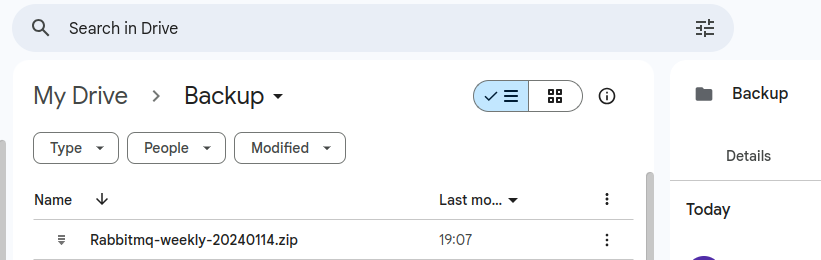

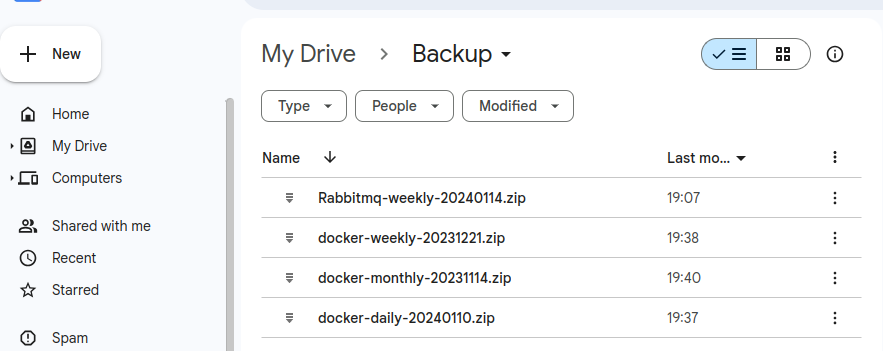

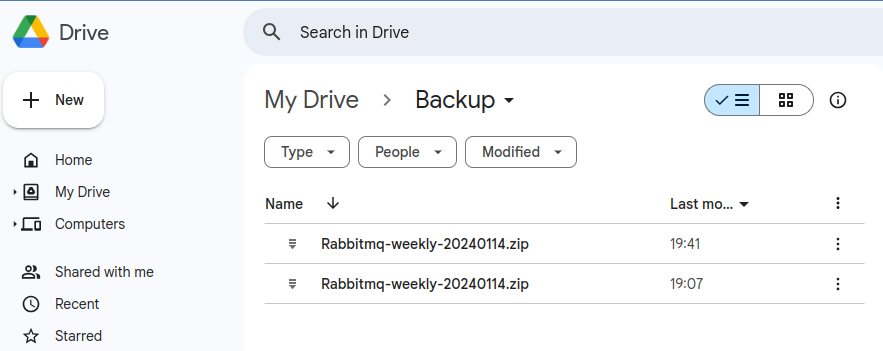

To test, we create three test backups in the Google Drive backup folder: 'docker-daily-20240110.zip', 'docker-weekly-20231221.zip', and 'docker-monthly-20231114.zip'.

Now, run the script with the daily, weekly, and monthly retention periods all set to 1 (for example, ./backup.sh -n "Rabbitmq" -d 1 -w 1 -m 1).

The run happens on Sunday, 14 January 2024, so the script uploads 'Rabbitmq-weekly-20240114.zip' to Drive and deletes 'docker-daily-20240110.zip' (4 days old), 'docker-weekly-20231221.zip' (3 whole weeks old), and 'docker-monthly-20231114.zip' (2 months old), because each is older than its retention period.
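The Drive test needs a Google account, but the local half of the rotation can be checked offline. This sketch assumes local backups are the zip files in BACKUP_DIR named PROJECT-frequency-YYYYMMDD.zip and that their age comes from find -mtime, which counts whole days. The names rotate_local, demo_dir, and fresh are hypothetical, and GNU touch -d is used to back-date the test files.

```shell
#!/usr/bin/env bash
# Hypothetical offline check (not in the original script) of a find-based local
# rotation. Assumes GNU touch (-d) and find (-delete).

# Delete zip backups in $1 older than the daily ($2, days), weekly ($3, weeks),
# and monthly ($4, months of 30 days) retention periods. -mtime counts whole days.
rotate_local() {
    local dir=$1 daily=$2 weekly=$3 monthly=$4
    find "$dir" -maxdepth 1 -type f -name "*-daily-*.zip" -mtime +"$daily" -delete
    find "$dir" -maxdepth 1 -type f -name "*-weekly-*.zip" -mtime +"$((weekly * 7))" -delete
    find "$dir" -maxdepth 1 -type f -name "*-monthly-*.zip" -mtime +"$((monthly * 30))" -delete
}

demo_dir=$(mktemp -d)

# The three test backups, back-dated to the dates in their names
touch -d "2024-01-10" "$demo_dir/docker-daily-20240110.zip"
touch -d "2023-12-21" "$demo_dir/docker-weekly-20231221.zip"
touch -d "2023-11-14" "$demo_dir/docker-monthly-20231114.zip"
# A backup created just now
fresh="Rabbitmq-weekly-$(date +%Y%m%d).zip"
touch "$demo_dir/$fresh"

rotate_local "$demo_dir" 1 1 1

# Only the fresh backup should remain
remaining=$(ls "$demo_dir")
rm -rf "$demo_dir"
echo "$remaining"
```

Because -mtime looks at the file's modification time rather than the date in its name, a backup that is copied or touched later is judged by that newer timestamp; the Drive-side check parses the name instead.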

Thanks for reading.
