Webbie - Create Bots from Your Data Using MindsDB in Minutes!

Amit Wani


Introduction

Hey! In this day and age of AI, we all want an assistant that solves our specific problems. Many Large Language Models can answer generic questions, but there is still a gap for tools that answer questions from your own data. This is where the idea of Webbie comes in.

Webbie (https://webbie.amitwani.dev) is a simple tool for creating an AI bot in minutes from the information you provide. Its chat-based interface is generated using MindsDB in the backend, giving each user a bot tailored to their own data.

Tech Stack

Backend - Powered by NodeJS and Express.

Frontend - Developed using Angular.

Database - Utilizes PostgreSQL.

Tools - Leverages MindsDB for machine learning capabilities.

ML Engine - Employs LlamaIndex and LangChain for robust machine learning.

Deployment - Utilizes Docker, DigitalOcean, and Northflank for efficient deployment.

Installation

Backend - The NodeJS server operates on a DigitalOcean droplet using forever start server.js.

Frontend - The Angular application runs in a Northflank container, employing a Docker image with an Nginx reverse proxy.

Database - PostgreSQL operates on a DigitalOcean droplet as a service.

Tools - MindsDB is hosted on a DigitalOcean droplet using python -m mindsdb.

Components

Data Sources

Webbie supports three data sources (with potential for more in the future):

Text Data - Simple textual information.

CSV File - A CSV file containing row/column-based data.

Website Link - Any website link with pertinent information.

ML Engine

I first used MindsDB's llama_index handler to create an ML engine in MindsDB.

    CREATE ML_ENGINE llamaindex
    FROM llama_index;
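The post does not show how the backend sends SQL to MindsDB. Here is a minimal sketch, assuming the NodeJS server talks to MindsDB's HTTP API on its default local port. The MINDSDB_URL constant and the helper names buildSqlRequest and runQuery are illustrative and not taken from Webbie's code.

```javascript
// Assumption: MindsDB runs on the same machine with its HTTP API on the default port.
const MINDSDB_URL = "http://127.0.0.1:47334";

// Build the fetch() arguments for MindsDB's SQL endpoint (POST /api/sql/query).
function buildSqlRequest(query) {
  return {
    url: `${MINDSDB_URL}/api/sql/query`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ query }),
    },
  };
}

// Send one SQL statement to MindsDB and return the parsed JSON response.
// Requires Node 18+ for the global fetch().
async function runQuery(query) {
  const { url, options } = buildSqlRequest(query);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`MindsDB returned HTTP ${response.status}`);
  }
  return response.json();
}

// One-time setup: register the LlamaIndex engine under the name "llamaindex".
const CREATE_ENGINE_SQL = "CREATE ML_ENGINE llamaindex FROM llama_index;";

// Uncomment to run against a live MindsDB instance:
// runQuery(CREATE_ENGINE_SQL).then(console.log).catch(console.error);
```

Keeping the request construction separate from the network call makes it possible to check the payload without a running MindsDB instance.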

OpenAI

You will have to provide an OpenAI API Key to create the bot. This key is not stored in our database.

Model

After you provide the above details along with the bot name and bot description, our middleware server, built on NodeJS and Express, sends queries to MindsDB to create the appropriate models.

I have used the llama_index engine for this model, together with llama_index's SimpleWebPageReader reader.

The backend then creates the model in MindsDB using a query like this:

    CREATE MODEL abc
    PREDICT answer
    USING
      engine = 'llamaindex',
      index_class = 'GPTVectorStoreIndex',
      reader = 'SimpleWebPageReader',
      source_url_link = 'https://blog.amiwani.dev',
      input_column = 'question',
      openai_api_key = '<api-key>';

Here source_url_link will be changed depending on the data source. Below is an explanation of how it is generated and used.
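As a rough illustration of how the backend might assemble this query from user input, here is a small sketch. The function names are hypothetical, and the quoting rule (doubling single quotes) assumes MindsDB follows the standard SQL convention for string literals.

```javascript
// Wrap a value in single quotes for use as a SQL string literal.
// Assumption: doubling single quotes is accepted by MindsDB's SQL parser.
function sqlString(value) {
  return `'${String(value).replace(/'/g, "''")}'`;
}

// Model names are used unquoted, so only simple identifiers are allowed.
function assertIdentifier(name) {
  if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(name)) {
    throw new Error(`Invalid model name: ${name}`);
  }
  return name;
}

// Build the CREATE MODEL statement shown above for a given source URL.
function buildCreateModelQuery({ modelName, sourceUrl, openaiApiKey }) {
  return [
    `CREATE MODEL ${assertIdentifier(modelName)}`,
    "PREDICT answer",
    "USING",
    "  engine = 'llamaindex',",
    "  index_class = 'GPTVectorStoreIndex',",
    "  reader = 'SimpleWebPageReader',",
    `  source_url_link = ${sqlString(sourceUrl)},`,
    "  input_column = 'question',",
    `  openai_api_key = ${sqlString(openaiApiKey)};`,
  ].join("\n");
}

console.log(
  buildCreateModelQuery({
    modelName: "abc",
    sourceUrl: "https://example.com/docs",
    openaiApiKey: "<api-key>",
  })
);
```

Rejecting unusual model names up front is one way to avoid building a malformed statement from a user-supplied bot name.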

Website Data Model

This was the easiest to do, as the user-provided URL can be directly passed to the model in the source_url_link.

    CREATE MODEL abc
    PREDICT answer
    USING
      engine = 'llamaindex',
      index_class = 'GPTVectorStoreIndex',
      reader = 'SimpleWebPageReader',
      source_url_link = 'https://blog.amiwani.dev',
      input_column = 'question',
      openai_api_key = '<api-key>';

Text Data Model

For text data, since the llama_index handler does not support raw text out of the box, we write the user's input to a text file and store it on the server. The URL of that text file is then provided to the model in source_url_link:

    CREATE MODEL abc
    PREDICT answer
    USING
      engine = 'llamaindex',
      index_class = 'GPTVectorStoreIndex',
      reader = 'SimpleWebPageReader',
      source_url_link = 'http://<server>/api/file/download/<filename>.txt',
      input_column = 'question',
      openai_api_key = '<api-key>';
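The text-to-file step could look roughly like the sketch below. It uses only Node's built-in modules. The upload directory, the base URL, and the function name saveTextSource are assumptions for illustration, while the /api/file/download/ path mirrors the URL pattern in the query above.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");
const crypto = require("node:crypto");

// Hypothetical settings: where uploads are stored and how the server is reached.
const UPLOAD_DIR = path.join(os.tmpdir(), "webbie-uploads");
const SERVER_BASE_URL = "http://localhost:3000";

// Write the user's text to a uniquely named .txt file and return the URL
// that would be passed to the model as source_url_link.
function saveTextSource(text) {
  fs.mkdirSync(UPLOAD_DIR, { recursive: true });
  const filename = `${crypto.randomUUID()}.txt`;
  fs.writeFileSync(path.join(UPLOAD_DIR, filename), text, "utf8");
  return {
    filename,
    sourceUrl: `${SERVER_BASE_URL}/api/file/download/${filename}`,
  };
}
```

The server would still need a download route that serves files from this directory, so that SimpleWebPageReader can fetch the text over HTTP.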

CSV File Data Model

For CSV file data, since the llama_index handler does not support CSV files out of the box, we upload the CSV file to the server. The URL of that CSV file is then provided to the model in source_url_link:

    CREATE MODEL abc
    PREDICT answer
    USING
      engine = 'llamaindex',
      index_class = 'GPTVectorStoreIndex',
      reader = 'SimpleWebPageReader',
      source_url_link = 'http://<server>/api/file/download/<filename>.csv',
      input_column = 'question',
      openai_api_key = '<api-key>';
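Putting the three cases side by side, the choice of source_url_link can be sketched as a single function. The shape of the bot object (dataSource, websiteUrl, uploadedFilename) is hypothetical and only serves the example.

```javascript
// Pick the value for source_url_link based on the bot's data source.
// Website bots use the user's URL directly; text and CSV bots point at
// the file previously stored on the server.
function resolveSourceUrl(bot, serverBaseUrl) {
  switch (bot.dataSource) {
    case "website":
      return bot.websiteUrl;
    case "text":
    case "csv":
      return `${serverBaseUrl}/api/file/download/${encodeURIComponent(bot.uploadedFilename)}`;
    default:
      throw new Error(`Unsupported data source: ${bot.dataSource}`);
  }
}

console.log(resolveSourceUrl({ dataSource: "csv", uploadedFilename: "prices.csv" }, "http://localhost:3000"));
```

Because every source ends up as a URL, the same reader and the same CREATE MODEL template can be reused for all three.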

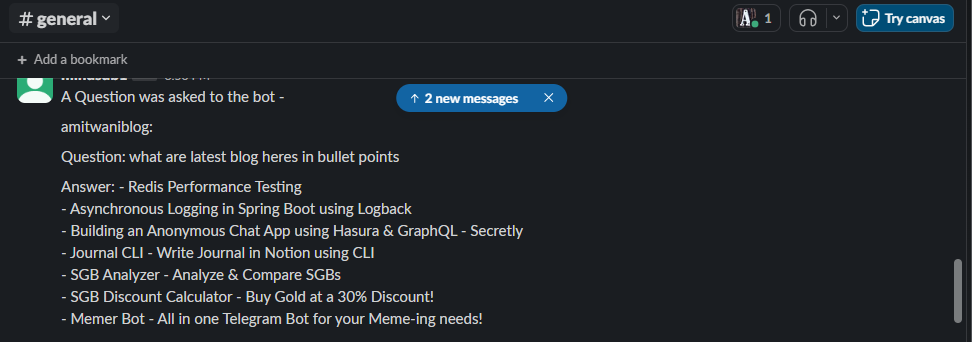

Slack

You can also enable Slack notifications. For every question asked to the bot, you will receive a message in the chosen channel containing the question along with the bot's answer.

You can add your Slack App Token and Slack Channel Name to enable notifications. This app token is not stored in our database.

To obtain a Slack app token, you can follow this guide.

When the Slack notifications are enabled:

Create Slack Database

We will create a Slack database connection in MindsDB as below:

    CREATE DATABASE my_slack
    WITH
      ENGINE = 'slack',
      PARAMETERS = {
        "token": "<slack-token>"
      };
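A possible way to generate this statement in the NodeJS backend is sketched below. The function name is hypothetical. JSON.stringify is used for the PARAMETERS object so that the token is emitted as a properly quoted JSON string.

```javascript
// Build the CREATE DATABASE statement for a Slack connection.
// The database name is used unquoted, so it is restricted to simple identifiers.
function buildCreateSlackDatabaseQuery(databaseName, slackToken) {
  if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(databaseName)) {
    throw new Error(`Invalid database name: ${databaseName}`);
  }
  const parameters = JSON.stringify({ token: slackToken });
  return [
    `CREATE DATABASE ${databaseName}`,
    "WITH",
    "  ENGINE = 'slack',",
    `  PARAMETERS = ${parameters};`,
  ].join("\n");
}

console.log(buildCreateSlackDatabaseQuery("my_slack", "<slack-token>"));
```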

Create JOB

We will create a JOB in MindsDB that runs every minute and sends a notification for every question asked to the bot:

    CREATE JOB job AS (
      INSERT INTO my_slack.channels(channel, text)
      SELECT
        "<channel-name>" as channel,
        message as text
      FROM psql_datasource.transcript
      WHERE createdAt > "{{PREVIOUS_START_DATETIME}}" AND botId = "<bot-id>"
    ) EVERY MINUTE;

This job reads the latest messages for this bot from the transcript table in our PostgreSQL database, filtering by PREVIOUS_START_DATETIME and botId, and then sends them to the appropriate Slack channel.
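Since each bot needs its own job, the backend presumably fills in the channel and bot id per bot. A minimal sketch of that templating follows. The per-bot job name, the validation patterns for channel names and bot ids, and the function name are all assumptions made for this example.

```javascript
// Build a CREATE JOB statement that forwards new transcript rows to Slack.
// Values are validated instead of escaped, so nothing unexpected ends up in the SQL.
function buildSlackJobQuery({ jobName, slackDatabase, channel, botId }) {
  const identifier = /^[A-Za-z_][A-Za-z0-9_]*$/;
  if (!identifier.test(jobName)) throw new Error(`Invalid job name: ${jobName}`);
  if (!identifier.test(slackDatabase)) throw new Error(`Invalid database name: ${slackDatabase}`);
  // Simplified assumption about Slack channel names: lowercase letters, digits, "-" and "_".
  if (!/^[a-z0-9_-]{1,80}$/.test(channel)) throw new Error(`Invalid channel: ${channel}`);
  // Assumption: bot ids contain only letters, digits, "-" and "_".
  if (!/^[A-Za-z0-9_-]+$/.test(botId)) throw new Error(`Invalid bot id: ${botId}`);

  return [
    `CREATE JOB ${jobName} AS (`,
    `  INSERT INTO ${slackDatabase}.channels(channel, text)`,
    "  SELECT",
    `    "${channel}" as channel,`,
    "    message as text",
    "  FROM psql_datasource.transcript",
    `  WHERE createdAt > "{{PREVIOUS_START_DATETIME}}" AND botId = "${botId}"`,
    ") EVERY MINUTE;",
  ].join("\n");
}

console.log(
  buildSlackJobQuery({
    jobName: "slack_job_bot_123",
    slackDatabase: "my_slack",
    channel: "webbie-alerts",
    botId: "bot-123",
  })
);
```

The {{PREVIOUS_START_DATETIME}} placeholder is left untouched because MindsDB substitutes it when the job runs.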

Workflow

The user will log in to Webbie - https://webbie.amitwani.dev

Create a Bot and Model

Frontend

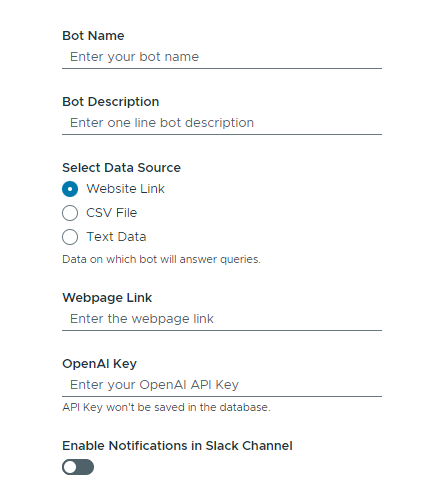

The user will create a bot by providing below details -

Bot Name

Bot Description

Select Data Source - Text/CSV/Website

OpenAI API Key

Enable Slack Notifications (optional), which requires a Slack App Token and a Slack Channel Name

Backend

The bot details will be verified

Files will be uploaded if required

CREATE MODEL using MindsDB as explained above

CREATE SLACK DATABASE using MindsDB as explained above if required

CREATE JOB using MindsDB for Slack Notifications as explained above if required

When the model is successfully generated, a bot link is sent to the client
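The backend steps above depend on the chosen data source and on whether Slack is enabled. One way to make that explicit is a small planning function, sketched here with hypothetical step names and a hypothetical bot object.

```javascript
// Return the ordered list of backend steps needed to create a given bot.
function planBotCreation(bot) {
  const steps = ["verifyDetails"];
  // Text and CSV sources need a file on the server; websites do not.
  if (bot.dataSource === "text" || bot.dataSource === "csv") {
    steps.push("uploadFile");
  }
  steps.push("createModel");
  // Slack resources are only created when notifications are enabled.
  if (bot.slackEnabled) {
    steps.push("createSlackDatabase", "createSlackJob");
  }
  steps.push("sendBotLink");
  return steps;
}

console.log(planBotCreation({ dataSource: "csv", slackEnabled: true }));
```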

Chat with the Bot

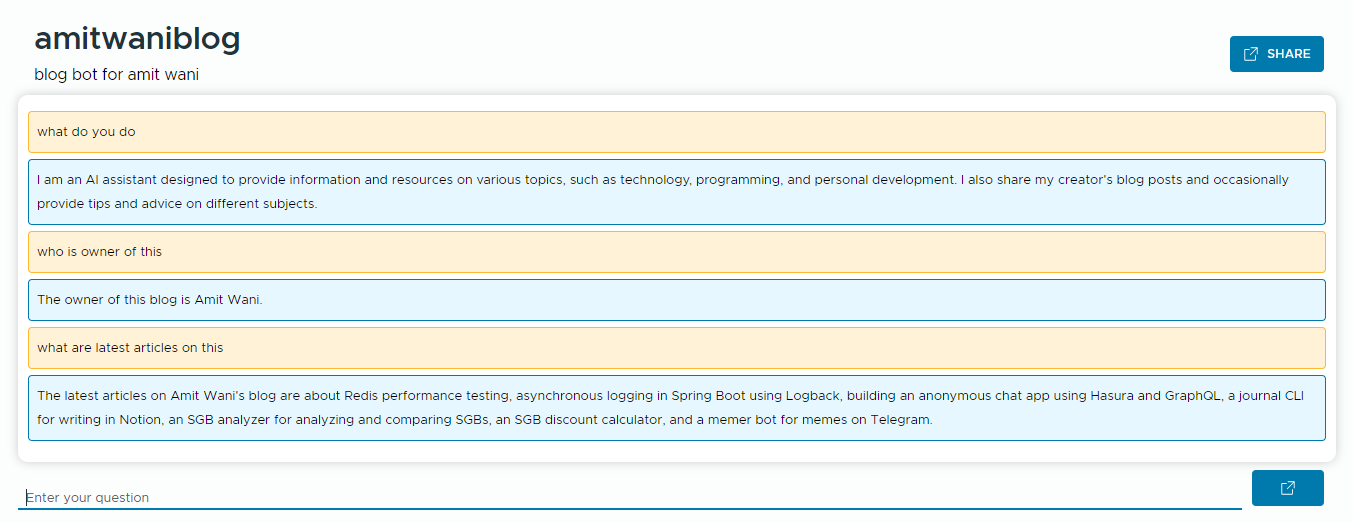

Any user can open the bot's chat interface by going to the link https://webbie.amitwani.dev/bot/<bot-id>

Frontend

Users can interact with the bot.

The query will be sent to the backend and the response will be shown to the user

Backend

The query will be sent to the model for prediction using MindsDB as below:

    SELECT answer FROM <model-name> WHERE question = '<question>';

The answer will be sent to the client.

Questions and answers will be stored in the transcript table.

A Slack notification will also be sent to the channel, if enabled, by the MindsDB job described above.
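The prediction step can be sketched as two small helpers: one that builds the SELECT from the user's question, and one that pulls the answer out of the result. The response shape used here (type, column_names, data) is an assumption about what MindsDB's HTTP SQL endpoint returns, and the helper names are illustrative.

```javascript
// Wrap a value in single quotes, doubling any embedded single quotes.
// Assumption: MindsDB accepts this standard SQL escaping.
function sqlString(value) {
  return `'${String(value).replace(/'/g, "''")}'`;
}

// Build the prediction query for a model and a user question.
function buildPredictionQuery(modelName, question) {
  if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(modelName)) {
    throw new Error(`Invalid model name: ${modelName}`);
  }
  return `SELECT answer FROM ${modelName} WHERE question = ${sqlString(question)};`;
}

// Extract the answer from a table-style result, or null if there is none.
function extractAnswer(result) {
  if (result.type !== "table") {
    throw new Error(result.error_message || "Unexpected response from MindsDB");
  }
  const column = result.column_names.indexOf("answer");
  if (column === -1 || result.data.length === 0) {
    return null;
  }
  return result.data[0][column];
}

console.log(buildPredictionQuery("abc", "What's Webbie?"));
```

Escaping the question matters here because it comes straight from the chat box and may contain quotes.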

Future Scope

Several features can be incorporated in the future:

Support for More Data Sources: Expand support for additional file types such as PDF/XLSX/DOCX.

Database Connection Support: Allow users to add actual database connections for bot data.

User Management System: Implement a user management system for enhanced control.

Notification Integration: Integrate more notification channels like Microsoft Teams, Twilio, etc.

You are invited to collaborate on the project on GitHub.

Conclusion

The development of Webbie provided a valuable learning experience, exploring diverse tech stacks and leveraging MindsDB. This project reflects a journey of exploration, experimentation, and innovation.

This is my submission for the MindsDB x Hashnode Hackathon.

