Serverless Containerization: A Comprehensive Guide to AWS ECS Fargate

Sachin Vasle

Introduction :

Cloud computing is like a magical realm where serverless architecture and containerization come together to create something extraordinary. In this journey, we're diving into AWS ECS Fargate, a tool that simplifies complex technical concepts and brings the magic of serverless container orchestration to life.

Embracing Serverless in AWS ECS Fargate :

1. Stateless Microservices Architecture

Think of serverless in AWS ECS Fargate as a friendly advocate for breaking down big applications into smaller, independent parts called microservices. These microservices work like small, self-sufficient units, chatting with each other through well-defined rules (APIs). It's like having a team of superheroes, each with its own special powers, making your application more agile, scalable, and resilient.

2. Event-Driven Architecture

AWS ECS Fargate loves events! It's like planning a surprise party where things happen based on triggers. With Fargate, your microservices can respond to events, like messages on a queue or changes in the system; for example, an Amazon EventBridge rule can start an ECS task. Imagine a robot that automatically adjusts to changes in its environment. Combined with ECS Service Auto Scaling, that's how your application can scale up or down as needed, using resources efficiently.

A Simple Dive into AWS ECS Fargate :

1. Serverless Container Orchestration

Let's talk about orchestrating containers without getting too technical. AWS ECS Fargate lets developers set up containers for their applications without worrying about the nitty-gritty details of servers. It's like having a chef create a recipe without bothering about the kitchen appliances – everything just works seamlessly.

2. Resource Isolation and Scaling

Picture Fargate as a helpful organizer at a party. Each task (group of containers) has its space and resources, so they don't interfere with each other. And when more guests arrive (increased demand), Fargate ensures there's enough room for everyone by dynamically adjusting the space. It's like adding more tables and chairs when more friends show up.

3. Integrated Networking Made Simple

Networking might sound complex, but with Fargate, it's like connecting LEGO blocks. You decide how your containers talk to each other, and Fargate makes it happen. It ensures secure communication, controls traffic flow, and helps build exciting structures (microservices architectures) without worrying about the details.

4. Task Definitions and Smart Scaling

In Fargate, task definitions are like recipe cards for your containers. They tell Fargate how much CPU, memory, and other ingredients your containers need. Smart scaling is like having a personal assistant at the party. When the snacks are running low (increased demand), it automatically brings in more without bothering you. That's how Fargate helps your application perform well without unnecessary costs.

Implementation: A Detailed Walkthrough

As a pivotal step in our serverless containerization journey, we'll set up an Elastic Container Registry (ECR) to serve as our Docker registry. This registry will house various versions of our application, providing a centralized location for container images. In this example, we'll name our ECR repository "demo-service."

It's worth noting that while we're using ECR in this guide, alternative registry services like Docker Hub or Google Artifact Registry are also viable options should your preferences or requirements differ.

Create an Elastic Container Registry (ECR) Repository :

Navigate to the AWS Management Console:

- Log in to your AWS account and access the AWS Management Console.

Locate the Amazon ECR Service:

- Within the console, find the "Elastic Container Registry (ECR)" service.

Create a New Repository:

- Click on "Repositories" in the ECR dashboard and then select "Create repository."

Configure Repository Settings:

- Provide a meaningful name for your repository, such as "demo-service."

- Optionally, add a description to help identify the purpose of the repository.

Configure Image Scanning (Optional):

- Depending on your security requirements, you can enable image scanning to automatically check container images for vulnerabilities.

Set Permissions (Optional):

- Define repository access policies using AWS Identity and Access Management (IAM) if specific permissions are necessary.

Create Repository:

- Click "Create repository" to finalize the repository creation process.
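The console steps above can also be expressed as an API request. The following is a minimal Python sketch, not a definitive implementation: the helper name `ecr_repository_request` is made up for illustration, and the defaults (scan on push enabled, mutable tags) are assumptions rather than settings this guide prescribes. It only builds the parameters; the comment at the end shows how they could be passed to boto3's ECR client if boto3 is installed and credentials are configured.

```python
import json


def ecr_repository_request(name: str, scan_on_push: bool = True,
                           immutable_tags: bool = False) -> dict:
    """Build the parameters for an ECR CreateRepository call.

    `ecr_repository_request` is a hypothetical helper; the keys mirror the
    parameters accepted by boto3's `ecr` client `create_repository` method.
    """
    return {
        "repositoryName": name,
        # Optional image scanning, as described in the steps above.
        "imageScanningConfiguration": {"scanOnPush": scan_on_push},
        # Immutable tags prevent an existing tag from being overwritten.
        "imageTagMutability": "IMMUTABLE" if immutable_tags else "MUTABLE",
    }


request = ecr_repository_request("demo-service")
print(json.dumps(request, indent=2))

# With boto3 available, the request could be sent like this (not run here):
#   import boto3
#   boto3.client("ecr").create_repository(**request)
```

Keeping the parameters in a plain dictionary makes them easy to review or store in version control before anything is created in your account.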

Repository URL and Authentication :

Upon successful creation, you'll be provided with the repository URL. Additionally, you'll need to authenticate Docker to the ECR registry for secure image pushes and pulls.

Retrieve Repository URI:

- In the ECR console, click on your newly created repository to view details.

- Copy the "Repository URI" for use in Docker commands.

Authenticate Docker to ECR:

Execute the following AWS CLI command to authenticate Docker:

aws ecr get-login-password --region <your-region> | docker login --username AWS --password-stdin <your-account-id>.dkr.ecr.<your-region>.amazonaws.com

Replace <your-region> and <your-account-id> with your AWS region and account ID.

Now, you need to configure your Continuous Integration processes to push images to this repository.

For authentication, you can create a user in AWS's IAM service. If you are using GitHub Actions, you can use Amazon's official actions "aws-actions/configure-aws-credentials" and "aws-actions/amazon-ecr-login".
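Whether you push from your machine or from CI, the image has to be tagged with the full repository URI. As a rough illustration, the sketch below assembles that reference from its parts. The helper names, the account ID `123456789012`, the region `us-east-1`, and the tag `v1` are placeholders, and the host format assumes a standard commercial AWS region.

```python
def ecr_registry_host(account_id: str, region: str) -> str:
    """Return the registry host that `docker login` authenticates against."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str,
                  tag: str = "latest") -> str:
    """Return the full image reference used with `docker tag` and `docker push`."""
    return f"{ecr_registry_host(account_id, region)}/{repository}:{tag}"


# Placeholder values for illustration only.
image = ecr_image_uri("123456789012", "us-east-1", "demo-service", "v1")
print(image)
```

The resulting string is what you would pass to `docker tag` and `docker push`, and later enter in the "image" field of the task definition.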

Configuring the ECS Fargate Cluster :

In serverless containerization, containers are deployed into a cluster. In this guide, we'll create a serverless cluster using Amazon Elastic Container Service (ECS) with Fargate, eliminating the need to manage EC2 instances ourselves.

Choosing a Cluster Type:

- On the ECS dashboard, navigate to "Clusters" and initiate the creation of a new cluster.

Selecting a Cluster Template:

- Opt for the "Networking only" template, as Fargate doesn't rely on traditional EC2 instances.

Configuring Cluster Settings:

- Assign a meaningful name to your cluster, such as "demo-cluster."

- Optionally, apply tags for improved organization and identification.

Defining Network Configuration:

- Tailor networking settings to your requirements, and consider creating a new Virtual Private Cloud (VPC) and subnet for enhanced customization.

Enabling CloudWatch Container Insights (Recommended):

- Strengthen your monitoring capabilities by opting to enable CloudWatch Container Insights. This feature provides valuable metrics and logs for better visibility into containerized applications.

Setting Up Auto Scaling (Optional):

- If scalability is a priority, configure auto scaling to dynamically adjust the number of tasks based on demand.

Creating the Cluster:

- Finalize the process by clicking "Create."

And now our cluster is ready to use.
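For readers who prefer to script this step, here is a hedged Python sketch of the equivalent request. The helper name `fargate_cluster_request` and the tag values are illustrative assumptions; the keys follow the parameters of boto3's ECS `create_cluster` method. The sketch only builds the parameters and does not call AWS.

```python
import json
from typing import Optional


def fargate_cluster_request(name: str, container_insights: bool = True,
                            tags: Optional[dict] = None) -> dict:
    """Build parameters for an ECS CreateCluster call (hypothetical helper)."""
    request = {
        "clusterName": name,
        # Fargate capacity providers; no EC2 instances are registered.
        "capacityProviders": ["FARGATE", "FARGATE_SPOT"],
        "settings": [
            {
                "name": "containerInsights",
                "value": "enabled" if container_insights else "disabled",
            }
        ],
    }
    if tags:
        # ECS expects tags as a list of {"key": ..., "value": ...} pairs.
        request["tags"] = [{"key": k, "value": v} for k, v in sorted(tags.items())]
    return request


request = fargate_cluster_request("demo-cluster", tags={"project": "demo"})
print(json.dumps(request, indent=2))

# With boto3 available, the request could be sent like this (not run here):
#   import boto3
#   boto3.client("ecs").create_cluster(**request)
```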

Crafting a Task Definition :

Task definitions play a central role in running containerized applications within our ECS Fargate cluster. They encapsulate the rules and specifications that govern how our containers behave, so it pays to define them precisely.

In the Task Size section of our task definition, we define the CPU and memory available to the task. It's crucial to strike a balance between meeting application requirements and managing costs. Especially when creating a demo application for testing purposes, it's prudent to keep resource specifications conservative, as these choices directly impact your AWS bill.
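Fargate only accepts certain CPU and memory pairings, so an arbitrary combination may be rejected. The sketch below encodes the pairings AWS documents for tasks up to 4 vCPU (CPU in units where 1024 equals 1 vCPU, memory in MiB). The names `FARGATE_SIZES` and `is_valid_task_size` are made up for this example, larger sizes exist, and the supported values may change, so treat the table as illustrative and check the current AWS documentation.

```python
# CPU units -> allowed memory values in MiB (illustrative, up to 4 vCPU).
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_task_size(cpu_units: int, memory_mib: int) -> bool:
    """Return True if the CPU/memory pair appears in the table above."""
    return memory_mib in FARGATE_SIZES.get(cpu_units, [])


def smallest_memory_for(cpu_units: int) -> int:
    """Return the lowest memory setting listed for a given CPU value."""
    return min(FARGATE_SIZES[cpu_units])


print(is_valid_task_size(256, 512))   # smallest pairing in the table
print(is_valid_task_size(256, 4096))  # too much memory for 0.25 vCPU
```

For a demo application, starting from the smallest pairing and increasing only when the application needs it keeps costs low.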

With our task definition taking shape, the next step is to configure the container settings. Clicking the "Add Container" button opens the container configuration, where we can fine-tune the parameters that affect performance and functionality.

A crucial part of configuring the container is specifying the Docker image that our application runs from. In the first step, we created an Elastic Container Registry (ECR) repository to house our container images. Now we enter its address in the "image" field.

Here we have given the URL of the ECR repository. If you don't want to use a custom image, you can use a public image such as nginx:latest instead.

Another important part of the container configuration is the "Port Mapping" section. Here you specify the port number on which your application listens inside the container. Set it to match your application so that traffic from outside can reach the container.

The "Advanced Settings" section of the task definition offers more detailed configuration. One critical item is the "Health Check" configuration. Pay special attention to it, as it governs how the system assesses the health of your application and responds to potential issues.
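Putting the pieces together, a task definition for Fargate could look roughly like the payload built below. This is a sketch under several assumptions: the helper name `fargate_task_definition` is hypothetical, the application is assumed to listen on port 8080 and expose a `/health` endpoint, the image is assumed to contain `curl`, and the account ID and role ARN are placeholders. The keys follow the parameters of boto3's ECS `register_task_definition` method.

```python
import json


def fargate_task_definition(family: str, image: str, container_port: int,
                            execution_role_arn: str, cpu: str = "256",
                            memory: str = "512",
                            health_path: str = "/health") -> dict:
    """Build a RegisterTaskDefinition payload for a single-container task."""
    health_command = (
        f"curl -f http://localhost:{container_port}{health_path} || exit 1"
    )
    return {
        "family": family,
        "networkMode": "awsvpc",              # required for Fargate tasks
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,                           # task size: CPU units
        "memory": memory,                     # task size: MiB
        "executionRoleArn": execution_role_arn,  # lets ECS pull from ECR
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
                "healthCheck": {
                    "command": ["CMD-SHELL", health_command],
                    "interval": 30,     # seconds between checks
                    "timeout": 5,       # seconds before a check fails
                    "retries": 3,       # failures before marking unhealthy
                    "startPeriod": 10,  # grace period after start
                },
            }
        ],
    }


task_def = fargate_task_definition(
    family="demo-service",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/demo-service:v1",
    container_port=8080,
    execution_role_arn="arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
)
print(json.dumps(task_def, indent=2))
```

If the image does not ship `curl`, the health check command would need to be replaced with a tool that is actually present in the container.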

Creating the Cluster Service :

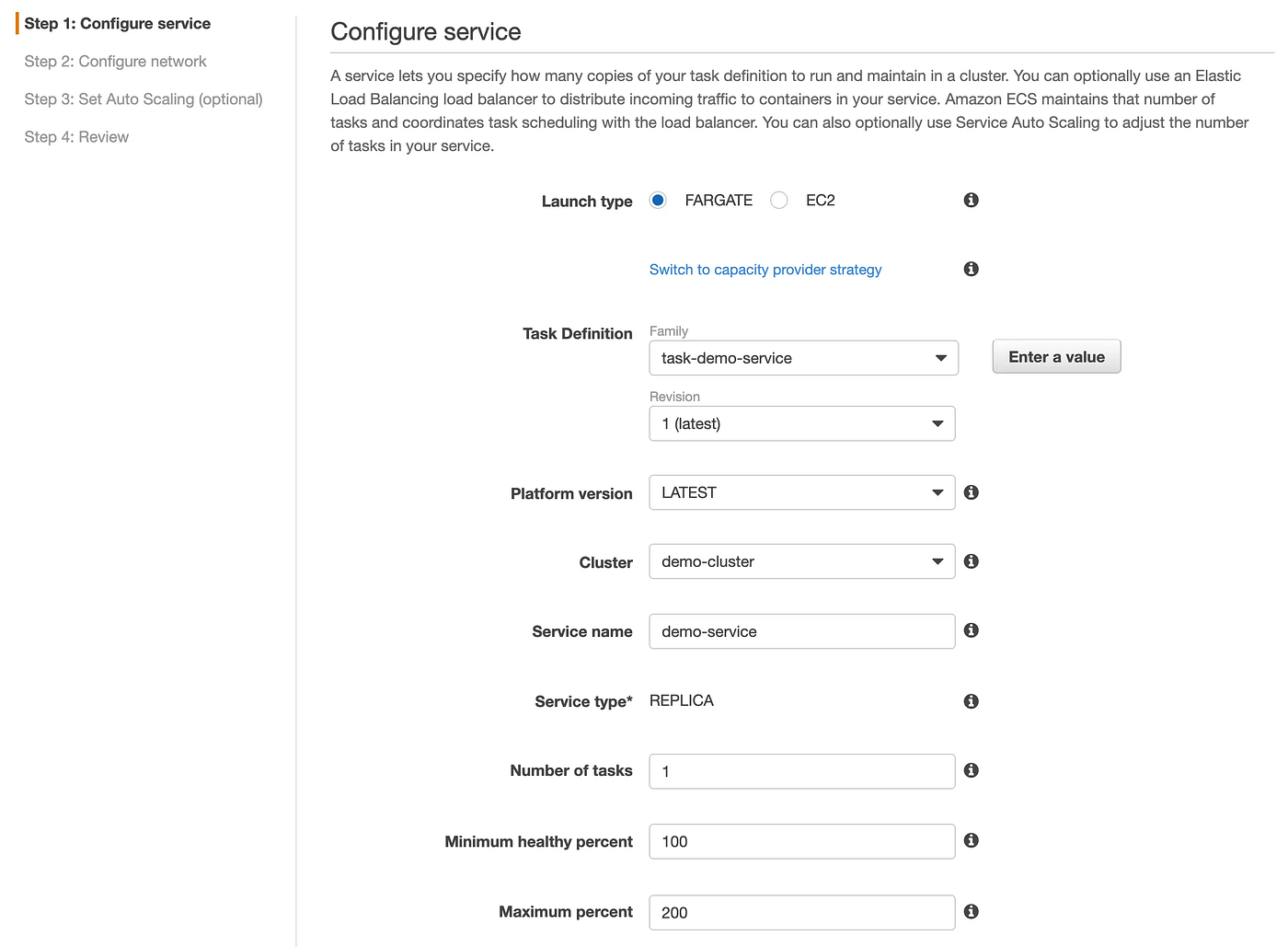

The final step of our ECS Fargate journey is to create a service in the cluster, which makes our application accessible. In this phase, we'll set the launch type to Fargate, link the service to the task definition we created earlier, and place it in our ECS Fargate cluster. Let's walk through the process:

Define the network settings:

In scenarios where a new Virtual Private Cloud (VPC) has been created, linking a load balancer to your container becomes essential. This load balancer serves as the gateway, distributing traffic to the containerized application. When configuring the load balancer, two points demand attention: the port mapping must match the port the container listens on, and the health check URL must point to an endpoint that accurately reflects the application's health.
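The service settings described above can be sketched as a request payload. This example is illustrative only: `fargate_service_request` is a hypothetical helper, and the subnet IDs, security group ID, and target group ARN are placeholders. The keys follow boto3's ECS `create_service` parameters, and the helper rejects a load balancer port that the task definition does not expose, which is the port alignment mentioned above.

```python
import json
from typing import Sequence


def fargate_service_request(cluster: str, service_name: str,
                            task_definition: str, subnets: Sequence[str],
                            security_groups: Sequence[str],
                            target_group_arn: str, container_name: str,
                            container_port: int,
                            task_container_ports: Sequence[int],
                            desired_count: int = 1,
                            assign_public_ip: bool = False) -> dict:
    """Build a CreateService payload for a Fargate service behind a load balancer."""
    if container_port not in task_container_ports:
        raise ValueError(
            f"port {container_port} is not exposed by the task definition"
        )
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                "assignPublicIp": "ENABLED" if assign_public_ip else "DISABLED",
            }
        },
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                "containerName": container_name,
                "containerPort": container_port,
            }
        ],
    }


request = fargate_service_request(
    cluster="demo-cluster",
    service_name="demo-service",
    task_definition="demo-service",
    subnets=["subnet-aaaa1111", "subnet-bbbb2222"],   # placeholder IDs
    security_groups=["sg-cccc3333"],                  # placeholder ID
    target_group_arn="arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/demo/0123456789abcdef",
    container_name="demo-service",
    container_port=8080,
    task_container_ports=[8080],
)
print(json.dumps(request, indent=2))
```

The health check URL itself is configured on the load balancer's target group rather than in this request.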

In the concluding stages of our ECS Fargate deployment, configuring auto-scaling settings becomes an option for optimizing resource utilization and ensuring application responsiveness. However, for applications designed solely for testing purposes, proceeding without configuring auto-scaling is a viable choice. This optional step allows you to tailor the deployment strategy based on your specific needs and resource constraints.
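If you do decide to enable auto scaling, one common approach is a target tracking policy on average CPU utilization. The sketch below builds the two requests involved; it is an assumption-laden example, not the only way to scale a service. The helper names, the 50% CPU target, the task limits, and the cooldown values are illustrative, and the keys follow boto3's `application-autoscaling` client methods `register_scalable_target` and `put_scaling_policy`.

```python
import json


def scalable_target_request(cluster: str, service: str,
                            min_tasks: int, max_tasks: int) -> dict:
    """Parameters for RegisterScalableTarget on an ECS service."""
    if min_tasks > max_tasks:
        raise ValueError("min_tasks must not exceed max_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }


def cpu_target_tracking_policy(cluster: str, service: str,
                               target_cpu_percent: float = 50.0) -> dict:
    """Parameters for PutScalingPolicy using target tracking on CPU."""
    return {
        "PolicyName": f"{service}-cpu-target-tracking",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_cpu_percent,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,   # seconds to wait after adding tasks
            "ScaleInCooldown": 120,   # seconds to wait after removing tasks
        },
    }


print(json.dumps(scalable_target_request("demo-cluster", "demo-service", 1, 4), indent=2))
print(json.dumps(cpu_target_tracking_policy("demo-cluster", "demo-service"), indent=2))
```

With these settings, the number of tasks would be adjusted between 1 and 4 to keep average CPU utilization near the target.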

🎉 Congratulations! Your journey into the realm of AWS Fargate has culminated in the successful deployment of your application. 🚀 By meticulously following these steps, you've gained valuable insights into creating, configuring, and deploying applications within the AWS Fargate environment.

🌟 Thank you for taking the time to explore this comprehensive guide on AWS Fargate! 🚀 I hope you found the information detailed in this article both informative and beneficial for your containerization endeavors. If you have any questions, need clarification on specific topics, or have feedback to share, please don't hesitate to reach out. 👋

Your input is valuable, and I'm here to assist with any additional information or support you may require. Happy containerizing, and best of luck with your AWS Fargate deployments! 🌐💡
