Smooth Packaging: Flowing from Source to PyPi with GitLab Pipelines

biq


Overview

While releasing a tool called piper-whistle, the ultimate goal was to make it available through pip. Ideally, the concepts would be documented in the automation code itself, yet it seemed useful to take a closer look at the architecture, details and quirks. In this article, I'd like to share the setup and inner workings of these most convenient mechanizations.

Branch setup

The concept is based on three main stages the source code can be in, each represented by one of the three branches that structure the project.

Cutting edge - main

Simulation - staging

The real thing - release

Cutting edge

The main branch holds the development state with the most recent changes. When pushed, it triggers a set of test automations, which help detect new issues or regressions.

Simulation

If a set of features is coming together and seems worthy of a new release, loose ends are tied up and the version number in src/piper_whistle/version.py is increased according to the severity of the changes. The change-set is then merged into the staging branch, which, when pushed, triggers a set of automatons simulating the publishing of the final package. After the simulated publishing, a test install is performed to see how the released version would behave.

The real thing

If everything appears fine and dandy, the next step is the proper release. For this, the changes are merged into the release branch. A tag with the name of the current version is created and associated with the latest commit. When pushed, the appropriate pipeline targets get triggered and the release candidate is processed and uploaded to the live python package index.

Preparations

To get started, this setup needs access to several platforms, which are listed below. Please make sure to have them set up and ready, if you’d like to follow along. It also assumes that you wish to synchronize your repository between GitLab and GitHub.

A python package project buildable with setuptools

Source checked into git repository with three branch setup

GitLab account

GitHub account

PyPi account

PyPi staging / test account

CI/CD Variables set up

To prepare the environment, GitLab offers project-specific CI/CD variable configurations. The following list shows the variables as used in the script, each with a short description and an example value. The settings can be reached by browsing your repository and selecting:

Settings -> CI/CD -> Variables (click Expand)

This will allow you to create all the environment variables necessary for this setup.

GITHUB_API_KEY_FILE

Personal access token, which allows for communication with the GitHub repository API.

github_pat_...

GITHUB_DEPLOY_DOMAIN

This will be used to construct the domain for ssh known host configuration.

github.com

GITHUB_DEPLOY_KEY_FILE

Contains the private part of the deploy key registered with GitHub.

-----BEGIN OPENSSH PRIVATE KEY-----

...

-----END OPENSSH PRIVATE KEY-----

GITHUB_REPO_LINK

The ssh capable link to your GitHub repository.

git@github.com:think-biq/piper-whistle.git

GITHUB_USER_EMAIL

This should be set to the user email you want to be used for the push / sync.

your@login.email

GITHUB_USER_NAME

The username you want to be used for the push / sync.

YourGithubUsername

PYPI_CONFIG_FILE

The twine configuration containing the deploy token for the PyPi test server.

[testpypi]
username = __token__
password = pypi-Your-PyPi-Test-Token

PYPI_LIVE_CONFIG_FILE

The twine configuration containing the deploy token for the PyPi live server.

[pypi]
username = __token__
password = pypi-Your-PyPi-Token
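Before relying on the pipeline, it can help to verify that all of the variables listed above are actually present. The following is a minimal sketch and not part of the piper-whistle repository; the function name `missing_variables` is hypothetical, and it assumes the variables are visible as environment variables inside the job container (for file-type variables, GitLab stores the path to a temporary file in the variable).

```python
import os

# Variable names as listed in this article.
REQUIRED_VARIABLES = (
    "GITHUB_API_KEY_FILE",
    "GITHUB_DEPLOY_DOMAIN",
    "GITHUB_DEPLOY_KEY_FILE",
    "GITHUB_REPO_LINK",
    "GITHUB_USER_EMAIL",
    "GITHUB_USER_NAME",
    "PYPI_CONFIG_FILE",
    "PYPI_LIVE_CONFIG_FILE",
)


def missing_variables(environment=None):
    """Return the names of required variables that are unset or empty.

    Hypothetical helper: pass a dict for testing, or nothing to
    inspect the environment of the current process.
    """
    env = os.environ if environment is None else environment
    return [name for name in REQUIRED_VARIABLES if not env.get(name)]


# Example with a partially configured environment.
example = {"GITHUB_DEPLOY_DOMAIN": "github.com"}
print(len(missing_variables(example)), "variables still missing")
```

Run as an early pipeline step, a non-empty result could be turned into a failing exit code, so a misconfigured project fails fast instead of halfway through a release.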

The script

Working with pipelines is done through defining different targets (or jobs), which will then be run at certain stages and under certain conditions. The script file is picked up if you name it .gitlab-ci.yml and place it at the root level of your repository. Each target spins up a new container from an image and then executes a set of instructions. It is possible to define a base image per target, define a global default one, or combine both, leading to cascaded overrides (unless you disable this via the inherit attribute).

Imagination

I created the base container ue-plugin-staging to prepare releases of Unreal Engine plugins and found it useful for other automation purposes (hence the name). You may create your own, or use another one which provides the same set of tools.

image: thinkbiq/ue-plugin-staging:latest

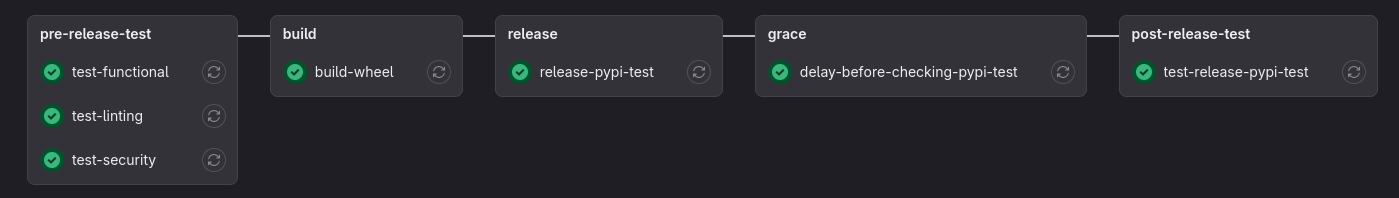

All in stages

Now we define the stages used throughout the pipeline, which are essentially testing, building and releasing, with extra utility layers in between.

stages:
  - pre-release-test
  - build
  - release
  - grace
  - post-release-test
  - finalize

Journey to the testing grounds

The first major layer is testing. It is split into tests that run before any release happens and tests that run after the release has been successfully concluded. Let’s look at the tests running before anything else is started.

Smoke test

First in line of the pipeline is a functional test defined in the testing package of the piper-whistle source. This is what test-functional is responsible for. It checks the functionality of the tool holistically by running the smoke / integration tests implemented through the class CliCommandTests, defined in src/testing/functional.py, using unittest.

python3 -m unittest src/testing/functional.py

The target can be triggered manually on the main branch and runs automatically on the staging branch. It simply installs all necessary pip requirements and invokes the test through the makefile target run-tests, which in turn invokes the unittest runner on functional.py.

test-functional:
  stage: pre-release-test
  rules:
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: true
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: false
  script:
    - make pip-update-all
    - make run-tests

Security audit

Next up is making sure that none of the dependencies used throughout the project brings with it an already identified security issue. The makefile target audit invokes the handy tool pip-audit.

pip-audit --desc
pip-audit --fix --dry-run

With its help, the pipeline target test-security runs a quick scan on the packages installed and looks for known vulnerabilities and proposes fixes (if any).

test-security:
  stage: pre-release-test
  rules:
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: true
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: false
  script:
    - make pip-update-all
    - make audit

It can be triggered manually on the main branch. When automatically triggered on branch staging, this needs to pass without any errors or warnings.

Linting

There are multiple linting libraries and tools out there. For this scenario, I was looking for a reasonable balance between (proper) standards and the ability to configure the process with regard to my customized (arguably foolish) code style. Some may be dismayed by this, which is why I take the liberty of quoting Guido van Rossum here:

A style guide is about consistency. Consistency with this style guide is important. Consistency within a project is more important. Consistency within one module or function is the most important.

Giving more weight to ease of setup and configurability, the choice fell on flake8. It is easy to integrate, since it's also available through pip and lets you configure which standards you want to omit by simply stating them as a list via the --ignore switch. Moving to ruff appears quite smooth, so future updates may do so.

test-linting:
  stage: pre-release-test
  rules:
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      allow_failure: true
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: false
  script:
    - make pip-update-all
    - make lint

Turning the wheel of fortune

The target build-wheel - sole constituent of the build layer - installs all dependencies and resolves the readme markdown template to its release form (using the tmplr tool), since the readme.md file is packaged with the wheel file. This is followed by the generation of a compressed source file release (tar.gz) and building a wheel file (whl) using build and setuptools respectively.

build-wheel:
  stage: build
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: false
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: false
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: false
  script:
    - make pip-update-all
    - make readme-build
    - make release
    - export VERSION_TAG=$(etc/./query-tag-most-relevant-version.sh)
    - echo "VERSION_TAG=${VERSION_TAG}" > pypi.env
    - export WHEEL_FILE_NAME="piper_whistle-${VERSION_TAG}-py3-none-any.whl"
    - echo "WHEEL_FILE_NAME=${WHEEL_FILE_NAME}" >> pypi.env
    - export DEP_URL_BASE="https://pypi.debian.net/piper-whistle"
    - export WHEEL_FILE_URL="${DEP_URL_BASE}/${WHEEL_FILE_NAME}"
    - echo "WHEEL_FILE_URL=${WHEEL_FILE_URL}" >> pypi.env
    - echo "pypi.env:"
    - cat pypi.env
    - echo "Generating release page for ${VERSION_TAG} ..."
    - if ! etc/./query-tag-message.sh ${VERSION_TAG} > release-notes.md; then
        echo "Could not query tag ${VERSION_TAG}. Defaulting to empty.";
        echo "" > release-notes.md;
      fi
    - echo "release-notes.md:"
    - cat release-notes.md
  artifacts:
    paths:
      - build/release
      - release-notes.md
    reports:
      dotenv: pypi.env

Both of those are then exposed as artifacts, alongside some environment variables as well as the release notes, which were obtained by invoking query-tag-message.sh. This shell script queries the git log for the message attached to the current version tag or, if run on a non-tagged commit, leaves it empty. The version name is obtained via query-tag-most-relevant-version.sh, which either takes the name of the tag attached to the current commit, or queries the whistle’s version module. Targets wanting access to these artifacts simply have to specify the target name and indicate access to artifacts through the needs attribute.
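To make the naming scheme behind the dotenv variables easier to follow, here is a small Python sketch that mirrors what the shell lines in build-wheel compute. It is only an illustration under the assumption that the wheel is a pure-Python one (py3-none-any), as the file name in the job suggests; the function names are hypothetical and not part of the repository.

```python
def wheel_file_name(version, package="piper_whistle"):
    """Build the file name of a pure-Python wheel (py3-none-any)."""
    return f"{package}-{version}-py3-none-any.whl"


def wheel_file_url(version, base="https://pypi.debian.net/piper-whistle"):
    """Join the download base URL and the wheel file name."""
    return f"{base}/{wheel_file_name(version)}"


def dotenv_lines(version):
    """Render the three variables in the KEY=VALUE form written to pypi.env."""
    return [
        f"VERSION_TAG={version}",
        f"WHEEL_FILE_NAME={wheel_file_name(version)}",
        f"WHEEL_FILE_URL={wheel_file_url(version)}",
    ]


# Example for the release discussed later in this article.
print("\n".join(dotenv_lines("1.6.213")))
```

Each line follows the KEY=VALUE form expected by the dotenv report, which is how the later release targets get to see VERSION_TAG, WHEEL_FILE_NAME and WHEEL_FILE_URL.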

Playtime Preparation: Setting stage for the package

The PyPi team allows you to use a testing mirror of the live repository at test.pypi.org to rehearse the release of your python package. I'm using twine to communicate with PyPi staging as well as live servers. The upload can be commenced by invoking the script upload-pypi.sh, which looks something like:

twine upload --non-interactive --verbose \
    --config-file "${configpath}" -r ${repo} \
    --skip-existing \
    $*

The format of the config file is defined by distutils. The pipeline script uses separate configuration files for test and live server of PyPi. You may as well put them in one file all together and only select the repository to use with the -r switch.

[testpypi]
username = __token__
password = pypi-ToKeN

[pypi]
username = __token__
password = pypi-ToKeN
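Since the configuration is a plain INI-style file, a combined version can be inspected with Python's standard configparser module. The sketch below only illustrates how one section per repository is selected, similar in spirit to what the -r switch does; it is not how twine itself is implemented, and the helper name `repository_credentials` is made up for this example.

```python
import configparser

# Combined example configuration, as shown above.
EXAMPLE_CONFIG = """
[testpypi]
username = __token__
password = pypi-ToKeN

[pypi]
username = __token__
password = pypi-ToKeN
"""


def repository_credentials(config_text, repository):
    """Return (username, password) of one repository section."""
    # Interpolation is disabled so a '%' inside a token is taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    if not parser.has_section(repository):
        raise KeyError(f"No section [{repository}] in configuration")
    return (
        parser.get(repository, "username"),
        parser.get(repository, "password"),
    )


username, _ = repository_credentials(EXAMPLE_CONFIG, "testpypi")
print(username)  # prints "__token__"
```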

Being pushy

First in line is release-pypi-test, which fetches the artifacts produced by build-wheel and pushes them to the test server using the authentication configuration stored in PYPI_CONFIG_FILE.

release-pypi-test:
  stage: release
  rules:
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: false
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: true
  needs:
    - job: build-wheel
      artifacts: true
  script:
    - python3 -m pip install twine
    - etc/./upload-pypi.sh testpypi "${PYPI_CONFIG_FILE}" build/release/*

Patience is a virtue

Since the PyPi package cache seems to need a few cycles to catch up with the updated index, delay-before-checking-pypi-test sits between the upload and the following test target.

delay-before-checking-pypi-test:
  stage: grace
  rules:
    - if: $CI_COMMIT_REF_NAME == "staging"
  variables:
    DELAY_IN_SECONDS: 13
  script:
    - echo "Sleeping for ${DELAY_IN_SECONDS} seconds ..."
    - sleep ${DELAY_IN_SECONDS}
    - echo "Done."
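A fixed delay is the simplest option. As a possible alternative (not what the pipeline above does), one could poll until the package becomes visible and give up after a few attempts. The sketch below shows this idea with a hypothetical helper `wait_until`; the `check` callable is an assumption and would have to wrap whatever lookup you trust, for example the pip install invocation from the next section.

```python
import time


def wait_until(check, attempts=5, delay_seconds=13.0, sleep=time.sleep):
    """Call check() until it returns True or the attempts are used up.

    Returns the number of attempts that were needed and raises
    TimeoutError if the condition was never met.
    """
    for attempt in range(1, attempts + 1):
        if check():
            return attempt
        # Only wait if another attempt follows.
        if attempt < attempts:
            sleep(delay_seconds)
    raise TimeoutError(f"Condition not met after {attempts} attempts")


# Demonstration with a stand-in check that succeeds on the third call.
calls = []


def fake_check():
    calls.append(1)
    return len(calls) >= 3


print(wait_until(fake_check, delay_seconds=0.0))  # prints 3
```

The trade-off is a slightly more involved target in exchange for not having to guess a delay that is long enough on a slow day.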

Let's go live! (well almost)

python3 -m pip install \
    --trusted-host test.pypi.org --trusted-host test-files.pythonhosted.org \
    --index-url https://test.pypi.org/simple/ \
    --extra-index-url https://pypi.org/simple/ \
    piper_whistle==$(python3 -m src.piper_whistle.version)

After patiently waiting our turn, we check if pip is able to fetch the newly released wheel file via a carefully crafted pip install invocation. The --index-url argument specifies the test server index and the --trusted-host arguments ensure seamless binary downloads from the test servers. It turned out that some dependencies were not available on the test server, which necessitated the --extra-index-url parameter pointing to the index of the live server.

Finally, to ensure the current latest version of the staging branch is fetched from the test server and not the most recent version from the live server, the version module of piper-whistle is invoked to obtain the semantic version name. In the build script, this command is contained within the install-test-pypi.sh shell script for brevity. The target test-release-pypi-test is only available on the staging branch and is automatically triggered.

test-release-pypi-test:
  stage: post-release-test
  rules:
    - if: $CI_COMMIT_REF_NAME == "staging"
      allow_failure: true
  script:
    - python3 -m pip install -U pip
    - export PGK_NAME="piper_whistle==$(python3 -m src.piper_whistle.version)"
    - etc/./install-test-pypi.sh ${PGK_NAME}
    - piper_whistle -h
    - echo "Setting up search index ..."
    - piper_whistle refresh
    - echo "Checking if HU is supported ..."
    - if [ "hu_HU" = "$(piper_whistle guess hung)" ];
      then echo "Found hungarian language support";
      else exit 13;
      fi
    - echo "Checking if model at index 1 is available ..."
    - piper_whistle list -U -l hu_HU -i 1
    - echo "Installing model 1 ..."
    - piper_whistle install hu_HU 1
    - echo "Fetching path of model 1 ..."
    - export HU_VOICE_INFO=$(piper_whistle list -S -l hu_HU -i 1)
    - export VOICE_NAME="$(echo ${HU_VOICE_INFO} | awk '{ print $1 }')"
    - piper_whistle path ${VOICE_NAME}

After installing the package it simply goes through refresh, setup and downloads a voice to test basic functionality.

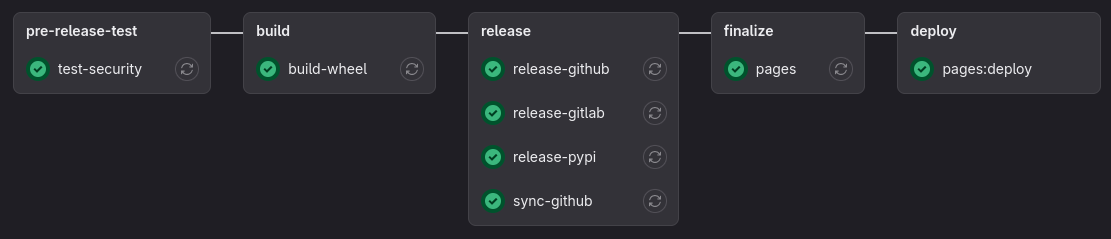

Getting serious

Publishing to the main index

The release-pypi target is virtually the same as the test release target, except that it is triggered on the release branch and uses the live server configuration in PYPI_LIVE_CONFIG_FILE instead.

release-pypi:
  stage: release
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: false
  needs:
    - job: build-wheel
      artifacts: true
  script:
    - echo "Uploading ..."
    - ls -lav build/release
    - python3 -m pip install twine
    - etc/./upload-pypi.sh pypi "${PYPI_LIVE_CONFIG_FILE}" build/release/*

GitLab release on repository site

To allow people to directly download the latest build from piper-whistle's repository, the release-gitlab target makes use of GitLab's release-cli base image, which exposes attributes for the creation of a new release page. It uses GitLab's release tool to process said attributes.

release-gitlab:
  image: registry.gitlab.com/gitlab-org/release-cli:latest
  stage: release
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: true
  needs:
    - job: build-wheel
      artifacts: true
  script:
    - echo Tag name = "${VERSION_TAG}"
    - echo Release name = "piper-whistle ${VERSION_TAG} wheel"
    - echo Release notes = "$(cat release-notes.md)"
    - echo Wheel file link = "${WHEEL_FILE_URL}"
  release:
    name: 'piper-whistle ${VERSION_TAG} wheel'
    description: 'release-notes.md'
    tag_name: '${VERSION_TAG}'
    ref: '$CI_COMMIT_SHA'
    assets:
      links:
        - name: '${WHEEL_FILE_NAME}'
          url: '${WHEEL_FILE_URL}'

Here we make use of the environment variables exposed in the build-wheel target, as well as use the release notes stored in release-notes.md for the description.

The wheel file download link is generated with the help of a front-end to PyPi provided by the Debian Project. It may take several minutes until the front-end can find the package in the cache.

GitHub release

With the help of rota, we can make the new release available on the GitHub-hosted repository via the github-release command. It needs an access token stored in a file, the repository ID in the form of {username}/{project}, the version name and the release message.

release-github:
  stage: release
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: true
  needs:
    - job: build-wheel
      artifacts: true
  script:
    - echo Tag name = "${VERSION_TAG}"
    - echo Release name = "piper-whistle ${VERSION_TAG} wheel"
    - echo Release notes = "$(cat release-notes.md)"
    - echo Wheel file link = "${WHEEL_FILE_URL}"
    - echo "Uploading release "${VERSION_TAG}" to github ..."
    - python3 -m src.tools.rota github-release
      --token-file "${GITHUB_API_KEY_FILE}"
      --attachment "build/release/${WHEEL_FILE_NAME}"
      think-biq/piper-whistle release "${VERSION_TAG}" "$(cat release-notes.md)"

It sends a request to the GitHub API asking for a new release to be created. If that was successful, it retrieves the dedicated upload endpoint for the specific release and uploads the file specified via --attachment (if any).
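To illustrate the first of these two steps, the following sketch prepares (but deliberately does not send) a request against the release creation endpoint of the GitHub REST API. It is not the actual rota implementation; the function name `build_release_request` is hypothetical and the token is the placeholder from the variable list.

```python
import json
import urllib.request


def build_release_request(repository, tag, notes, token):
    """Prepare the request that asks GitHub to create a release.

    The request is only constructed here, never sent.
    """
    payload = {"tag_name": tag, "name": tag, "body": notes}
    return urllib.request.Request(
        url=f"https://api.github.com/repos/{repository}/releases",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )


request = build_release_request(
    "think-biq/piper-whistle", "1.6.213", "Release notes", "github_pat_..."
)
print(request.get_method(), request.full_url)
```

When such a request succeeds, the JSON response contains an upload_url field, which is the release-specific endpoint that attachments are then sent to.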

Another page in the book

To keep the documentation up to date and readily available, the target pages builds a web release of the doc-strings found throughout the source code via doxygen and hosts it through GitLab’s pages infrastructure.

pages:
  stage: finalize
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: true
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: true
  script:
    - echo "Install requirements ..."
    - make pip-update-all
    - echo "Building documentation ..."
    - make docs-build
    - echo "Exposing as public ..."
    - mv docs/published public
    - echo "Result:"
    - ls -lav public
    - echo "Hosting ..."
  artifacts:
    paths:
      - public

readthedocs.org

Additionally, a copy of the most recent documentation, based on the main branch, is maintained on readthedocs.org servers.

When pushing to the main branch, readthedocs will scan the root path of the repository and look for a .readthedocs.yaml configuration file.

version: 2
build:
  os: ubuntu-20.04
  tools:
    python: "3.8"
  commands:
    - echo "Install dependencies ..."
    - make pip-update-all || echo 💥
    - echo "Building documentation using doxygen ..."
    - make docs-build || echo 💥
    - echo "Ensuring html directory ..."
    - mkdir -p "$(realpath ${READTHEDOCS_OUTPUT})/html"
    - echo "Moving page assets ..."
    - mv docs/published/* "$(realpath ${READTHEDOCS_OUTPUT})/html" || echo 🚚💥
    - echo "Per Aspera Ad Astra 🚀"

It’s very convenient to have straightforward support for the static page setup produced by doxygen. We only need to make sure to move the generated artifacts to the right place, which is ${READTHEDOCS_OUTPUT}/html. Since I’ve encountered issues where the path was malformed (i.e. a double /), realpath is used to normalize the path before handing it off to the mv command. The way to the stars seems a bit clearer now.
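The effect of the normalization can be reproduced in Python. This is only an illustration of the double-slash problem: `posixpath.normpath` collapses duplicate separators but, unlike realpath, does not resolve symbolic links. The example path is made up and merely stands in for whatever ${READTHEDOCS_OUTPUT} contains.

```python
import posixpath


def html_output_dir(output_root):
    """Append 'html' to the output root and collapse duplicate slashes."""
    # A trailing slash in output_root yields '//' before normalization.
    return posixpath.normpath(output_root + "/html")


# Hypothetical output root with a trailing slash.
print(html_output_dir("/tmp/build/_readthedocs/"))  # prints /tmp/build/_readthedocs/html
```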

The Harmonious Hustle: GitLab CI/CD's Dance with GitHub for Seamless Repository Sync

To increase the reach of piper-whistle and the chance of someone contributing or reporting issues, keeping the project in sync with GitHub seems like a reasonable ambition. Let's look at how we may do so with a pipeline target called sync.

sync:
  stage: finalize
  rules:
    - if: $CI_COMMIT_REF_NAME == "release"
      allow_failure: true
    - if: $CI_COMMIT_REF_NAME == $CI_DEFAULT_BRANCH
      when: manual
      allow_failure: true
  script:
    - apt-get update -y
    - apt-get install -yqqf openssh-client sshpass --fix-missing
    - eval $(ssh-agent -s)
    - cat "${GITHUB_DEPLOY_KEY_FILE}" | tr -d '\r' | ssh-add - > /dev/null
    - mkdir -p ~/.ssh
    - chmod 700 ~/.ssh
    - ssh-keyscan ${GITHUB_DEPLOY_DOMAIN} >> ~/.ssh/known_hosts
    - chmod 644 ~/.ssh/known_hosts
    - git config --global user.email "${GITHUB_USER_EMAIL}"
    - git config --global user.name "${GITHUB_USER_NAME}"
    - git fetch --all
    - git checkout staging
    - git checkout release
    - git checkout main
    - git remote rm origin 2> /dev/null
    - git remote add origin ${GITHUB_REPO_LINK}
    - git push --all
    - git push --tags

To achieve this, the setup uses a container-local ssh user setup, leveraging the deploy key infrastructure offered by GitHub. After setting up the key and known_hosts configuration, changing the remote origin entry allows for pushing new commits as well as tags. Additionally, it may be appropriate to only offer this automation on the main branch and set its trigger to manual, so not every commit grinds the pipeline budget.

You could just do this manually, by adding a separate remote for GitHub and pushing directly, but where would the fun be in that?

Extra fun

For some extra spice, the current status of the release pipeline and readthedocs status is shown as badges on the top of the readme.

To obtain a badge for your project's pipeline, only taking into account non-skipped targets:

f"https://gitlab.com/{namespace}/{project}/badges/{branch}/pipeline.svg?ignore_skipped=true"

For a badge showing the most recent release tag, you may use:

f"https://gitlab.com/{namespace}/{project}/-/badges/release.svg"

The readthedocs.org badge may be constructed via:

f"https://readthedocs.org/projects/{project_slug}/badge/?version={version}&style={style}"
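Wrapped into small functions, the three patterns above can be turned into ready-to-paste markdown. This is just a convenience sketch; the function names as well as the namespace, project and slug values in the example are placeholders, not the actual piper-whistle settings.

```python
def pipeline_badge(namespace, project, branch):
    """Pipeline status badge, ignoring skipped targets."""
    return (
        f"https://gitlab.com/{namespace}/{project}"
        f"/badges/{branch}/pipeline.svg?ignore_skipped=true"
    )


def release_badge(namespace, project):
    """Badge showing the most recent release tag."""
    return f"https://gitlab.com/{namespace}/{project}/-/badges/release.svg"


def readthedocs_badge(project_slug, version, style):
    """Documentation build badge served by readthedocs.org."""
    return (
        f"https://readthedocs.org/projects/{project_slug}"
        f"/badge/?version={version}&style={style}"
    )


def markdown_image(alt_text, url):
    """Render a badge URL as a markdown image for the readme."""
    return f"![{alt_text}]({url})"


# Placeholder values, adjust them to your own project.
print(markdown_image("pipeline", pipeline_badge("my-group", "my-project", "release")))
print(markdown_image("release", release_badge("my-group", "my-project")))
print(markdown_image("docs", readthedocs_badge("my-project", "latest", "flat")))
```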

Conclusion

Thank you for reading and I wish you all the best on your automation adventures. To celebrate the mysteries before us, let’s share in spirit with these attributed sayings:

“At last concluded that no creature was more miserable than man, for that all other creatures are content with those bounds that nature set them, only man endeavors to exceed them.” ― Desiderius Erasmus Roterodamus

“I have so many ideas that may perhaps be of some use in time if others more penetrating than I go deeply into them someday and join the beauty of their minds to the labour of mine.” ― Gottfried Wilhelm Leibniz

Encore

So, you are still reading? Let’s do some math and statistics then. Using the following script to walk through the repository and separate the code of the package in piper_whistle from all the rest of the scripts and configuration files, we can calculate the ratio of lines of code to produce to lines of code in production. Something like a build-infrastructure efficiency coefficient, or biec for short.

The script: biec.sh

This approach does not omit comments or whitespace, so keep that in mind.

count-lines-of-code-package () {
    # Counts only source files in main package.
    local files=$(find ./src/piper_whistle -iname "*.py" -type f)
    local count=$(for f in ${files}; do wc -l $f; done \
        | awk '{ print $1 }' \
        | awk '{ s+=$1 } END { printf "%.0f", s }')
    echo ${count}
    return 0
}

count-lines-of-code-tooling () {
    # Collects all source files relevant for tooling, building and configuring.
    local files=$(find . \
        -iname "*.py" -type f \
        -not -path "*/src/piper_whistle/*" \
        -not -path "*/build/lib/*" \
        -o -iname "*.sh" -o -iname "makefile" \
        -o -iname "pip.conf" -o -iname "*.cfg" \
        -o -iname "*.yaml" -o -iname "*.yml")
    local count=$(for f in ${files}; do wc -l $f; done \
        | awk '{ print $1 }' \
        | awk '{ s+=$1 } END { printf "%.0f", s }')
    echo ${count}
    return 0
}

main () {
    # Investigate the codebase and create a ratio of
    # 'lines of code to produce' (tooling) to 'lines of code in production' (package).
    local root_path="${1}"
    local package_lines=0
    local tool_lines=0
    local ratio=0

    pushd "${root_path}" > /dev/null
    package_lines=$(count-lines-of-code-package)
    tool_lines=$(count-lines-of-code-tooling)
    popd > /dev/null

    # Tooling lines divided by package lines.
    ratio=$(echo "${tool_lines} / ${package_lines}.0" | bc -l)
    echo "package: ${package_lines}"
    echo "tooling: ${tool_lines}"
    echo "ratio: ${ratio:0:6}"

    return 0
}

# Pass all arguments on unchanged, even if a path contains spaces.
main "$@"

Hint: Make sure to not place this script in the actual directory of the repository :)

Analyzing

Invoking it via biec.sh ~/Workspace/Remote/piper-whistle on release 1.6.213 yields:

package: 2050
tooling: 4080
ratio: 1.9902

So I have virtually twice as many lines of code supporting the maintenance, testing and publishing as there are in the actual piper-whistle python package. Looking at the files that were taken into account, the heaviest appears to be the doxygen documentation configuration file.

2 ./setup.cfg
70 ./makefile
36 ./etc/query-wheel-url-from-debian.sh
45 ./etc/query-tag-most-relevant-version.sh
12 ./etc/install-test-pypi.sh
20 ./etc/query-tag-message.sh
19 ./etc/upload-pypi.sh
8 ./src/__init__.py
1 ./src/testing/__init__.py
380 ./src/testing/functional.py
0 ./src/tools/__init__.py
217 ./src/tools/tmplr.py
363 ./src/tools/rota.py
19 ./.readthedocs.yaml
20 ./.github/workflows/publish.yml
58 ./setup.py
19 ./docs/makefile
2534 ./docs/docs.cfg
4 ./pip.conf
253 ./.gitlab-ci.yml

It alone is responsible for 2534 LOC (62.1%). When excluding this config, we have a more reasonable result of:

package: 2050
tooling: 1546
ratio: 0.7541

Discussion

So roughly for every 4 lines of package code, there are 3 lines of supporting infrastructure code. I would be so bold as to state that, when scaling the actual program, the infrastructure code does not grow as fast as the code in the package. I would estimate about 10% growth of infrastructure code in relation to the product code, i.e. roughly one additional line of tooling for every ten new lines in the package. Under this assumption, we would reach a biec of 0.5 at about 3352 LOC for the product. As a reference, PyYAML version 6.0.1 has 5890 LOC. Recalculating for that line count would result in a biec of about 0.33. Might be fun to test this on other (build-)setups and packages as well.
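The estimate can be written down as a small linear model. The sketch below assumes that tooling grows by a fixed fraction (here 10%) of every line added to the package, starting from the measured values without docs/docs.cfg; the function names are made up for this illustration.

```python
PACKAGE_LOC = 2050  # measured package lines (release 1.6.213)
TOOLING_LOC = 1546  # measured tooling lines without docs/docs.cfg
GROWTH_RATE = 0.10  # assumed tooling lines per added package line


def projected_biec(package_loc):
    """Project the tooling-to-package ratio for a given package size."""
    tooling = TOOLING_LOC + GROWTH_RATE * (package_loc - PACKAGE_LOC)
    return tooling / package_loc


def package_loc_for_biec(target):
    """Solve projected_biec(p) == target for the package size p."""
    return (TOOLING_LOC - GROWTH_RATE * PACKAGE_LOC) / (target - GROWTH_RATE)


print(round(projected_biec(PACKAGE_LOC), 4))  # prints 0.7541
print(int(package_loc_for_biec(0.5)))         # prints 3352
print(round(projected_biec(5890), 2))         # prints 0.33
```

Under this model the ratio approaches the growth rate itself (0.1) for very large packages, so a target at or below 0.1 can never be reached.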

Follow-up

If you feel inspired and want to get in touch, you may reach me on twitter / X, discord or LinkedIn.
