Day 5 of #BashBlazeChallenge

Bharat


🚀 Automated Log Analysis Script! 🚀

In the fast-paced world of system administration, efficiently managing and monitoring server logs is crucial for ensuring the health and security of your network. To streamline this process, I took on the #BashBlazeChallenge and developed a Bash script that automates the analysis of daily log files, providing a comprehensive summary report.

The Challenge

As a system administrator, my task was to create a Bash script that takes the path to a log file as a command-line argument and performs the following tasks:

- Error Count: Analyze the log file and count the number of error messages based on a specific keyword (here, "ERROR").
- Critical Events: Identify lines containing the keyword "CRITICAL" and print them along with their line numbers.
- Summary Report: Generate a summary report in a separate text file that includes the date of analysis, the log file name, the total lines processed, the total error count, and a list of critical events with their line numbers.
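The first two tasks come down to how `grep` counts and reports matches. The sketch below runs `grep` on a small sample of four made-up lines in the same `timestamp LEVEL: message` format.

It illustrates three behaviours:

- `-c` counts matching lines, not occurrences.
- A case-insensitive substring match (`-i`) also catches "errors" in an INFO line.
- `-n` prefixes each match with its line number.

The `-w` (whole-word) option is shown only for comparison; the original script does not use it.

```bash
#!/bin/bash

# Hypothetical sample in the same "timestamp LEVEL: message" format
sample=$(mktemp)
trap 'rm -f "$sample"' EXIT

cat > "$sample" <<'EOF'
2024-01-01 10:00:00 INFO: No errors found
2024-01-01 10:00:01 ERROR: Error occurred at iteration 2
2024-01-01 10:00:02 CRITICAL: Critical issue detected at iteration 3
2024-01-01 10:00:03 ERROR: Error occurred at iteration 4
EOF

# -c counts matching lines; -i also matches "errors" in the INFO line
substring_count=$(grep -c -i "ERROR" "$sample")
# -w only matches ERROR as a whole, case-sensitive word
word_count=$(grep -c -w "ERROR" "$sample")

echo "Case-insensitive substring matches: $substring_count"   # 3
echo "Whole-word matches: $word_count"                        # 2

# -n prefixes each matching line with its 1-based line number
grep -n "CRITICAL" "$sample"
```

On real logs, a stricter match on the level keyword (for example with `-w`) may avoid counting informational lines that merely mention errors.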

I also wrote a script that generates a sample log file to test the analyzer with.

Log generation script:

```bash
#!/bin/bash

# Generate 100 sample log entries with random levels for testing the analyzer
log_file="log_file.log"

for ((i=1; i<=100; i++)); do
    timestamp=$(date "+%Y-%m-%d %H:%M:%S")

    # Pick one of four log levels at random (0-3)
    rand=$((RANDOM % 4))

    case $rand in
        0)
            log_level="INFO"
            message="Application is running smoothly"
            ;;
        1)
            log_level="DEBUG"
            message="Debugging information: iteration $i"
            ;;
        2)
            log_level="ERROR"
            message="Error occurred at iteration $i"
            ;;
        3)
            log_level="CRITICAL"
            message="Critical issue detected at iteration $i"
            ;;
    esac

    # Append (>>), so running the script again adds to the existing file
    echo "$timestamp $log_level: $message" >> "$log_file"
done

echo "Generated 100 logs in $log_file"
```
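To sanity-check the generated file, you could count entries per level. The sketch below assumes every line follows the `timestamp LEVEL: message` format above, so the level (plus a trailing colon) is the third whitespace-separated field. The `count_levels` function name is hypothetical.

```bash
#!/bin/bash

# Print how many entries each log level has, sorted by level name.
# Assumes lines look like "YYYY-MM-DD HH:MM:SS LEVEL: message",
# so $3 is the level followed by a colon.
count_levels() {
    awk '{ level = $3; sub(/:$/, "", level); count[level]++ }
         END { for (l in count) print l, count[l] }' "$1" | sort
}

log_file="${1:-log_file.log}"
if [ -f "$log_file" ]; then
    count_levels "$log_file"
else
    echo "Log file not found: $log_file" >&2
fi
```

Because the generator uses `RANDOM % 4`, the four levels should come out roughly even over 100 lines, although the exact counts vary from run to run.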

Log analysis script:

```bash
#!/bin/bash

# Exit with an error if the given log file does not exist
does_file_exists() {
    if [ ! -f "$1" ]; then
        echo "Error: Log file not found: $1" >&2
        exit 1
    fi
}

# Check that exactly one argument (the log file path) was provided
if [ $# -ne 1 ]; then
    echo "Usage: $0 <path_to_logfile> (log file not provided)" >&2
    exit 1
fi

log_file="$1"

# Quote the path so file names containing spaces are handled correctly
does_file_exists "$log_file"

# Count the total number of lines in the log file
total_line_count=$(wc -l < "$log_file")

# Count the lines containing "ERROR" (case-insensitive)
error_line_count=$(grep -c -i "ERROR" "$log_file")

# Collect critical events ("CRITICAL") with their line numbers into an array
# (mapfile requires Bash 4 or later)
mapfile -t critical_events < <(grep -n -i "CRITICAL" "$log_file")

# Print the critical events to the terminal, as the task requires
echo "Critical events with line numbers:"
printf '%s\n' "${critical_events[@]}"

# Generate the summary report in a separate file
summary_report="log_summary_$(date +%Y-%m-%d).txt"

{
    echo "Date of analysis: $(date)"
    echo "Log file: $log_file"
    echo "Total lines processed: $total_line_count"
    echo "Total error count: $error_line_count"
    echo -e "\nCritical events with line numbers:"
    for event in "${critical_events[@]}"; do
        echo "$event"
    done
} > "$summary_report"

echo "Summary report generated: $summary_report"
```
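`mapfile` is a Bash 4 builtin, so the analysis script will fail under Bash 3.2. That is still the version macOS ships as `/bin/bash`. If that matters, a `while read` loop can fill the same array. Here is a minimal sketch; the `collect_critical_events` helper is hypothetical.

```bash
#!/bin/bash

# Bash 3.2-compatible replacement for:
#   mapfile -t critical_events < <(grep -n -i "CRITICAL" "$log_file")
# Fills the global array critical_events with one "line_number:content"
# entry per matching line.
collect_critical_events() {
    critical_events=()
    local line
    while IFS= read -r line; do
        critical_events+=("$line")
    done < <(grep -n -i "CRITICAL" "$1")
}

if [ -f "${1:-}" ]; then
    collect_critical_events "$1"
    printf 'Found %d critical events\n' "${#critical_events[@]}"
    printf '%s\n' "${critical_events[@]}"
fi
```

`IFS=` preserves leading whitespace and `-r` keeps backslashes intact. As a result, each array element matches the corresponding `grep -n` output line exactly, just as with `mapfile -t`.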

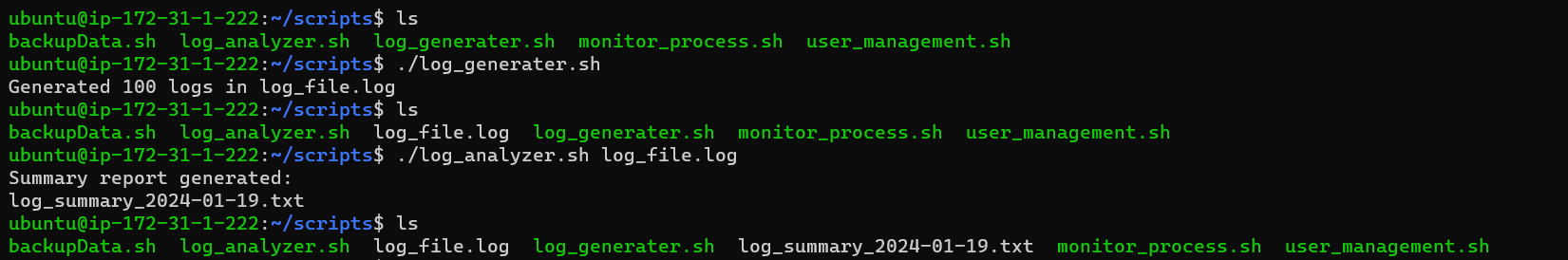

Output:
