Test-Drive a Non-Functional Requirement

Warren Markham


#C #bubblesort #Unity #TDD #conventionalcommits #Makefile #testautomation

Let's try using TDD to implement the client's requirements for bubble sort. The client is Holberton's server-side test system. It reads the code I push to GitHub and attempts to compile it into a test program by linking it with server-side entry point files. If compilation succeeds, it executes the program and compares the output to an expected output.

In this article, I will:

- look at what the functional and non-functional requirements of the client are
- decide whether TDD is suitable
- create a test runner
- test-drive a non-functional source code requirement
- directly update the build system to help satisfy a non-functional compilation requirement

In the next article in the series, I will test-drive the functional requirements.

Functional and Non-Functional Requirements

The bubble sort algorithm must be able to interface with Holberton's test system. The test system will call it in a test file through the interface void bubble_sort(int *array, size_t size).

After compiling a test file (such as the one shown below), Holberton's test system executes the binary and compares its captured output to the expected output. If the example test file looks like a normal C program to you, that is because it is. The actual validation of the output does not occur inside the test file. The test system validates the output with shell scripts.

Example test file

Example test file output

Functional Requirements

I can develop an initial understanding of the functional requirements by looking at the example test file and outputs (see above) provided by Holberton:

- bubble_sort orders an array in ascending order
- bubble_sort modifies the array in place
- bubble_sort prints the state of the array after each swap

This is not necessarily a finalised or complete list. The discovery of new requirements is a certainty. But the list is a useful starting point from which to plan and test-drive the bubble sort feature.

Non-Functional Requirements

The non-functional requirements are listed on the task's project page and I summarise them below:

- define bubble_sort(int * array, size_t size) in 0-bubble_sort.c
- use the void bubble_sort(int * array, size_t size) prototype in sort.h
- sort.h should be include guarded
- ensure 0-bubble_sort.c compiles with -Wall, -Wextra, -Werror and -pedantic

Decide whether TDD is possible

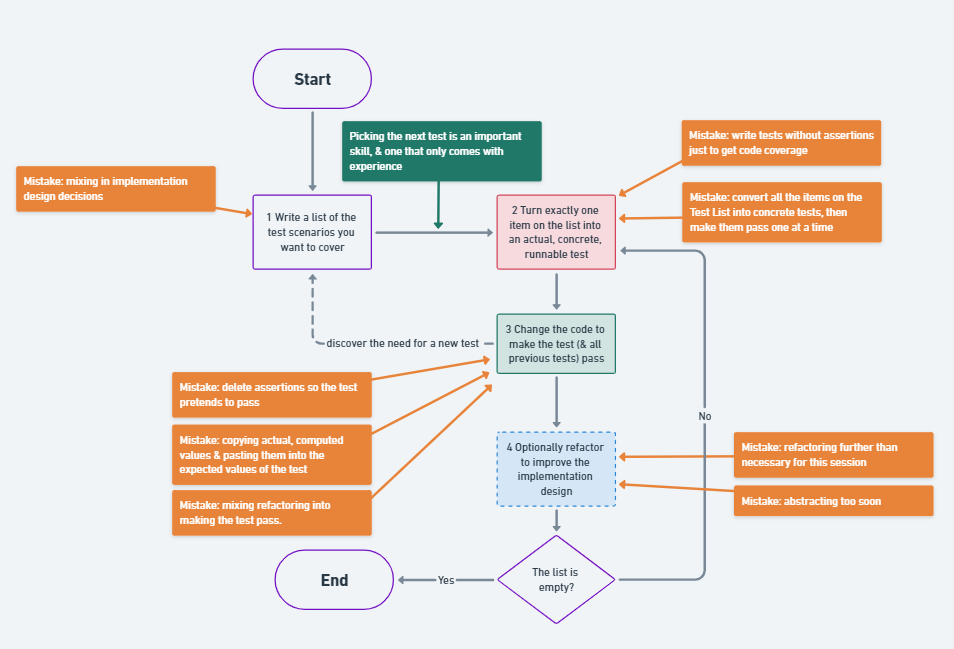

For a project to be amenable to TDD, there are four prerequisites that must be met:

- predictable inputs and outputs
- knowable test cases
- micro-to-macro implication
- sufficiency of test quality

Let's pose these prerequisites as questions of the bubble sort task:

1. Can I predict the outputs of a function that a) bubble sorts an array in-place and b) prints a representation of the array after each swap operation?

Yes. I know how the sort should behave and it is not computationally difficult to calculate what state the array should be in after each swap operation. The system is not a simulation.

2. Do I know enough important test cases for a bubble-sort-while-printing function to get started writing tests for them, and can I reasonably discover the remainder as I go?

Yes. I know the main behaviour of the algorithm. It is okay for details, such as how Holberton wants me to handle NULL pointers, to be revealed in time. Importantly, I also know how to get that information when I need it: the Holberton checker will contain additional test files from which I can infer requirements.

3. Can I use micro-tests of the bubble-sort-while-printing function to establish macro-results?

Yes. The system is simple and emergent properties will not dominate.

4. Can the tests I write to test the system stay within the acceptable zone of Kent Beck's test desiderata?

This is where the biggest question mark is. Given the behaviour I need to test and the Unity framework I am using, I want to know:

- will the test setup be sufficiently simple?
- will the tests be deterministic?
- will the tests run fast enough?
- will the test code stay decoupled from the structure of the system?

I haven't used Unity before. Let's reason about its suitability.

Why Unity shouldn't be a problem

I expect that almost all of the above question marks will shake out in favour of TDD being possible with Unity. Why? Unity is a lightweight unit test framework for embedded systems that its authors:

- use for TDD themselves; and
- recommend running against simulated hardware

Therefore, it is unlikely that Unity will be inherently slow, non-deterministic or tightly coupled to the systems it tests.

Why Unity might be a problem

But my use case is slightly different from that of the framework's authors: I am testing non-embedded software whereas they work in the embedded space. If I have needs that are specific to non-embedded programming, the Unity framework might not have the kinds of quality-of-life features I would want.

I think the core question is: 'Will figuring out test setup - getting the inputs, outputs and state into the right shape - take too long in Unity?' If I cannot easily capture standard out with Unity, for example, test setup may prove extremely arduous.

But let's proceed and see what happens. If the tooling explodes in my face, it is all just part of the journey and I've learned something.

Create the Test Runner

In the canonical TDD workflow, all tests are run after each code change. This is how regressions are spotted and how you know whether a unit of functionality has been achieved.

When you follow this cycle, a lot of tests will get run a lot of times. If those tests have to be executed manually, TDD becomes impractical. Therefore, I need to automate the tests with a test runner.

Let's set up a test runner for the bubble sort task.

feat(tests): add bubble sort test runner

Point of interest:

- derive boilerplate from a re-usable template

I use the test runner template file, which has the Unity boilerplate I need, to create a test runner file for my bubble sort tests. Then I commit the renamed copy to version control.

$ cp tests/test_runner_template.c tests/test_bubble_sort.c

$ git add tests/test_bubble_sort.c

$ git commit -m 'feat(tests): add bubble sort test runner'

Since I will be running my tests frequently, I also alias a call to the GNU Make command as make_bt (make bubble test).

$ alias make_bt='make TEST_RUNNER=tests/test_bubble_sort.c'

Test-Drive a Non-Functional Requirement

I can now write a test for one of the client's non-functional requirements. The non-functional requirements mostly relate to the compilation needs and assumptions of Holberton's test system; consequently, they can be tested by asserting that file X exists and that it contains text Y.

test(bubble): fail file contains sort function

Points of interest:

- a test should fail for one reason only
- use the easier tool if it is good enough

Requirement addressed: define bubble_sort(int * array, size_t size) in 0-bubble_sort.c

I write the failing test.

Note: I had originally planned to do all my tests in C. However, for non-functional requirements like these, a Bash script is a much simpler option: it easily checks the contents of source, build and header files with grep.
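A minimal sketch of what such a script could look like. The two messages are taken from the output shown in this article; the function name check_contains_bubble_sort, the grep pattern and the scratch-directory demonstration are assumptions for illustration, not the original script.

```shell
#!/usr/bin/env bash
# Sketch of tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh.
# Assumption: the helper name and the pattern are illustrative only.

# check_contains_bubble_sort FILE
# Succeeds when FILE contains something that looks like a bubble_sort signature.
check_contains_bubble_sort() {
    file="$1"
    # -E: extended regex; -q: no output, only the exit status matters.
    # If FILE is missing, grep reports that on stderr and returns non-zero.
    if grep -Eq 'void[[:space:]]+bubble_sort[[:space:]]*\(' "$file"; then
        echo "bubble_sort function found in $file"
    else
        echo "bubble_sort function not found in $file"
        return 1
    fi
}

# Demonstration against a scratch copy, so the sketch runs anywhere.
scratch=$(mktemp -d)
echo 'void bubble_sort(int *array, size_t size);' > "$scratch/0-bubble_sort.c"
check_contains_bubble_sort "$scratch/0-bubble_sort.c"
rm -rf "$scratch"
```

In the script proper, the demonstration would presumably be replaced by a single call on 0-bubble_sort.c, so that the exit status of the script reports the result of the check.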

I run the test to make sure it does in fact fail.

$ chmod u+x tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh

$ ./tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh

grep: 0-bubble_sort.c: No such file or directory

bubble_sort function not found in 0-bubble_sort.c

It fails. I add it to version control.

$ git add tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh

$ git commit -m 'test(bubble): fail file contains sort function'

Note: In the test, I only checked for the existence of the bubble_sort signature in 0-bubble_sort.c. I didn't also check for the existence of a prototype in sort.h, even though these are related concepts and very similar tests. Why didn't I? Tests should be specific. If the test fails, it should fail for one reason only - not one of two possible reasons.

test(bubble): pass file contains sort function

Points of interest:

- fix in increments
- write only enough code to make the test pass
- squash commits that aren't relevant to collaborators

Now I write the code or do the chores to make the failing test pass. I do this incrementally. If I check progress after each increment, I am less likely to be mistaken about the effects of my actions.

I fix the first error:

$ touch 0-bubble_sort.c

$ ./tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh

bubble_sort function not found in 0-bubble_sort.c

$ git add 0-bubble_sort.c

$ git commit -m 'fix(test): add file to satisfy grep'

Then the second error:

$ echo 'void bubble_sort(int *array, size_t size);' > 0-bubble_sort.c

$ ./tests/test_that_BubbleSortFile_contains_BubbleSortFunction.sh

bubble_sort function found in 0-bubble_sort.c

$ git add 0-bubble_sort.c

$ git commit -m 'fix(test): add function to satisfy grep'

The test passes.

Note: 0-bubble_sort.c won't compile at this point. But that isn't the behaviour I am testing so it isn't an issue. I only need to write enough code to make the current test pass.

Since the test passes, I squash the intermediate fix commits into the single canonical commit I want to push to the remote: test(bubble): pass file contains sort function. Why? Collaborators don't need to know about the steps I took to get the test to pass. If there is something important about those steps that should be communicated, I can include that context in the commit body of the single canonical commit.

Note: Squashing is done by interactively rebasing and marking the relevant entries of the git-rebase-todo file while in the editor session. I mark fix(test): add function... with the action squash and fix(test): add file... with the action reword.

$ git rebase -i HEAD~2

build(test): automate file contains sort function

Points of interest:

- test output should be helpful

Since I need my tests to run automatically, I add the test script to the run recipe in my Makefile. This is part of keeping the test code high quality - it is part of the TDD cycle. I also make sure any printed information is well-formatted and clearly messaged.

This is the current output of the bubble sort test runner. It is clear and very usable.

$ make_bt -s

test_bubble_sort.c tests:

-----------------------

0 Tests 0 Failures 0 Ignored

OK

Bash script tests:

-------------------------

bubble_sort function found in 0-bubble_sort.c
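The Makefile recipe itself is not shown here. As a rough sketch, a recipe could delegate to a small helper like the one below. The helper name run_script_tests, the test_that_*.sh naming convention and the tests directory default are assumptions; only the two header lines are copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper that a Makefile run recipe could call.
# Assumes all script tests live in one directory and are named test_that_*.sh.

# run_script_tests DIR
# Runs every matching script in DIR and succeeds only if all of them succeed.
run_script_tests() {
    dir="$1"
    failures=0
    echo "Bash script tests:"
    echo "-------------------------"
    for script in "$dir"/test_that_*.sh; do
        # When nothing matches, the glob stays literal; skip it.
        [ -e "$script" ] || continue
        # Each script prints its own message; only failures are counted here.
        bash "$script" || failures=$((failures + 1))
    done
    # A non-zero status lets make stop when any script test fails.
    [ "$failures" -eq 0 ]
}

run_script_tests "${1:-tests}"
```

Returning a failing status when any script fails matters here: make treats a non-zero exit from a recipe line as an error, so a regression would stop the run instead of scrolling past.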

Directly update the build system

I won't continue writing tests for the remaining non-functional requirements. The process for test-driving them (seeing a search for a pattern in a file fail) would be the same approach used with test_that_BubbleSortFile_contains_BubbleSortFunction.sh.
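For illustration only, here is how one of those remaining checks might be sketched, using the include guard requirement for sort.h. None of this is part of the project: the function name check_include_guard, the messages, the patterns and the guard name SORT_H in the demonstration are all assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of an include guard check for sort.h.
# Assumption: names, messages and patterns are illustrative only.

# check_include_guard FILE
# Succeeds when FILE has "#ifndef NAME", a matching "#define NAME" and an "#endif".
check_include_guard() {
    file="$1"
    # Take the macro name from the first "#ifndef NAME" line, if there is one.
    guard=$(sed -n 's/^#ifndef[[:space:]][[:space:]]*\([A-Za-z_][A-Za-z0-9_]*\).*/\1/p' "$file" 2>/dev/null | head -n 1)
    if [ -n "$guard" ] &&
        grep -Eq "^#define[[:space:]]+${guard}([[:space:]]|\$)" "$file" &&
        grep -q '^#endif' "$file"; then
        echo "include guard found in $file"
    else
        echo "include guard not found in $file"
        return 1
    fi
}

# Demonstration against a scratch header, so the sketch runs anywhere.
scratch=$(mktemp -d)
cat > "$scratch/sort.h" <<'EOF'
#ifndef SORT_H
#define SORT_H
#include <stddef.h>
void bubble_sort(int *array, size_t size);
#endif /* SORT_H */
EOF
check_include_guard "$scratch/sort.h"
rm -rf "$scratch"
```

The check deliberately ignores the prototype: in keeping with the one-reason-to-fail rule, the prototype in sort.h would get its own script.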

build(compile): add required compilation flags

Points of interest:

- tests tell you if there has been a regression

Instead, I will add the compilation flags required by the client to the build recipe directly. This step is part of an important non-functional requirement: ensure that 0-bubble_sort.c compiles with those flags.

However, I want to emphasise here that adding the flags isn't a test-driven decision: there are no failing tests that will pass as a result of including them. By not test-driving the addition, I make my build file more fragile: it isn't backed up by a test. If a flag is removed or added, there will be no test to inform me of that requirement-breaking change.
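A regression guard for the flags could be sketched along the following lines. It is hypothetical and not part of this project: it assumes the flags are written literally in the Makefile, and the function name check_required_flags, the messages and the CFLAGS variable in the demonstration are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a regression check for the required compilation flags.
# Assumption: the flags appear literally in the file that is checked.

# check_required_flags FILE
# Succeeds only when every required flag appears in FILE as a whole word.
check_required_flags() {
    makefile="$1"
    missing=0
    for flag in -Wall -Wextra -Werror -pedantic; do
        # Require whitespace or a line boundary on both sides of the flag.
        if ! grep -Eq -e "(^|[[:space:]])${flag}([[:space:]]|\$)" "$makefile" 2>/dev/null; then
            echo "required flag $flag not found in $makefile"
            missing=1
        fi
    done
    if [ "$missing" -eq 0 ]; then
        echo "required flags found in $makefile"
    fi
    return "$missing"
}

# Demonstration against a scratch Makefile, so the sketch runs anywhere.
scratch=$(mktemp -d)
printf 'CFLAGS = -Wall -Wextra -Werror -pedantic\n' > "$scratch/Makefile"
check_required_flags "$scratch/Makefile"
rm -rf "$scratch"
```

A check like this only inspects the text of the build file. It would catch a removed flag, but it would not prove that the compiler is actually invoked with those flags.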

Next: Functional Requirements

