(Day 30) Task: Kubernetes Architecture

ANSAR SHAIK

Introduction

Welcome back to the 90DaysOfDevOps Challenge! Today, on Day 30, we dive deep into the world of Kubernetes, a cornerstone of modern container orchestration. Let's unravel the intricacies of Kubernetes architecture and explore its significance in the DevOps landscape.

1. What is Kubernetes? (a.k.a. K8s)

Kubernetes, often abbreviated as K8s (pronounced "Kates"; the 8 stands for the eight letters between the "K" and the "s"), is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It provides a framework for running containers across a cluster of machines, handling tasks such as auto-scaling, auto-healing, and rolling deployments. Originally developed by Google, Kubernetes is now a community-driven project maintained by the Cloud Native Computing Foundation (CNCF).

Key Features:

Isolated Containers: Each container runs in its own isolated environment (provided by the container runtime), and per-container resource requests and limits help prevent one application from interfering with another.

Auto Scaling: Automatically adjusts the number of running Pod replicas based on observed metrics such as CPU utilization (via the HorizontalPodAutoscaler), so capacity follows demand.

Auto Healing: Restarts failed containers and replaces Pods that fail or whose node goes down, improving the reliability of applications.

Deployment: Rolls out new versions of containerized applications declaratively, using rolling updates that replace Pods gradually instead of all at once.

History: Kubernetes has its roots in Google's internal system called Borg, which inspired its development.

Orchestration: Decides where containers run across the cluster's nodes and coordinates their lifecycle from creation to deletion.

Health Monitoring: Continuously checks container health with liveness and readiness probes, restarting unhealthy containers and withholding traffic from Pods that are not ready.

Load Balancing: Services distribute incoming network traffic across the Pods behind them to prevent bottlenecks.

Rollback: Keeps a revision history for Deployments, so a problematic update can be rolled back to a previous version (for example with `kubectl rollout undo`).
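To make the auto-scaling feature more concrete: the HorizontalPodAutoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below only illustrates that arithmetic under simplifying assumptions: a single metric, the default tolerance of 0.1, and a plain min/max clamp. `desired_replicas` is a hypothetical helper, not a Kubernetes API, and the real controller also deals with missing metrics and stabilization windows.

```python
import math


# Hypothetical helper illustrating the HPA formula; not part of any Kubernetes library.
def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric).

    Assumption: a single metric such as average CPU utilization in percent.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```

Scaling down works the same way: 10 replicas at 20% CPU against a 60% target would give ceil(10 * 20 / 60) = 4.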

2. Benefits of Using Kubernetes

The adoption of Kubernetes brings several benefits to DevOps practitioners:

Scalability: Kubernetes allows applications to scale horizontally, adding Pod replicas as traffic and demand grow and removing them when demand drops.

Resource Efficiency: Places containers on nodes according to their resource requests and adjusts the number of instances to the workload, reducing idle capacity.

High Availability: Ensures that applications remain available and responsive even in the face of failures.

Portability: Kubernetes provides a consistent environment, allowing applications to run reliably across on-premises, public cloud, and hybrid infrastructure.

Time and Cost Savings: Automates repetitive tasks, reducing the operational overhead and speeding up the development process.

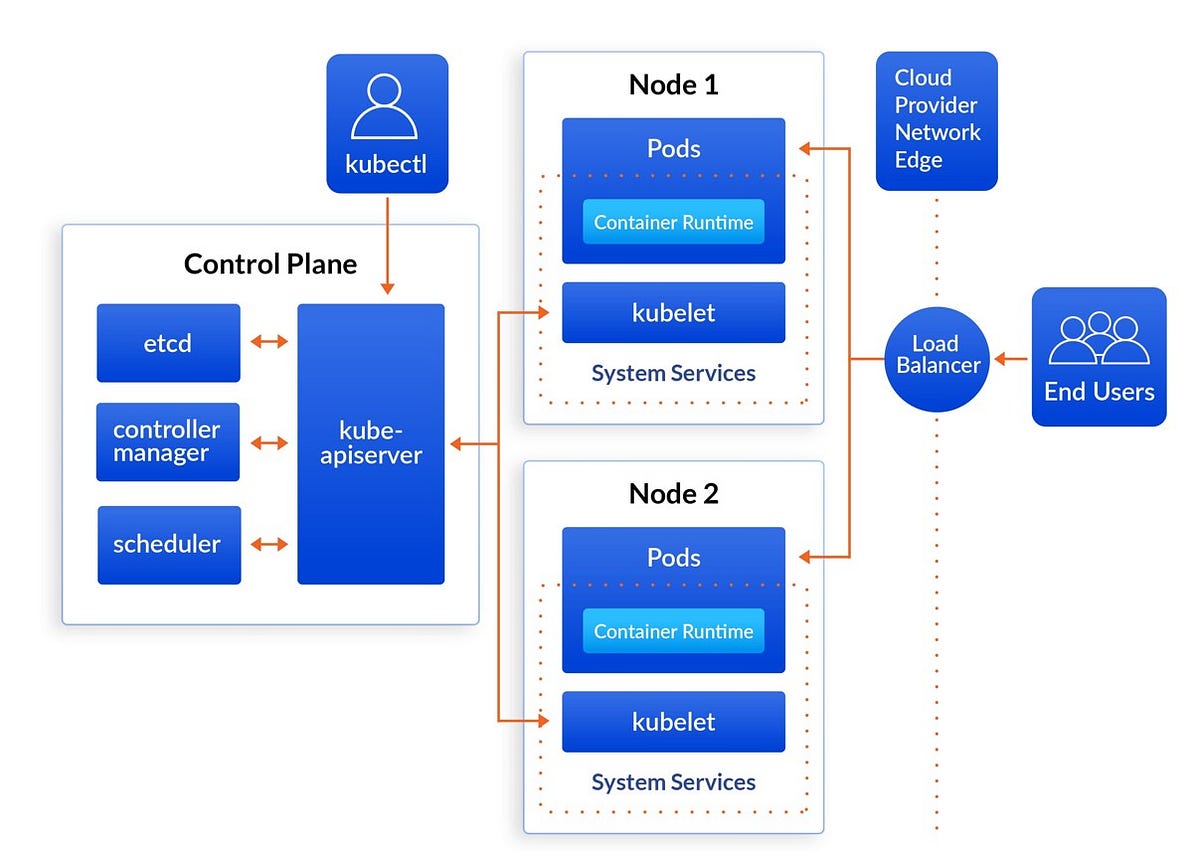

3. Exploring Kubernetes Architecture with etcd

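At the core of the Kubernetes architecture is etcd, a distributed, consistent key-value store that acts as the persistent data store for the state of the entire cluster. Around it, the architecture splits into the Control Plane, which makes decisions about the cluster, and the worker nodes, which run the workloads.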
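As a rough illustration of what "key-value store for the cluster" means: by default the API Server stores most objects under keys built from a `/registry` prefix, the resource type, the namespace and the object name (for example `/registry/pods/default/nginx`). The sketch below fakes etcd with a plain Python dict to show why that layout makes prefix queries such as "all Pods in namespace default" cheap. It is not the etcd client API; `registry_key`, `put` and `list_prefix` are hypothetical helpers, and the stored values are placeholder strings rather than real serialized objects.

```python
from typing import Dict, List

# Hypothetical in-memory stand-in for etcd: a flat key space of strings.
store: Dict[str, str] = {}


def registry_key(resource: str, namespace: str, name: str,
                 prefix: str = "/registry") -> str:
    """Build a key in the default layout, e.g. /registry/pods/default/nginx."""
    return f"{prefix}/{resource}/{namespace}/{name}"


def put(key: str, value: str) -> None:
    store[key] = value


def list_prefix(prefix: str) -> List[str]:
    """Return keys under a prefix, similar in spirit to `etcdctl get --prefix`."""
    return sorted(k for k in store if k.startswith(prefix))


put(registry_key("pods", "default", "nginx"), '{"kind": "Pod"}')
put(registry_key("pods", "default", "redis"), '{"kind": "Pod"}')
put(registry_key("pods", "kube-system", "coredns"), '{"kind": "Pod"}')

print(list_prefix("/registry/pods/default/"))
# ['/registry/pods/default/nginx', '/registry/pods/default/redis']
```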

Control Plane:

The Control Plane is made up of the following components, with etcd as its backing store:

API Server: The central component that serves as the frontend for the Kubernetes control plane. It reads and writes the cluster state in etcd and is the only component that talks to etcd directly.

Controller Manager: Runs the controllers (control loops) that watch the shared state of the cluster and make changes to move the current state toward the desired state. It reads and writes cluster state through the API Server rather than accessing etcd directly.

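Scheduler: Assigns newly created Pods to nodes based on resource requirements and constraints. It watches the API Server for unscheduled Pods and records the chosen node back through the API Server, which persists the decision in etcd.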
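The scheduler's decision is commonly described as two phases: filter out nodes that cannot run the Pod, then score the remaining nodes and pick the best one. The sketch below assumes that only CPU and memory requests matter and uses a simple "most free capacity left" score; the real kube-scheduler runs many plugins (taints, affinity, topology spread and more), and `Node` and `schedule` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical, simplified view of a node's capacity.
@dataclass
class Node:
    name: str
    cpu_free_m: int      # free allocatable CPU, in millicores
    mem_free_mi: int     # free allocatable memory, in MiB
    cpu_total_m: int
    mem_total_mi: int


def schedule(nodes: List[Node], cpu_req_m: int, mem_req_mi: int) -> Optional[str]:
    # Phase 1 (filter): keep only nodes that can fit the Pod's resource requests.
    feasible = [n for n in nodes
                if n.cpu_free_m >= cpu_req_m and n.mem_free_mi >= mem_req_mi]
    if not feasible:
        return None  # no fit: the Pod would stay Pending

    # Phase 2 (score): prefer the node left with the largest share of free
    # CPU and memory after placing the Pod.
    def score(n: Node) -> float:
        cpu_left = (n.cpu_free_m - cpu_req_m) / n.cpu_total_m
        mem_left = (n.mem_free_mi - mem_req_mi) / n.mem_total_mi
        return (cpu_left + mem_left) / 2

    return max(feasible, key=score).name


nodes = [
    Node("node-a", cpu_free_m=500, mem_free_mi=1024, cpu_total_m=4000, mem_total_mi=8192),
    Node("node-b", cpu_free_m=3000, mem_free_mi=6144, cpu_total_m=4000, mem_total_mi=8192),
    Node("node-c", cpu_free_m=100, mem_free_mi=4096, cpu_total_m=2000, mem_total_mi=4096),
]
print(schedule(nodes, cpu_req_m=250, mem_req_mi=512))  # node-b
```

Here node-c is filtered out (not enough CPU), and node-b wins the scoring phase because it keeps far more spare capacity than node-a.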

etcd: The Reliable Data Store

etcd plays a crucial role in Kubernetes architecture by serving as the primary data store for all cluster data. Key attributes of etcd include:

Consistency: etcd uses the Raft consensus algorithm, so all etcd members agree on a single, consistent view of the cluster state.

Availability: Because etcd is distributed across multiple members, the control plane keeps working even if some members fail, as long as a majority (quorum) of them remains healthy.

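Partition Tolerance: etcd handles network partitions in a consistency-first way: the side of a partition that still holds a quorum keeps serving writes, while members cut off in a minority stop accepting writes instead of letting the cluster state diverge.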
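The availability and partition behaviour described above comes down to quorum arithmetic: with n etcd members, a write needs floor(n/2) + 1 of them to agree, so the cluster survives losing the remaining members. The small sketch below (the helper names are hypothetical) also shows why 3 or 5 members are the usual choice: a fourth member raises the quorum without tolerating any extra failure.

```python
# Hypothetical helpers illustrating Raft/etcd quorum arithmetic.
def quorum(members: int) -> int:
    """Majority needed for etcd (Raft) to commit a write."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can be lost while a majority remains."""
    return members - quorum(members)


def side_can_write(side_size: int, members: int) -> bool:
    """During a partition, only a side that still holds a quorum keeps accepting writes."""
    return side_size >= quorum(members)


for n in (1, 3, 4, 5):
    print(n, quorum(n), failures_tolerated(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2

print(side_can_write(3, 5), side_can_write(2, 5))  # True False
```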

Node Components:

Each node in the Kubernetes cluster runs the following components:

Kubelet: The agent on each node that ensures the containers described in a Pod are running and healthy, and reports node and Pod status to the Control Plane through the API Server.

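Container Runtime: The software responsible for running containers, such as containerd or CRI-O. Docker Engine is no longer supported directly since Kubernetes 1.24 removed dockershim, but it can still be used through the cri-dockerd adapter.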

4. Control Plane: The Brain Behind Kubernetes

The Control Plane is responsible for making global decisions about the cluster, such as scheduling and scaling operations. It continuously monitors the state of the cluster through the API Server, making adjustments to maintain the desired state.
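The "desired state" idea is implemented as a control loop: observe the current state, compare it with the desired state, act to close the gap, and repeat. The sketch below mimics one pass of a ReplicaSet-style controller in plain Python. `reconcile` and the `web-N` Pod names are hypothetical, and a real controller watches the API Server instead of receiving a local list.

```python
import itertools
from typing import List

# Hypothetical counter used to generate Pod names for this illustration.
_ids = itertools.count(1)


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """One pass of a ReplicaSet-like control loop; returns the new Pod list."""
    pods = list(running_pods)
    diff = desired_replicas - len(pods)
    if diff > 0:
        # Too few Pods (e.g. one crashed): create replacements.
        pods.extend(f"web-{next(_ids)}" for _ in range(diff))
    elif diff < 0:
        # Too many Pods (e.g. after scaling down): delete the surplus.
        pods = pods[:desired_replicas]
    return pods


pods = reconcile(3, [])   # creates web-1, web-2, web-3
pods.remove("web-2")      # simulate a failed Pod
pods = reconcile(3, pods) # auto-healing: creates web-4
print(pods)               # ['web-1', 'web-3', 'web-4']
pods = reconcile(2, pods) # scale down to 2 replicas
print(pods)               # ['web-1', 'web-3']
```

The same loop covers both auto-healing and scaling: the controller never needs to know why the counts differ, only that they do.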

5. Kubectl vs. Kubelet: Understanding the Difference

kubectl: The command-line tool used for interacting with the Kubernetes API. It sends requests to the API Server, which, in turn, updates the etcd store.

kubelet: The primary node agent running on every node. It watches the API Server for Pods scheduled to its node, starts their containers through the container runtime, and reports their status back to the API Server, which persists it in etcd.
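To make the contrast concrete: kubectl is a client that sends requests, while the kubelet runs a loop on its node. The sketch below assumes the kubelet has already fetched the Pod list from the API Server and shows only its node-local decision: which containers to start or stop so that the node matches the Pods bound to it via `spec.nodeName`. The dictionaries and the `kubelet_sync` name are hypothetical simplifications.

```python
from typing import Dict, List, Set, Tuple


# Hypothetical sketch of a kubelet sync decision; not real kubelet code.
def kubelet_sync(node_name: str,
                 pods_from_api: List[Dict[str, str]],
                 running: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (to_start, to_stop) for one node, given Pods fetched from the API Server."""
    # Only Pods the scheduler bound to this node (spec.nodeName) concern this kubelet.
    desired = {p["name"] for p in pods_from_api if p.get("nodeName") == node_name}
    to_start = sorted(desired - running)  # would be handed to the container runtime
    to_stop = sorted(running - desired)   # Pods deleted or bound elsewhere
    return to_start, to_stop


pods = [
    {"name": "web-1", "nodeName": "node-a"},
    {"name": "web-2", "nodeName": "node-b"},
    {"name": "db-0", "nodeName": "node-a"},
    {"name": "pending-1"},  # not scheduled yet: no nodeName
]
print(kubelet_sync("node-a", pods, running={"web-1", "old-job"}))
# (['db-0'], ['old-job'])
```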

6. The API Server's Role

The API Server acts as the front end for the Kubernetes control plane. It processes RESTful API requests, authenticates and authorizes the caller, runs admission control and validation, and then persists the resulting objects in etcd. All interactions with the cluster, whether through kubectl or other Kubernetes components, go through the API Server.
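Every request the API Server handles maps to a REST path. Core resources such as Pods live under `/api/v1`, while resources in named API groups, such as Deployments in the `apps` group, live under `/apis/<group>/<version>`. The helper below builds those paths for namespaced resources; `api_path` is a hypothetical illustration of the URL scheme, not part of any Kubernetes client library. Running `kubectl get pods -v=6` prints the request URLs kubectl actually sends, if you want to compare.

```python
from typing import Optional


# Hypothetical helper illustrating the Kubernetes REST URL scheme.
def api_path(resource: str, namespace: str, name: Optional[str] = None,
             group: str = "", version: str = "v1") -> str:
    """Build a namespaced Kubernetes REST path.

    The core group ("") is served under /api/<version>; named groups such
    as "apps" are served under /apis/<group>/<version>.
    """
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{base}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


print(api_path("pods", "default"))
# /api/v1/namespaces/default/pods
print(api_path("deployments", "default", "web", group="apps"))
# /apis/apps/v1/namespaces/default/deployments/web
```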

As we conclude Day 30 of the 90DaysOfDevOps Challenge, we've uncovered the essence of Kubernetes, explored its architecture, and highlighted its pivotal role in modern DevOps practices. Join us tomorrow for another exciting leg of the journey as we continue to unravel the mysteries of the DevOps universe!

Written by

ANSAR SHAIK

AWS DevOps Engineer