Multi-Cluster Deployment with GitOps

Sagar Vashnav

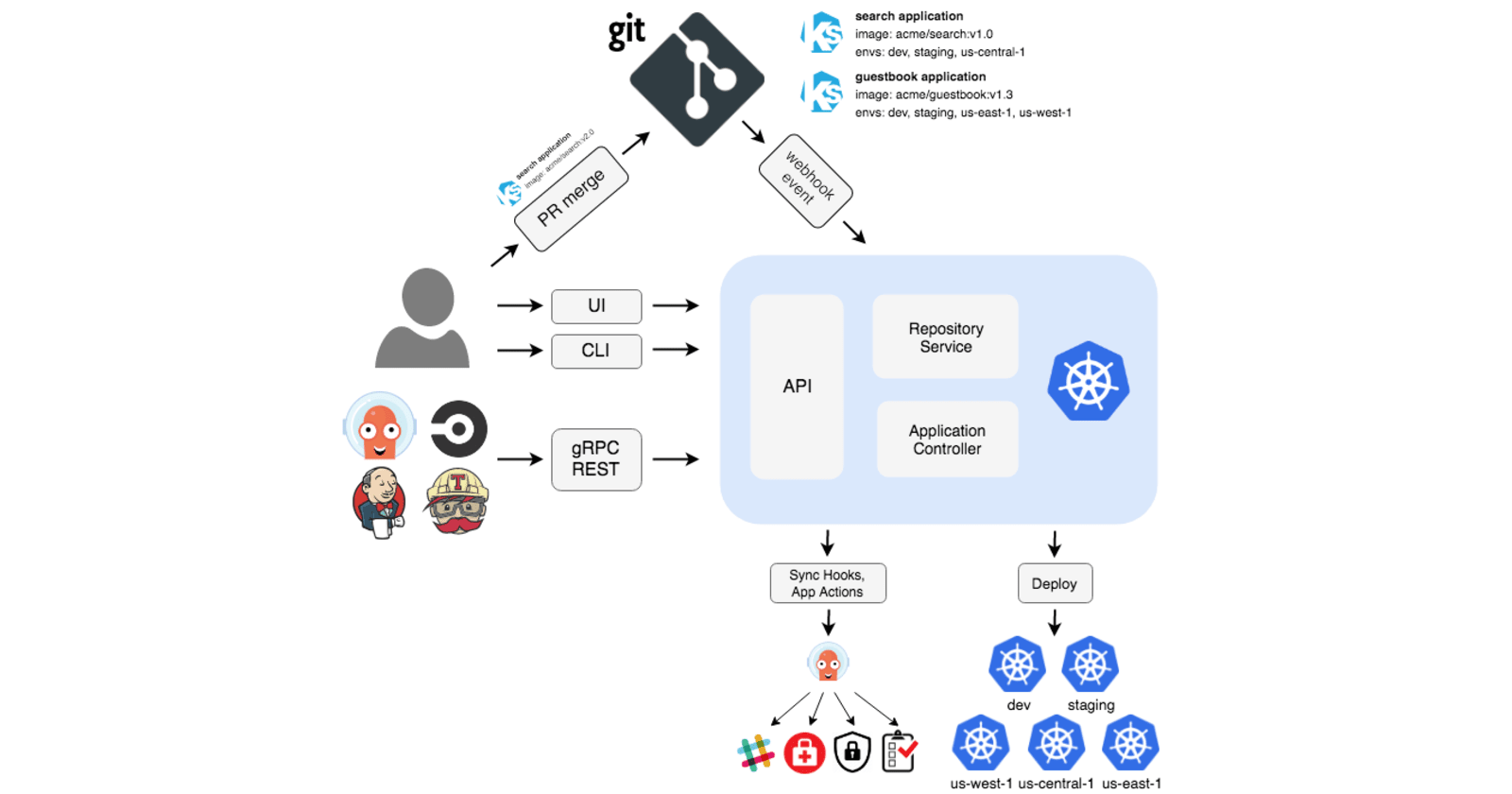

In this demo, we use Argo CD, a GitOps continuous delivery tool for Kubernetes. During deployment, teams commonly run into the following issue:

Changes made in the deployment phase are not versioned. If a developer or DevOps engineer changes something by hand, the change goes straight to the infrastructure without anyone else knowing about it, and the cluster drifts out of sync with the intended configuration.

Solution -

Treat Git as the single source of truth by keeping the configuration and infrastructure files in GitHub. Argo CD compares the cluster against the repository, and with automated sync and self-heal enabled it reverts manual changes automatically.

How does this work?

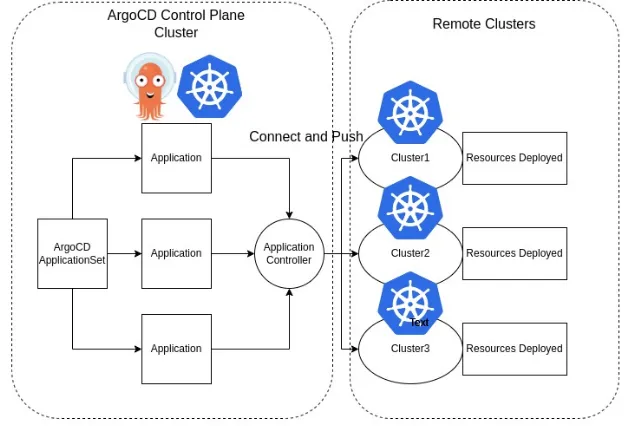

In this project, we use the Argo CD hub-and-spoke model, in which one central (hub) cluster runs Argo CD and manages the deployments on the remote (spoke) clusters.

Prerequisites:

AWS CLI (configure it before creating the clusters)

eksctl

kubectl (the Kubernetes command-line tool)

argocd CLI (used later to log in and register the spoke clusters)

Create Clusters

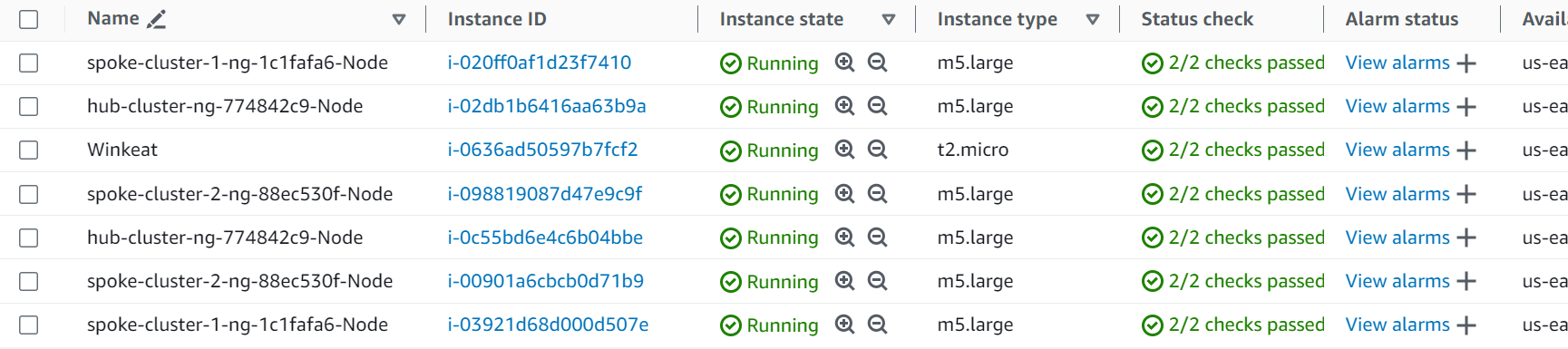

In this project, we create three clusters: one hub cluster and two spoke clusters. Each cluster takes some time to provision, so be patient.

eksctl create cluster --name hub-cluster --region us-east-1

eksctl create cluster --name spoke-cluster-1 --region us-east-1

eksctl create cluster --name spoke-cluster-2 --region us-east-1
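The three commands above differ only in the cluster name, so they can also be driven from a loop. The following is a minimal sketch using the names and region from this article. The `run` helper and the `DRY_RUN` switch are illustrative additions, not part of eksctl, and by default the script only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: create the hub and spoke clusters in a loop.
# DRY_RUN and run() are illustrative helpers, not part of eksctl.
DRY_RUN="${DRY_RUN:-1}"
REGION="us-east-1"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Issue one "eksctl create cluster" call per cluster name.
create_clusters() {
  for name in hub-cluster spoke-cluster-1 spoke-cluster-2; do
    run eksctl create cluster --name "$name" --region "$REGION"
  done
}

create_clusters
```

Run it with `DRY_RUN=0` to actually create the clusters once the printed commands look right.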

Now list the cluster contexts using:

$ kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

Sagar@hub-cluster.us-east-1.eksctl.io hub-cluster.us-east-1.eksctl.io Sagar@hub-cluster.us-east-1.eksctl.io

Sagar@spoke-cluster-1.us-east-1.eksctl.io spoke-cluster-1.us-east-1.eksctl.io Sagar@spoke-cluster-1.us-east-1.eksctl.io

* Sagar@spoke-cluster-2.us-east-1.eksctl.io spoke-cluster-2.us-east-1.eksctl.io Sagar@spoke-cluster-2.us-east-1.eksctl.io

To check the current context:

$ kubectl config current-context

Sagar@hub-cluster.us-east-1.eksctl.io

To switch between clusters:

$ kubectl config use-context Sagar@hub-cluster.us-east-1.eksctl.io

Switched to context "Sagar@hub-cluster.us-east-1.eksctl.io".

Install Argo CD on the Hub Cluster

$ kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argoinstall.yaml

Check the Argo CD pods -

$ kubectl get pods -n argocd

NAME READY STATUS RESTARTS AGE

argocd-application-controller-0 1/1 Running 0 11m

argocd-applicationset-controller-dc5c4c965-2xvkc 1/1 Running 0 12m

argocd-dex-server-9769d6499-zv97w 1/1 Running 0 12m

argocd-notifications-controller-db4f975f8-mjfzv 1/1 Running 0 12m

argocd-redis-b5d6bf5f5-9jmnz 1/1 Running 0 12m

argocd-repo-server-579cdc7849-bvcws 1/1 Running 0 12m

argocd-server-557c4c6dff-hnhzm 1/1 Running 0 11m

Next, we need to make the Argo CD server run in HTTP (insecure) mode. This is controlled through one of its ConfigMaps.

Let's find the ConfigMap and change it -

$ kubectl get cm -n argocd

NAME DATA AGE

argocd-cm 0 13m

argocd-cmd-params-cm 0 13m

argocd-gpg-keys-cm 0 13m

argocd-notifications-cm 0 13m

argocd-rbac-cm 0 13m

argocd-ssh-known-hosts-cm 1 13m

argocd-tls-certs-cm 0 13m

kube-root-ca.crt 1 13m

Edit the argocd-cmd-params-cm ConfigMap and add a data section containing server.insecure: "true" at the end of the file.

kubectl edit configmap argocd-cmd-params-cm -n argocd

### changes in the file

----------------

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1

kind: ConfigMap

metadata:

  annotations:

    kubectl.kubernetes.io/last-applied-configuration: |

      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"argocd-cmd-params-cm","app.kubernetes.io/part-of":"argocd"},"name":"argocd-cmd-params-cm","namespace":"argocd"}}

  creationTimestamp: "2024-01-28T11:33:47Z"

  labels:

    app.kubernetes.io/name: argocd-cmd-params-cm

    app.kubernetes.io/part-of: argocd

  name: argocd-cmd-params-cm

  namespace: argocd

  resourceVersion: "4727"

  uid: 8ba76b5c-8dba-4d9d-954d-ee8688860842

data:

  server.insecure: "true"

------------------
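The same change can be made without opening an editor. The sketch below patches the ConfigMap with `kubectl patch` and then restarts the `argocd-server` deployment, because environment variables sourced from a ConfigMap are only read when the container starts. The `run` helper, the `DRY_RUN` switch, and the function names are illustrative assumptions; by default the commands are only printed (without their shell quoting).

```shell
#!/usr/bin/env bash
# Sketch: enable server.insecure non-interactively.
# DRY_RUN, run(), and the function names are illustrative helpers.
DRY_RUN="${DRY_RUN:-1}"
NAMESPACE="argocd"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Merge-patch body that sets data["server.insecure"] to "true".
insecure_patch() {
  printf '{"data":{"server.insecure":"true"}}'
}

enable_insecure_mode() {
  run kubectl patch configmap argocd-cmd-params-cm -n "$NAMESPACE" \
    --type merge -p "$(insecure_patch)"
  # Restart so the new value is picked up at container start.
  run kubectl rollout restart deployment argocd-server -n "$NAMESPACE"
}

enable_insecure_mode
```

Set `DRY_RUN=0` while the hub cluster is the current context to apply the change.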

Optional:

Check that the argocd-server deployment reads this key -

kubectl edit deploy/argocd-server -n argocd

#### find this in the deployment

- name: ARGOCD_SERVER_INSECURE

  valueFrom:

    configMapKeyRef:

      key: server.insecure

      name: argocd-cmd-params-cm

      optional: true

Accessing the ArgoCd UI

To access the UI, change the type of the argocd-server Service from ClusterIP to NodePort -

kubectl edit svc argocd-server -n argocd

##### ClusterIP to NodePort

  selector:

    app.kubernetes.io/name: argocd-server

  sessionAffinity: None

  type: NodePort
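As an alternative to editing the Service by hand, the type can be changed with a one-line patch. This is a minimal sketch: `nodeport_patch`, `expose_argocd_server`, and the `APPLY` opt-in variable are names invented for the example, and the script only prints the command unless `APPLY=1` is set and kubectl is installed.

```shell
#!/usr/bin/env bash
# Sketch: switch the argocd-server Service to NodePort without an editor.
# APPLY and the function names are illustrative, not kubectl features.

# Patch body that only changes spec.type.
nodeport_patch() {
  printf '{"spec":{"type":"NodePort"}}'
}

expose_argocd_server() {
  kubectl patch svc argocd-server -n argocd -p "$(nodeport_patch)"
}

# Only touch the cluster when the caller opts in and kubectl is available.
if [ "${APPLY:-0}" = "1" ] && command -v kubectl >/dev/null 2>&1; then
  expose_argocd_server
else
  echo "Would run: kubectl patch svc argocd-server -n argocd -p '$(nodeport_patch)'"
fi
```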

You need a password to log in to both the UI and the CLI, so let's find it.

$ kubectl get secrets -n argocd

NAME TYPE DATA AGE

argocd-initial-admin-secret Opaque 1 35m

argocd-notifications-secret Opaque 0 36m

argocd-secret Opaque 5 36m

kubectl edit secret argocd-initial-admin-secret -n argocd

## copy the password

data:

  password: Y3VKRVhwVlZzMEhORk5YeA==

This password is base64-encoded, not encrypted, so you have to decode it to get the actual password.

$ echo Y3VKRVhwVlZzMEhORk5YeA== | base64 --decode

cuJEXpVVs0HNFNXx
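Instead of copying the value out of the editor, the secret can be read and decoded in a single pipeline. The sketch below assumes the secret name and key shown above; `decode_b64` and `admin_password` are helper names made up for this example, and only the offline decode runs by default.

```shell
#!/usr/bin/env bash
# Sketch: read and decode the initial Argo CD admin password.

# Decode a base64 string passed as the first argument.
decode_b64() {
  printf '%s' "$1" | base64 --decode
}

# Read the password straight from the cluster.
# Requires kubectl access to the hub cluster, so it is not called here.
admin_password() {
  kubectl get secret argocd-initial-admin-secret -n argocd \
    -o jsonpath='{.data.password}' | base64 --decode
}

# Offline check using the encoded value from this article.
decode_b64 'Y3VKRVhwVlZzMEhORk5YeA=='
echo
```

With the hub cluster as the current context, calling `admin_password` prints the decoded password directly.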

Before logging in, find the NodePort of the argocd-server Service.

$ kubectl get svc -n argocd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-applicationset-controller ClusterIP 10.100.120.202 <none> 7000/TCP,8080/TCP 30m

argocd-dex-server ClusterIP 10.100.2.58 <none> 5556/TCP,5557/TCP,5558/TCP 30m

argocd-metrics ClusterIP 10.100.46.183 <none> 8082/TCP 30m

argocd-notifications-controller-metrics ClusterIP 10.100.148.168 <none> 9001/TCP 30m

argocd-redis ClusterIP 10.100.75.144 <none> 6379/TCP 30m

argocd-repo-server ClusterIP 10.100.99.219 <none> 8081/TCP,8084/TCP 30m

argocd-server NodePort 10.100.16.211 <none> 80:30220/TCP,443:32586/TCP 30m

argocd-server-metrics ClusterIP 10.100.76.128 <none> 8083/TCP 30m
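In this output, the PORT(S) column of a NodePort Service pairs each Service port with its NodePort in the form port:nodePort/protocol. The sketch below pulls the NodePort for a given Service port out of such a string; `node_port_for` is a helper written for this example. On a live cluster, a jsonpath query like the one in the comment should return the same value directly, though it is worth verifying against your kubectl version.

```shell
#!/usr/bin/env bash
# Sketch: extract a NodePort from a PORT(S) string such as
# "80:30220/TCP,443:32586/TCP".
#
# Live-cluster alternative (assumed, verify before relying on it):
#   kubectl get svc argocd-server -n argocd \
#     -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'

# $1 = PORT(S) string, $2 = Service port to look up.
node_port_for() {
  ports="$1"
  want="$2"
  printf '%s\n' "$ports" | tr ',' '\n' | while IFS= read -r entry; do
    svc_port="${entry%%:*}"   # text before the first ":"
    rest="${entry#*:}"        # text after the first ":"
    # Entries without ":" (ClusterIP ports) leave rest equal to entry.
    if [ "$svc_port" = "$want" ] && [ "$rest" != "$entry" ]; then
      printf '%s\n' "${rest%%/*}"   # drop the "/TCP" suffix
    fi
  done
}

node_port_for "80:30220/TCP,443:32586/TCP" 80
```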

Here, the NodePort for HTTP is 30220. For the IP address, use the public IP of any EC2 worker node in the hub cluster.

Note: allow inbound traffic on this port in the AWS security group of that node.

Link - http://{your hub ec2-ip}:30220

For the CLI, run argocd login {your hub ec2-ip}:30220

Example: argocd login 52.90.15.226:30220

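Add the Clusters to Argo CD Using the CLI

Here, {remote-cluster-context} is the context name of a spoke cluster as listed by kubectl config get-contexts.

argocd cluster add {remote-cluster-context} --server {your hub ec2-ip}:PORT

Example:

argocd cluster add Sagar@spoke-cluster-1.us-east-1.eksctl.io --server 52.90.15.226:30220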

argocd cluster add Sagar@spoke-cluster-2.us-east-1.eksctl.io --server 52.90.15.226:30220

At this stage, the setup of Argo CD and Kubernetes is complete.

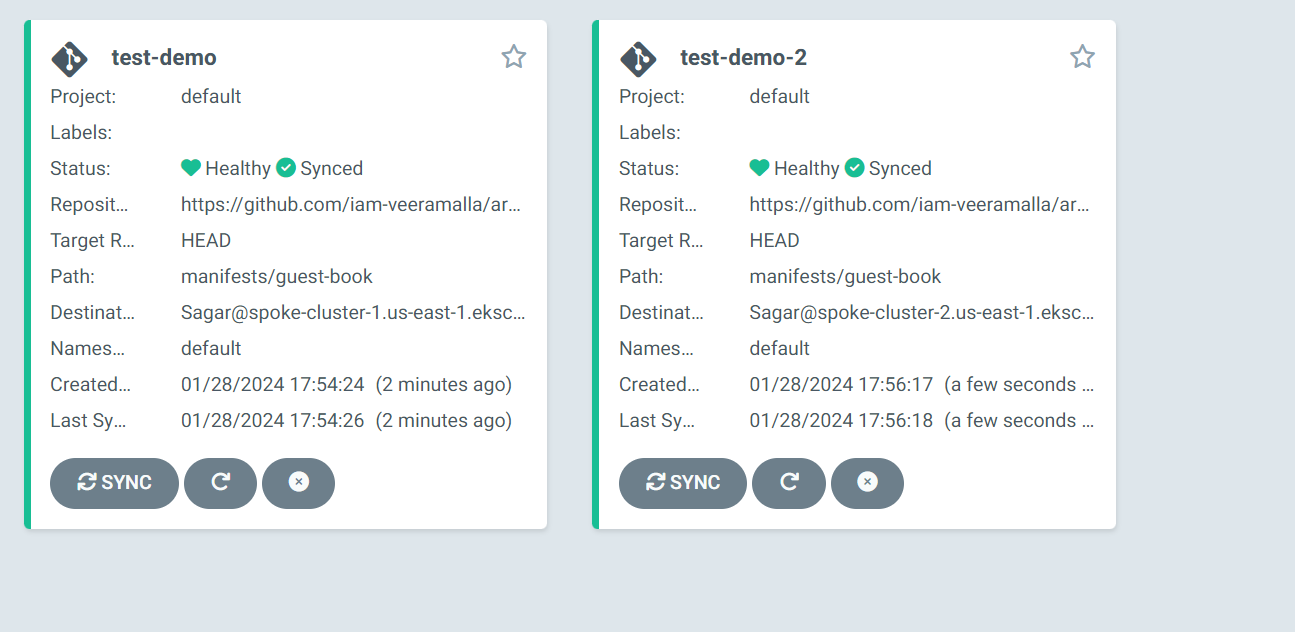

Deploy the Application

You can use this repo if you want to -

Deploy the application separately on both spoke clusters -

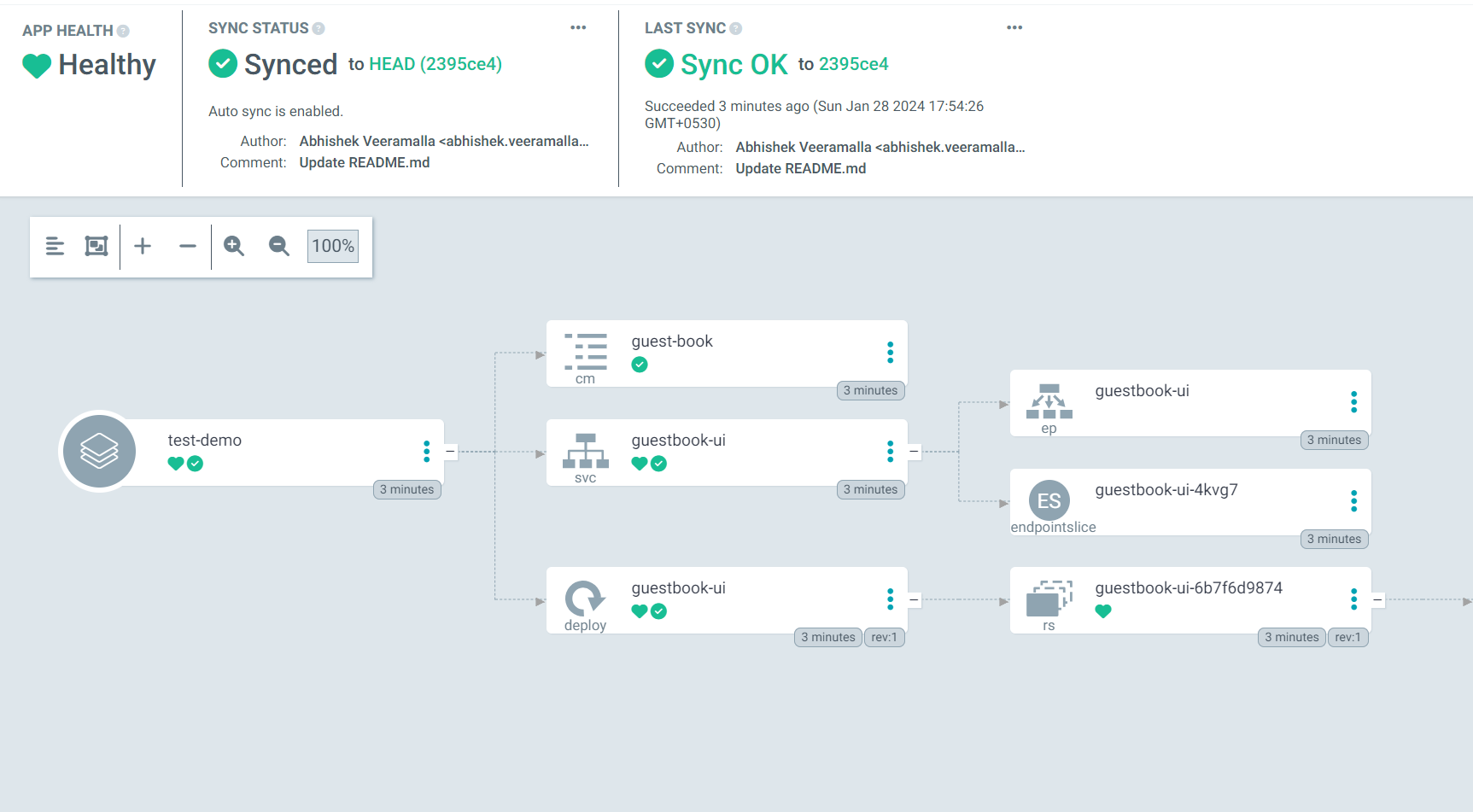
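The per-cluster deployment can also be done from the CLI. The sketch below creates one Argo CD application per spoke cluster with automated sync and self-heal, which is the behaviour that reverts manual changes back to what is in Git. Everything not shown in the article is an assumption: `REPO_URL` and `APP_PATH` are placeholders for your own repository, the application names are invented, and the cluster names assume the clusters were registered under their kubeconfig context names. By default the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: create one Argo CD application per spoke cluster.
# REPO_URL, APP_PATH, DRY_RUN, run(), and the app names are placeholders
# or illustrative helpers; replace them with your own values.
DRY_RUN="${DRY_RUN:-1}"
REPO_URL="${REPO_URL:-https://github.com/<your-org>/<your-repo>.git}"
APP_PATH="${APP_PATH:-guestbook}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# $1 = application name
# $2 = cluster name as registered in Argo CD (assumed to be the
#      kubeconfig context passed to "argocd cluster add")
create_app() {
  run argocd app create "$1" \
    --repo "$REPO_URL" \
    --path "$APP_PATH" \
    --dest-name "$2" \
    --dest-namespace default \
    --sync-policy automated \
    --self-heal
}

create_app guestbook-spoke-1 "Sagar@spoke-cluster-1.us-east-1.eksctl.io"
create_app guestbook-spoke-2 "Sagar@spoke-cluster-2.us-east-1.eksctl.io"
```

Log in with `argocd login` first, then rerun with `DRY_RUN=0` and a real `REPO_URL` to create the applications.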

Deployed Applications -

Check the Deployment

Switch to a spoke cluster -

$ kubectl config use-context Sagar@spoke-cluster-1.us-east-1.eksctl.io

Switched to context "Sagar@spoke-cluster-1.us-east-1.eksctl.io".

Get the Deployment -

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/guestbook-ui-6b7f6d9874-bgdwv 1/1 Running 0 5m9s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/guestbook-ui ClusterIP 10.100.48.175 <none> 80/TCP 5m9s

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 67m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/guestbook-ui 1/1 1 1 5m10s

NAME DESIRED CURRENT READY AGE

replicaset.apps/guestbook-ui-6b7f6d9874 1 1 1 5m10s

Delete the Clusters

This is a costly setup, so delete the clusters once you have finished with the deployment.

eksctl delete cluster --name hub-cluster --region us-east-1

eksctl delete cluster --name spoke-cluster-1 --region us-east-1

eksctl delete cluster --name spoke-cluster-2 --region us-east-1

Thanks for your time......
