The Hashnode-PR GitHub Action

Aazam Thakur

Introduction 👋🏻

Please update the documentation! Come on, it is so tedious!

In the modern world of software development, continuous integration and deployment (CI/CD) pipelines have become an integral part of the development process. They automate the testing and deployment of applications, making the development process smoother and more efficient. However, one area that often gets overlooked is the creation of documentation, particularly blog posts.

For this Hackathon, I aimed to utilise Hashnode's incredible API to address this gap by providing a GitHub Actions workflow that automatically publishes a blog post to Hashnode every time a pull request is merged into the main branch. This not only streamlines the process of creating and updating documentation but also keeps it up to date with the latest codebase.

The inspiration behind this project came from my own experience of the tedious work of publishing a good blog post right after spending countless hours getting a PR ready to merge. In today's fast-paced development environments, keeping up with changes in the codebase can be a daunting task. By automating the process of creating and updating blog posts, we aim to reduce the time spent on documentation and free up developers to focus on more critical tasks.

What does the project do? 🧐

A simple overview of how it works is as follows:

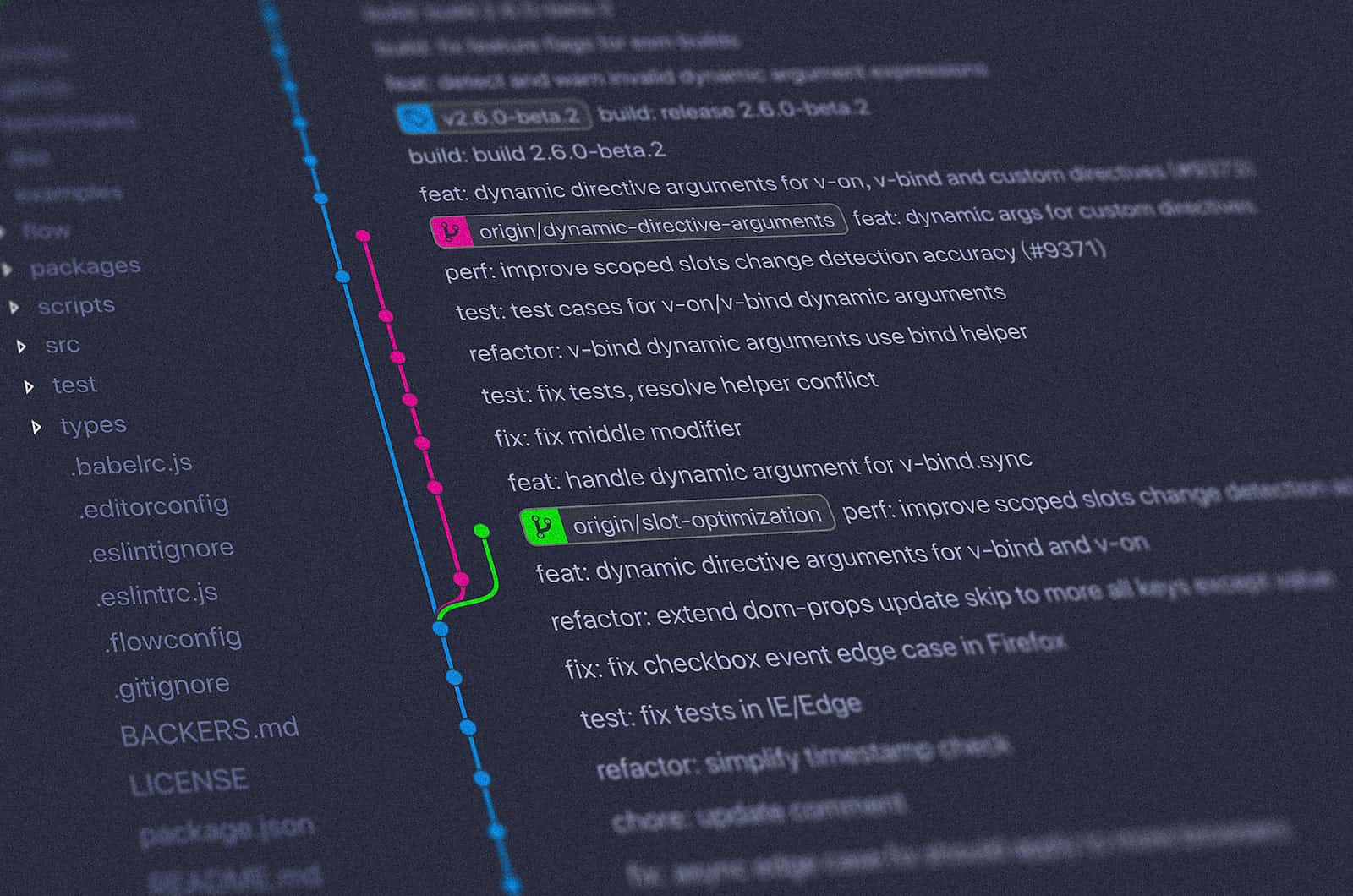

User makes a pull request -> The pull request is merged -> The GitHub Action is triggered -> A blog post based on the pull request is published on Hashnode

Building this project was a journey of learning and discovery. I started by identifying the problem we wanted to solve: automating the process of creating and updating blog posts. Building and completing the action was a challenge in itself, because I had no prior experience with GraphQL or GitHub Actions. I had come across them before, but this was my first time using them in practice. The first step was figuring out how and when the action should trigger; I settled on code being pushed to main.

The second important part was figuring out how the data for the blog post would be obtained. What information could be extracted from the PR, and how? GitHub's own REST API answered both questions, since it exposes a PR's details, commits, changed files, comments and reviews.
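To make that concrete, here is a small map from each piece of PR data the post draws on to the REST endpoint that serves it. The endpoint paths are the ones hashnode-blog.js calls; the prEndpoints helper and the octocat/hello-world example values are hypothetical.

```javascript
// Hypothetical helper: maps each piece of PR data the blog post uses to the
// GitHub REST endpoint that returns it (paths as called in hashnode-blog.js).
function prEndpoints(owner, repo, pr) {
  const base = `/repos/${owner}/${repo}`;
  return {
    // Listed with state=closed, sort=updated, direction=desc, per_page=1
    latestClosedPR: `GET ${base}/pulls`,
    titleAndBody: `GET ${base}/pulls/${pr}`,
    commits: `GET ${base}/pulls/${pr}/commits`,
    changedFiles: `GET ${base}/pulls/${pr}/files`,
    // Conversation comments live on the issue that backs every PR
    conversationComments: `GET ${base}/issues/${pr}/comments`,
    // Review comments are attached to specific lines of the diff
    reviewComments: `GET ${base}/pulls/${pr}/comments`,
  };
}

console.log(prEndpoints("octocat", "hello-world", 42).reviewComments);
// GET /repos/octocat/hello-world/pulls/42/comments
```

The split between the issues and pulls comment endpoints is the part that is easy to miss: a PR's conversation thread and its line-level review comments are stored separately.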

How does it work? 🖥

The hashnode-pr action is simply a GitHub Action that users can integrate into their GitHub repositories. It consists of a .github/workflows folder containing the main pr-publish.yml workflow file, along with the hashnode-blog.js script that the workflow runs.

The user must supply their Hashnode personal access token and Hashnode publication ID, which can be found in the Hashnode developer settings and the blog dashboard respectively. They must also supply a Cohere API key. All three must be stored as repository secrets, named HASHNODE_API_KEY, HASHNODE_PUBLICATION_ID and COHERE_API_KEY to match the workflow, in every repository where the action is used.

```yaml
name: Hashnode-PR Publish

on:
  push:
    branches:
      - main

jobs:
  blog_post:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Setup node
        uses: actions/setup-node@v4
        with:
          node-version: '20.x'
          cache: 'npm'

      - run: npm ci

      - name: Set environment variables
        run: |
          echo "OWNER=${{ github.repository_owner }}" >> $GITHUB_ENV
          echo "REPO=${{ github.event.repository.name }}" >> $GITHUB_ENV

      - name: Run script
        env:
          PAT: ${{ secrets.HASHNODE_API_KEY }}
          PUBID: ${{ secrets.HASHNODE_PUBLICATION_ID }}
          OWNER: ${{ env.OWNER }}
          REPO: ${{ env.REPO }}
          COHERE: ${{ secrets.COHERE_API_KEY }}
        run: node hashnode-blog.js
```

The workflow runs a single job whenever code is pushed to the main branch. It checks out the repository, sets up Node.js 20 and installs the dependencies with npm ci, then stores the repository owner and repository name as environment variables for the later steps. The remaining environment variables are supplied directly from the secrets. Finally, the workflow runs the hashnode-blog.js file, which is the crux of the whole operation.
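One subtlety of the push trigger: it fires for any push to main, including direct pushes that never went through a pull request, and the script then simply takes the most recently updated closed PR (which may also have been closed without merging). A hedged sketch of a stricter check, assuming the script is given the pushed commit SHA (GitHub Actions exposes it as the GITHUB_SHA environment variable) and a list of closed PRs: on a merged PR, GitHub sets merge_commit_sha to the commit the base branch was updated to (the merge commit, the squashed commit, or the last rebased commit), so a match identifies the PR behind the push. findMergedPR and the sample data are hypothetical.

```javascript
// Hypothetical helper: given closed PRs from GET /repos/{owner}/{repo}/pulls
// and the SHA of the pushed commit, return the number of the merged PR that
// produced that commit, or null if the push did not come from a merge.
function findMergedPR(pulls, pushedSha) {
  const match = pulls.find(
    (pr) => Boolean(pr.merged_at) && pr.merge_commit_sha === pushedSha
  );
  return match ? match.number : null;
}

// Made-up sample data shaped like the pulls list response
const closedPulls = [
  { number: 12, merged_at: null, merge_commit_sha: "aaa111" }, // closed, not merged
  { number: 11, merged_at: "2024-01-20T10:00:00Z", merge_commit_sha: "bbb222" },
];

console.log(findMergedPR(closedPulls, "bbb222")); // 11
console.log(findMergedPR(closedPulls, "ccc333")); // null
```

In hashnode-blog.js this would mean listing a few closed PRs (a per_page larger than 1), passing process.env.GITHUB_SHA, and exiting without publishing when the result is null.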

First, we import all the necessary modules and initialise the Octokit client. Octokit is an official client for the GitHub API.

```javascript
const core = require("@actions/core");
const { request } = require("graphql-request");
const { Octokit } = require("octokit");
const { CohereClient } = require("cohere-ai");

// No auth token is passed, so these GitHub API calls are unauthenticated
// (enough for public repositories, subject to the lower anonymous rate limit)
const octokit = new Octokit({});
```

Then we create the async functions that call the GitHub API. The first one fetches the most recently updated closed PR so that we can extract its number for the functions that follow.

```javascript
async function fetchPR(owner, repo) {
  try {
    // Ask for a single PR: the closed one updated most recently
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/pulls`,
      {
        sort: "updated",
        state: "closed",
        direction: "desc",
        per_page: 1,
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          Accept: "application/vnd.github.raw+json",
        },
      }
    );
    const latestPullRequest = data[0]["number"];
    return latestPullRequest;
  } catch (error) {
    console.error(error);
  }
}
```

Next, a function fetches all the commit messages in the specified PR.

```javascript
async function fetchCommits(owner, repo, pr) {
  try {
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/pulls/${pr}/commits`,
      {
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          Accept: "application/vnd.github+json",
        },
      }
    );
    // Concatenate every commit message, one per line
    let allCommits = "";
    for (let i = 0; i < data.length; i++) {
      allCommits += data[i]["commit"]["message"] + "\n";
    }
    return allCommits;
  } catch (error) {
    console.error(error);
  }
}
```

Next, a function fetches the diff patches of the files changed in the specified PR. Note that per_page: 3 limits it to the first three files.

```javascript
async function fetchFiles(owner, repo, pr) {
  try {
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/pulls/${pr}/files`,
      {
        per_page: 3,
        page: 1,
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          Accept: "application/vnd.github.text+json",
        },
      }
    );
    // Join the diff patches; patch is absent for some files (e.g. binaries)
    let allFiles = "";
    for (let i = 0; i < data.length; i++) {
      allFiles += (data[i]["patch"] ?? "") + "\n";
    }
    return allFiles;
  } catch (error) {
    console.error(error);
  }
}
```

Next, a function fetches the comments made in the PR's conversation. GitHub treats every pull request as an issue, so these come from the issue comments endpoint.

```javascript
async function fetchComments(owner, repo, pr) {
  try {
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/issues/${pr}/comments`,
      {
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          // The text media type returns each comment body as body_text
          Accept: "application/vnd.github.text+json",
        },
      }
    );
    let allComments = "";
    for (let i = 0; i < data.length; i++) {
      allComments += data[i]["body_text"] + "\n";
    }
    return allComments;
  } catch (error) {
    console.error(error);
  }
}
```

Next, a function fetches the review comments (the comments attached to specific lines of the diff), together with the diff hunk each one refers to.

```javascript
async function fetchReviews(owner, repo, pr) {
  try {
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/pulls/${pr}/comments`,
      {
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          // Default media type, so each comment includes its raw body
          Accept: "application/vnd.github+json",
        },
      }
    );
    // Pair each review comment with the diff hunk it was left on
    let allReviews = "";
    for (let i = 0; i < data.length; i++) {
      allReviews += data[i]["body"] + "\n" + data[i]["diff_hunk"] + "\n";
    }
    return allReviews;
  } catch (error) {
    console.error(error);
  }
}
```

Next, a function fetches the PR title and body.

```javascript
async function fetchPRInfo(owner, repo, pr) {
  try {
    const { data } = await octokit.request(
      `GET /repos/${owner}/${repo}/pulls/${pr}`,
      {
        headers: {
          "X-GitHub-Api-Version": "2022-11-28",
          // Default media type, so the response includes the raw body
          Accept: "application/vnd.github+json",
        },
      }
    );
    const title = data["title"];
    const body = data["body"];
    return { title, body };
  } catch (error) {
    console.error(error);
  }
}
```

Now we piece together all the functions above and initialise the Cohere client, sending all of this information to it to generate the Markdown for the blog post.

We use Cohere's command model here with a low temperature (0.3) and return the generated text.

```javascript
async function run() {
  const owner = process.env.OWNER;
  const repo = process.env.REPO;
  const pubID = process.env.PUBID;
  const pat = process.env.PAT;
  const co = process.env.COHERE;

  // Collect everything about the latest closed PR
  const pr = await fetchPR(owner, repo);
  const { title, body } = await fetchPRInfo(owner, repo, pr);
  const files = await fetchFiles(owner, repo, pr);
  const reviews = await fetchReviews(owner, repo, pr);
  const comments = await fetchComments(owner, repo, pr);
  const commits = await fetchCommits(owner, repo, pr);

  const cohere = new CohereClient({
    token: co, // Cohere API key, passed in from the COHERE_API_KEY secret
  });

  const generatedText = async () => {
    const response = await cohere.generate({
      model: "command",
      prompt: `I will provide you details of a pull request, including some of the files, review comments, and information. I want you to generate a blog post about it in markdown format. The details of the pull request are as follows: ${body}. Some of the files which have been changed ${files}. Any of the reviews ${reviews}. Any of the comments ${comments}. The commit messages ${commits}.`,
      maxTokens: 300,
      temperature: 0.3,
      k: 0,
      stopSequences: [],
      returnLikelihoods: "NONE",
    });
    return `${response.generations[0].text}`;
  };
  const content = await generatedText();
```

The final snippet builds the GraphQL mutation that actually publishes the post to Hashnode, using the graphql-request library we imported earlier.

```javascript
  // GraphQL mutation that publishes a new post to a Hashnode publication
  const query = `
    mutation PublishPost($input: PublishPostInput!) {
      publishPost(input: $input) {
        post {
          id
          slug
          title
        }
      }
    }
  `;

  const input = {
    title: title,
    publicationId: pubID,
    contentMarkdown: content,
    tags: [
      {
        slug: title + "-pr",
        name: title + "-pr",
      },
    ],
  };

  // The Hashnode personal access token goes in the Authorization header
  const headers = {
    Authorization: pat,
  };

  // Awaiting the request lets a failed publish reach core.setFailed below
  const data = await request("https://gql.hashnode.com", query, { input }, headers);
  console.log(data);
}

run().catch((error) => core.setFailed(error.message));
```
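The tag built in that snippet reuses the raw PR title, so a title such as "Fix login bug (#42)" yields the slug "Fix login bug (#42)-pr", with spaces, capitals and punctuation that slugs normally avoid. A minimal sketch of normalising it first, assuming a lowercase, hyphen-separated ASCII slug is acceptable to Hashnode; toTagSlug is a hypothetical helper, not part of the action.

```javascript
// Hypothetical helper: turns a PR title into a lowercase, hyphenated tag slug.
function toTagSlug(title) {
  const base = title
    .toLowerCase()
    .normalize("NFKD") // split accented letters into base letter + mark
    .replace(/[\u0300-\u036f]/g, "") // drop the combining marks
    .replace(/[^a-z0-9]+/g, "-") // collapse any other run into one hyphen
    .replace(/^-+|-+$/g, ""); // trim leading/trailing hyphens
  return `${base}-pr`;
}

console.log(toTagSlug("Fix login bug (#42)")); // fix-login-bug-42-pr
```

Only the slug needs this treatment; the tag's name field can keep the readable title.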

Is the project complete? 🫢

The project is fully functional, implementing all the necessary features to automate the blog post publishing process. The main workflow file sets up a Node.js environment, installs the necessary dependencies, sets up the environment variables, and runs a script (hashnode-blog.js) that handles the publishing of the blog post.
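Since hashnode-blog.js reads all of its configuration from environment variables, an unset secret only shows up later as a confusing API error (GitHub Actions passes an empty string for a secret that does not exist). A minimal sketch of failing fast instead, assuming the variable names used in pr-publish.yml; readConfig is a hypothetical helper, not part of the action.

```javascript
// Hypothetical helper: reads the variables hashnode-blog.js relies on and
// fails fast, naming every missing one, instead of erroring mid-run.
const REQUIRED_VARS = ["PAT", "PUBID", "COHERE", "OWNER", "REPO"];

function readConfig(env) {
  // An unset secret arrives as an empty string, so check for falsy values
  const missing = REQUIRED_VARS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    pat: env.PAT,
    pubID: env.PUBID,
    cohereKey: env.COHERE,
    owner: env.OWNER,
    repo: env.REPO,
  };
}

try {
  readConfig({ PAT: "token", PUBID: "", COHERE: "key", OWNER: "me", REPO: "blog" });
} catch (error) {
  console.log(error.message); // Missing environment variables: PUBID
}
```

Calling readConfig(process.env) at the top of run() would let the existing run().catch(...) handler report the message through core.setFailed.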

The hashnode-blog.js script fetches information about the latest closed pull request, including commits, files, comments, and reviews. It then uses the Cohere AI API to generate a blog post about the pull request. Finally, it publishes the generated blog post to the user's Hashnode publication.
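Each of those fetch functions flattens an array from the GitHub API into one newline-separated string with the same for loop. A sketch of factoring that loop into a single helper; joinField is hypothetical, not part of the action, and it also skips entries whose field is missing or empty.

```javascript
// Hypothetical helper: reads one (possibly nested) field from every item of a
// GitHub API response and joins the non-empty string values with newlines.
function joinField(items, path) {
  const keys = path.split(".");
  return items
    .map((item) => keys.reduce((value, key) => value?.[key], item))
    .filter((value) => typeof value === "string" && value.length > 0)
    .join("\n");
}

// Sample data shaped like the commits endpoint response (commit.message)
const commits = [
  { commit: { message: "Add login form" } },
  { commit: { message: "Fix typo in README" } },
];
console.log(joinField(commits, "commit.message"));
// Add login form
// Fix typo in README
```

With it, the loops become one-liners such as joinField(data, "commit.message"), joinField(data, "patch") or joinField(data, "body_text"). Unlike the original loops, the joined string has no trailing newline.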

Users can also customise the action to use a different LLM provider, such as the OpenAI API, instead of Cohere. The main requirement is that the model can turn the pull request details into an accurate blog post.
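One way to make that swap painless is to keep the model call behind a single function. A minimal sketch, assuming a provider is any async function that takes a prompt string and resolves to the generated text; generateBlogPost and fakeProvider are hypothetical names, and a real provider would wrap cohere.generate (as in hashnode-blog.js) or another vendor's SDK.

```javascript
// Hypothetical provider-agnostic wrapper: the rest of the script depends only
// on `provider`, an async function that maps a prompt string to generated text.
async function generateBlogPost(provider, prompt) {
  const text = await provider(prompt);
  if (typeof text !== "string" || text.trim() === "") {
    throw new Error("LLM provider returned no text");
  }
  return text.trim();
}

// A Cohere-backed provider could wrap the call already in hashnode-blog.js:
// const cohereProvider = async (prompt) =>
//   (await cohere.generate({ model: "command", prompt, maxTokens: 300 }))
//     .generations[0].text;

// Stand-in provider for local testing; a real one would call an LLM API.
const fakeProvider = async (prompt) =>
  `# Draft post\n\nWritten from ${prompt.length} characters of PR data.\n`;

generateBlogPost(fakeProvider, "Add login form").then((post) => console.log(post));
```

Switching vendors then means writing one new provider function; the fetching and publishing code stays untouched.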

Why use AI?

Since the blog post is built from raw PR data (the title and description, reviews, comments, commit messages and file diffs), it is difficult to organise all of that into a clean, well-articulated article with hand-coded formatting alone. Hence we rely on AI to make sense of it and generate an appropriate blog post.
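A model can only make sense of what fits in its context window, so long diffs are worth bounding before they reach the prompt. A hedged sketch of a prompt builder that labels each section, also includes the PR title (which the prompt in hashnode-blog.js leaves out), and clips every section to a character budget; buildPrompt and the 4,000-character default are illustrative assumptions, not tuned values.

```javascript
// Hypothetical prompt builder: labels each section of PR data and clips
// long sections so the prompt stays within a rough character budget.
function buildPrompt(details, maxCharsPerSection = 4000) {
  const clip = (text) => {
    const value = text ?? "";
    return value.length > maxCharsPerSection
      ? value.slice(0, maxCharsPerSection) + "\n[truncated]"
      : value;
  };
  const sections = [
    ["Title", details.title],
    ["Description", details.body],
    ["Changed files (diff patches)", details.files],
    ["Review comments", details.reviews],
    ["Conversation comments", details.comments],
    ["Commit messages", details.commits],
  ];
  // Empty or missing sections are marked explicitly rather than left blank
  const sectionText = sections
    .map(([label, text]) => `## ${label}\n${clip(text) || "(none)"}`)
    .join("\n\n");
  return "Write a blog post in Markdown about the following pull request.\n\n" + sectionText;
}

console.log(buildPrompt({ title: "Add login form", commits: "Add login form" }));
```

In run(), prompt: buildPrompt({ title, body, files, reviews, comments, commits }) could replace the inline template literal. Note that maxTokens: 300 in the original call caps the length of the generated post, not of the prompt.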

Demo

Conclusion

Compared to existing solutions, my project stands out in its simplicity and ease of setup. Unlike other solutions that might require extensive configuration or complex scripts, this workflow is straightforward to set up and use. All you need to do is add the workflow file and the hashnode-blog.js script (along with its npm dependencies) to your GitHub repository and set up the necessary secrets.

Moreover, my project is designed to be extensible. While it currently supports publishing blog posts based on pull request information, it can easily be modified to support other types of content as well. Many actions on the market aren't designed to handle documentation and blogging at the same time.

In conclusion, our project demonstrates the power of automation in enhancing productivity and efficiency. By automating the process of creating and updating blog posts, we hope to inspire others to explore similar opportunities and innovate in their own ways.

Written by

Aazam Thakur

I'm a software engineer from the city of Mumbai, currently majoring in Artificial Intelligence and Data Science. I am an OSS contributor and love participating in hackathons.