Cloud Deployment Hygiene: Automate Application Scaling in the Cloud

Collins Jumah

Introduction

This post summarizes the key learnings from a talk presented at the 3-day Africastalking Tech Summit 2023 in Nairobi, October 26 to 28, 2023.

More often than not, we build applications without scaling in mind. During periods of high traffic, your valuable customers experience downtime, causing dissatisfaction, loss of trust in your services, and lost business opportunities.

Autoscaling helps your application deliver reliable performance by automatically adding instances as demand spikes and removing them as it drops. As such, autoscaling provides consistency despite the dynamic and, at times, unpredictable demand for applications.

In this workshop, attendees learned how to use the Amazon EC2 Autoscaling feature on AWS while deploying their web applications to production.

We then added a load balancer to distribute the load and coordinate users' access requests to our application tier.

Key Takeaways

How to prepare for planned and unplanned scaling events with Amazon Elastic Compute Cloud (EC2) and ensure your infrastructure is ready to sustain increased compute requirements.

Implementing a load balancer to distribute traffic and coordinate user access to our application tier.

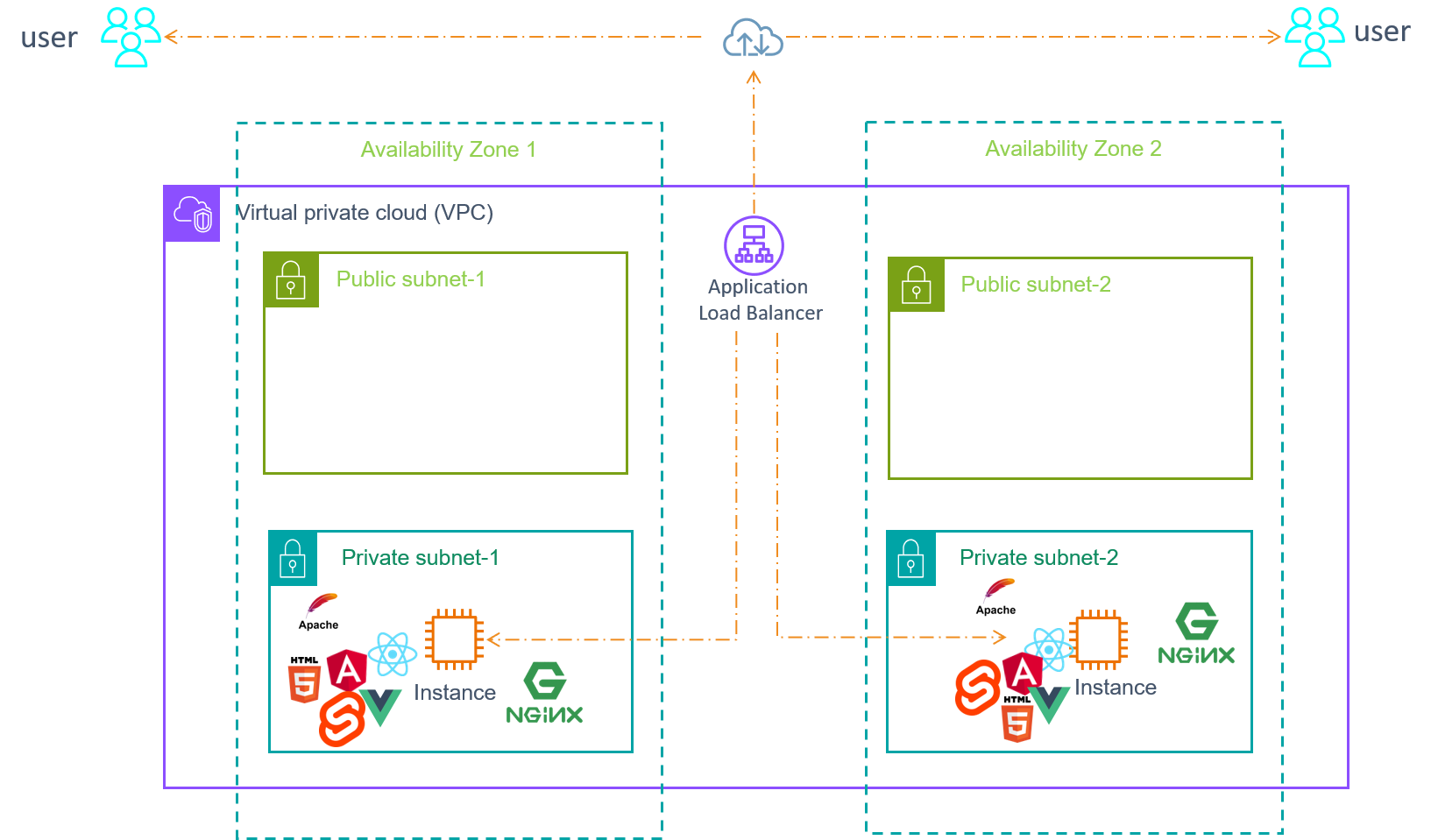

Although we focus on how to scale your web application, most of the best practices and learnings feature key AWS cloud services such as EC2, EC2 Autoscaling and Elastic Load Balancing. At the base level, our network is built using Amazon Virtual Private Cloud (VPC) and its components, such as subnets, security groups, network access control lists (NACLs), route tables, NAT gateways, internet gateways and more.

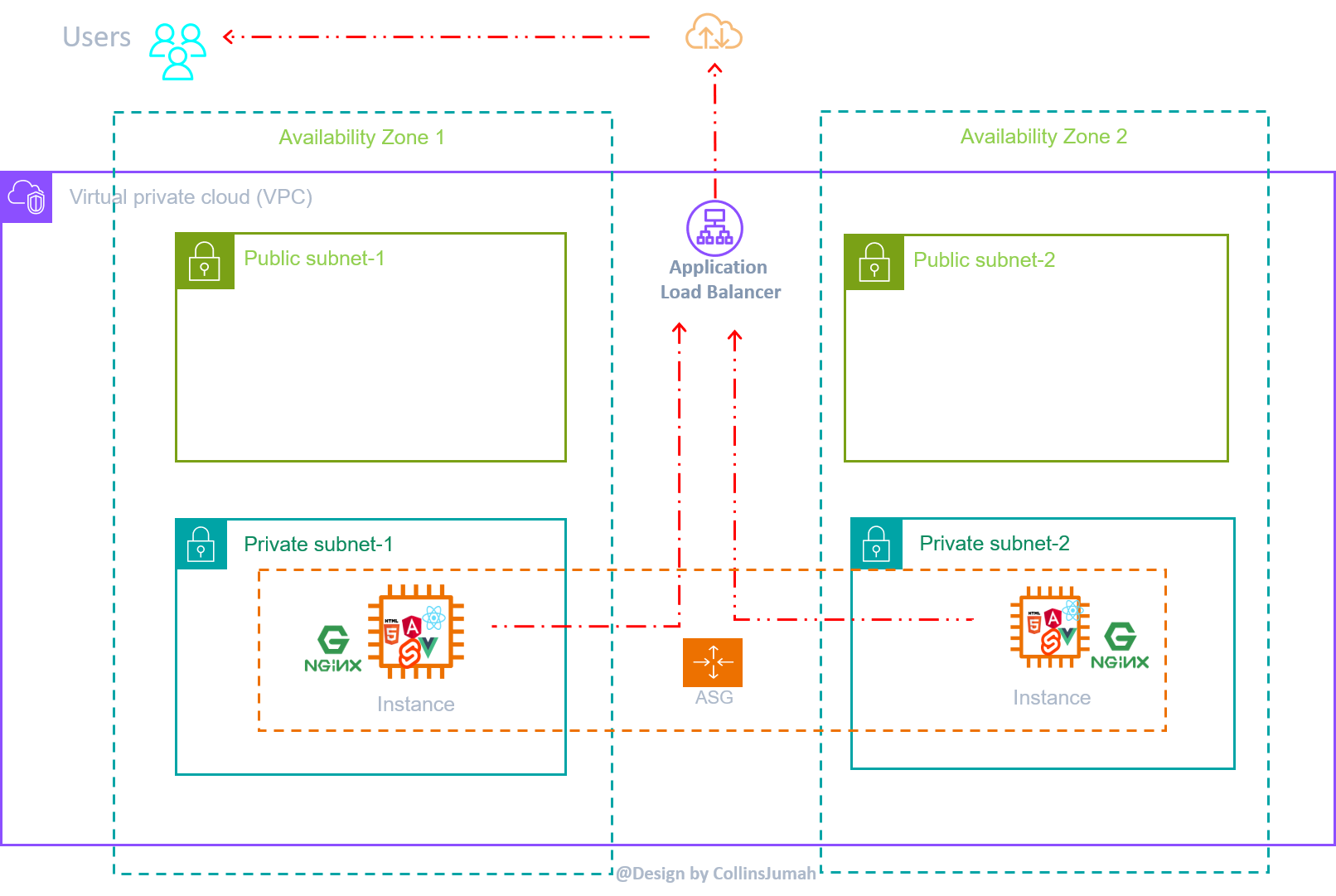

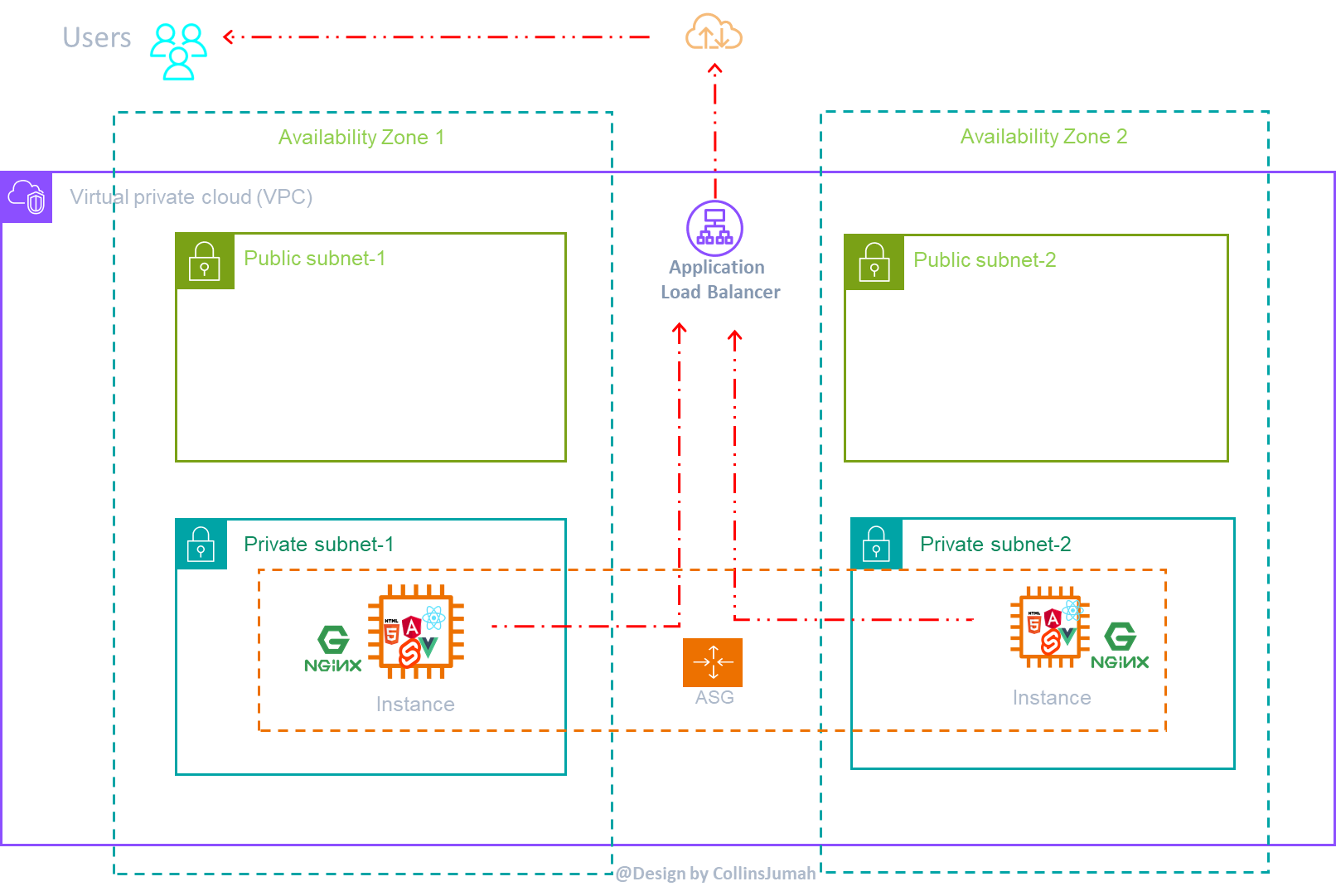

a. Final architecture

Let's break down this architecture 🤗 into the following key learning points:

AWS Global infrastructure

Back to Basics (B2B) with Amazon VPC

Autoscaling: EC2 Autoscaling

Elastic Load Balancer

Wrap up

AWS Global Infrastructure

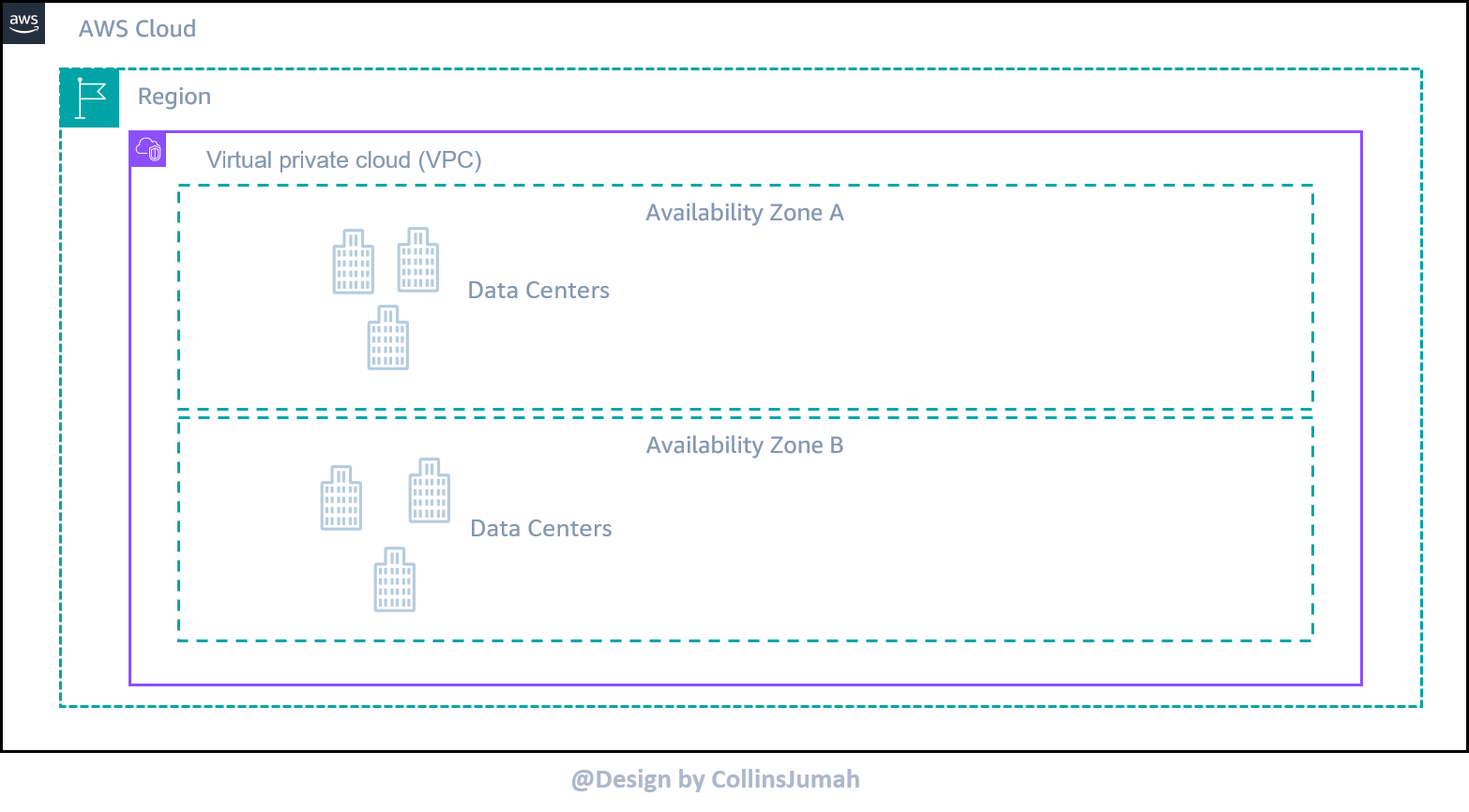

Before you design your AWS infrastructure, you need to understand where your resources will reside and, most importantly, how AWS's global infrastructure is organized. The AWS Global Infrastructure gives you the flexibility to choose how and where you run your workloads. It includes regions, Availability Zones, data centers, edge locations (points of presence), Local Zones and more.

To summarize each component, consider the following architectural design.

A region: A region is a geographic area where AWS clusters its data centers. Each region is designed to be isolated from the others to achieve the greatest possible fault tolerance and availability. Each region is also composed of multiple Availability Zones (a minimum of 3) for high availability.

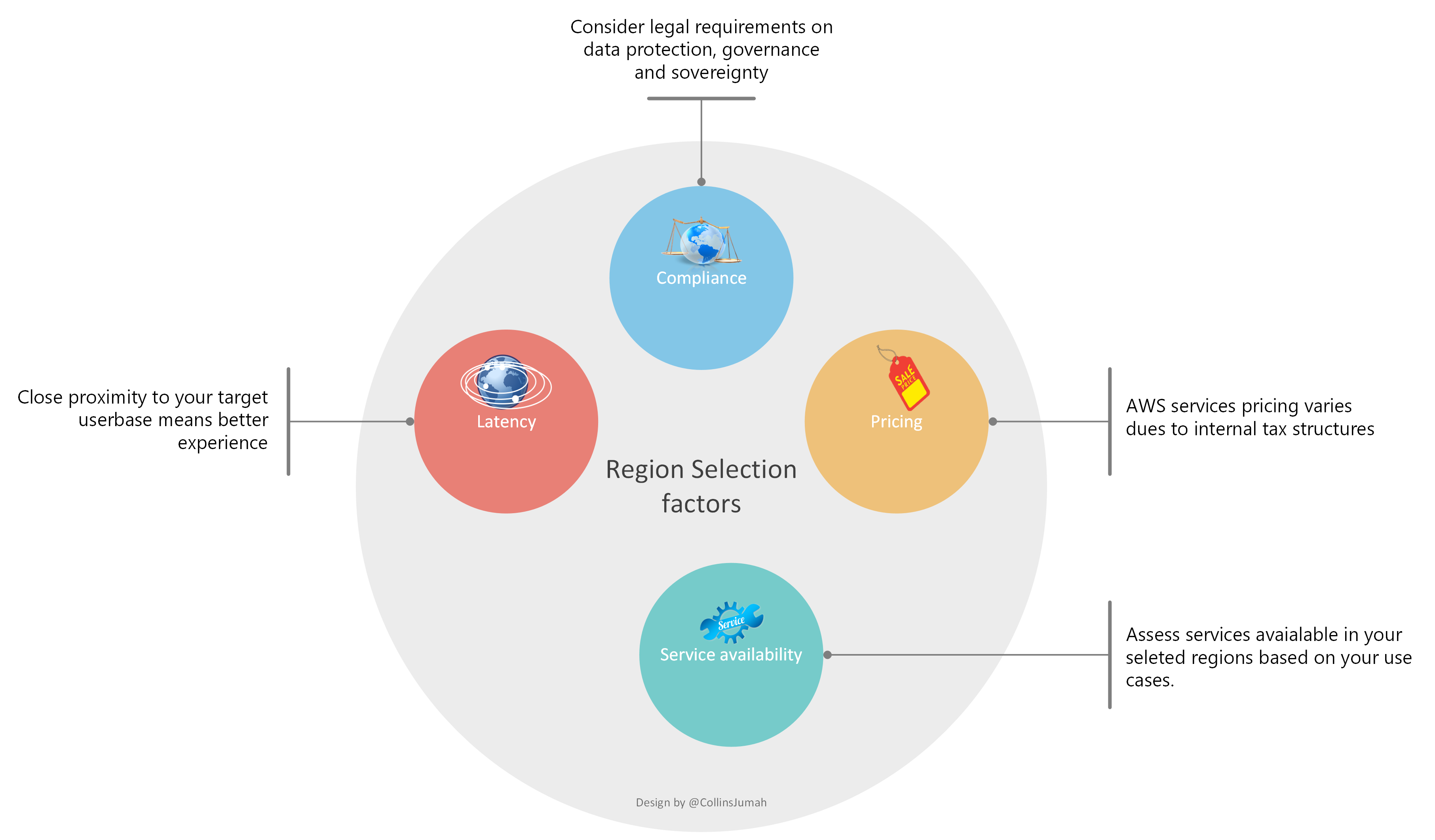

Before selecting a region for your workload, you might first need to consider the following:

Compliance: Consider any legal requirements based on data governance, sovereignty or privacy laws. These vary from country to country.

Latency: This is about how close your IT resources are to your user base. Close proximity to customers means lower latency and better performance.

Pricing: AWS service pricing varies from region to region. Some regions can be more expensive than others, for example due to differing tax structures.

Service Availability: Not all AWS services are available in every region. Check that the services your use case needs are offered in your candidate regions.
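To make these trade-offs concrete, here is a minimal Python sketch of one way to rank candidate regions: drop any region that fails your compliance check or lacks a service you need, then prefer the lowest latency and break ties on cost. The region names, latencies, costs and service sets are invented for illustration; in practice you would measure latency from your own users and take prices from AWS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionOption:
    name: str
    latency_ms: float        # measured round-trip time from your users
    monthly_cost_usd: float  # estimated monthly cost of the workload here
    services: frozenset      # AWS services your workload needs that are offered here
    compliant: bool          # satisfies your data-governance requirements


def pick_region(options, required_services):
    """Return the best eligible region name, or None if no region qualifies."""
    eligible = [
        o for o in options
        if o.compliant and required_services <= o.services  # compliance + availability
    ]
    if not eligible:
        return None
    # Lowest latency first; cost breaks ties.
    return min(eligible, key=lambda o: (o.latency_ms, o.monthly_cost_usd)).name


# Hypothetical candidates; all numbers are made up.
candidates = [
    RegionOption("region-a", 40, 130, frozenset({"ec2", "elb", "rds"}), True),
    RegionOption("region-b", 160, 100, frozenset({"ec2", "elb", "rds"}), True),
    RegionOption("region-c", 25, 90, frozenset({"ec2", "elb"}), True),
    RegionOption("region-d", 10, 80, frozenset({"ec2", "elb", "rds"}), False),
]
print(pick_region(candidates, frozenset({"ec2", "elb", "rds"})))  # region-a
```

Ordering the sort key as (latency, cost) encodes the priority; swap it if cost matters more to you than latency.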

Availability Zones (AZs): An AZ is a cluster of one or more data centers within a region. AZs are used to achieve high availability for your workload.

Edge Locations: In addition to regions and AZs, the AWS global network includes hundreds of edge locations for latency-sensitive applications, used by key services such as Amazon CloudFront for content distribution, Amazon Route 53 for DNS and domain services, and AWS Global Accelerator for network path optimization.

Back to Basics (B2B) with Amazon Virtual Private Cloud (VPC)

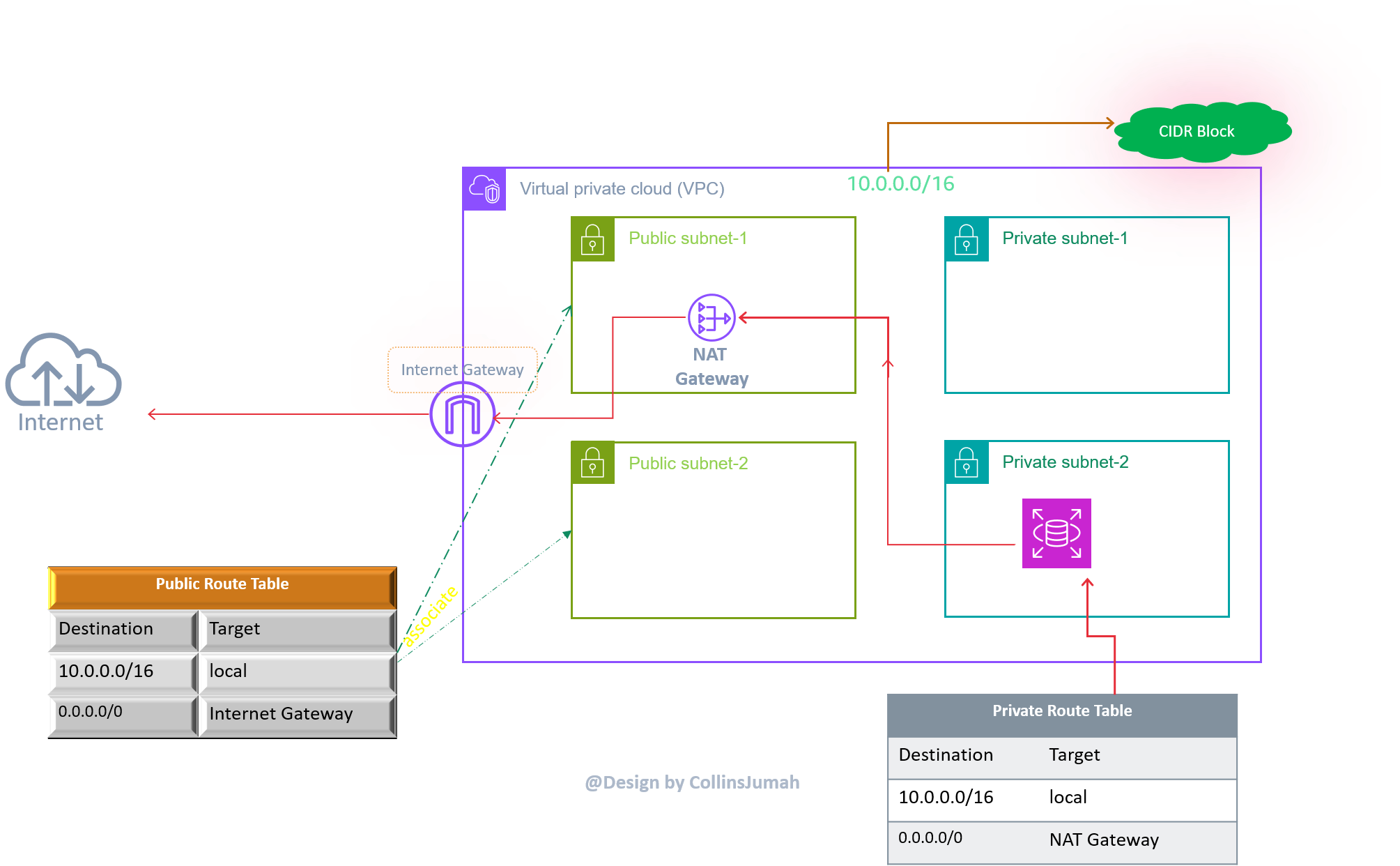

Amazon VPC is a way to isolate your network within AWS. All of our resources reside inside a VPC. A VPC can also extend to Local Zones, AWS Outposts and more. By default, you can have up to 5 VPCs per region per account, a quota you can ask AWS to raise. A VPC spans all the AZs in its region. You specify its IP address range using a Classless Inter-Domain Routing (CIDR) block.
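As a quick sanity check on the CIDR block, the sketch below uses Python's standard `ipaddress` module to count the addresses in a VPC block and reject sizes outside the /16 to /28 range that AWS allows for a VPC IPv4 CIDR. The 10.0.0.0/16 example is an assumption, not necessarily the range used in the workshop.

```python
import ipaddress


def vpc_address_count(cidr: str) -> int:
    """Return how many IPv4 addresses a VPC CIDR block contains.

    Raises ValueError for malformed blocks (including ones with host bits set)
    and for sizes outside the /16 to /28 range allowed for a VPC.
    """
    network = ipaddress.IPv4Network(cidr)  # strict parsing: "10.0.0.1/16" is rejected
    if not 16 <= network.prefixlen <= 28:
        raise ValueError(f"VPC block must be /16 to /28, got /{network.prefixlen}")
    return network.num_addresses


print(vpc_address_count("10.0.0.0/16"))  # 65536
print(vpc_address_count("10.0.0.0/28"))  # 16
```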

VPC components

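Subnets: A subnet is a range of IP addresses within the VPC's base network (its CIDR block), and each subnet resides in a single AZ. The address ranges you specify for subnets must not overlap.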
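The sketch below shows one way to carve a VPC block into equal subnets, for example one public and one private subnet per AZ, and to check a proposed layout for overlaps. It also subtracts the 5 addresses AWS reserves in every subnet (the first four and the last one). The /16 VPC, the /24 subnet size and the subnet names are assumptions chosen for illustration.

```python
import ipaddress
from itertools import combinations, islice

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one in each subnet


def carve_subnets(vpc_cidr: str, new_prefix: int, count: int):
    """Split a VPC block into `count` equal, non-overlapping subnets."""
    vpc = ipaddress.IPv4Network(vpc_cidr)
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError("VPC block is too small for the requested subnets")
    return subnets


def overlapping_pairs(cidrs):
    """Return every pair of CIDR blocks in `cidrs` that overlap."""
    nets = [ipaddress.IPv4Network(c) for c in cidrs]
    return [(str(a), str(b)) for a, b in combinations(nets, 2) if a.overlaps(b)]


# Hypothetical layout: public and private subnets in two AZs.
layout = carve_subnets("10.0.0.0/16", new_prefix=24, count=4)
for name, net in zip(["public-a", "public-b", "private-a", "private-b"], layout):
    print(name, net, net.num_addresses - AWS_RESERVED_PER_SUBNET, "usable addresses")

print(overlapping_pairs(["10.0.0.0/24", "10.0.0.128/25"]))  # [('10.0.0.0/24', '10.0.0.128/25')]
```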

We create public subnets for public-facing components. This is where our Application Load Balancer is placed. For a subnet to be public, it requires:

An Internet Gateway, which allows communication between resources in the VPC and the internet.

A Route Table: a route table contains rules that determine where network traffic is directed. A public subnet's route table sends internet-bound traffic to the Internet Gateway. In the figure above, the destination 0.0.0.0/0 means any IPv4 address.

Public IP addresses for the resources that must be reachable from the internet.
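Route tables pick the most specific matching route (longest prefix match), so the `local` route for the VPC's own range wins over 0.0.0.0/0 for traffic that stays inside the VPC. The sketch below models that lookup, plus the rule that a subnet is public when its route table has a route to an internet gateway. The route-table contents and the `igw-`/`nat-` IDs are hypothetical placeholders.

```python
import ipaddress
from typing import Optional


def lookup_route(route_table: dict, destination_ip: str) -> Optional[str]:
    """Return the target of the most specific route matching destination_ip."""
    ip = ipaddress.ip_address(destination_ip)
    routes = {ipaddress.ip_network(cidr): target for cidr, target in route_table.items()}
    matches = [net for net in routes if ip in net]
    if not matches:
        return None
    return routes[max(matches, key=lambda net: net.prefixlen)]  # longest prefix wins


def is_public_subnet(route_table: dict) -> bool:
    """A subnet is public if its route table has a route to an internet gateway."""
    return any(target.startswith("igw-") for target in route_table.values())


public_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0example"}
private_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-0example"}

print(lookup_route(public_rt, "10.0.2.15"))    # local
print(lookup_route(public_rt, "203.0.113.7"))  # igw-0example
print(is_public_subnet(public_rt), is_public_subnet(private_rt))  # True False
```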

Private subnets are for resources that should not be directly reachable from the internet. For our demo, we put all EC2 instances in private subnets. You can also place databases such as Amazon RDS, and any other sensitive application components, in private subnets so they are not exposed to the internet.

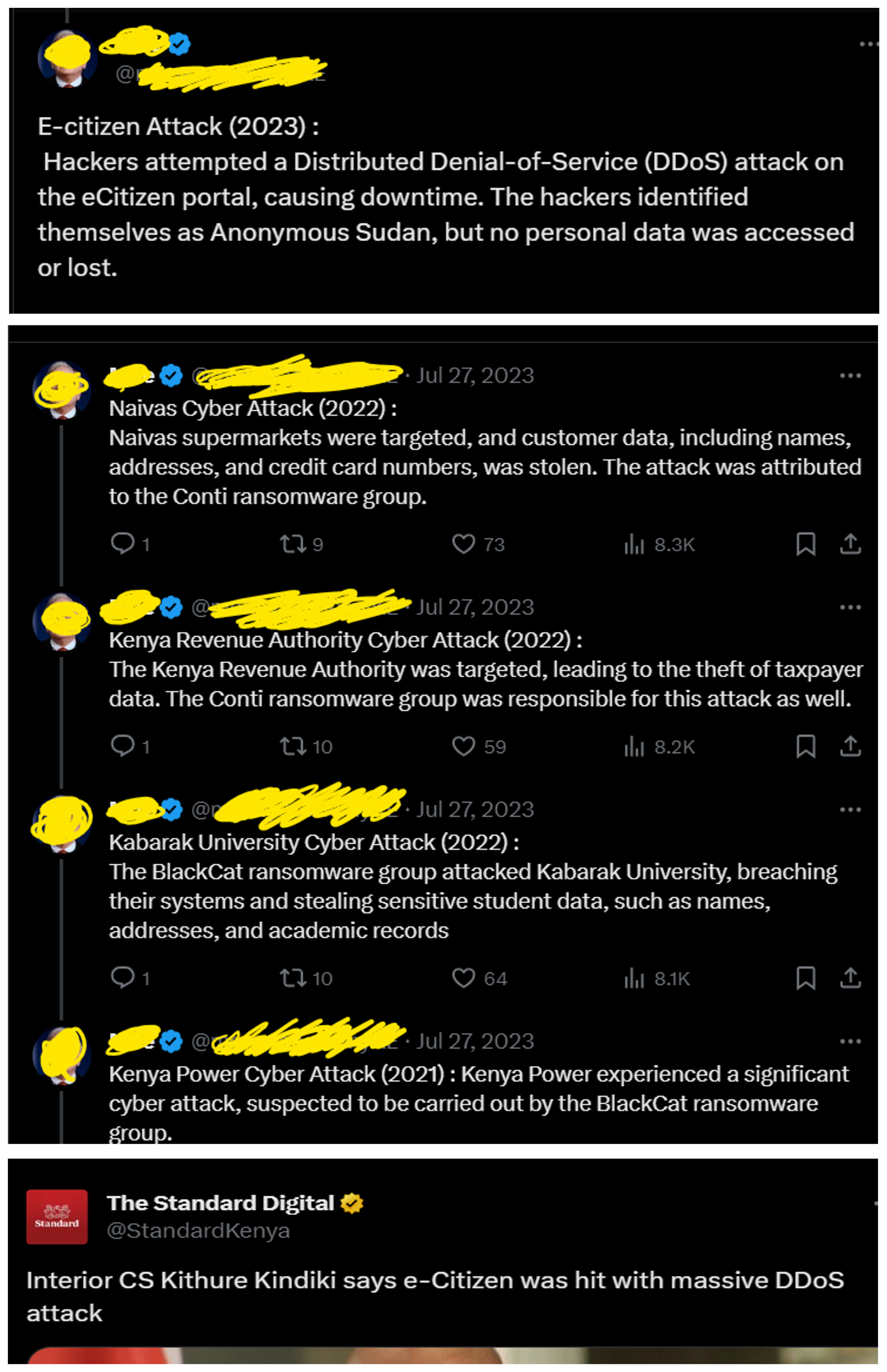

The Problem

In this section, we explained some of the challenges we face in daily operations while running software products. A system's enemies don't sleep 🤔🛌. They are watching 👀 your every move: attackers send malicious traffic in various forms to deny your legitimate users access to your services. One of the most common attacks is the Distributed Denial of Service (DDoS) attack.

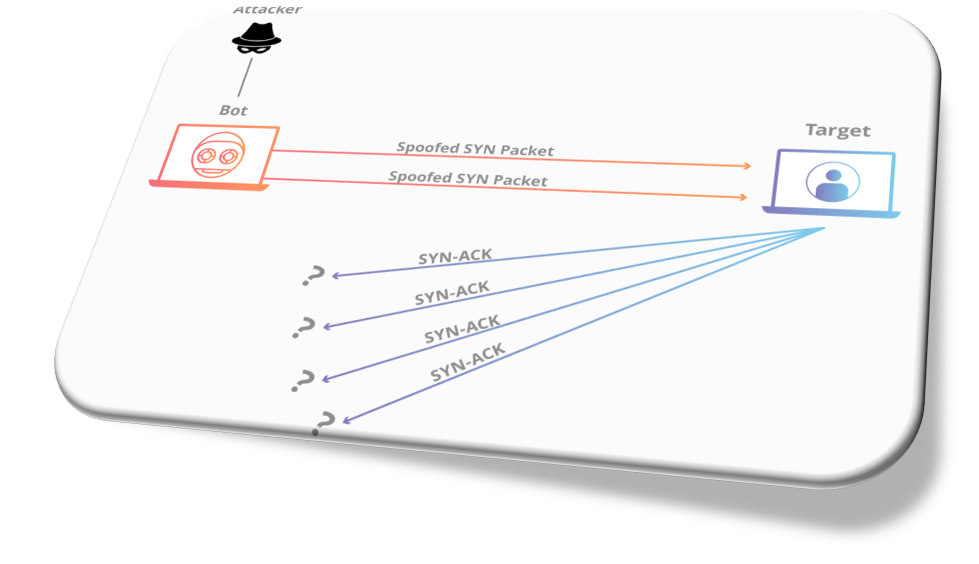

The attacker sends a huge volume of SYN (synchronize) packets with spoofed, that is fake, source IP addresses. At the very least, this keeps your application too busy to handle legitimate requests.

For each SYN it receives, the target responds with a SYN-ACK (synchronize-acknowledge) packet to acknowledge the connection request.

The server then waits for the final ACK packet, which never arrives because the spoofed addresses never complete the handshake, while the attacker keeps sending more spoofed packets.

These half-open connections pile up until they overwhelm the server, making the service unavailable to end users.
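The toy simulation below illustrates why the flood works: every spoofed SYN holds a slot in a fixed-size queue of half-open connections until it times out, so a legitimate client that arrives while the queue is full is turned away. The backlog size, attack rate and timeout are made-up parameters, and the model ignores real kernel defences such as SYN cookies.

```python
from collections import deque


def simulate_syn_flood(backlog_size, spoofed_per_tick, timeout_ticks, ticks):
    """Return (served, dropped) legitimate connections in a toy SYN-flood model."""
    half_open = deque()  # expiry tick of each half-open connection, oldest first
    served = dropped = 0
    for tick in range(ticks):
        # Half-open entries whose final ACK never came are freed after the timeout.
        while half_open and half_open[0] <= tick:
            half_open.popleft()
        # Each spoofed SYN occupies a backlog slot until it times out.
        for _ in range(spoofed_per_tick):
            if len(half_open) < backlog_size:
                half_open.append(tick + timeout_ticks)
        # One legitimate client per tick needs a free slot to start its handshake.
        if len(half_open) < backlog_size:
            served += 1  # it sends the final ACK, so its slot is released immediately
        else:
            dropped += 1
    return served, dropped


print(simulate_syn_flood(backlog_size=8, spoofed_per_tick=0, timeout_ticks=5, ticks=20))  # (20, 0)
print(simulate_syn_flood(backlog_size=8, spoofed_per_tick=4, timeout_ticks=5, ticks=20))  # (1, 19)
```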

Another scenario we discussed was a surge of traffic on your web platform. We went through sample real-world scenarios where increased traffic affected platform availability.

The big question is how we can keep our application available to end users and protect our platforms from such attacks.

Autoscaling: EC2 Autoscaling

We wanted a way to make our platform more reliable, fault-tolerant and highly available to end users despite increased traffic. Below are a couple of key terms to define when designing your architecture.

Resilient: A resilient architecture can recover from failures. Resilience is commonly measured against a recovery time objective (RTO), the maximum acceptable time to restore service after a failure.

Fault-tolerant: Fault tolerance is the ability of your workload to remain operational even if some of the components used to build the system fail.

Highly available: High availability is not about preventing system failure but about the system's ability to recover quickly from it, keeping downtime to a minimum.
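A common back-of-the-envelope way to see why redundancy (for example, an instance in each of two AZs) raises availability: if the system stays up as long as any one copy is up, and copies fail independently, the chance that everything is down is the product of the individual failure probabilities. The 99% per-instance figure below is hypothetical, and real failures are rarely fully independent, so treat the result as an optimistic estimate.

```python
import math


def parallel_availability(availabilities):
    """Availability of redundant copies; the system is down only if every copy is down.

    Assumes the copies fail independently of each other.
    """
    return 1 - math.prod(1 - a for a in availabilities)


def downtime_minutes_per_30_days(availability):
    """Expected downtime over a 30-day month for a given availability."""
    return (1 - availability) * 30 * 24 * 60


single = parallel_availability([0.99])          # one instance in one AZ (hypothetical 99%)
multi_az = parallel_availability([0.99, 0.99])  # one instance in each of two AZs
print(round(single, 6), round(downtime_minutes_per_30_days(single), 1))      # 0.99 432.0
print(round(multi_az, 6), round(downtime_minutes_per_30_days(multi_az), 2))  # 0.9999 4.32
```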

With Autoscaling, the idea was to respond to scaling needs as they happen and remove the manual overhead of adding and removing servers.

Amazon EC2 Autoscaling helps you ensure that you have the right number of EC2 instances to handle the load for your application.

It maintains high availability and manages the capacity of our application instances by automatically increasing and decreasing it based on demand, so the application always has the right capacity to handle the load.

Because you pay only for the instances you use, launching instances only when they are needed and terminating them when they are not in use saves money and helps optimize cost.
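A rough way to see the saving is to count instance-hours, since On-Demand EC2 charges accrue for the time each instance runs. The sketch compares a fleet sized for the daily peak around the clock with capacity that follows demand hour by hour. The demand profile, the 100 requests/second per instance and the minimum of 2 instances are all hypothetical, and the model ignores instance start-up time and billing granularity; multiply the results by your region's hourly price to get a cost.

```python
import math


def autoscaled_instance_hours(hourly_demand, capacity_per_instance, minimum=1):
    """Instance-hours when capacity follows demand each hour (never below `minimum`)."""
    return sum(
        max(minimum, math.ceil(demand / capacity_per_instance))
        for demand in hourly_demand
    )


def fixed_peak_instance_hours(hourly_demand, capacity_per_instance, minimum=1):
    """Instance-hours when the fleet is sized for the peak hour all day."""
    peak = max(minimum, math.ceil(max(hourly_demand) / capacity_per_instance))
    return peak * len(hourly_demand)


# Hypothetical day: 20 quiet hours at 150 req/s and a 4-hour peak at 900 req/s.
demand = [150] * 20 + [900] * 4
print(autoscaled_instance_hours(demand, 100, minimum=2))  # 76
print(fixed_peak_instance_hours(demand, 100, minimum=2))  # 216
```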

Autoscaling has three key components:

a. Launch Template: We needed a standardized way to specify the parameters required to launch EC2 instances in an Autoscaling group. We used a launch template, which determines what will be launched, such as the Amazon Machine Image (AMI), instance type, security groups and user data.

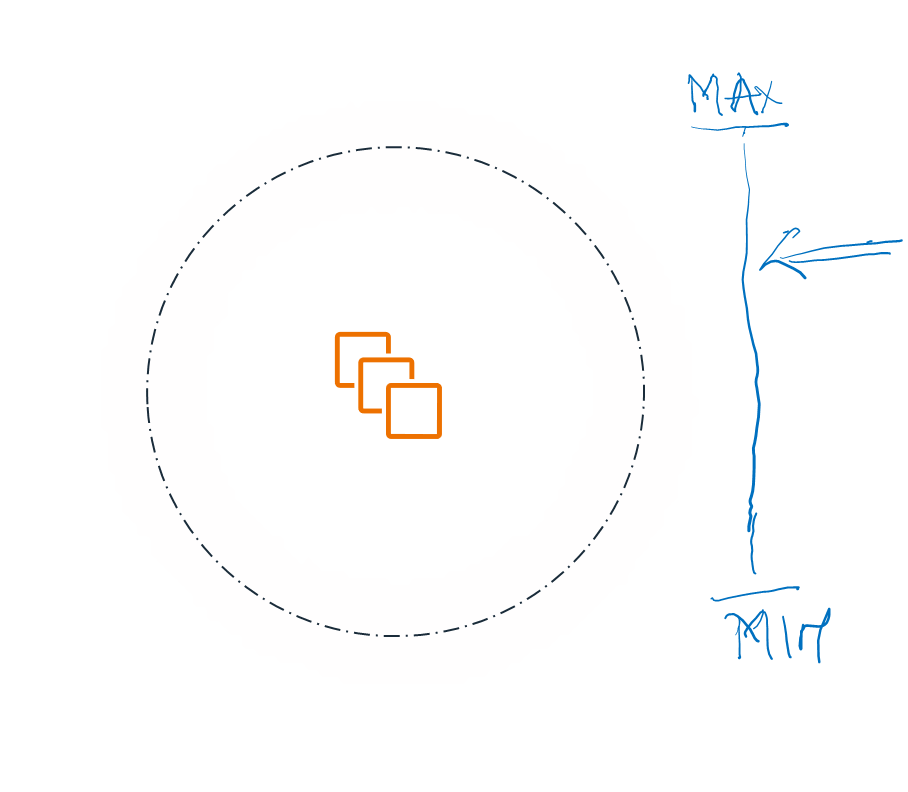
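The parameters a launch template captures can be sketched as the keyword arguments for the EC2 `create_launch_template` call in boto3, the AWS SDK for Python. The template name, AMI ID, security group ID, instance type and user-data script below are placeholders, not values from the workshop, and the script assumes an Amazon Linux 2023 AMI. Launch template user data must be base64-encoded. The actual API call is left as a comment so the sketch runs without AWS credentials.

```python
import base64

# Hypothetical boot script that installs and starts a web server.
USER_DATA = """#!/bin/bash
dnf install -y nginx
systemctl enable --now nginx
"""


def launch_template_request(name, ami_id, instance_type, security_group_ids, user_data):
    """Build keyword arguments for boto3's ec2.create_launch_template()."""
    return {
        "LaunchTemplateName": name,
        "LaunchTemplateData": {
            "ImageId": ami_id,
            "InstanceType": instance_type,
            "SecurityGroupIds": security_group_ids,
            # Launch templates expect user data as base64-encoded text.
            "UserData": base64.b64encode(user_data.encode()).decode(),
        },
    }


request = launch_template_request(
    name="web-app-template",                      # placeholder name
    ami_id="ami-0123456789abcdef0",               # placeholder AMI ID
    instance_type="t3.micro",
    security_group_ids=["sg-0123456789abcdef0"],  # placeholder security group
    user_data=USER_DATA,
)

# With AWS credentials configured, the template could then be created with:
#   import boto3
#   boto3.client("ec2").create_launch_template(**request)
print(request["LaunchTemplateData"]["InstanceType"])
```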

b. Autoscaling Group: In an Autoscaling group, we specify the number of EC2 instances as a minimum, maximum and desired capacity.

You specify the minimum number of instances in each Autoscaling group, so that your group never goes below this size.

The maximum number of instances ensures that the group never goes above this size.

Desired capacity specifies the number of healthy instances the Autoscaling group should have at all times. It must lie between the minimum and the maximum.
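The relationship between these three numbers, and the idea that the group keeps its desired number of healthy instances, can be modelled as a small reconciliation step: reject a desired capacity outside [minimum, maximum], drop unhealthy instances, then launch replacements until the desired count is reached. This is a simplified sketch, not how the service is implemented. The instance IDs are made up, and where the real service uses a termination policy to choose which surplus instances to remove, this sketch simply drops them from the end of the list.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Instance:
    instance_id: str
    healthy: bool = True


def reconcile(instances, desired, minimum, maximum, new_ids):
    """Return the fleet after one pass of a toy Autoscaling control loop."""
    if not minimum <= desired <= maximum:
        raise ValueError("desired capacity must be between minimum and maximum")
    # Unhealthy instances are terminated...
    fleet = [i for i in instances if i.healthy]
    # ...and new instances are launched until the desired capacity is met.
    while len(fleet) < desired:
        fleet.append(Instance(next(new_ids)))
    # If desired capacity was lowered, drop the surplus from the end of the list.
    return fleet[:desired]


new_ids = (f"i-new{n}" for n in count(1))  # hypothetical ID generator
fleet = [Instance("i-a"), Instance("i-b", healthy=False), Instance("i-c")]
fleet = reconcile(fleet, desired=3, minimum=2, maximum=6, new_ids=new_ids)
print([i.instance_id for i in fleet])  # ['i-a', 'i-c', 'i-new1']
```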

c. Scaling Policy: The idea here is to tell Autoscaling when and how to scale. Scaling can be manual, scheduled or dynamic, or you can use Autoscaling simply to maintain a specific number of instances.

Manual Scaling: Adjust the desired capacity of your Autoscaling group at any time. This is useful when automatic scaling is not needed or you want to make one-time capacity adjustments.

Scheduled Scaling: Set up automatic scaling for your application based on predictable load changes by creating scheduled actions that increase or decrease capacity at specific times. This lets you optimize both cost and performance: your application has enough capacity to handle increased traffic during peak times, but does not overprovision unneeded capacity at other times.
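A daily schedule can be modelled as a list of actions, each setting a new desired capacity at a given hour. The capacity in effect at any time is the one set by the most recent action, carried over from the previous day before the first action fires. The 08:00 and 20:00 times and the capacities are hypothetical. On AWS, recurring scheduled actions are defined with a cron-style recurrence, for example through boto3's `put_scheduled_update_group_action`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledAction:
    hour: int              # hour of day (0-23) when the action fires
    desired_capacity: int


def desired_at(hour, actions):
    """Desired capacity in effect at `hour` under a daily recurring schedule."""
    ordered = sorted(actions, key=lambda a: a.hour)
    in_effect = ordered[-1]  # before today's first action, yesterday's last one applies
    for action in ordered:
        if action.hour <= hour:
            in_effect = action
    return in_effect.desired_capacity


# Hypothetical schedule: scale out for business hours, scale in for the night.
schedule = [ScheduledAction(8, 6), ScheduledAction(20, 2)]
print([desired_at(h, schedule) for h in (3, 8, 12, 20, 23)])  # [2, 6, 6, 2, 2]
```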

With a scaling policy specified, Autoscaling modifies the group's desired capacity, launching and terminating instances based on the demands of the application.
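The dynamic option is commonly configured as a target-tracking policy, which adjusts capacity to keep a metric such as average CPU utilization near a target value. A simplified model is to scale capacity in proportion to how far the metric is from its target, then clamp the result to the group's minimum and maximum. This proportional rule is an approximation for intuition, not AWS's exact algorithm, which adds refinements such as instance warm-up; the 50% target and the group limits are hypothetical.

```python
import math


def target_tracking_desired(current_capacity, current_metric, target_metric, minimum, maximum):
    """Approximate the capacity a target-tracking policy aims for.

    Scales capacity in proportion to metric/target, rounds up so the target
    is not exceeded, then clamps to the group's minimum and maximum.
    """
    proposed = math.ceil(current_capacity * current_metric / target_metric)
    return max(minimum, min(maximum, proposed))


# Hypothetical group: 4 instances, target of 50% average CPU, limits 2 to 10.
print(target_tracking_desired(4, 90, 50, minimum=2, maximum=10))  # 8  (scale out)
print(target_tracking_desired(4, 20, 50, minimum=2, maximum=10))  # 2  (scale in, held at minimum)
print(target_tracking_desired(8, 95, 50, minimum=2, maximum=10))  # 10 (capped at maximum)
```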

Load Balancing

We added a load balancer, in this case an Application Load Balancer (ALB), to distribute incoming traffic across all the registered targets.

- Load balancing directs and controls traffic between clients and the application servers, improving performance, scalability and security.

How a load balancer improves the performance of your application

By reducing response times, since no single server has to absorb all of the traffic.

By distributing the load evenly between servers (for example, with a round-robin algorithm) to improve application performance.
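Round robin hands each new request to the next target in a fixed rotation. The sketch below shows the idea with Python's `itertools.cycle`; the target names are hypothetical, and a real load balancer also skips targets that fail health checks.

```python
from collections import Counter
from itertools import cycle


def round_robin(targets, requests):
    """Pair each request with the next target in a fixed rotation."""
    rotation = cycle(targets)
    return [(request, next(rotation)) for request in requests]


assignments = round_robin(["i-a", "i-b", "i-c"], range(9))
print(assignments[:4])  # [(0, 'i-a'), (1, 'i-b'), (2, 'i-c'), (3, 'i-a')]
print(Counter(target for _, target in assignments))  # each target receives 3 requests
```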

ASG with Elastic Load Balancer

With all the instances managed by the ASG registered with the load balancer, Elastic Load Balancing distributes traffic across them so users reach a load-balanced application.

The ALB performs health checks and ensures user requests are directed only to healthy targets.

When the group scales out, the load balancer sends traffic to the newly added instances once they pass health checks, spreading the load. When it scales in, the load balancer stops sending new requests to the instances being removed while letting in-flight requests finish (connection draining, known as the deregistration delay on ALB target groups).
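The toy target group below ties these behaviours together: new targets receive traffic only after a passing health check, unhealthy targets are skipped, and a deregistered (draining) target gets no new requests. Routing is round robin over the eligible targets. The class and method names are hypothetical and only model the behaviour described above; they are not the Elastic Load Balancing API, and in-flight requests and the draining timeout are not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class TargetGroup:
    """Toy model: round-robin routing over healthy, non-draining targets."""
    health: dict = field(default_factory=dict)  # target id -> passed last health check?
    draining: set = field(default_factory=set)  # targets being removed on scale-in
    _counter: int = 0

    def register(self, target_id, healthy=False):
        # Newly launched targets start unhealthy until a health check passes.
        self.health[target_id] = healthy

    def record_health_check(self, target_id, passed):
        self.health[target_id] = passed

    def deregister(self, target_id):
        # Draining: stop sending new requests to this target.
        self.draining.add(target_id)

    def route(self):
        eligible = sorted(
            t for t, ok in self.health.items() if ok and t not in self.draining
        )
        if not eligible:
            raise RuntimeError("no healthy targets available")
        target = eligible[self._counter % len(eligible)]
        self._counter += 1
        return target


tg = TargetGroup()
tg.register("i-a", healthy=True)
tg.register("i-b", healthy=True)
tg.register("i-new")                          # scale-out: launched, not yet healthy
print([tg.route() for _ in range(4)])         # ['i-a', 'i-b', 'i-a', 'i-b']
tg.record_health_check("i-new", passed=True)  # passes its health check
tg.deregister("i-a")                          # scale-in: i-a starts draining
print([tg.route() for _ in range(4)])         # ['i-b', 'i-new', 'i-b', 'i-new']
```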

Conclusion

In the session, we covered some best practices for configuring a highly available, scalable and cost-efficient architecture.

We looked into the key AWS services to leverage while building this kind of architecture. Amazon VPC, the key networking building block, provides a logically isolated private network within your AWS account.

Within the VPC, you subdivide the base network using CIDR and create different subnets depending on your application needs. To ensure high availability of your architecture, consider a Multi-AZ design behind a load balancer.

The Application Load Balancer distributes traffic evenly. Additionally, it comes with built-in security, scalability and availability features that improve your application's performance.

To automate launching EC2 instances, we used an EC2 Autoscaling group, which integrates with the Application Load Balancer.

Some resources:

Find the closest region for your workloads: CloudPing.

Understand the 6 pillars of the AWS Well-Architected Framework.

Automate your EC2 instances with EC2 Autoscaling.

Balance traffic across your instances with Elastic Load Balancing.

Written by

Collins Jumah

Frontend - Cloud Specialist | Technical Content Developer | Community Builder