Day 49 - INTERVIEW QUESTIONS ON AWS

Siri Chandana

Table of contents

- Name 5 AWS services you have used and describe their use cases.

- What tools are used to send logs to the cloud environment?

- What are IAM roles? How do you create and manage them?

- How do you upgrade or downgrade a system with zero downtime?

- What is infrastructure as code and how do you use it?

- What is a load balancer? Give scenarios of each kind of balancer based on your experience.

- What is CloudFormation and what is it used for?

- What is the difference between AWS CloudFormation and AWS Elastic Beanstalk?

- What kinds of security attacks can occur on the cloud, and how can we minimize them?

- Can we recover an EC2 instance when we have lost the key pair?

- What is a gateway?

- What is the difference between Amazon RDS, DynamoDB, and Redshift?

- Would you host a website on S3? Why or why not?

Name 5 AWS services you have used and describe their use cases.

Amazon S3 (Simple Storage Service): Amazon S3 provides object storage that allows you to store and retrieve large amounts of data. It is commonly used for backup and restore, archiving, content distribution, static website hosting, and data storage for various applications.

Amazon EC2 (Elastic Compute Cloud): Amazon EC2 offers scalable virtual servers in the cloud. It enables you to quickly provision and manage compute capacity, making it suitable for hosting web applications, running batch processing tasks, and deploying enterprise applications.

AWS Lambda: AWS Lambda is a serverless computing service that allows you to run code without provisioning or managing servers. It is used for building event-driven architectures, serverless applications, and automating tasks. Lambda functions can be triggered by various AWS services or external events.

Amazon RDS (Relational Database Service): Amazon RDS provides managed relational databases in the cloud. It supports various database engines, such as MySQL, PostgreSQL, Oracle, and SQL Server. RDS simplifies database administration tasks, including backups, software patching, and storage auto scaling, making it suitable for running applications that require a relational database.

Amazon VPC (Virtual Private Cloud): Amazon VPC lets you provision a logically isolated section of the AWS cloud and launch resources into a virtual network that you define. You control the networking environment, including resource placement (IP ranges and subnets), connectivity (route tables and gateways), and security. A VPC can also be connected to on-premises networks, so you can build networks that span AWS resources and on-premises resources. Security groups and network ACLs improve security by applying rules to inbound and outbound connections, and VPC Flow Logs, delivered to Amazon S3 or Amazon CloudWatch Logs, give visibility into network dependencies and traffic patterns.

These are just a few examples of the many AWS services available. Each service offers a range of features and use cases, allowing developers and businesses to build scalable and reliable applications in the cloud.
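To make the Lambda use case concrete, here is a minimal sketch of a Python handler that reacts to an S3 upload notification. The `lambda_handler(event, context)` signature and the `Records[].s3.bucket.name` / `Records[].s3.object.key` fields follow the documented S3 event shape; the bucket name, object key, and the "processing" step are hypothetical placeholders.

```python
import urllib.parse


def lambda_handler(event, context):
    """Minimal handler for an S3 "object created" notification (illustrative only)."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications (spaces become "+").
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # A real function would do its work here (resize an image, scan a file, index data...).
        processed.append(f"s3://{bucket}/{key}")
    return {"processed": processed}


if __name__ == "__main__":
    # Hand-written sample event containing only the fields the handler reads.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "example-bucket"},
                    "object": {"key": "reports/2023+summary.csv"}}}
        ]
    }
    print(lambda_handler(sample_event, None))
    # {'processed': ['s3://example-bucket/reports/2023 summary.csv']}
```

Because the handler only reads the event dictionary, it can be tested locally like this before being wired to an S3 trigger.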

What tools are used to send logs to the cloud environment?

Here are some common tools and services used for log management in a cloud environment:

AWS CloudTrail: It is an AWS service that helps you enable operational and risk auditing, governance, and compliance of your AWS account. Actions taken by a user, role, or an AWS service are recorded as events in CloudTrail. CloudTrail has a validation feature that you can use to determine whether a log file was modified, deleted, or unchanged after CloudTrail delivered it.

Amazon CloudWatch: Monitors your AWS resources and the applications you run on AWS in real time. You can use CloudWatch to collect and track metrics, which are variables you can measure for your resources and applications, and configure alarms that automatically initiate actions on your behalf. For logs specifically, the CloudWatch agent can ship system and application log files from EC2 instances and on-premises servers to CloudWatch Logs.

VPC Flow Logs: A feature of Amazon Virtual Private Cloud (Amazon VPC) that captures information about the IP traffic going to and from network interfaces in your VPC. Flow log data for a monitored network interface is recorded as flow log records, each of which represents a network flow in your VPC.
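Flow log records are plain space-separated text, so they are easy to inspect programmatically. The sketch below assumes the default (version 2) record format, whose 14 fields are listed in `DEFAULT_FIELDS`; a custom log format would need a different field list. The sample records, account ID, and ENI ID are made up for illustration.

```python
# Field order of the default VPC Flow Logs record format (version 2).
DEFAULT_FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]
NUMERIC_FIELDS = {"version", "srcport", "dstport", "protocol", "packets", "bytes", "start", "end"}


def parse_flow_log_record(line):
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(f"expected {len(DEFAULT_FIELDS)} fields, got {len(values)}")
    record = dict(zip(DEFAULT_FIELDS, values))
    for name in NUMERIC_FIELDS:
        # "-" marks a missing value, e.g. in NODATA or SKIPDATA records.
        record[name] = None if record[name] == "-" else int(record[name])
    return record


def rejected_ssh_sources(lines):
    """Source IPs whose TCP traffic (protocol 6) to port 22 was rejected."""
    sources = []
    for line in lines:
        r = parse_flow_log_record(line)
        if r["protocol"] == 6 and r["dstport"] == 22 and r["action"] == "REJECT":
            sources.append(r["srcaddr"])
    return sources


if __name__ == "__main__":
    sample = [
        "2 123456789012 eni-0example 203.0.113.12 10.0.1.5 49152 22 6 3 180 1700000000 1700000060 REJECT OK",
        "2 123456789012 eni-0example 10.0.1.5 10.0.2.8 443 51000 6 10 4200 1700000000 1700000060 ACCEPT OK",
        "2 123456789012 eni-0example - - - - - - - 1700000000 1700000060 - NODATA",
    ]
    print(rejected_ssh_sources(sample))  # ['203.0.113.12']
```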

What are IAM roles? How do you create and manage them?

IAM Roles:

IAM roles are similar to IAM users but are intended for use by AWS services or applications running on AWS resources. Roles provide a way to define a set of permissions that can be assumed by trusted entities. Instead of using long-term credentials like access keys, roles use temporary security credentials that are dynamically generated and rotated by AWS.

Roles are often used in scenarios where an AWS service or application needs to access other AWS resources securely. For example, you can create a role that grants permissions to an EC2 instance to access other AWS services, such as S3 or DynamoDB, without embedding access keys directly in the instance.

To create and manage IAM (Identity and Access Management) roles in AWS:

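Sign in to the AWS Management Console.

Navigate to the IAM console.

Click "Roles" and then "Create role."

Choose the trusted entity and use case (e.g., the EC2 service), and attach permission policies.

Review and create the role.

Attach the role to AWS resources (for an EC2 instance, this is done through an instance profile, which the console creates for you).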

Modify permissions by editing the role's policies.

AWS resources automatically use the attached role for permissions.

IAM roles automatically rotate temporary credentials.
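A role is defined by two kinds of JSON policy documents: a trust policy (who may assume the role) and one or more permission policies (what the role may do). This sketch builds both for the EC2-to-S3 example mentioned earlier, using only the standard library; the bucket name is hypothetical. Saved to files, documents like these can be passed to `aws iam create-role --assume-role-policy-document` and `aws iam put-role-policy --policy-document`.

```python
import json


def ec2_trust_policy():
    """Trust policy: allows the EC2 service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def s3_read_only_policy(bucket):
    """Permission policy: list one bucket and read its objects (bucket name is hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetObject"],
                # ListBucket applies to the bucket ARN, GetObject to the object ARNs.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ec2_trust_policy(), indent=2))
    print(json.dumps(s3_read_only_policy("example-app-bucket"), indent=2))
```

Keeping the permission policy scoped to one bucket follows the least-privilege idea: the instance gets only the access it needs, and no access keys are stored on it.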

How do you upgrade or downgrade a system with zero downtime?

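Upgrading or downgrading a system with zero downtime typically involves a rolling, blue/green, or canary deployment: new capacity is brought up and verified before old capacity is removed. For a single EC2 instance, such as one that needs a different instance type, a simplified process looks like this: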

Open the EC2 console.

Choose an operating system AMI.

Launch an instance with the new instance type.

Install all the updates.

Install your applications.

Test the instance to confirm that it is working.

If it works, shift traffic to the new instance (for example, by registering it with the load balancer) and then retire the old instance.

Because traffic moves to the new instance only after it has been verified, the upgrade or downgrade happens with zero or near-zero downtime.
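The key idea behind this process is ordering: new capacity is added and health-checked before old capacity is removed. This small simulation shows that ordering for a group of instances replaced one at a time (a rolling replacement). `TargetGroup`, `Instance`, and the `launch` callable are hypothetical stand-ins, not AWS APIs; in practice, a feature such as an Auto Scaling group instance refresh or a deployment tool performs these steps.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Instance:
    name: str
    version: str
    healthy: bool = True  # result of the (simulated) health check


@dataclass
class TargetGroup:
    """Hypothetical stand-in for the pool of instances behind a load balancer."""
    members: list = field(default_factory=list)

    def healthy_count(self):
        return sum(1 for i in self.members if i.healthy)


def rolling_replace(group, new_version, launch):
    """Replace instances one at a time; return the healthy count after each step."""
    history = []
    for old in list(group.members):
        new = launch(new_version)
        if not new.healthy:
            # Halt the rollout; the remaining old instances keep serving traffic.
            raise RuntimeError(f"{new.name} failed health checks; rollout halted")
        group.members.append(new)   # start routing traffic to the verified instance
        group.members.remove(old)   # only then drain and retire the old one
        history.append(group.healthy_count())
    return history


if __name__ == "__main__":
    ids = count(1)

    def launch(version):
        # Hypothetical launcher: a real one would call EC2 and wait for health checks.
        return Instance(name=f"i-new-{next(ids)}", version=version)

    tg = TargetGroup([Instance("i-old-1", "v1"), Instance("i-old-2", "v1")])
    print(rolling_replace(tg, "v2", launch))  # [2, 2]
    print([i.version for i in tg.members])    # ['v2', 'v2']
```

The healthy count never drops below the original two, which is what "zero downtime" means in this model; a failed health check stops the rollout while the old instances are still in service.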

What is infrastructure as code and how do you use it?

Infrastructure as code (IaC) is a form of configuration management that codifies an organization's infrastructure resources into text files. These files are committed to a version control system such as Git, which enables feature-branch and pull-request workflows, the foundation of CI/CD. This makes it easier to manage and scale infrastructure in a consistent and reliable manner.

It helps streamline infrastructure management, reduces manual effort, and increases the reliability and efficiency of infrastructure deployments. Tools like AWS CloudFormation and Terraform enable the automation of infrastructure deployment.
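Tools such as Terraform (`terraform plan`) and CloudFormation (change sets) compare the infrastructure described in code with what actually exists and report the changes before applying them. The function below is a simplified, hypothetical illustration of that comparison, not how either tool is implemented; resources are modeled as plain dictionaries keyed by a resource name.

```python
def plan(desired, actual):
    """Compare desired resources (from code) with existing ones and list the changes."""
    to_create = sorted(desired.keys() - actual.keys())
    to_delete = sorted(actual.keys() - desired.keys())
    to_update = sorted(
        name for name in desired.keys() & actual.keys() if desired[name] != actual[name]
    )
    return {"create": to_create, "update": to_update, "delete": to_delete}


if __name__ == "__main__":
    # Hypothetical resources: what the code declares vs. what currently exists.
    desired = {"web-sg": {"port": 443}, "app-bucket": {"versioning": True}}
    actual = {"web-sg": {"port": 80}, "old-queue": {}}
    print(plan(desired, actual))
    # {'create': ['app-bucket'], 'update': ['web-sg'], 'delete': ['old-queue']}
```

Because the desired state lives in version-controlled files, this kind of diff can be reviewed in a pull request before anything changes in the account.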

What is a load balancer? Give scenarios of each kind of balancer based on your experience.

A load balancer is a network device or software component that distributes incoming network traffic across multiple servers or resources to optimize performance, maximize resource utilization, and ensure high availability. It acts as a traffic manager, evenly distributing requests among server instances to prevent any single server from becoming overwhelmed.
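As a minimal sketch of "evenly distributing requests", here is a round-robin balancer that skips targets marked unhealthy, roughly what a load balancer does with the results of its health checks. The class and target names are hypothetical; AWS load balancers implement this (and other algorithms) internally.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical round-robin balancer that skips unhealthy targets."""

    def __init__(self, targets):
        self.targets = list(targets)
        self.healthy = set(self.targets)
        self._ring = cycle(self.targets)

    def mark_unhealthy(self, target):
        self.healthy.discard(target)  # e.g. after failed health checks

    def mark_healthy(self, target):
        self.healthy.add(target)

    def next_target(self):
        # Try each target at most once per call so we never loop forever.
        for _ in range(len(self.targets)):
            target = next(self._ring)
            if target in self.healthy:
                return target
        raise RuntimeError("no healthy targets available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
    print([lb.next_target() for _ in range(4)])  # ['web-1', 'web-2', 'web-3', 'web-1']
    lb.mark_unhealthy("web-2")
    print([lb.next_target() for _ in range(3)])  # ['web-3', 'web-1', 'web-3']
```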

Based on my experience, here are three common types of load balancers and scenarios in which they are typically used:

Application Load Balancer (ALB):

Scenario 1: Web Application Load Balancing: An ALB is commonly used to distribute HTTP/HTTPS traffic across multiple web servers. It performs advanced content-based routing and supports features such as path-based routing, host-based routing, and redirects. This makes it suitable for load balancing traffic to different web applications or microservices within an application.

Scenario 2: WebSocket Load Balancing: ALBs also support WebSocket protocol, making them ideal for load balancing real-time applications that require bidirectional communication between clients and servers, such as chat applications, collaboration tools, and gaming platforms.
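Path-based and host-based routing can be pictured as an ordered list of listener rules, each mapping a condition to a target group, with a default action when nothing matches. The rule set and target group names below are hypothetical, and `fnmatch` only approximates ALB wildcard matching (ALB supports `*` and `?`, while `fnmatch` also interprets `[...]` character classes).

```python
from fnmatch import fnmatchcase

# Hypothetical listener rules: (priority, host pattern, path pattern, target group).
RULES = [
    (10, "api.example.com", "*", "api-targets"),
    (20, "*", "/images/*", "static-targets"),
    (30, "*", "/orders/*", "orders-targets"),
]
DEFAULT_TARGETS = "web-targets"  # the listener's default action


def route(host, path, rules=RULES):
    # Lower priority numbers are evaluated first; the first matching rule wins.
    for _, host_pattern, path_pattern, target in sorted(rules, key=lambda r: r[0]):
        # Host names are compared case-insensitively; paths case-sensitively.
        if fnmatchcase(host.lower(), host_pattern) and fnmatchcase(path, path_pattern):
            return target
    return DEFAULT_TARGETS


if __name__ == "__main__":
    print(route("api.example.com", "/orders/1"))      # api-targets
    print(route("www.example.com", "/images/a.png"))  # static-targets
    print(route("www.example.com", "/orders/42"))     # orders-targets
    print(route("www.example.com", "/"))              # web-targets
```

Rule priority matters: the API host rule wins for `/orders/1` on `api.example.com` because it is evaluated before the generic `/orders/*` rule.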

Network Load Balancer (NLB):

Scenario 1: TCP/UDP Load Balancing: NLBs are designed to handle high volumes of traffic at the transport layer (TCP/UDP). They are commonly used for balancing traffic to stateless network services that don't require advanced application-level features, such as DNS servers, VPN gateways, or IoT backends.

Scenario 2: High Performance and Low Latency Load Balancing: NLBs are known for their high throughput and low latency characteristics, making them suitable for scenarios that demand ultra-fast and highly responsive load balancing, such as high-frequency trading platforms or real-time data streaming applications.

Classic Load Balancer (CLB):

Scenario 1: Legacy Applications: CLBs are AWS's previous-generation load balancer and are primarily kept for backward compatibility or for specific use cases that depend on CLB features not available in ALBs or NLBs.

Scenario 2: Simple HTTP Load Balancing: CLBs can be used for basic HTTP/HTTPS load balancing scenarios where advanced routing or WebSocket support is not required. For example, if you have a simple web application with a single endpoint that requires load balancing, a CLB can be a straightforward choice.

It's important to note that these load balancer types are specific to AWS environments. Load balancers from other cloud providers or on-premises solutions may have different names or features, but the underlying concept of distributing traffic remains the same.

The selection of a specific load balancer type depends on the requirements of your application, the type of traffic, and the desired features and capabilities needed to achieve optimal performance and availability.

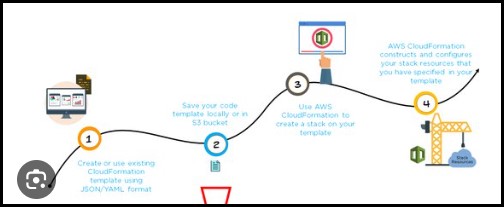

What is CloudFormation and what is it used for?

AWS CloudFormation is a service provided by Amazon Web Services (AWS) that allows you to define and manage your infrastructure resources as code. It enables you to create, update, and delete a collection of AWS resources in a predictable and repeatable manner.

CloudFormation uses declarative JSON or YAML templates to describe the desired state of your infrastructure. These templates define the resources, their properties, and any dependencies among them. By using CloudFormation, you can provision and configure your infrastructure resources in a consistent and automated way.

AWS CloudFormation is used to automate and manage infrastructure deployments using code. It provides the ability to define infrastructure as code, automate the provisioning and management of resources, ensure consistency and reproducibility, and integrate with other AWS services. CloudFormation simplifies the process of managing complex infrastructure environments and promotes efficient, reliable, and scalable infrastructure deployments.
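A minimal template helps show what "declarative" means here: you state which resources should exist, and CloudFormation works out how to create them. This sketch builds a one-bucket template as a Python dictionary and prints it as JSON; the logical ID `AppBucket` is hypothetical. The resulting JSON could then be deployed with, for example, `aws cloudformation deploy --template-file template.json --stack-name example-stack` (stack name hypothetical).

```python
import json


def s3_bucket_template(versioning=True):
    """Minimal CloudFormation template declaring a single S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example stack with one S3 bucket",
        "Resources": {
            # "AppBucket" is a hypothetical logical ID used inside the template.
            "AppBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {
                        "Status": "Enabled" if versioning else "Suspended"
                    }
                },
            }
        },
        "Outputs": {
            # Ref on an AWS::S3::Bucket returns the generated bucket name.
            "BucketName": {"Value": {"Ref": "AppBucket"}}
        },
    }


if __name__ == "__main__":
    print(json.dumps(s3_bucket_template(), indent=2))
```

Changing `versioning` and redeploying updates the same stack in place, which is the repeatable, predictable behavior described above.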

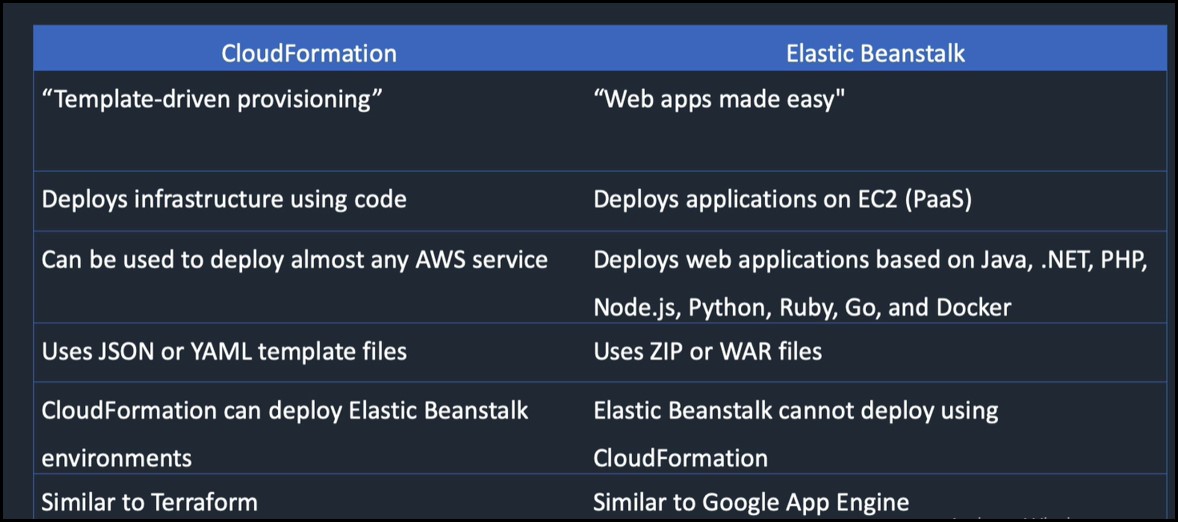

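What is the difference between AWS CloudFormation and AWS Elastic Beanstalk?

AWS CloudFormation is an infrastructure-as-code service: you describe any set of AWS resources (networks, databases, queues, IAM roles, and so on) in a template, and CloudFormation provisions and updates them as a stack. AWS Elastic Beanstalk is a platform-as-a-service for deploying applications: you upload your code, and Elastic Beanstalk handles capacity provisioning, load balancing, auto scaling, and health monitoring for you. In short, CloudFormation gives fine-grained control over infrastructure, while Elastic Beanstalk trades some of that control for convenience when you simply want to run an application. Elastic Beanstalk itself uses CloudFormation behind the scenes to create its environments.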

What kinds of security attacks can occur on the cloud, and how can we minimize them?

There are several security attacks that can occur on the cloud, and it's important to be aware of them. Here are some common ones and strategies to mitigate them:

Data Breaches: Data breaches involve unauthorized access to sensitive data stored in the cloud. This can occur through various means, such as exploiting vulnerabilities, weak access controls, or insider threats. To minimize data breaches:

Encrypt sensitive data both at rest and in transit.

Regularly monitor and audit access logs for suspicious activities.

Implement data loss prevention (DLP) mechanisms to detect and prevent data exfiltration.

Distributed Denial of Service (DDoS) Attacks: DDoS attacks overwhelm a cloud service with a flood of traffic, making it unavailable to legitimate users. To minimize DDoS attacks:

Use a cloud-based DDoS protection service that can detect and mitigate attacks.

Implement traffic filtering and rate limiting mechanisms to block malicious traffic.

Scale your infrastructure horizontally to handle increased traffic during attacks.
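Rate limiting is often implemented with a token bucket: each client gets a bucket that refills at a steady rate, and a request is allowed only if a token is available. Managed services such as AWS Shield and AWS WAF rate-based rules do this kind of filtering at the edge; the sketch below only illustrates the algorithm in application code. The rate and capacity values are arbitrary, and the injectable `clock` parameter exists so the behavior can be tested deterministically.

```python
import time


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, but never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets = {}  # one bucket per client IP (in memory, single process only)


def allow_request(client_ip, rate=5, capacity=10):
    if client_ip not in buckets:
        buckets[client_ip] = TokenBucket(rate, capacity)
    return buckets[client_ip].allow()
```

Keeping a separate bucket per client means one abusive source exhausts only its own tokens while other clients keep being served.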

Insecure APIs: Insecure APIs can be exploited to gain unauthorized access or manipulate cloud resources. To minimize API-related attacks:

Use secure API authentication mechanisms, such as OAuth or API keys.

Implement API rate limiting and usage quotas to prevent abuse.

Regularly update and patch APIs to address security vulnerabilities.
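One common way to authenticate API calls is to sign each request with a shared secret using an HMAC, the same primitive AWS Signature Version 4 builds on. The scheme below is a simplified, hypothetical one (neither SigV4 nor OAuth): it signs the method, path, timestamp, and body, rejects stale timestamps to limit replay attacks, and compares signatures in constant time.

```python
import hashlib
import hmac
import time


def sign(secret, method, path, timestamp, body):
    """HMAC-SHA256 over the parts of the request an attacker must not alter."""
    message = b"\n".join([method.encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret, method, path, timestamp, body, signature, max_skew=300, now=None):
    now = int(time.time()) if now is None else now
    # Reject requests whose timestamp is too far from the server clock (limits replays).
    if abs(now - timestamp) > max_skew:
        return False
    expected = sign(secret, method, path, timestamp, body)
    # Constant-time comparison so timing does not reveal how much of the signature matched.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    secret = b"example-shared-secret"  # hypothetical; keep real secrets in a secrets manager
    ts = int(time.time())
    sig = sign(secret, "POST", "/orders", ts, b'{"item": 42}')
    print(verify(secret, "POST", "/orders", ts, b'{"item": 42}', sig))  # True
    print(verify(secret, "POST", "/orders", ts, b'{"item": 43}', sig))  # False
```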

Can we recover an EC2 instance when we have lost the key pair?

Yes, there are two ways:

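Stop the instance and detach its root volume. Launch a new instance with a new key pair and attach the detached volume to it as a secondary volume, so you can access its data. To restore access to the original instance instead, add the new public key to the ~/.ssh/authorized_keys file on that volume, then reattach it to the original instance as the root volume and start it. Finally, terminate whichever instance you no longer need.

Alternatively, create an AMI from the existing instance and launch a new instance from that AMI, choosing a new key pair while launching it in the console.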

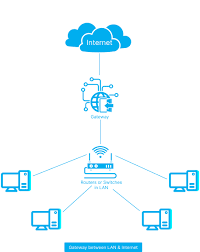

What is a gateway?

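A gateway is a network device or software application that connects two different networks, allowing them to communicate and share data. It acts as an intermediary or bridge between these networks: when a client sends a request, the gateway forwards it to the destination network or server, which processes the request and sends the response back through the gateway. In AWS, common examples are the internet gateway (connects a VPC to the internet), the NAT gateway (lets instances in private subnets reach the internet without accepting inbound connections), and Amazon API Gateway (a managed front door for APIs).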
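In a VPC, traffic reaches a gateway through the subnet's route table: the most specific matching route decides where a packet goes next. This sketch models that longest-prefix match with Python's `ipaddress` module; the route table, CIDR blocks, and gateway IDs (`igw-0example`, `vgw-0example`) are hypothetical examples of an internet gateway and a virtual private gateway.

```python
import ipaddress

# Hypothetical route table for a public subnet in a VPC whose CIDR is 10.0.0.0/16.
ROUTE_TABLE = [
    ("10.0.0.0/16", "local"),            # traffic within the VPC
    ("192.168.0.0/16", "vgw-0example"),  # on-premises network via a virtual private gateway
    ("0.0.0.0/0", "igw-0example"),       # everything else via the internet gateway
]


def next_hop(destination, routes=ROUTE_TABLE):
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no matching route: the packet is dropped
    # The longest prefix (most specific route) wins.
    _, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


if __name__ == "__main__":
    print(next_hop("10.0.3.7"))      # local
    print(next_hop("192.168.1.20"))  # vgw-0example
    print(next_hop("203.0.113.10"))  # igw-0example
```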

What is the difference between Amazon RDS, DynamoDB, and Redshift?

Amazon RDS, DynamoDB, and Redshift are all database services provided by Amazon Web Services (AWS), but they have different functionalities and use cases. Here are the key differences between them:

Amazon RDS (Relational Database Service):

Database Type: Amazon RDS is a managed relational database service that supports various popular database engines such as MySQL, PostgreSQL, Oracle, SQL Server, and MariaDB.

Data Structure: RDS is designed for structured data with a fixed schema. It follows the traditional relational database model with tables, rows, and columns.

Use Cases: RDS is suitable for transactional applications, content management systems, e-commerce platforms, and other applications that require traditional relational database capabilities.

Amazon DynamoDB:

Database Type: DynamoDB is a fully managed NoSQL database service.

Data Structure: DynamoDB is designed for flexible and schema-less data storage. It stores data in a key-value format and supports JSON-like documents.

Use Cases: DynamoDB is suitable for applications that require high scalability, low latency, and flexible data models. It is commonly used for real-time applications, gaming, mobile apps, and scenarios where high write and read throughput is required.

Amazon Redshift:

Database Type: Redshift is a fully managed data warehousing service.

Data Structure: Redshift is optimized for online analytical processing (OLAP) workloads and is designed for querying and analyzing large volumes of structured data using SQL queries.

Use Cases: Redshift is suitable for data analytics, business intelligence, and reporting applications that require complex queries and fast retrieval of large datasets.
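The difference between the relational and key-value models is easiest to see side by side. In this sketch, an in-memory SQLite database stands in for an RDS engine, and a Python dictionary keyed by (partition key, sort key) stands in for a DynamoDB table; neither is the real AWS API, and the table design is hypothetical. Redshift is omitted because its SQL interface resembles the relational case; its differences lie in columnar storage and analytical scale.

```python
import sqlite3

# Relational (RDS-style): the schema is declared up front; every row has the same columns.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, total REAL NOT NULL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "alice", 30.0), (2, "bob", 12.5), (3, "alice", 7.5)],
)
# Ad hoc queries and aggregations across rows are natural in SQL.
per_customer = conn.execute(
    "SELECT customer, SUM(total) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()

# Key-value (DynamoDB-style): items are fetched by key, and attributes may differ per item.
table = {
    ("customer#alice", "order#1"): {"total": 30.0},
    ("customer#alice", "order#3"): {"total": 7.5, "gift_wrap": True},
    ("customer#bob", "order#2"): {"total": 12.5},
}


def query(table, partition_key):
    """All items for one partition key, ordered by sort key (similar in spirit to a Query)."""
    return [(sk, item) for (pk, sk), item in sorted(table.items()) if pk == partition_key]


print(per_customer)                                   # [('alice', 37.5), ('bob', 12.5)]
print([sk for sk, _ in query(table, "customer#alice")])  # ['order#1', 'order#3']
```

The relational side answers any question the schema allows; the key-value side is fast for the access pattern its keys were designed around (here, "orders for one customer").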

Would you host a website on S3? Why or why not?

Yes, hosting a website on Amazon S3 can be a viable option for certain types of websites. Here are some reasons why:

Cost-Effectiveness: Amazon S3 offers a cost-effective solution for hosting static websites. It charges based on data storage, data transfer, and requests, which can be lower compared to other hosting options for static content.

Scalability: Amazon S3 is designed to handle high levels of traffic and can scale automatically to accommodate increased demand. This makes it suitable for websites that experience fluctuating traffic patterns or sudden spikes in traffic.

High Availability: Amazon S3 provides high availability and durability for your website files. It replicates data across multiple data centers, ensuring that your website remains accessible even in the event of hardware failures or outages.

Security: Amazon S3 offers various security features, such as server-side encryption, access control policies, and integration with AWS Identity and Access Management (IAM). This helps protect your website files and restrict access to authorized users.

However, it's important to note that hosting a website on S3 is most suitable for static websites that don't require server-side processing or dynamic content generation.
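Static website hosting on S3 comes down to two pieces of configuration: an index/error document setting and, if the bucket serves content publicly, a bucket policy allowing `s3:GetObject`. This sketch builds both as Python dictionaries; the bucket name is hypothetical. The website dictionary has the same shape as the `WebsiteConfiguration` argument of boto3's `put_bucket_website`, and a public-read policy only takes effect if S3 Block Public Access is turned off for the bucket (serving through CloudFront with a private bucket is a common alternative).

```python
import json


def website_configuration(index="index.html", error="error.html"):
    # Same shape as boto3's put_bucket_website(WebsiteConfiguration=...).
    return {"IndexDocument": {"Suffix": index}, "ErrorDocument": {"Key": error}}


def public_read_policy(bucket):
    """Bucket policy allowing anyone to read objects (bucket name is hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(website_configuration(), indent=2))
    print(json.dumps(public_read_policy("example-site-bucket"), indent=2))
```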

Thank you for 📖reading my blog, 👍Like it and share it 🔄 with your friends.

Happy learning😊😊
