Unlocking Product Documentation with Conversational Chatbots

Adityan S

AI technology has begun to significantly change the way we consume content. Within product teams, there is a clear focus on building and integrating conversational chatbots, because of the visible improvement they can bring to customer interactions. With conversational chatbots, your organization can guide customers through product documentation. Enterprises are especially interested in specialized chatbots that are trained on a product's documentation set and meet specific objectives. The broad objective is a conversational AI model where users submit a query and the AI responds using the specific information it has been trained on. At the same time, any organization must meet industry-wide requirements for security, privacy, compliance, and so on.

In this article, we look at the fundamentals of conversational chatbots. Along with a brief introduction to their design and application, we explore how product teams can use intelligent chatbots to potentially change how enterprise content is consumed.

Terminology

Generative AI—Deep-learning models that produce new, high-quality content, such as text and images, based on the data they were trained on.

Natural language processing (NLP)—A subset of AI that involves the research and design of algorithms and language models that help machines understand, interpret, and generate human language efficiently.

Large language models (LLMs)—Deep-learning models trained on very large text datasets. They can recognize and generate text and perform numerous other language tasks. If an N-gram model, which predicts each word from the few words before it, is the simplest kind of language model, LLMs represent the other end of the spectrum because of the scale and complexity they can handle.
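To make the simple end of that spectrum concrete, here is a minimal bigram (N = 2) model in Python. It is an illustrative sketch and not part of any chatbot described in this article. It counts which word follows which in a tiny, made-up corpus and estimates the probability of the next word from those counts. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows each other word (N = 2)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probability(counts: Dict[str, Counter], prev: str, nxt: str) -> float:
    """Maximum-likelihood estimate of P(nxt | prev) from the counts."""
    total = sum(counts.get(prev, Counter()).values())
    return counts[prev][nxt] / total if total else 0.0

def predict_next(counts: Dict[str, Counter], prev: str) -> Optional[str]:
    """Return the word most often seen after `prev`, or None if `prev` is unseen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical mini-corpus of documentation sentences.
corpus = ["restart the server", "restart the client", "update the server"]
model = train_bigram(corpus)
print(predict_next(model, "the"))                     # server
print(next_word_probability(model, "the", "server"))  # about 0.667
```

An LLM replaces these raw counts with billions of learned parameters and conditions on far longer contexts, but the underlying task, predicting the next token, is the same.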

Conversational chatbots—AI software sophisticated enough to converse with humans in natural language, through text or voice channels. Also known as conversational agents or virtual assistants, they can conduct extended conversations with users, comprehend intricate inquiries, and provide detailed answers. Above all, they can be trained to learn from their interactions, which helps them improve their performance over time. Such chatbots are commonly used in customer service, product recommendations, and personal assistance.

Conversational Chatbots: An Overview

Conversational chatbots interact with users through natural language, mimicking human conversation patterns. Beyond engaging in casual dialogue, they serve a valuable purpose for the industry. For example, they can guide users through the information in a product documentation set by answering questions and providing personalized assistance. Understanding the different types of bots allows product teams to choose the type that best suits their documentation needs.

Types

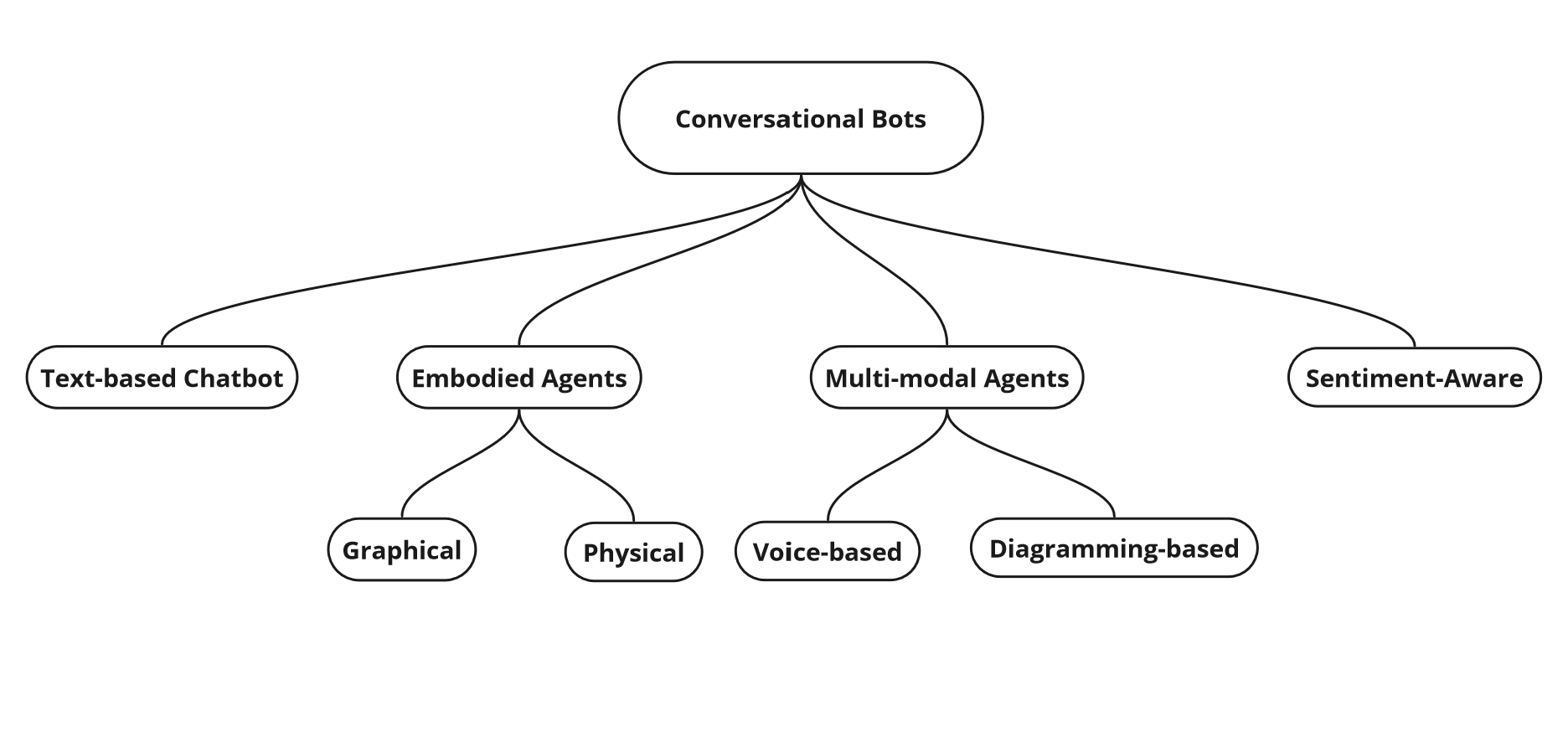

Conversational chatbots can be classified into various types based on how they are designed and accessed, and on the level and type of interaction they support.

Interaction Model

Chatbots can be grouped by the mode they use to interact with the user and receive input.

Chat-based bots—Conversational agents use text to interact through chat interfaces.

Embodied agents—AI systems that have a physical or virtual form, such as robots or avatars, respectively.

Multi-modal agents—Chatbots or avatars interact through channels such as text, voice, images, and gestures.

Sentiment-aware agents—Chatbots or agents that understand human emotions and can therefore tailor their responses and interactions.

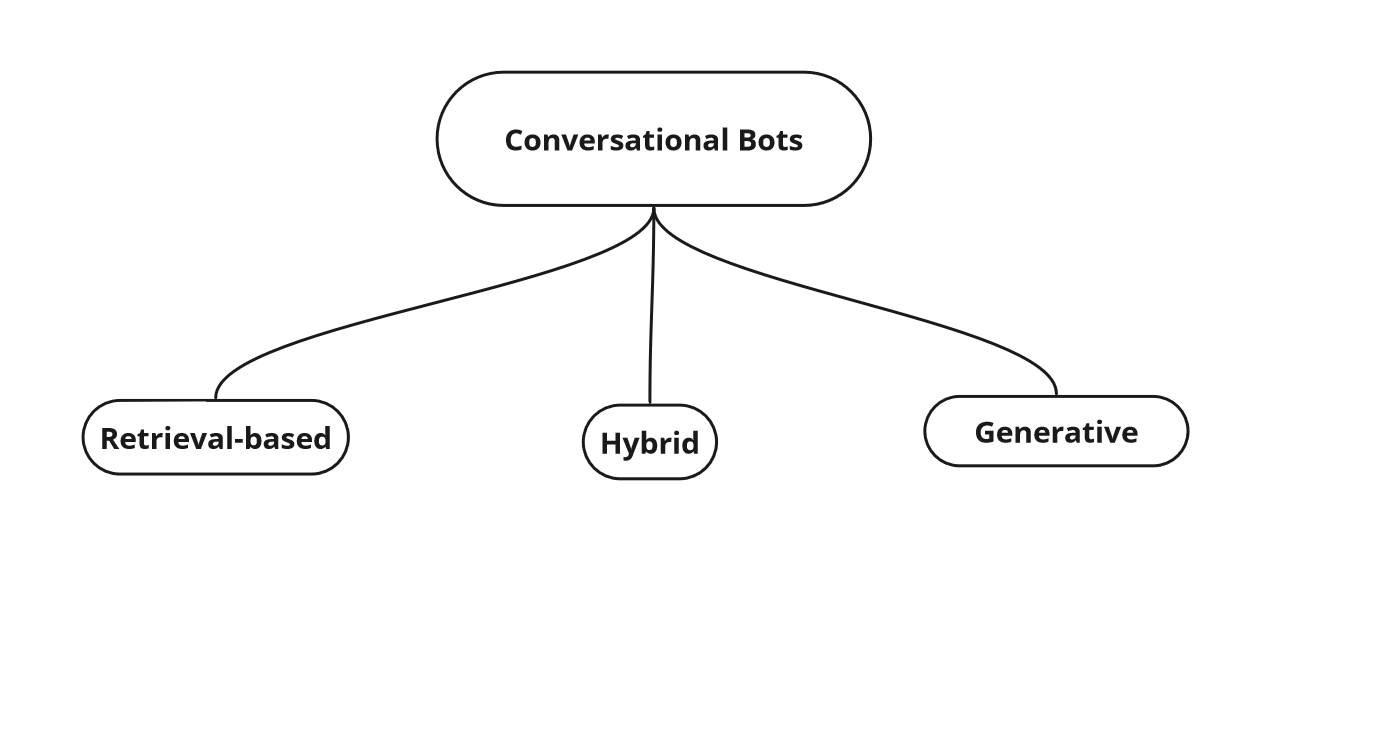

Response Generation

Retrieval-based—Chatbots select pre-written responses from a database, based on the keywords in the user's input. This model is fast and efficient and works well for basic questions. However, it cannot handle complex queries.
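A retrieval-based bot can be sketched in a few lines of Python. The sketch assumes a hypothetical table of pre-written responses keyed by trigger keywords and picks the response whose keywords overlap most with the query. The last call shows the limitation described above: a rephrased question that shares no keywords with any entry falls through to the fallback message.

```python
import re
from typing import Set

# Hypothetical pre-written responses, keyed by the keywords that trigger them.
RESPONSES = {
    frozenset({"reset", "password"}): "To reset your password, see the Account Settings topic.",
    frozenset({"install", "agent"}): "For installation steps, see the Agent Installation Guide.",
}
FALLBACK = "Sorry, I don't have an answer for that. Please try rephrasing your question."

def tokenize(text: str) -> Set[str]:
    """Lower-case the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str) -> str:
    """Return the pre-written response whose keywords overlap most with the query."""
    words = tokenize(query)
    best = max(RESPONSES, key=lambda keywords: len(keywords & words))
    if not best & words:
        return FALLBACK  # no trigger keyword appears in the query
    return RESPONSES[best]

print(retrieve("How do I reset my password?"))
print(retrieve("How do I change my login credentials?"))  # falls back
```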

Generative—Chatbots use AI models like LLMs to generate original responses based on the user's input and context. These bots can handle diverse interactions but can be prone to errors as well.

Hybrid—Chatbots combine retrieval and generative approaches to produce responses. They use pre-written responses for generic questions but can also generate responses when needed. This approach balances efficiency with flexibility.
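The hybrid approach can be sketched as a router. It answers from pre-written content when a stored question is similar enough to the query, and otherwise hands the query to a generative model. In this Python sketch, `FAQ`, the word-overlap (Jaccard) similarity, the 0.6 threshold, and `fake_llm` are all illustrative assumptions. `fake_llm` stands in for a real LLM call.

```python
import re
from typing import Callable, Tuple

# Hypothetical pre-written answers for generic questions.
FAQ = {
    "how do i reset my password": "Pre-written answer: see the Account Settings topic.",
    "where can i find the release notes": "Pre-written answer: see the Release Notes page.",
}

def normalize(text: str) -> str:
    """Lower-case the text and keep only word characters, single-spaced."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))

def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two word sets (0.0 to 1.0)."""
    sa, sb = set(a.split()), set(b.split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def answer(query: str, generate: Callable[[str], str], threshold: float = 0.6) -> Tuple[str, str]:
    """Use a stored answer when one is close enough; otherwise generate a new one."""
    q = normalize(query)
    best = max(FAQ, key=lambda stored: similarity(q, stored))
    if similarity(q, best) >= threshold:
        return "retrieved", FAQ[best]
    return "generated", generate(query)

def fake_llm(prompt: str) -> str:
    """Placeholder for a real LLM call."""
    return f"[generated answer to: {prompt}]"

print(answer("How do I reset my password?", fake_llm))
print(answer("Why does the agent crash after upgrading?", fake_llm))
```

Raising the threshold sends more traffic to the generator, which adds flexibility at the cost of speed and predictability. Lowering it favors the fast, pre-written path.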

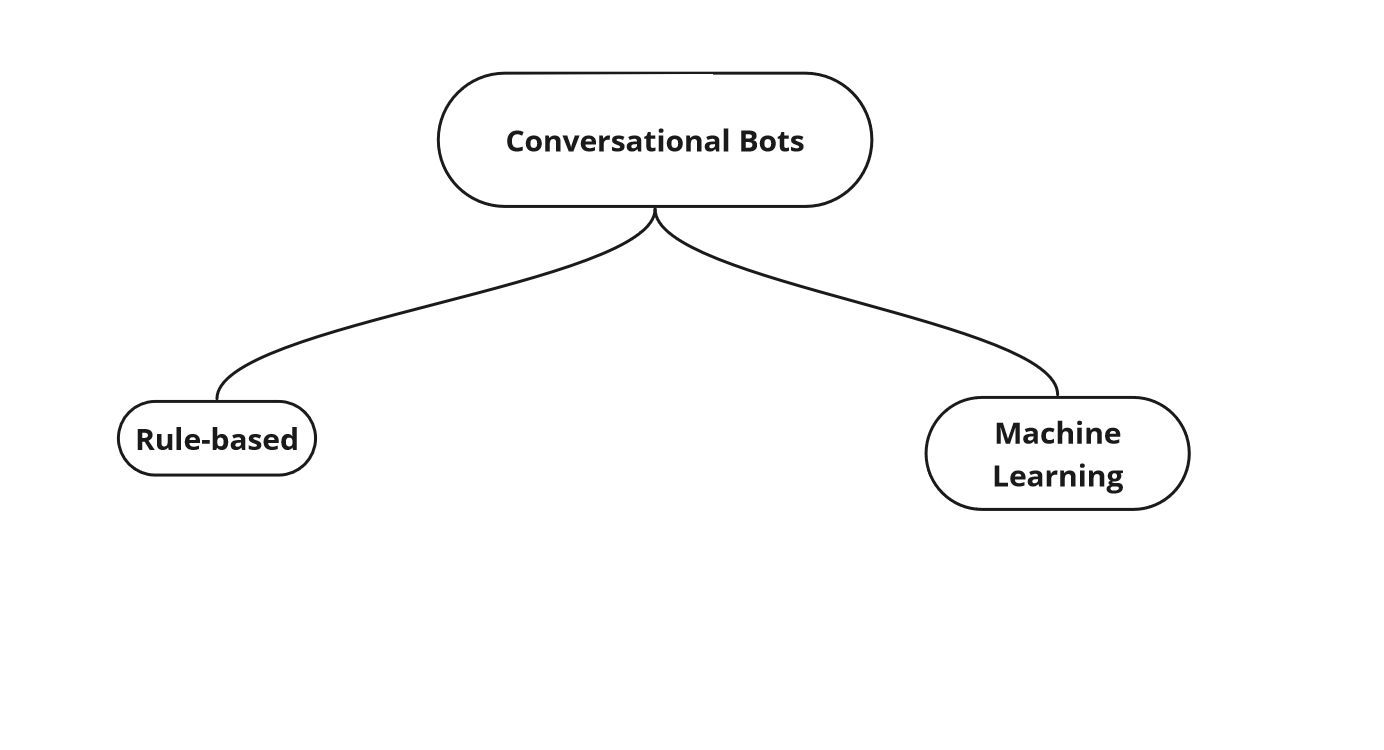

Learning Methodology

Rule-based—Chatbots follow predefined rules and decision trees to provide a response. They cannot handle unforeseen situations.
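A rule-based bot is essentially a walk down a decision tree. The following Python sketch uses a hypothetical two-question troubleshooting tree. Any reply that no rule anticipates ends in a dead end, which is the limitation noted above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Node:
    """A node in a hypothetical troubleshooting decision tree."""
    question: Optional[str] = None  # asked at a branch node
    answer: Optional[str] = None    # returned at a leaf node
    branches: Dict[str, "Node"] = field(default_factory=dict)  # expected reply -> next node

TREE = Node(
    question="Is the device powered on? (yes/no)",
    branches={
        "no": Node(answer="Connect the power cable and switch the device on."),
        "yes": Node(
            question="Is the status light green? (yes/no)",
            branches={
                "yes": Node(answer="The device is running. Check the network settings next."),
                "no": Node(answer="Restart the device and wait for the light to turn green."),
            },
        ),
    },
)

def walk(tree: Node, replies: List[str]) -> str:
    """Follow the user's replies through the predefined rules."""
    node = tree
    for reply in replies:
        if node.answer is not None:
            break  # reached a leaf; ignore any extra replies
        nxt = node.branches.get(reply.strip().lower())
        if nxt is None:
            return "Sorry, I didn't understand that reply."  # no rule covers this input
        node = nxt
    # A leaf gives the answer; otherwise return the next question to ask.
    return node.answer if node.answer is not None else node.question

print(walk(TREE, ["yes", "no"]))
print(walk(TREE, ["it's blinking"]))  # unforeseen reply
```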

Machine Learning—Chatbots learn from data patterns and feedback. This helps the chatbots to improve their responses over time and handle complex queries. They are more adaptive, but require ongoing training data and maintenance.

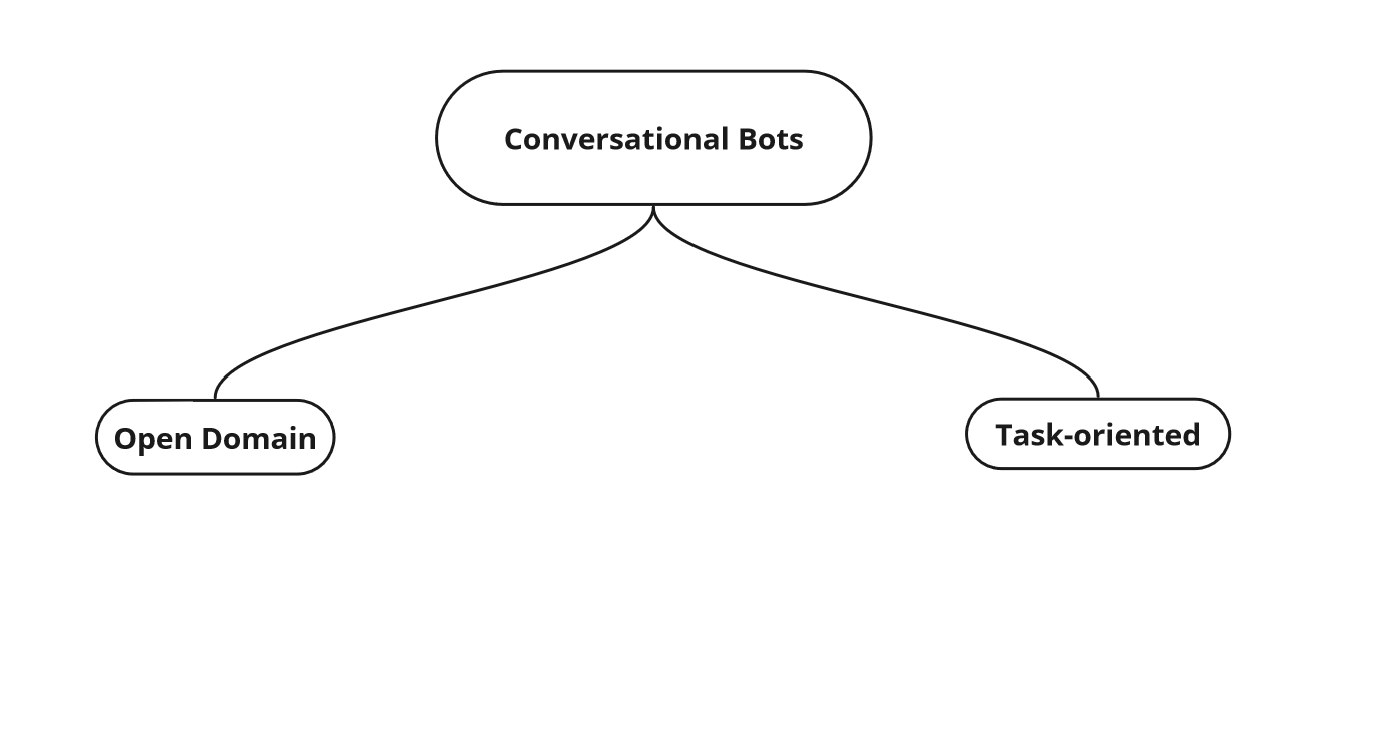

Domain

Open-domain—Chatbots trained to handle large knowledge bases and answer a wide range of questions from varied topics.

Task-oriented—Chatbots designed for specific knowledge bases or tasks. For example, they can help book an appointment, or be trained on product documentation to troubleshoot technical issues.

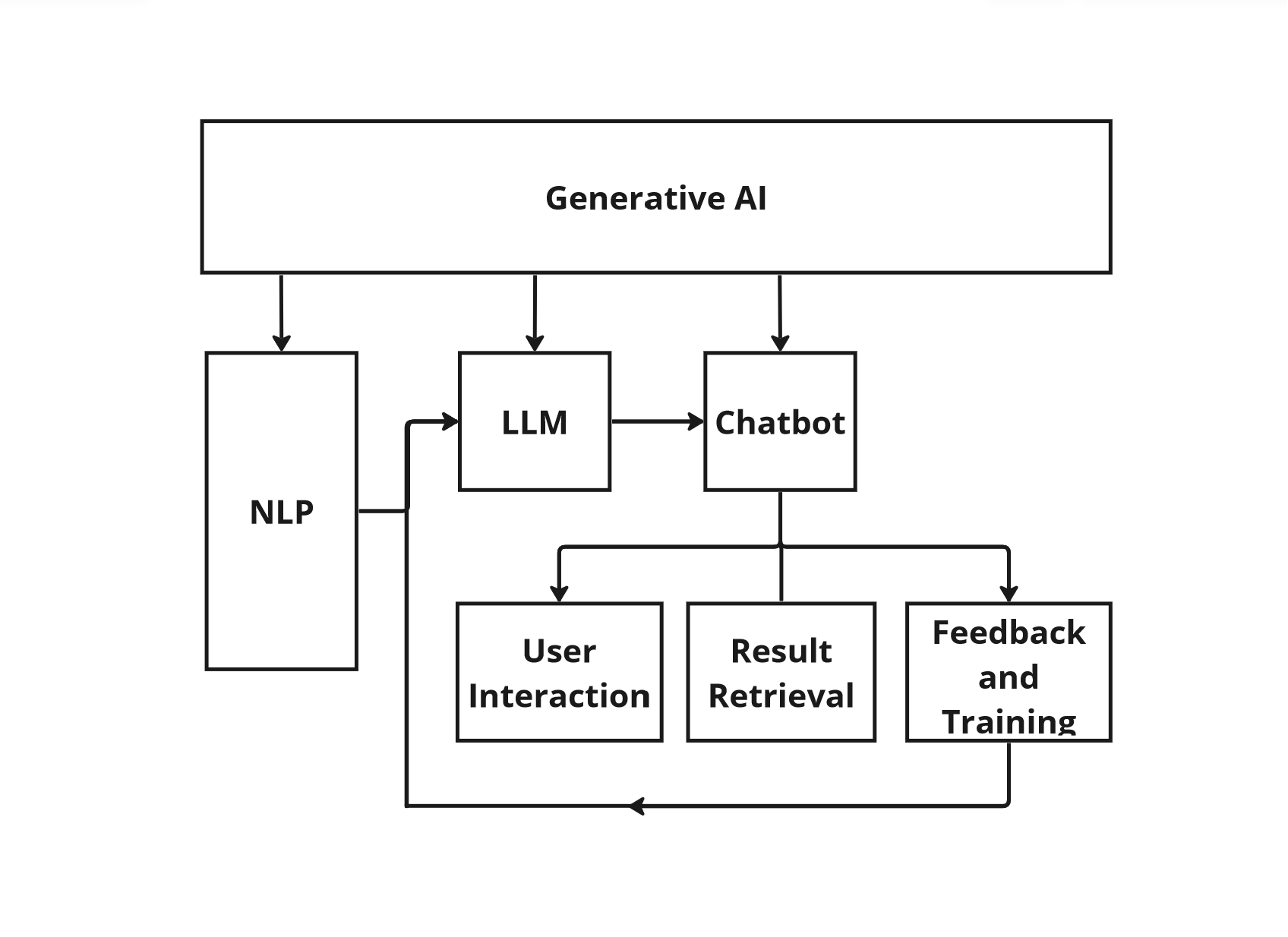

Powering Chatbots with NLP and LLMs

Advanced conversational chatbots used for product documentation are mostly built on generative AI models. NLP and LLMs form the building blocks for these chatbots. They enable the chatbot to understand user queries accurately and to generate contextually aware responses. Such chatbots can also continue to learn from their interactions, which helps them improve over time.

Understanding Natural Language Processing (NLP)

NLP is the branch of AI that trains systems to understand human language. It is what helps AI systems comprehend text and speech. These systems interpret the input, can even identify the emotion behind it, and then determine the most appropriate response. In conversational chatbots, NLP enables the chatbot to understand user input and turn a request into a meaningful conversation. As a result, NLP-based chatbots can generate human-like dialogue and offer better usability and user interaction than simpler chatbots.

NLP typically involves several steps:

Tokenization (breaking sentences into individual words or 'tokens')

Part-of-speech tagging (identifying the grammatical role of words)

Named entity recognition (identifying names, places, dates, etc.)

Semantic parsing (understanding the meaning of sentences in context)

Combined, these steps enable a conversational AI to process language in a way that is meaningful and valuable to the user.
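The following Python sketch walks a single query through toy versions of the four steps. It is deliberately simplistic. The part-of-speech lexicon, the regular expressions for dates and version numbers, and the flat "frame" that stands in for semantic parsing are all assumptions for illustration. Production systems use trained models for each step.

```python
import re
from typing import Dict, List, Tuple

# Toy lexicon standing in for a trained part-of-speech tagger.
POS_LEXICON = {"upgrade": "VERB", "install": "VERB", "the": "DET", "to": "ADP",
               "on": "ADP", "firmware": "NOUN", "router": "NOUN"}

def tokenize(sentence: str) -> List[str]:
    """Step 1: split into tokens, keeping version numbers like 2.4.1 intact."""
    return re.findall(r"\d+(?:\.\d+)+|\w+|[^\w\s]", sentence)

def tag(tokens: List[str]) -> List[Tuple[str, str]]:
    """Step 2: assign a part of speech from the toy lexicon (NUM for numbers, X otherwise)."""
    return [(t, POS_LEXICON.get(t.lower(), "NUM" if t[0].isdigit() else "X")) for t in tokens]

def recognize_entities(sentence: str) -> List[Tuple[str, str]]:
    """Step 3: find ISO dates and dotted version numbers with regular expressions."""
    entities = [(m.group(), "DATE") for m in re.finditer(r"\b\d{4}-\d{2}-\d{2}\b", sentence)]
    entities += [(m.group(), "VERSION") for m in re.finditer(r"\b\d+(?:\.\d+)+\b", sentence)]
    return entities

def parse(tagged: List[Tuple[str, str]], entities: List[Tuple[str, str]]) -> Dict[str, str]:
    """Step 4: build a flat meaning frame from the first verb, first noun, and entities."""
    frame: Dict[str, str] = {}
    for token, pos in tagged:
        if pos == "VERB" and "action" not in frame:
            frame["action"] = token.lower()
        elif pos == "NOUN" and "object" not in frame:
            frame["object"] = token.lower()
    for text, label in entities:
        frame.setdefault(label.lower(), text)
    return frame

sentence = "Upgrade the firmware to 2.4.1 on 2024-05-01."
frame = parse(tag(tokenize(sentence)), recognize_entities(sentence))
print(frame)
```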

Role of Large Language Models (LLMs) in Chatbots

LLMs are a specific type of AI model within NLP. They are trained on massive amounts of text data so that they can generate human-quality responses. In short, NLP is the broader field, covering tasks such as sentiment analysis, topic modeling, and machine translation. LLMs build on these capabilities to write or generate different kinds of text, translate languages, answer queries, and perform other tasks.

Training Chatbots with NLP and LLMs

The process of training a chatbot begins with feeding data into the AI system. Depending on specific needs, this data can be of various types. It can be a product documentation set, previous conversation logs, or Q&A pairs. The aim is to expose the chatbot to as many plausible scenarios as possible, so that it can handle queries accurately.

NLP techniques pre-process this data into a format that the model can understand. The LLM then learns from the dataset, picking up structures, patterns, synonymous phrases, and how parts of sentences relate to each other. With a continual flow of training data, the chatbot learns over time to generalize from these patterns. It thus becomes capable of handling a broader range of user queries.
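As one illustration of pre-processing, documentation text is commonly cleaned and split into overlapping chunks before it is used for training or retrieval. This is an assumption, since the article does not prescribe a specific pipeline. The Python sketch below normalizes whitespace and produces fixed-size word windows. The `size` and `overlap` defaults are hypothetical.

```python
import re
from typing import List

def clean(text: str) -> str:
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return re.sub(r"\s+", " ", text).strip()

def chunk(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split cleaned text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    words = clean(text).split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

doc = "Step 1. Open the console.\n\nStep 2. Select   Settings > Backup."
print(chunk(doc, size=4, overlap=1))
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, so its context is not lost.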

Continuous feedback plays a key role in the performance of LLM-based models. For the chatbot, each interaction with a user is a learning opportunity. The data from these interactions is analyzed and used to iterate on the chatbot and steadily improve its accuracy.
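One simple way to turn interaction data into something actionable is to aggregate user ratings per topic and flag topics that fall below a target, for example so that an SME can review them. The schema, the `min_votes` and `threshold` values, and the topic names in this Python sketch are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Interaction:
    """One logged exchange and whether the user rated the answer helpful (hypothetical schema)."""
    topic: str
    helpful: bool

def flag_topics(log: List[Interaction], min_votes: int = 3, threshold: float = 0.7) -> List[str]:
    """Return topics with enough ratings whose helpful share falls below `threshold`."""
    ratings: Dict[str, List[bool]] = defaultdict(list)
    for item in log:
        ratings[item.topic].append(item.helpful)
    return sorted(
        topic for topic, votes in ratings.items()
        if len(votes) >= min_votes and sum(votes) / len(votes) < threshold
    )

log = (
    [Interaction("install", True)] * 3
    + [Interaction("licensing", True), Interaction("licensing", False), Interaction("licensing", False)]
    + [Interaction("backup", False)] * 2
)
print(flag_topics(log))  # ['licensing']
```

The `min_votes` guard keeps a topic with only one or two ratings, such as "backup" here, from being flagged on too little evidence.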

From Gaps to Growth: Use Cases to Master Conversational Documentation

While conversational chatbots make product documentation easier to reach, integrating them presents a set of considerations and challenges. Successful adoption depends on how well you address them. Let's explore some key scenarios:

Communication Gaps

Challenge: Chatbots aren't working—They fail to grasp complex queries and often misinterpret slang, dialects, or colloquial language.

Opportunity: Improve NLP capabilities—Invest in advanced NLP models. These improve the LLM-based chatbot's ability to learn from its training datasets and to interpret user queries accurately.

Empathy

Challenge: Chatbots lack empathy—As artificial systems, chatbots do not understand or express human emotions. Hence, they cannot generate an appropriate emotional response when one is required, which can be critical in a customer service scenario.

Opportunity: Implement sentiment analysis—Use this technique to train the bot to recognize user emotions and generate appropriate responses. For example, train the system to identify the emotion of an administrator who encounters a technical error and is feeling frustrated. Use this training to structure responses and also offer immediate support.
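As a minimal sketch of the idea, the Python code below scores a message against a tiny hand-made word list. When the message reads as frustrated, it softens the reply and offers escalation. The lexicon, the -2 cutoff, and the wording are assumptions. Real sentiment analysis typically relies on a trained classifier rather than a word list.

```python
import re

# Tiny hypothetical lexicon; production systems typically use a trained classifier.
NEGATIVE = {"frustrated", "frustrating", "annoying", "broken", "useless", "failed", "angry"}
POSITIVE = {"thanks", "great", "works", "helpful", "perfect"}

def sentiment_score(message: str) -> int:
    """+1 for each positive word, -1 for each negative word."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def respond(message: str, answer: str, cutoff: int = -2) -> str:
    """Acknowledge frustration and offer immediate support when the score is low enough."""
    if sentiment_score(message) <= cutoff:
        return ("Sorry you're running into this. " + answer +
                " If this doesn't fix it, I can connect you with a support engineer right away.")
    return answer

msg = "The backup job failed again and I'm frustrated. This is broken."
print(sentiment_score(msg))  # -3
print(respond(msg, "[answer from the documentation]"))
```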

Accuracy

Challenge: Bot isn't as accurate as expected—The efficacy of a chatbot relies heavily on the quality and diversity of the available training data. If the training data isn't wide-ranging, the accuracy of the chatbot's responses suffers.

Opportunity: Continuous bot training—Because products are continually upgraded and gain new features, a comprehensive plan for chatbot training is critical. Focus on regular training with diverse, relevant, and high-quality data that keeps pace with the evolving product.

Multimodality

Challenge: Difficulty with non-textual queries—Unless designed for it, chatbots can often struggle with non-textual inquiries such as voice, image, and so on.

Opportunity: Going beyond the text—Equip the chatbot with multi-modal capabilities so that it can handle non-textual inputs. Which modes to support depends on the capabilities your chatbot needs.

Data Security

Challenge: Handling sensitive data—Chatbots may collect personal data as part of their interaction with users. Therefore, the security and management of this data is critical to the enterprise.

Opportunity: Ensuring data privacy and security—Compliance with data protection regulations is mandatory. To achieve it, encrypt sensitive data, anonymize personal data, and limit data storage.
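The anonymization part of this advice can be sketched with the Python standard library. The sketch redacts obvious personal data from logged queries and replaces user IDs with keyed hashes (HMAC-SHA-256). The patterns are deliberately simple assumptions that will miss some formats and over-match others. For example, a date such as 2024-05-01 also matches the phone pattern. Keyed hashing is pseudonymization, not encryption. Encryption should use a vetted cryptography library.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns; they will miss some formats and over-match others.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text: str) -> str:
    """Replace e-mail addresses and phone-number-like strings with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a user ID with a truncated HMAC-SHA-256 digest; keep `key` secret."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

print(redact("Reach me at jane.doe@example.com or +1 555-123-4567."))
# Reach me at [EMAIL] or [PHONE].
print(pseudonymize("user-42", b"hypothetical-secret-key"))
```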

Features for your Conversational Chatbot

Adaptive knowledge base—Content database that can be customized to meet specific requirements.

Role-based access—Assigns specific access rights and permissions to users based on the roles they play with respect to the product. Limiting access helps maintain operational security. A few of the roles that can be assigned:

User—Submit queries, evaluate responses, and provide feedback.

SME—Evaluate user feedback and incorporate the learning to train the bot and improve the response.

Doc admin—Update the content database based on valid bot feedback that the SME has accepted.

Admin—Constantly monitor, evaluate, train, and maintain the bot.
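The roles above map naturally onto a permission table. In this Python sketch, the permission names and the exact mapping are one hypothetical reading of the role descriptions, not a prescribed model. Unknown roles get no permissions.

```python
from enum import Enum, auto
from typing import Dict, Set

class Permission(Enum):
    SUBMIT_QUERY = auto()
    GIVE_FEEDBACK = auto()
    REVIEW_FEEDBACK = auto()
    TRAIN_BOT = auto()
    UPDATE_CONTENT = auto()
    MONITOR_BOT = auto()

# Hypothetical mapping based on the role descriptions above.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "user": {Permission.SUBMIT_QUERY, Permission.GIVE_FEEDBACK},
    "sme": {Permission.REVIEW_FEEDBACK, Permission.TRAIN_BOT},
    "doc_admin": {Permission.UPDATE_CONTENT},
    "admin": {Permission.MONITOR_BOT, Permission.REVIEW_FEEDBACK, Permission.TRAIN_BOT},
}

def is_allowed(role: str, permission: Permission) -> bool:
    """Check a role's permission; unknown roles are denied everything."""
    return permission in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("user", Permission.SUBMIT_QUERY))    # True
print(is_allowed("user", Permission.UPDATE_CONTENT))  # False
```

Denying by default keeps a misspelled or unexpected role name from accidentally gaining access.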

Restricted search—If a content source or URL in the LLM's content database is found to contain incorrect information, the bot must not retrieve results from that source until the source is updated.
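Restricted search can be implemented, for example, as a filter applied to retrieved passages before the response is generated. The `Passage` type, the relevance scores, and the example URLs in this Python sketch are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set

@dataclass(frozen=True)
class Passage:
    source: str   # URL or document ID in the content database
    text: str
    score: float  # retrieval relevance score

def filter_results(results: List[Passage], blocked_sources: Set[str], k: int = 3) -> List[Passage]:
    """Drop passages from blocked sources, then keep the k highest-scoring ones."""
    allowed = [p for p in results if p.source not in blocked_sources]
    return sorted(allowed, key=lambda p: p.score, reverse=True)[:k]

results = [
    Passage("https://docs.example.com/install-old", "Outdated steps", 0.95),
    Passage("https://docs.example.com/install", "Current steps", 0.90),
    Passage("https://docs.example.com/faq", "FAQ", 0.40),
]
blocked = {"https://docs.example.com/install-old"}  # flagged until the page is fixed
print([p.source for p in filter_results(results, blocked)])
```

Once the source is corrected, removing it from the blocklist restores it without re-indexing anything.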

Strong feedback mechanism—Allows users to rate or comment on the chatbot's responses, which drives continual improvement of the system.

Report generation—Comprehensive reports that help stakeholders to track various key metrics such as usage statistics, interaction history, focus areas, and other relevant information.

Interfaces (Chatbot plugin for websites/apps, search bar, Cisco Webex client)—Different ways for users to interact with the chatbot. It could be through a conveniently located search bar, a dedicated collaboration application like Cisco Webex, or as a plugin integrated into third-party websites or software.

Handling irrelevant queries—Capability to handle unrelated or irrelevant questions. When the chatbot lacks the necessary data, or the user input lacks enough detail, it should redirect users to relevant information or prompt for clarification.

Controlled LLM access—Restrict access to authorized individuals. Safeguard sensitive data and promote data privacy.

Modular content database—Ensure that content is well organized, labeled or tagged, and easy to maintain.

Content encryption—Ensure secure communication of data shared between users and the chatbot, and prevent interception of content by unauthorized individuals.

Multi-modal capabilities (Read images, speak aloud)—Enhance the chatbot to interact with users through multiple modes. Develop capability to understand and process visual data (like reading images, diagrams, charts, videos) as well as vocalize responses aloud.

Contextual awareness—Ability to refer to past interactions with the user and identify the context of a query, if not provided.

Personalization—Option for users to configure preferences for language, response style, and so on.

Interactive troubleshooting—Guide users through a step-by-step problem-solving process by asking a sequence of questions. The answers to these questions help to identify the problem and suggest appropriate solutions.

Integrations—Work seamlessly with other tools like analytics platforms or productivity applications.

Self-learning—Ability to learn from user interactions and improve responses over time.

Multilingual capabilities—Program your chatbot to interact with users in multiple languages. Support all languages that the product documentation covers as part of localization.

Context-aware recommendations—Recommend related features or products to a user based on analysis of current and past interactions with a user.

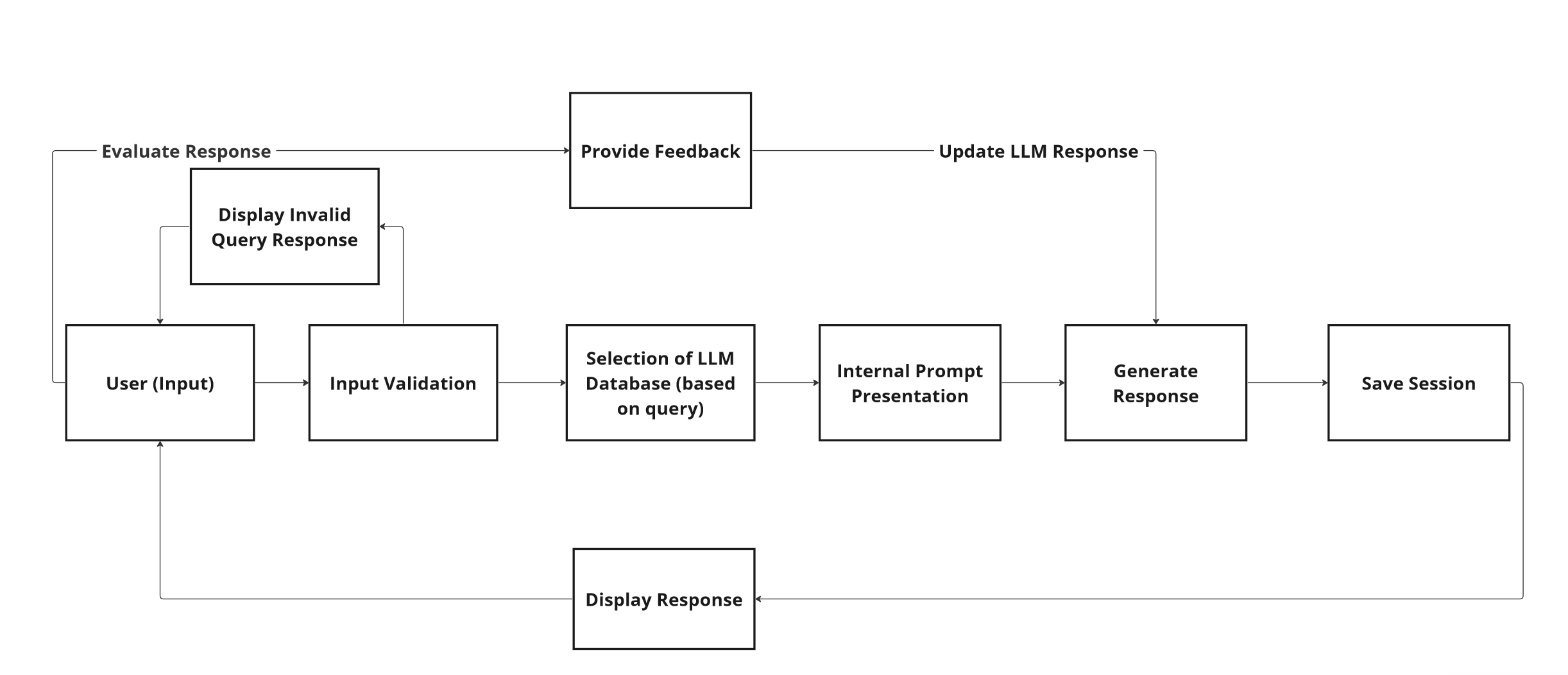

Workflow: Conversational Chatbots

Once the conversational chatbot for your product documentation is developed and deployed, users of your product interact with it through an interface. When a user submits an input or query, the AI-powered chatbot evaluates it.

Scenario A: If the query is invalid or out of scope for the chatbot, it will respond with an apology message.

Scenario B: If the query is valid, the chatbot evaluates the different LLMs it has access to and identifies the relevant one to fetch data for the user query. The query is then converted into an internal prompt to generate the response. The session is saved for later reference, and the response is displayed to the user.

Feedback mechanism: In both Scenario A and Scenario B, users can evaluate the response they received from the chatbot and provide feedback. The AI system saves the feedback for evaluation by those assigned to this task (SME or Admin). If the feedback is accepted, the LLM's response to the query is revised to include the learning. Note that only the response is revised; the content database is not updated. Hence, the documentation team must provision resources and time to update the product documentation so that the errors are fixed and it matches the revised LLM response.
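The workflow above can be sketched as a small Python class. Everything here is illustrative. Keyword sets stand in for the chatbot's scope check and for choosing which LLM or content source to use, `generate` is a placeholder for the real model call, and the prompt wording is made up. Note that an accepted review stores a revised response for the query and leaves `sources` (the content database) untouched, which matches the caveat about updating the documentation separately.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set, Tuple

APOLOGY = "Sorry, I can't help with that. Please ask a question about the product documentation."

@dataclass
class Feedback:
    query: str
    comment: str
    status: str = "pending"  # set to "accepted" or "rejected" by an SME or admin

@dataclass
class DocChatbot:
    sources: Dict[str, Set[str]]    # hypothetical: source name -> keywords it covers
    generate: Callable[[str], str]  # placeholder for the real LLM call
    sessions: List[Tuple[str, str]] = field(default_factory=list)
    feedback: List[Feedback] = field(default_factory=list)
    revised: Dict[str, str] = field(default_factory=dict)  # query -> accepted revised response

    def _pick_source(self, query: str) -> Optional[str]:
        """Return the best-matching source, or None if the query is out of scope."""
        words = set(re.findall(r"[a-z0-9]+", query.lower()))
        best = max(self.sources, key=lambda s: len(self.sources[s] & words), default=None)
        if best is None or not self.sources[best] & words:
            return None
        return best

    def ask(self, query: str) -> str:
        if query in self.revised:
            answer = self.revised[query]  # accepted feedback has revised this response
        else:
            source = self._pick_source(query)
            if source is None:
                answer = APOLOGY  # Scenario A: invalid or out of scope
            else:
                # Scenario B: turn the query into an internal prompt for the chosen source.
                prompt = f"Answer using only the '{source}' content.\nQuestion: {query}"
                answer = self.generate(prompt)
        self.sessions.append((query, answer))  # save the session for later reference
        return answer

    def submit_feedback(self, query: str, comment: str) -> int:
        """Store user feedback for review and return its index."""
        self.feedback.append(Feedback(query, comment))
        return len(self.feedback) - 1

    def review(self, index: int, accept: bool, revised_answer: str = "") -> None:
        """SME/admin decision; accepting revises the response, not the content database."""
        item = self.feedback[index]
        item.status = "accepted" if accept else "rejected"
        if accept:
            self.revised[item.query] = revised_answer

bot = DocChatbot(
    sources={"install-guide": {"install", "upgrade"}, "admin-guide": {"backup", "restore"}},
    generate=lambda prompt: f"[LLM response for] {prompt}",
)
print(bot.ask("What's the weather today?"))    # Scenario A: apology
print(bot.ask("How do I install the agent?"))  # Scenario B: generated
```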

Validate your Conversational AI Model

Off-topic queries—Ability to handle unrelated or irrelevant inquiries. Ideally, the chatbot should redirect the conversation back to the relevant topic or gracefully admit that it cannot answer.

Multiple questions in a single query—Capability to dissect a query containing several questions and respond to each appropriately. Check if the chatbot just answers the first question or gets confused.

Complex questions—Ability to understand and respond to complicated or multifaceted inquiries. Check if the chatbot is able to generate a nuanced or multi-step answer.

Typos, style, slang and so on—Recognize and correct spelling mistakes in user queries, before generating a response. Check the ability to understand the user’s intent, beyond typos, writing style, slang, and colloquial usages in a user input.
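For testing purposes, typo tolerance can be approximated by snapping each query word to the closest term in a vocabulary drawn from the documentation. This Python sketch uses the standard library's `difflib.get_close_matches`. The vocabulary and the 0.8 cutoff are assumptions. Note that the function also lower-cases the query and drops punctuation.

```python
import difflib
import re
from typing import List

# Hypothetical vocabulary harvested from the documentation set.
VOCABULARY: List[str] = ["install", "upgrade", "firmware", "password", "backup", "restore", "license"]

def correct_typos(query: str, vocabulary: List[str] = VOCABULARY, cutoff: float = 0.8) -> str:
    """Replace each word with its closest vocabulary term if the two are similar enough."""
    corrected = []
    for word in re.findall(r"\w+", query.lower()):
        match = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
        corrected.append(match[0] if match else word)
    return " ".join(corrected)

print(correct_typos("How do I instal the firmwre?"))  # how do i install the firmware
```

A high cutoff limits false corrections of ordinary words. Slang and colloquial phrasing still need the model itself, or a test set that covers them.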

Varied scenarios—Product use cases vary from customer to customer, though the product documentation that the LLM is trained on is generic. Check the ability to handle different scenarios that involve a wide variety of user problems or situations.

Redirection (links or references for additional coverage)—Provide further information in the form of links or references. This is relevant when the chatbot needs to point users to external resources for additional or more detailed information.

Hand-off to a human contact—Check the ability to identify scenarios where the bot is unable to assist further and needs to transfer the interaction to a human agent. This is vital in preventing customer frustration. Also, it adds value by ensuring that complex issues are well-handled.

K-fold cross-validation—Test your chatbot's ability to handle new data that is related to your existing dataset. Divide your entire content database into K subsets and train the chatbot on K-1 of them. Use the remaining subset, which the chatbot hasn't been trained on, to frame queries and see how gracefully it handles related but unseen data. Repeat the process, holding out a different subset each time, until every subset has been evaluated.
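The partitioning step can be sketched in Python as follows. The sketch only splits a hypothetical list of content items into K folds and yields the train/held-out pairs. Training the chatbot and framing queries from the held-out fold are left out.

```python
import random
from typing import Iterator, List, Tuple

def k_fold_splits(documents: List[str], k: int, seed: int = 0) -> Iterator[Tuple[List[str], List[str]]]:
    """Yield (train, held_out) pairs; every document is held out exactly once."""
    if not 2 <= k <= len(documents):
        raise ValueError("k must be between 2 and the number of documents")
    shuffled = documents[:]
    random.Random(seed).shuffle(shuffled)  # avoid folds that follow the doc outline
    folds = [shuffled[i::k] for i in range(k)]
    for i, held_out in enumerate(folds):
        train = [doc for j, fold in enumerate(folds) if j != i for doc in fold]
        yield train, held_out

topics = [f"topic-{n}" for n in range(10)]  # hypothetical content database items
for train, held_out in k_fold_splits(topics, k=5):
    print(len(train), "training topics; querying on", held_out)
```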

Documentation Strategy for Conversational Chatbots

Try to author your documentation so that it contains clear instructions and maintains a user-friendly tone. Some of the key elements that can influence the performance of your conversational chatbot are:

Product documentation as training data—Product documentation provides training data for the LLM and therefore determines the chatbot's performance. Hence, it's necessary to provide accurate and comprehensive data during the training phase of an LLM.

Volunteering for chatbot training—Documentation teams often act as the bridge between development and customer experience, because they have analyzed the different use cases for each function of a feature. When a chatbot is being trained for a product documentation site, this knowledge is an invaluable asset. Knowing the multiple use cases helps train the chatbot for the various ways or angles in which a user may phrase questions about a feature. Hence, it is recommended that doc team members volunteer for chatbot training.

Multi-tiered responses—Sometimes, users need more detailed information than the chatbot initially provides. In your documentation, consider including layered answers that the bot can provide on request. This allows the bot to deliver more context and depth when asked.

Use case documentation—Product documentation typically steers clear of scenario-based content that addresses varied customer situations. For conversational chatbots, however, such content can be a good value-add, as it enables the bot to satisfactorily answer queries about specific scenarios.

Flow of information—Plan the flow of content in a doc topic to match the logical flow of information that a user needs to understand or perform a product-related task. The content flow then matches the flow of information in a chatbot response to a user query. Thus, content that is mindful of chatbot requirements leads to a better product documentation experience for the customer.

Defining chatbot guidelines—Ethical, inclusive, and legal considerations are critical for businesses today, especially if your bot handles personal data or talks to people across the globe. Doc teams should be involved in training the chatbot so that the ground rules they have maintained over the years are included in the chatbot training as well. Documentation teams must also specify how the bot should handle privacy-related situations and comply with data protection regulations such as the EU General Data Protection Regulation (GDPR).

Error handling—When the chatbot receives queries it doesn't understand or returns an incorrect response, the causes must be investigated internally so that the chatbot's performance can be improved. Such an investigation typically looks into the following areas:

Content database accuracy (errors in doc coverage)

Missing content (lack of doc coverage)

Ability of chatbot to retrieve and present information (lack of chatbot training)

AI hallucinations

Hence, the documentation team must be well aware of the doc coverage it provides, and of where coverage is lacking. In such an investigation, every keyword of the official documentation is under scrutiny, so the content must be of the highest quality.

Additional Writing Guidelines

Several guidelines that are widely followed for product documentation also benefit content authored for conversational chatbots:

Clear

Minimalist

Conversational

Consistent

Keyword, tag driven

Periodically revised

Adhering to these guidelines ensures consistency in tone and coverage and boosts user satisfaction. In essence, drafting product documentation for conversational chatbots requires both continuing existing best practices and the flexibility to adopt new approaches that can increase customer satisfaction.

Written by

Adityan S

Senior Content Specialist.