A Tale of Two VLANs

Jay Miracola


When handling sensitive traffic (DNS, in my case), it's sometimes necessary to keep traffic from different networks isolated from one another. In this example, I wanted a single DNS server on my Kubernetes cluster to serve two VLANs without giving either VLAN any access to the other. I also wanted a single pane of glass for observing requests from both VLANs. Both VLANs reach the server over a single trunk. Instead of splitting the service, I split the traffic using MetalLB.

To get started, I needed to choose between MetalLB's BGP and Layer 2 (ARP) modes. I chose Layer 2 because the router in question doesn't support BGP out of the box. Next, I needed to configure the server with a VLAN interface for the second, non-default VLAN (tag 12) on top of the physical port, eth0.

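    auto eth0.12
    iface eth0.12 inet static
        address 172.16.12.10
        netmask 255.255.255.0
        network 172.16.12.0
        broadcast 172.16.12.255
        # Normally only one interface should carry a default gateway;
        # drop this line if eth0 already provides one.
        gateway 172.16.12.1
        dns-nameservers 1.1.1.1 8.8.4.4
        vlan_raw_device eth0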
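The network and broadcast lines aren't independent settings: they follow from the address and netmask. As a minimal sketch, Python's standard ipaddress module can derive them, which is a quick way to double-check a stanza like the one above (for 172.16.12.10 with 255.255.255.0, the network address is 172.16.12.0, not the gateway address). The helper names are hypothetical.

```python
import ipaddress


def derive_subnet(address: str, netmask: str) -> dict:
    """Return the network, broadcast, and prefix length implied by an address and netmask."""
    # IPv4Interface accepts "address/netmask" with a dotted-quad mask.
    net = ipaddress.IPv4Interface(f"{address}/{netmask}").network
    return {
        "network": str(net.network_address),
        "broadcast": str(net.broadcast_address),
        "prefixlen": net.prefixlen,
    }


def gateway_is_on_link(address: str, netmask: str, gateway: str) -> bool:
    """True if the gateway lies inside the interface's subnet."""
    net = ipaddress.IPv4Interface(f"{address}/{netmask}").network
    return ipaddress.IPv4Address(gateway) in net


if __name__ == "__main__":
    # Values from the eth0.12 stanza above.
    print(derive_subnet("172.16.12.10", "255.255.255.0"))
    # {'network': '172.16.12.0', 'broadcast': '172.16.12.255', 'prefixlen': 24}
    print(gateway_is_on_link("172.16.12.10", "255.255.255.0", "172.16.12.1"))  # True
```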

Now the server can reach both the default VLAN and the newly configured VLAN 12 (via eth0.12) over the same physical interface.

    $ arping 172.16.12.5 -I eth0.12
    ARPING 172.16.12.5
    60 bytes from 9c:30:5b:06:6d:f5 (172.16.12.5): index=0 time=117.228 msec
    56 bytes from 9c:30:5b:06:6d:f5 (172.16.12.5): index=1 time=39.202 msec
    56 bytes from 9c:30:5b:06:6d:f5 (172.16.12.5): index=2 time=132.372 msec
    56 bytes from 9c:30:5b:06:6d:f5 (172.16.12.5): index=3 time=72.073 msec

    $ ping 172.16.12.5 -I eth0.12
    PING 172.16.12.5 (172.16.12.5) from 172.16.12.10 eth0.12: 56(84) bytes of data.
    64 bytes from 172.16.12.5: icmp_seq=1 ttl=255 time=56.1 ms
    64 bytes from 172.16.12.5: icmp_seq=2 ttl=255 time=8.98 ms
    64 bytes from 172.16.12.5: icmp_seq=3 ttl=255 time=13.5 ms
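What keeps the two VLANs apart on the shared trunk is the 802.1Q tag: the kernel adds a tag carrying VLAN ID 12 to frames sent out eth0.12 and strips it from tagged frames it receives, while default-VLAN traffic arrives on eth0 (presumably untagged, as the trunk's native VLAN). The sketch below packs and parses that 4-byte tag with only the standard library. It is illustrative and not part of the setup; the helper names and the sample frame are hypothetical.

```python
import struct
from typing import Optional

TPID_8021Q = 0x8100  # EtherType value marking an 802.1Q-tagged frame


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Pack a 4-byte 802.1Q tag: 16-bit TPID, then 3-bit PCP, 1-bit DEI, 12-bit VID."""
    if not 1 <= vid <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0..7 and DEI 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> dict:
    """Split a 4-byte 802.1Q tag back into its PCP, DEI, and VID fields."""
    tpid, tci = struct.unpack("!HH", tag[:4])
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"pcp": tci >> 13, "dei": (tci >> 12) & 0x1, "vid": tci & 0x0FFF}


def vlan_of_frame(frame: bytes) -> Optional[int]:
    """Return the VLAN ID of an Ethernet frame, or None if it is untagged."""
    # The tag follows the 6-byte destination MAC and the 6-byte source MAC.
    if len(frame) >= 16 and frame[12:14] == struct.pack("!H", TPID_8021Q):
        return parse_vlan_tag(frame[12:16])["vid"]
    return None


if __name__ == "__main__":
    # Hypothetical broadcast frame on VLAN 12 whose inner EtherType is ARP (0x0806).
    frame = b"\xff" * 6 + bytes.fromhex("9c305b066df5") + build_vlan_tag(12) + b"\x08\x06"
    print(vlan_of_frame(frame))  # 12
```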

Next, I need to tell MetalLB about the IP ranges I've reserved for it on my network so it only assigns addresses from those ranges. I'll set up two separate pools rather than adding a second range to a single pool, so that each pool can be tied to its own L2 advertisement in the next step.

    apiVersion: metallb.io/v1beta1
    kind: IPAddressPool
    metadata:
      name: public-pool
      namespace: metallb
    spec:
      addresses:
      - 172.16.12.10-172.16.12.15
    ---
    apiVersion: metallb.io/v1beta1
    kind: IPAddressPool
    metadata:
      name: private-pool
      namespace: metallb
    spec:
      addresses:
      - 192.168.120.20-192.168.120.30
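Before applying the pools, a quick sanity check that each range sits inside its VLAN's subnet and that the ranges don't overlap can save some debugging later. The sketch below does that with Python's ipaddress module. It only understands the first-last range form (MetalLB also accepts CIDR notation), the helper names are hypothetical, and the 192.168.120.0/24 subnet for the default VLAN is an assumption, since the post doesn't state that VLAN's prefix length.

```python
import ipaddress
from typing import Dict, List, Tuple

Range = Tuple[ipaddress.IPv4Address, ipaddress.IPv4Address]


def parse_range(spec: str) -> Range:
    """Parse a MetalLB-style 'first-last' range; CIDR entries are not handled here."""
    first, last = (ipaddress.IPv4Address(part.strip()) for part in spec.split("-"))
    if first > last:
        raise ValueError(f"range {spec!r} is reversed")
    return first, last


def range_size(spec: str) -> int:
    first, last = parse_range(spec)
    return int(last) - int(first) + 1


def in_subnet(spec: str, subnet: str) -> bool:
    net = ipaddress.IPv4Network(subnet)
    first, last = parse_range(spec)
    return first in net and last in net


def overlaps(a: str, b: str) -> bool:
    a_first, a_last = parse_range(a)
    b_first, b_last = parse_range(b)
    return a_first <= b_last and b_first <= a_last


def check_pools(pools: Dict[str, Tuple[str, str]]) -> List[str]:
    """Return human-readable problems; an empty list means the pools look sane."""
    problems = []
    for name, (spec, subnet) in pools.items():
        if not in_subnet(spec, subnet):
            problems.append(f"{name}: {spec} is outside {subnet}")
    names = list(pools)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if overlaps(pools[a][0], pools[b][0]):
                problems.append(f"{a} overlaps {b}")
    return problems


POOLS = {
    "public-pool": ("172.16.12.10-172.16.12.15", "172.16.12.0/24"),
    # Assumption: the default VLAN's prefix length isn't stated in the post; /24 is a guess.
    "private-pool": ("192.168.120.20-192.168.120.30", "192.168.120.0/24"),
}

if __name__ == "__main__":
    for name, (spec, _) in POOLS.items():
        print(name, range_size(spec), "addresses")
    print(check_pools(POOLS) or "no problems found")
```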

Now I need to set up the L2 advertisements, tying each one to the correct interface and to the matching IP pool from above.

    apiVersion: v1
    items:
    - apiVersion: metallb.io/v1beta1
      kind: L2Advertisement
      metadata:
        name: public-l2
        namespace: metallb
      spec:
        interfaces:
        - eth0.12
        ipAddressPools:
        - public-pool
    kind: List
    ---
    apiVersion: v1
    items:
    - apiVersion: metallb.io/v1beta1
      kind: L2Advertisement
      metadata:
        name: private-l2
        namespace: metallb
      spec:
        interfaces:
        - eth0
        ipAddressPools:
        - private-pool
    kind: List
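To make the relationship between the two resources concrete, here is a simplified model of how they tie together: an IP is matched to the pool whose range contains it, and every L2Advertisement listing that pool contributes its interfaces. This is an illustrative sketch with hypothetical helper names, not MetalLB's actual implementation, which also handles node selection, CIDR ranges, and other advertisement fields.

```python
import ipaddress
from typing import Dict, List, Optional

# Hypothetical in-memory copies of the IPAddressPools and L2Advertisements above.
POOLS: Dict[str, List[str]] = {
    "public-pool": ["172.16.12.10-172.16.12.15"],
    "private-pool": ["192.168.120.20-192.168.120.30"],
}
ADVERTISEMENTS = [
    {"name": "public-l2", "interfaces": ["eth0.12"], "ipAddressPools": ["public-pool"]},
    {"name": "private-l2", "interfaces": ["eth0"], "ipAddressPools": ["private-pool"]},
]


def pool_for(ip: str) -> Optional[str]:
    """Name of the pool whose 'first-last' range contains ip, or None."""
    addr = ipaddress.IPv4Address(ip)
    for name, ranges in POOLS.items():
        for spec in ranges:
            first, last = (ipaddress.IPv4Address(p.strip()) for p in spec.split("-"))
            if first <= addr <= last:
                return name
    return None


def interfaces_for(ip: str) -> List[str]:
    """Interfaces this simplified model would announce ip on (empty if no pool matches)."""
    pool = pool_for(ip)
    result: List[str] = []
    if pool is None:
        return result
    for adv in ADVERTISEMENTS:
        if pool in adv["ipAddressPools"]:
            for iface in adv["interfaces"]:
                if iface not in result:
                    result.append(iface)
    return result


if __name__ == "__main__":
    for ip in ("172.16.12.10", "192.168.120.25", "10.0.0.1"):
        print(ip, "->", pool_for(ip), interfaces_for(ip))
```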

MetalLB's speaker logs should now show each pool and advertisement tied to the correct interface. Without the interface selectors, the speaker logs suggested it was guessing which interface to use, and that didn't work out well in this configuration. Next, I need to configure my services so that each one gets an IP from the right range when requesting type: LoadBalancer on its interface. For brevity, I'll only show the service for the VLAN-tagged interface. The other service matches the same application, ports, and so on, but serves the default VLAN.

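    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: dns-server
      name: dns-public
      namespace: dns-server
    spec:
      allocateLoadBalancerNodePorts: true
      # Local preserves the client source IP, which helps when observing requests.
      externalTrafficPolicy: Local
      internalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      # spec.loadBalancerIP is deprecated as of Kubernetes v1.24; MetalLB also
      # accepts the metallb.universe.tf/loadBalancerIPs annotation instead.
      loadBalancerIP: 172.16.12.10
      ports:
      - name: dns
        port: 53
        targetPort: dns        # protocol defaults to TCP
      - name: dns-udp
        port: 53
        protocol: UDP
        targetPort: dns-udp
      selector:
        app: dns-server
        release: dns-server
      type: LoadBalancer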
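Because the second Service differs from this one only in its name and requested IP, it can be generated rather than copied by hand. The sketch below transcribes the dns-public Service into a Python dict and derives the default-VLAN Service from it. The name dns-private and the IP 192.168.120.20 are hypothetical, since the post doesn't show that Service; any free address from private-pool would do.

```python
import copy
import json

# The dns-public Service above, transcribed as a plain dict.
PUBLIC_SERVICE = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {
        "labels": {"app": "dns-server"},
        "name": "dns-public",
        "namespace": "dns-server",
    },
    "spec": {
        "allocateLoadBalancerNodePorts": True,
        "externalTrafficPolicy": "Local",
        "internalTrafficPolicy": "Cluster",
        "ipFamilies": ["IPv4"],
        "ipFamilyPolicy": "SingleStack",
        "loadBalancerIP": "172.16.12.10",
        "ports": [
            {"name": "dns", "port": 53, "targetPort": "dns"},
            {"name": "dns-udp", "port": 53, "protocol": "UDP", "targetPort": "dns-udp"},
        ],
        "selector": {"app": "dns-server", "release": "dns-server"},
        "type": "LoadBalancer",
    },
}


def derive_service(base: dict, name: str, load_balancer_ip: str) -> dict:
    """Copy base, changing only the Service name and the requested LoadBalancer IP."""
    svc = copy.deepcopy(base)  # deep copy so the base's nested dicts stay untouched
    svc["metadata"]["name"] = name
    svc["spec"]["loadBalancerIP"] = load_balancer_ip
    return svc


# Hypothetical values: the post doesn't show the second Service.
private = derive_service(PUBLIC_SERVICE, "dns-private", "192.168.120.20")

if __name__ == "__main__":
    # kubectl accepts JSON manifests, e.g. `python gen.py | kubectl apply -f -`
    # (gen.py being whatever you name this script).
    print(json.dumps(private, indent=2))
```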

From here, everything is up and working. Verifying could be as simple as running nslookup against the appropriate IPs, or running arping again to confirm the L2 advertisements are working. Instead, let's use ksniff, a kubectl plugin, to observe the traffic on the speaker in the cluster. Here is an example of what that command might look like:

    kubectl sniff -n metallb metallb-speaker -p

In my scenario, it was a bit more involved. Because I'm running k3s on ARM, I needed to specify the containerd socket and use ARM-compatible images. I'm also going to filter for just the traffic I'm interested in (ARP). Just in case you're curious, here's what that looks like:

    kubectl sniff -n metallb argo-metallb-speaker-nprwp \
      --socket /run/k3s/containerd/containerd.sock \
      -p \
      --image ghcr.io/nefelim4ag/ksniff-helper:v4 \
      --tcpdump-image ghcr.io/nefelim4ag/tcpdump:latest \
      -f "arp"

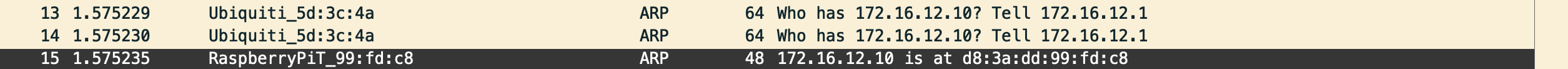

Above, we can see ARP requests being answered with the appropriate IP and MAC. MetalLB is now announcing each service IP on the correct VLAN, so requests from both VLANs reach the same DNS server in Kubernetes through their respective services, and all external requests can be observed in a single place.
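For reference, each ARP packet in a capture like this carries a 28-byte payload. The sketch below packs and parses that payload (the RFC 826 layout for Ethernet and IPv4) to show which fields hold "the appropriate IP and MAC": in a reply for a LoadBalancer IP, the sender IP should be the service IP and the sender MAC should belong to the speaker node's interface on that VLAN. The node MAC 02:00:00:00:00:01 is a made-up placeholder and the helper names are hypothetical; 9c:30:5b:06:6d:f5 and 172.16.12.5 come from the arping test earlier.

```python
import ipaddress
import struct

ARP_FORMAT = "!HHBBH6s4s6s4s"  # RFC 826 layout for Ethernet/IPv4: 28 bytes
OPS = {1: "request", 2: "reply"}


def _fmt_mac(raw: bytes) -> str:
    return ":".join(f"{b:02x}" for b in raw)


def build_arp(op: int, sender_mac: str, sender_ip: str, target_mac: str, target_ip: str) -> bytes:
    """Pack an Ethernet/IPv4 ARP payload (without the Ethernet header)."""
    return struct.pack(
        ARP_FORMAT, 1, 0x0800, 6, 4, op,
        bytes.fromhex(sender_mac.replace(":", "")),
        ipaddress.IPv4Address(sender_ip).packed,
        bytes.fromhex(target_mac.replace(":", "")),
        ipaddress.IPv4Address(target_ip).packed,
    )


def parse_arp(payload: bytes) -> dict:
    """Unpack an Ethernet/IPv4 ARP payload into readable fields."""
    htype, ptype, hlen, plen, op, sha, spa, tha, tpa = struct.unpack(ARP_FORMAT, payload[:28])
    if (htype, ptype, hlen, plen) != (1, 0x0800, 6, 4):
        raise ValueError("not an Ethernet/IPv4 ARP payload")
    return {
        "op": OPS.get(op, str(op)),
        "sender_mac": _fmt_mac(sha),
        "sender_ip": str(ipaddress.IPv4Address(spa)),
        "target_mac": _fmt_mac(tha),
        "target_ip": str(ipaddress.IPv4Address(tpa)),
    }


# Who-has 172.16.12.10? asked by the 172.16.12.5 host, then the answer from the node.
request = build_arp(1, "9c:30:5b:06:6d:f5", "172.16.12.5", "00:00:00:00:00:00", "172.16.12.10")
reply = build_arp(2, "02:00:00:00:00:01", "172.16.12.10", "9c:30:5b:06:6d:f5", "172.16.12.5")

if __name__ == "__main__":
    print(parse_arp(request))
    print(parse_arp(reply))
```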
