A Small Door to the Federated Learning Approach: Decentralised Data

BDL Fadoua


Introduction:

Deep learning methods need a huge amount of training data, and this raises serious privacy concerns. The training data may contain sensitive information that malicious actors could exploit. Once the data has been collected, users typically cannot delete it or even restrict how it is used. Privacy protection therefore has to be considered whenever deep learning methods are used.

At the same time, advances in deep learning have greatly changed what is possible in long-standing Artificial Intelligence tasks such as image, speech, and text recognition. Big companies such as Google, Facebook, and Apple have deployed deep learning on a large scale. They could do so because of the amount of data they collect from their users and the vast computational power of their GPU farms. The resulting models are unprecedentedly accurate, so they serve as the foundation of many new services and applications. Examples include accurate speech recognition and image recognition that outperforms humans.

To reconcile these needs, Google introduced Federated Learning in 2017. It is an approach to training machine learning models on private data without collecting that data in a centralized location. The training data stays distributed on users' devices. Each device still contributes to a shared model by sending back model updates instead of the data used for training.

Definition:

The broader definition of federated learning, as given by Kairouz, McMahan et al. in "Advances and Open Problems in Federated Learning", is: "Federated Learning is a machine learning setting where multiple entities (clients) collaborate in solving a machine learning problem, under the coordination of a central server or service provider. Each client’s raw data is stored locally and not exchanged or transferred; instead, focused updates intended for immediate aggregation are used to achieve the learning objective."

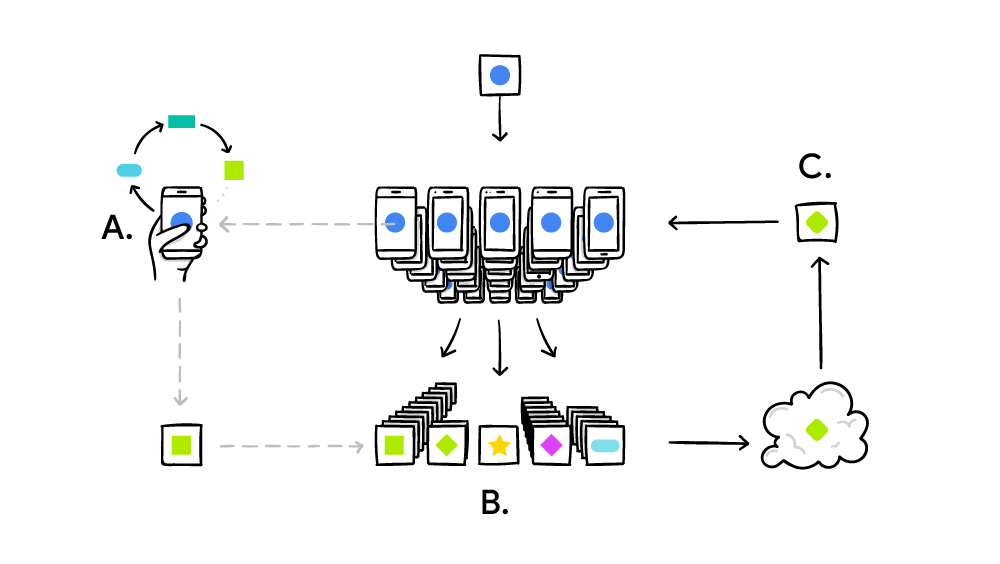

In the figure, A represents different mobile devices. Each device trains its model on private data that is generated and stored on that same device. Each trained model then produces an update B, and these updates are used to improve the global model C. The process repeats until the global model reaches high quality.
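The loop described above (local training A, updates B, global model C, repeat) can be sketched as a toy FedAvg-style simulation. This is a minimal sketch under the following assumptions:

- The shared model is a 1-D linear regressor `(w, b)` instead of a deep network.
- Each hypothetical "device" holds private samples of y = 3x + 1 plus noise, drawn from a different x range.
- The helper names (`make_client_data`, `local_train`, `federated_round`, `train`) are illustrative only.

The server only ever receives model parameters, never the raw `(x, y)` pairs.

```python
import random

# Hypothetical toy setup: each "device" holds private (x, y) pairs from y = 3x + 1 + noise,
# and the shared model is a 1-D linear regressor (w, b). Real systems train deep networks.

def make_client_data(rng, n, lo, hi):
    """Generate n private examples for one device; x is drawn from [lo, hi] (non-IID split)."""
    data = []
    for _ in range(n):
        x = rng.uniform(lo, hi)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.05)))
    return data

def local_train(model, data, lr=0.05, epochs=5):
    """Step A: plain SGD on the squared error, using only this device's data."""
    w, b = model
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * 2.0 * err * x   # d(err^2)/dw = 2 * err * x
            b -= lr * 2.0 * err       # d(err^2)/db = 2 * err
    return w, b

def federated_round(global_model, clients):
    """Steps B and C: each device returns its locally trained parameters (its update),
    and the server averages them, weighting device k by n_k / n as FedAvg does."""
    n = sum(len(data) for data in clients)
    new_w, new_b = 0.0, 0.0
    for data in clients:
        w_k, b_k = local_train(global_model, data)  # raw data stays inside this call
        new_w += len(data) / n * w_k
        new_b += len(data) / n * b_k
    return new_w, new_b

def train(rounds=20, seed=0):
    """Run several federated rounds starting from a zero-initialized global model."""
    rng = random.Random(seed)
    clients = [
        make_client_data(rng, 20, 0.0, 0.5),
        make_client_data(rng, 30, 0.3, 0.8),
        make_client_data(rng, 50, 0.5, 1.0),
    ]
    model = (0.0, 0.0)
    for _ in range(rounds):  # repeat the round until the global model is good enough
        model = federated_round(model, clients)
    return model

if __name__ == "__main__":
    w, b = train()
    print(f"w = {w:.3f}, b = {b:.3f}")  # should be close to the true w = 3, b = 1
```

Weighting each device by its share of the data (`len(data) / n`) means devices with more examples pull the global model harder. Replacing the linear model with a neural network would only change `local_train`. The round structure stays the same.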

Algorithm:

The decentralized approach to federated learning of deep networks is based on iterative model averaging. McMahan et al. present it as a practical method for federated learning of deep networks and evaluate its efficiency. It is formulated as follows:

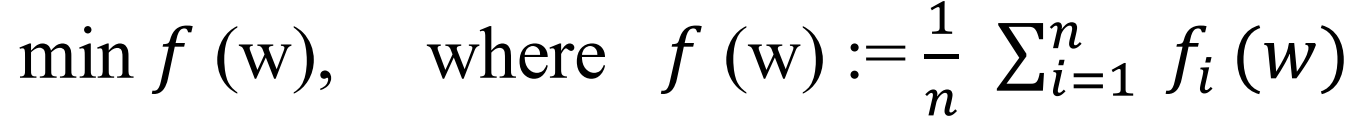

This equation defines the objective function for decentralized learning. The global model parameters are optimized using updates computed locally at multiple data sources. FedAvg solves this problem iteratively. In each round, clients run several steps of gradient descent on their own data. The server then updates the global model parameters by averaging the resulting client models (not raw gradients), weighted by the amount of data each client holds. The objective function consists of two terms: the first is the average of the local objective functions of all clients, and the second is a regularization term. The goal is to minimize this objective to obtain model parameters that generalize well on the data from all clients.
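For reference, here is a LaTeX version of the objective and the server update as McMahan et al. write them for FederatedAveraging. The notation is as follows:

- K is the number of clients.
- Client k holds the n_k example indices in the set P_k.
- n = Σ n_k is the total number of examples.
- f_i(w) is the loss on example i.

Treat this as a sketch of the standard formulation. The notation may not match the figure above exactly, and this basic form has no separate regularization term.

$$
\min_{w \in \mathbb{R}^d} f(w), \qquad
f(w) = \sum_{k=1}^{K} \frac{n_k}{n} F_k(w), \qquad
F_k(w) = \frac{1}{n_k} \sum_{i \in \mathcal{P}_k} f_i(w)
$$

In round t, each participating client k in the set S_t starts from the current global model w_t and runs local SGD to obtain w_{t+1}^k. The server then forms the weighted average:

$$
w_{t+1} = \sum_{k \in S_t} \frac{n_k}{m_t}\, w_{t+1}^{k}, \qquad m_t = \sum_{k \in S_t} n_k
$$

When every client participates in a round, m_t = n, and the weights n_k / m_t match the n_k / n weights in the objective.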

Conclusion:

Federated learning is a promising approach to privacy concerns and data ownership issues in machine learning. It can be highly effective because it lets a model be trained on a large amount of data. The model learns from many sources without the data itself being pooled in one place, which helps overcome the challenge of limited datasets. Federated learning therefore offers a promising way to develop models that accurately interpret and generate text without centralizing users' data. However, further research and development are needed to fully realize its potential for models that are both accurate and robust.
