Any metric in Data Science - why should I be bothered?

Raj Kulkarni

Raj Kulkarni

Metrics in data science provide a way to measure the performance of your models and algorithms. They provide a quantifiable means to understand the effectiveness of your approach, and they can guide you in improving your models. Without metrics, you would be shooting in the dark, unsure of whether your model is improving, stagnating, or even degrading. Hence, understanding and using appropriate metrics is crucial in any data science project.

The project I'm currently engaged in appears straightforward - Fraud Detection across diverse payment methods. At first glance, one might assume it's a simple task. However, upon adopting a Data Science perspective, I realized its complexity.

Put it in simple words, I just made sure every step was taken from a business’ point-of-view and every step had inference and observations on which the next step would depend.

[And by the way, this dataset had over 6 million data records which I further augmented to 12+ million rows. So in “no-way” is it biased/imbalanced]

Coming to the main point of this article.

At the core of this article lies a pivotal realization that reshaped my understanding of Data Science. Until recently, I firmly believed that achieving high accuracy, precision, and recall in a model meant there was no need for further analysis. Even when different machine learning models yielded similar results, I saw little value in examining the metrics closely.

While I grasped the importance of metrics like precision, recall, and F1-score, I failed to appreciate their significance when outcomes were consistent across models. However, I've since learned a valuable lesson—I was mistaken in my assumptions.

So for this project I decided to go the bottom-to-top approach thereby using ML algorithms from classical to advance ensembling to neural network.

Once I fit my logistic regression model, I calculated all the possible metrics to make myself feel better.

Metrics were as follows -

|| Accuracy || = 0.9849088516357969

|| Cross-Validated Accuracy || -

1. On train set = 0.9848923636432019

2. On test set = 0.9849563294611317

|| Classification Report || -

precision recall f1-score support

0 0.99 0.97 0.98 1905929

1 0.98 0.99 0.99 1906372

accuracy 0.98 3812301

macro avg 0.99 0.98 0.98 3812301

weighted avg 0.99 0.98 0.98 3812301

|| Confusion Matrix || -

array([[1857935, 47994],

[ 9538, 1896834]], dtype=int64)

Upon examining the confusion matrix, it becomes evident that the count of FN (False Negative) instances stands at 9538. While this figure may appear relatively low compared to the total data points in the training set, it remains a critical aspect that demands attention due to its potential implications for customers.

The second model I used was XgBoost - without and with hyperparameter tuned.

Metrics for vanilla XgBoost were as follows -

|| Accuracy || = 0.9985588755977033

|| Cross-Validated Accuracy with Stratified K-Fold || -

1. On train set = 0.9985927508954594

2. On test set = 0.9984814944882221

|| Classification Report || -

precision recall f1-score support

0 1.00 1.00 1.00 1905929

1 1.00 1.00 1.00 1906372

accuracy 1.00 3812301

macro avg 1.00 1.00 1.00 3812301

weighted avg 1.00 1.00 1.00 3812301

|| Confusion Matrix || -

array([[1901579, 4350],

[ 1144, 1905228]], dtype=int64)

By simply modifying the model, the FN values have decreased further.

The parameters for XgBoost were selected by keeping in mind the impact every hyperparameter would have on the final result.

# Final result of best hyperparameters in the XgBoost model

hyp_model.best_params_

"""

{'n_estimators': 500,

'min_child_weight': 6,

'max_depth': 6,

'learning_rate': 0.3,

'gamma': 0.3}

"""

Now comes the fun part! Metrics for hyperparameter tuned XgBoost were as follows -

|| Accuracy || = 0.9993117017780075

|| Cross-Validated Accuracy with Stratified K-Fold || -

1. On train set = 0.9992708565494539

2. On test set = 0.9990291952204873

|| Classification Report || -

precision recall f1-score support

0 1.00 1.00 1.00 1905929

1 1.00 1.00 1.00 1906372

accuracy 1.00 3812301

macro avg 1.00 1.00 1.00 3812301

weighted avg 1.00 1.00 1.00 3812301

|| Confusion Matrix || -

array([[1903588, 2341],

[ 283, 1906089]], dtype=int64)

The parameterized XgBoost has a total of only 283 FN values.

Comparison between all 3 models :

Logistic model - FN = 9538 → 9538 records were fraud transaction classified as legitimate.

Vanilla XgBoost - FN = 1144 → 1144 records were fraud transaction classified as legitimate.

Parameterized XgBoost - FN = 283 → 283 records were fraud transaction classified as legitimate.

This is a very important point to note that even if our accuracy, or even precision % to that matter, increased by 1% or even 0.00001%, the number of records which were dangerously classified otherwise (FN) were reduced drastically to make a better and generalized model for this domain.

So the more we deep dive and see the magic of models, of course having a before-hand understanding of the model itself, the more we see how they effect real life businesses.

Why did I choose only FN values and not FP?

First we need to understand what is the fundamental difference between FN and FP with this data source.

FP are the ones were a legitimate transaction has happened but is classified as Fraud.

FN are the ones were a fraud transaction has happened but is classified as legitimate.Consider this scenario: which situation do you believe poses a greater risk to the customer? If a legitimate transaction from my bank account is mistakenly flagged as fraud, I can simply contact the bank and clarify the issue or, ideally, disregard it altogether. However, if an unauthorized individual debits funds from my bank account, the repercussions are far more severe. In such a case, I would need to alert the bank, involve law enforcement, and even then, there's no guarantee of recovering the lost funds.

So the major emphasis is on FN values (or recall) instead of FP (precision).

Of course the accuracy increase is important, the classification report is important, but the important point is did we make a model that would effectively be a solution for the problem statement we are working on. This is exactly what AI (especially Data Science) is about.

80% of the problem statement is understanding the result and inference at every turn and act accordingly. This would include Data Analysis, Engineering and inferences from every metric and acting accordingly.

Important points to sum up this article -

It is imperative for AI and Data Science projects to prioritize the business aspect over model algorithms.

The metrics we choose are very important as this would decide how well the model is behaving when its idea is extrapolated and applied to the colossal population and its respective real-time data.

Notably, the decrease in false positives (FP) alongside false negatives (FN) contributes to maintaining customer trust.

I hope this blog ignited your curiosity. Remember, learning is a journey, not a destination. Keep exploring and asking questions! Happy Learning :)

For any suggestions or just a chat,

Raj Kulkarni - LinkedIn

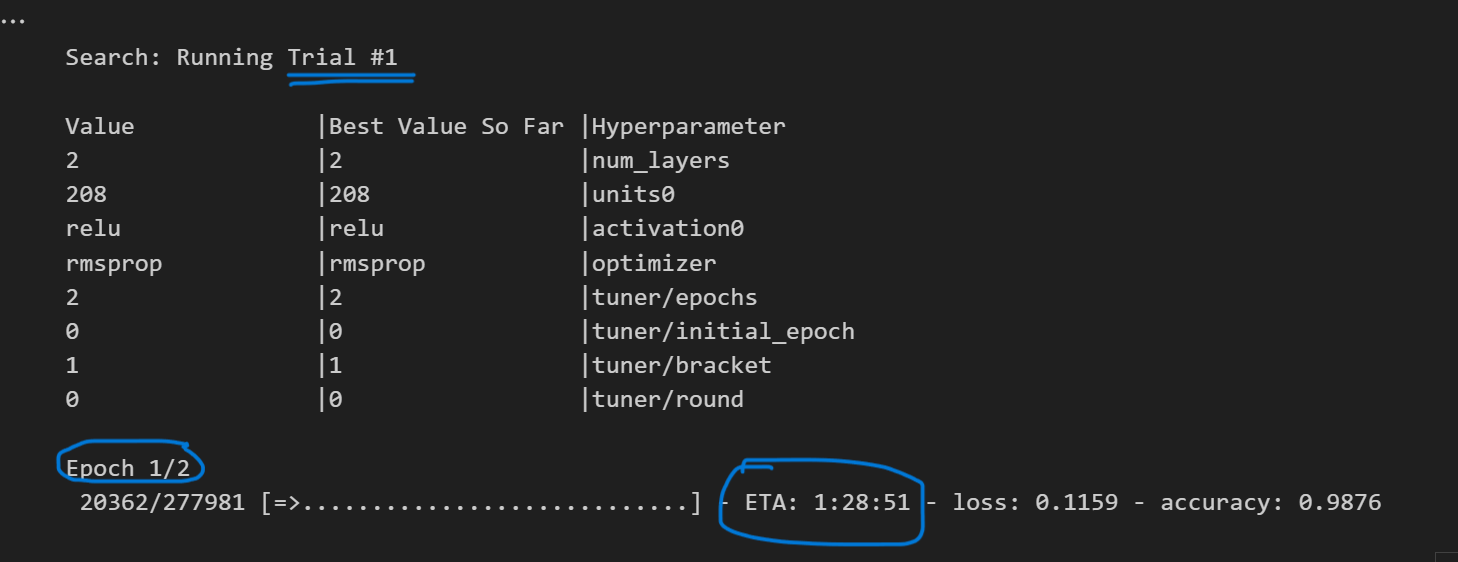

P.S. - 1 → I am still doing my neural network for this project to see how the data is being handled by deep learning models.

P.S. - 2 → I wrote this article when I had my neural network running for searching it’s parameters. I have 1 hour and 28 minutes left and it is still in its 1st epoch of the 1st trial. There are still 25 more epochs to go! :’)

Subscribe to my newsletter

Read articles from Raj Kulkarni directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Raj Kulkarni

Raj Kulkarni

I like to write, talk and discuss about AI, Data Science and its sub-domains. I always prefer learning in a fun-way.