Basic terminology related to Kubernetes

Harshit Sharma

This is the first article in the K8s series. In it, we are going to cover:

Monolithic vs Microservice architecture

Containers - Docker

Container Orchestration Engines - Kubernetes

Going through these topics should build up a basic knowledge of the important terminology before we dive deep into Kubernetes itself.

Monolithic vs Microservice Architecture

Going by the word itself, monolithic means something formed of a single large block. In IT, a monolithic architecture means that the whole application is built, packaged and deployed as a single self-contained unit. This is the traditional way of developing applications. Monolithic architecture has some disadvantages:

The code base is often very large.

Scaling is difficult, because the whole application has to be scaled even when only one part of it is under load.

Adding features is a complex task.

The technology stack is tied to the initial decision.

In a microservice architecture, the application is made up of multiple small services instead of one piece, and each service handles a specific concern. This addresses the disadvantages of the monolithic architecture:

It provides flexibility in scaling.

It gives technological freedom (different services can be developed using different technologies).

Each service can be updated, deployed and scaled independently and quickly to meet demand.
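To make the scaling difference concrete, here is a minimal sketch in Python. The component names, memory figures and replica counts are made-up numbers chosen for illustration; they do not come from any real system.

```python
from typing import Dict

# Hypothetical memory footprint (in MB) of each component of a shop application.
COMPONENT_MEMORY_MB = {"catalog": 300, "cart": 200, "payments": 500}


def monolith_memory(replicas: int) -> int:
    """A monolith is scaled as one unit, so every replica carries all components."""
    return replicas * sum(COMPONENT_MEMORY_MB.values())


def microservice_memory(replicas_per_service: Dict[str, int]) -> int:
    """Each microservice is scaled on its own, so only the busy one grows."""
    return sum(
        COMPONENT_MEMORY_MB[name] * count
        for name, count in replicas_per_service.items()
    )


# Assume only the catalog is under heavy load and needs 4 copies.
print(monolith_memory(4))  # 4000
print(microservice_memory({"catalog": 4, "cart": 1, "payments": 1}))  # 1900
```

Under these assumed numbers, the monolith needs 4000 MB to get four copies of the catalog, while the microservice layout needs 1900 MB because the cart and payments services stay at one copy each.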

Microservice architecture comes with its own set of challenges:

When the number of services becomes large, maintaining them all manually becomes very complex and requires specialized tools.

This architecture depends heavily on network performance, because the services communicate with each other over the network.

So, once you select an appropriate architecture and build your application, you are going to deploy it. But how do we deploy applications? This is where containers come into play.

Containers

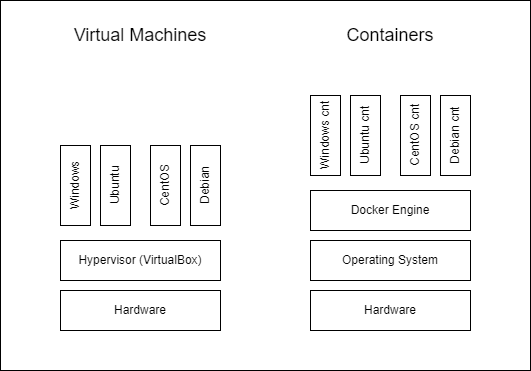

Containers are standalone, executable packages that bundle an application together with its dependencies and the operating system libraries and tools it needs. So, what makes them different from traditional virtual machines? Containers share the kernel of the host machine instead of booting one of their own, which makes them much faster to start and more lightweight than VMs.

Since containers package the application along with its dependencies and OS libraries, they also solve the "works on my machine" problem: the same image runs the same way on any host.
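One way to observe the shared kernel is to print the kernel release from inside and outside a container. The snippet below is a small sketch using only Python's standard library; the function name `kernel_release` is just a label chosen for this example.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.uname().release


print(kernel_release())
```

Assuming a Linux host with Docker installed, running this script on the host and again inside a container (for example one started from the official `python` image) should print the same release, because the container does not bring a kernel of its own. On Docker Desktop for macOS or Windows, containers run inside a Linux VM, so the value reported is that VM's kernel rather than the host's.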

Common container engines include Docker and Rocket (rkt). We will use Docker as the container engine in the following articles of this series.

Docker is the most widely used container engine. It lets you build and ship your application code as Docker images and then run it as Docker containers. Because images are tagged and shared through registries, this image-based approach also facilitates versioning and collaboration.

Container Orchestration Engines

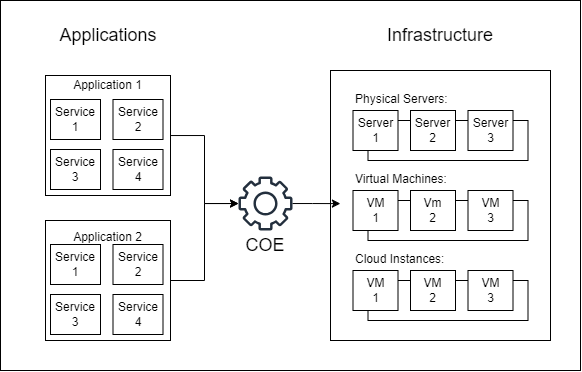

Once you have built and deployed your application using containers, you may want to scale it. When the number of containers grows very large, it is no longer feasible to manage them manually. Instead, you can use a Container Orchestration Engine (COE). A COE automates deploying, scaling and managing containerized applications across a group of servers.

Suppose you have a mix of servers: physical servers, VMs and cloud instances. You need to deploy microservice-based applications on them, with each microservice running inside a Docker container. Maintaining this infrastructure manually would be a hassle, but once a COE is installed, it takes over most of the work of managing these numerous microservices.
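A rough way to picture what an orchestrator automates is a loop that compares the desired number of containers with the number actually running and corrects the difference. The sketch below is a toy model in Python; the `reconcile` function and the service names are hypothetical and do not correspond to any real Kubernetes API.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> List[str]:
    """Return the actions needed to make `running` match `desired`."""
    actions = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        have = running.get(service, 0)
        if have < want:
            actions.append(f"start {want - have} x {service}")
        elif have > want:
            actions.append(f"stop {have - want} x {service}")
    return actions


desired = {"cart": 2, "catalog": 3}
# Assume two catalog containers crashed and an old service is still running.
running = {"cart": 2, "catalog": 1, "legacy": 1}
print(reconcile(desired, running))
# ['start 2 x catalog', 'stop 1 x legacy']
```

A real orchestrator runs this kind of comparison continuously and carries out the actions itself, which is what removes the manual work described above.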

Key features of a COE are:

Automated Deployment and Scaling: COEs automate the deployment of containerized applications, ensuring consistency across different environments. They also enable automatic scaling, allowing applications to adapt seamlessly to varying workloads.

Load Balancing: To optimize resource utilization and distribute traffic efficiently, COEs incorporate load balancing capabilities. This ensures that incoming requests are spread evenly across the containers serving an application.

Resource Management: Efficient resource utilization is a key aspect of COEs. They manage resources like CPU and memory, ensuring that containers receive the necessary computing power while avoiding resource contention.

Configuration Management: COEs help manage configuration settings for containerized applications. This ensures consistency and enables easy updates to configuration parameters.
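Two of the features above, resource management and load balancing, can be sketched in a few lines of Python. This is a simplified illustration under assumed rules (place a container on the node with the most free memory, hand out requests in round-robin order); real orchestrators use far more elaborate strategies, and the names `place`, `round_robin`, `node-a` and `web-1` are invented for this example.

```python
from itertools import cycle
from typing import Dict, List, Optional


def place(container_mb: int, free_mb: Dict[str, int]) -> Optional[str]:
    """Pick the node with the most free memory that can still fit the container."""
    candidates = [node for node, free in free_mb.items() if free >= container_mb]
    if not candidates:
        return None  # no node has enough room
    best = max(candidates, key=lambda node: free_mb[node])
    free_mb[best] -= container_mb  # reserve the memory on the chosen node
    return best


def round_robin(replicas: List[str], request_count: int) -> List[str]:
    """Assign requests to replicas in turn, one after another."""
    turn = cycle(replicas)
    return [next(turn) for _ in range(request_count)]


nodes = {"node-a": 1024, "node-b": 2048}
print(place(512, nodes))   # node-b
print(place(512, nodes))   # node-b
print(place(2000, nodes))  # None
print(round_robin(["web-1", "web-2"], 5))
# ['web-1', 'web-2', 'web-1', 'web-2', 'web-1']
```

The placement function tracks how much memory is left on each node so that no node is overcommitted, while the round-robin function shows the simplest way of sharing traffic between identical replicas.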

Prominent container orchestration engines include Kubernetes, Docker Swarm, and Apache Mesos. Kubernetes (or K8s), in particular, has emerged as the de facto standard due to its robust feature set and widespread adoption in the industry.

The next article is going to be about the architecture of Kubernetes.

Thanks for reading! ✨
