Kubernetes Gateway API

Karim El Jamali


So, we ended our previous post by discussing some of the downsides of the Load Balancer service in terms of security, manageability and increased costs. So this weekend, I set out to understand the Kubernetes Gateway API (not to be confused with an API Gateway), a consolidated ingress solution for getting traffic into your Kubernetes clusters.

It's important to note the terminology here. Traditionally, Kubernetes users have been familiar with Ingress, the widely used method for creating a single entry point to expose services within a cluster, primarily targeting HTTP/HTTPS workloads. However, there's a new player in town: the Gateway API.

While Ingress acts like a monolith, requiring modifications to a single object as more applications are exposed, the Gateway API takes a more versatile approach. It covers a broader spectrum of protocols, including HTTP, HTTPS, and gRPC, with TCP, UDP and TLS routes available in the experimental channel. What sets it apart is not just the protocol support but also its role-based design, which provides a more flexible and sophisticated way of managing ingress.
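The following minimal sketch of a GRPCRoute makes the protocol coverage concrete. Everything in it is hypothetical: the route name, the `grpc.acme.com` hostname, the `ticket.v1.TicketService/GetTicket` method and the `ticket-grpc` Service on port 50051. It assumes a Gateway named `mygateway` with an HTTP or HTTPS listener. It also assumes a Gateway API release (v1.1 or later) in which GRPCRoute is served as `v1`. Earlier releases expose it as `v1alpha2`, and support varies by implementation.

```yaml
# Hypothetical sketch: route one gRPC method to a backend Service.
apiVersion: gateway.networking.k8s.io/v1
kind: GRPCRoute
metadata:
  name: ticket-grpc            # hypothetical name
  namespace: default
spec:
  parentRefs:
  - name: mygateway            # assumed existing Gateway in the same namespace
  hostnames:
  - grpc.acme.com              # hypothetical hostname
  rules:
  - matches:
    - method:                  # match on the gRPC service/method rather than a URL path
        service: ticket.v1.TicketService
        method: GetTicket
    backendRefs:
    - name: ticket-grpc        # hypothetical Service exposing the gRPC server
      port: 50051
```

An HTTPRoute matches on paths and headers, whereas a GRPCRoute matches directly on gRPC service and method names. This spares you from encoding them as URL paths yourself.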

Role Based API

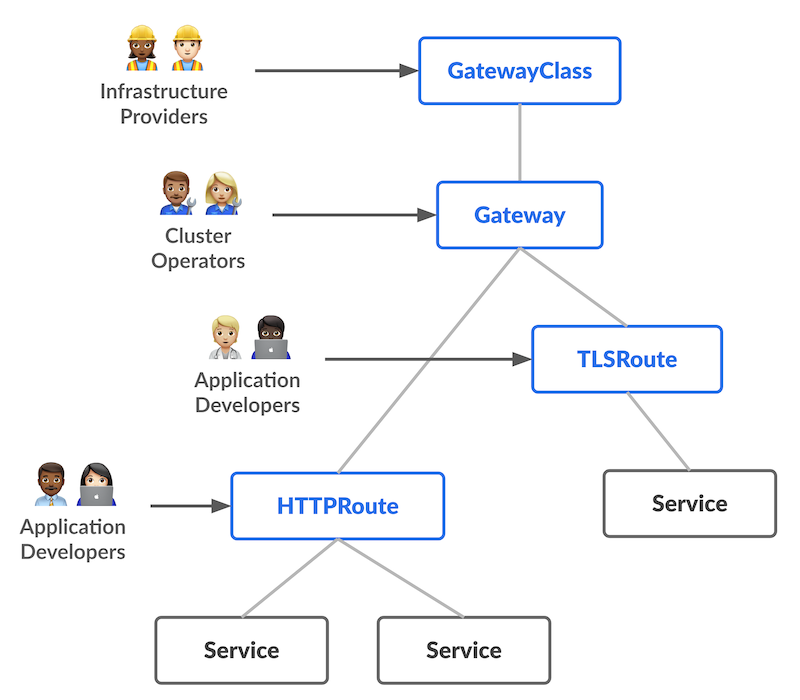

ACME Corp has several roles that manage its Kubernetes infrastructure and deploy the required applications and services on top of it.

Choosing the Provider: John decides which infrastructure providers to bring in and defines the high-level settings of each provider. For instance, John favors the NGINX solution, so he defines the GatewayClass object, a cluster-scoped resource tied to the provider (NGINX). At a high level, this is John saying "We will use NGINX as the platform of choice to handle ingress traffic." In some cases, multiple GatewayClasses (infrastructure providers) may be used simultaneously.

Configuring the Proxy: Karim handles the NGINX proxy configuration via the Gateway object. It is important to think of the proxy or load balancer instance as a resource shared across multiple teams. Some settings are therefore common and defined under the Gateway object, whereas others are configured under Routes (HTTPRoutes, TLSRoutes). Options defined under the Gateway are:

Specifying the class of Gateway it belongs to (the GatewayClass defined by John).

Specifying the hostname (acme.com), port, and protocol the proxy listens on, as well as TLS parameters.

Allowing particular routes to attach to the load balancer.
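The following sketch shows a Gateway that Karim might own, assuming TLS is terminated at the proxy. The Gateway name, the `infra` namespace and the `acme-tls` Secret (which would hold the certificate and key) are hypothetical. A wildcard hostname is used so that subdomains such as ticket.acme.com can attach. Note that `*.acme.com` does not match the bare `acme.com`.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: acme-gateway          # hypothetical name
  namespace: infra            # hypothetical namespace owned by the infrastructure team
spec:
  gatewayClassName: nginx     # the GatewayClass defined by John
  listeners:
  - name: https
    hostname: "*.acme.com"    # only Routes for acme.com subdomains can attach
    port: 443
    protocol: HTTPS
    tls:
      mode: Terminate         # terminate TLS at the proxy
      certificateRefs:
      - kind: Secret
        name: acme-tls        # hypothetical Secret containing the certificate and key
    allowedRoutes:
      namespaces:
        from: All             # let application teams attach Routes from their own namespaces
```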

Route Configuration: Chris has a ticketing application that he intends to publish. He would like to use the FQDN ticket.acme.com. As soon as requests for this hostname arrive, he would like them routed to the ClusterIP Service within Kubernetes, which in turn targets his application workloads. A deeper explanation of ClusterIP and how it works can be found here.
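A minimal HTTPRoute for Chris could look like the following. It assumes a Gateway named `acme-gateway` in an `infra` namespace whose listener allows Routes from Chris's namespace. The `ticketing` namespace, the Service name and port 8080 are hypothetical. Because the rule specifies no `matches`, the Gateway API default of a `/` path prefix applies, so all paths on ticket.acme.com are routed.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: ticketing             # hypothetical name
  namespace: ticketing        # hypothetical namespace owned by Chris
spec:
  parentRefs:
  - name: acme-gateway        # assumed Gateway managed by the infrastructure team
    namespace: infra
  hostnames:
  - ticket.acme.com           # match requests whose Host header is ticket.acme.com
  rules:
  - backendRefs:
    - name: ticketing         # hypothetical ClusterIP Service in front of the application Pods
      port: 8080
```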

Another advantage of the role-based API is a better RBAC implementation. Each user or team is granted access only to the objects it needs, which establishes better governance over publishing applications externally.
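For example, a namespaced Role could let Chris manage HTTPRoutes in his own namespace without touching Gateways or GatewayClasses. The namespace, the Role and RoleBinding names and the user `chris` are hypothetical. Karim would get a similar Role for `gateways`, and John a ClusterRole for the cluster-scoped `gatewayclasses`.

```yaml
# Hypothetical: allow Chris to manage HTTPRoutes only in the "ticketing" namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: route-editor
  namespace: ticketing
rules:
- apiGroups: ["gateway.networking.k8s.io"]
  resources: ["httproutes"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: chris-route-editor
  namespace: ticketing
subjects:
- kind: User
  name: chris                 # hypothetical user name
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: route-editor
  apiGroup: rbac.authorization.k8s.io
```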

The final advantage of the Gateway API we will touch upon is portability, i.e. the ability to switch from one vendor's implementation to another without rewriting your configuration (at least for features defined in the API itself). Ingress, on the other hand, depended heavily on vendor-specific annotations, which made it harder to switch from one implementation to another.
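To illustrate the difference, the sketch below expresses an HTTP-to-HTTPS redirect both ways. The Ingress version assumes the ingress-nginx controller and its `nginx.ingress.kubernetes.io/force-ssl-redirect` annotation, which other Ingress controllers do not necessarily understand. The HTTPRoute version uses the `RequestRedirect` filter, which is part of the Gateway API itself. The `app1` names and the `http` listener on `mygateway` are assumptions.

```yaml
# Ingress: the redirect relies on an ingress-nginx-specific annotation.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app1
  annotations:
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
spec:
  ingressClassName: nginx
  rules:
  - host: app1.acme.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app1
            port:
              number: 80
---
# Gateway API: the redirect is a standard HTTPRoute filter.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: app1-redirect
spec:
  parentRefs:
  - name: mygateway
    sectionName: http        # attach only to the plain-HTTP listener
  hostnames:
  - app1.acme.com
  rules:
  - filters:                 # a redirect rule needs no backendRefs
    - type: RequestRedirect
      requestRedirect:
        scheme: https
        statusCode: 301
```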

Source: https://gateway-api.sigs.k8s.io/

But how does it Work?

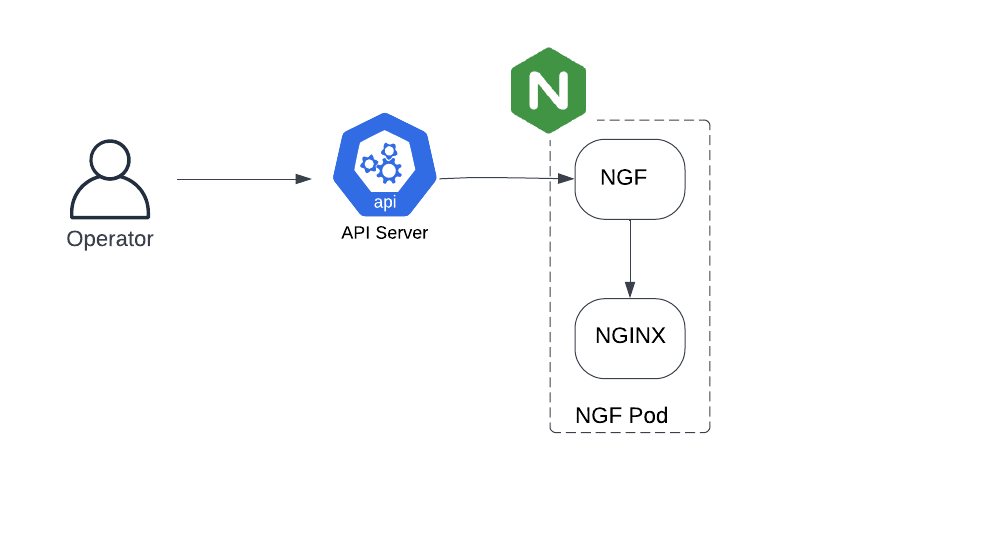

In this section, we will use NGINX's implementation of the Gateway API, called NGINX Gateway Fabric, as an example, but the logic largely holds true for other vendor implementations. A few components make this work:

Custom Resource Definitions (CRDs): Kubernetes ships with a set of built-in objects but can be extended with Custom Resource Definitions (CRDs). In a nutshell, the Gateway API is delivered as a set of CRDs. These extend Kubernetes so that it can manage the end-to-end lifecycle of third-party objects, which in our case represent the NGINX load balancers.

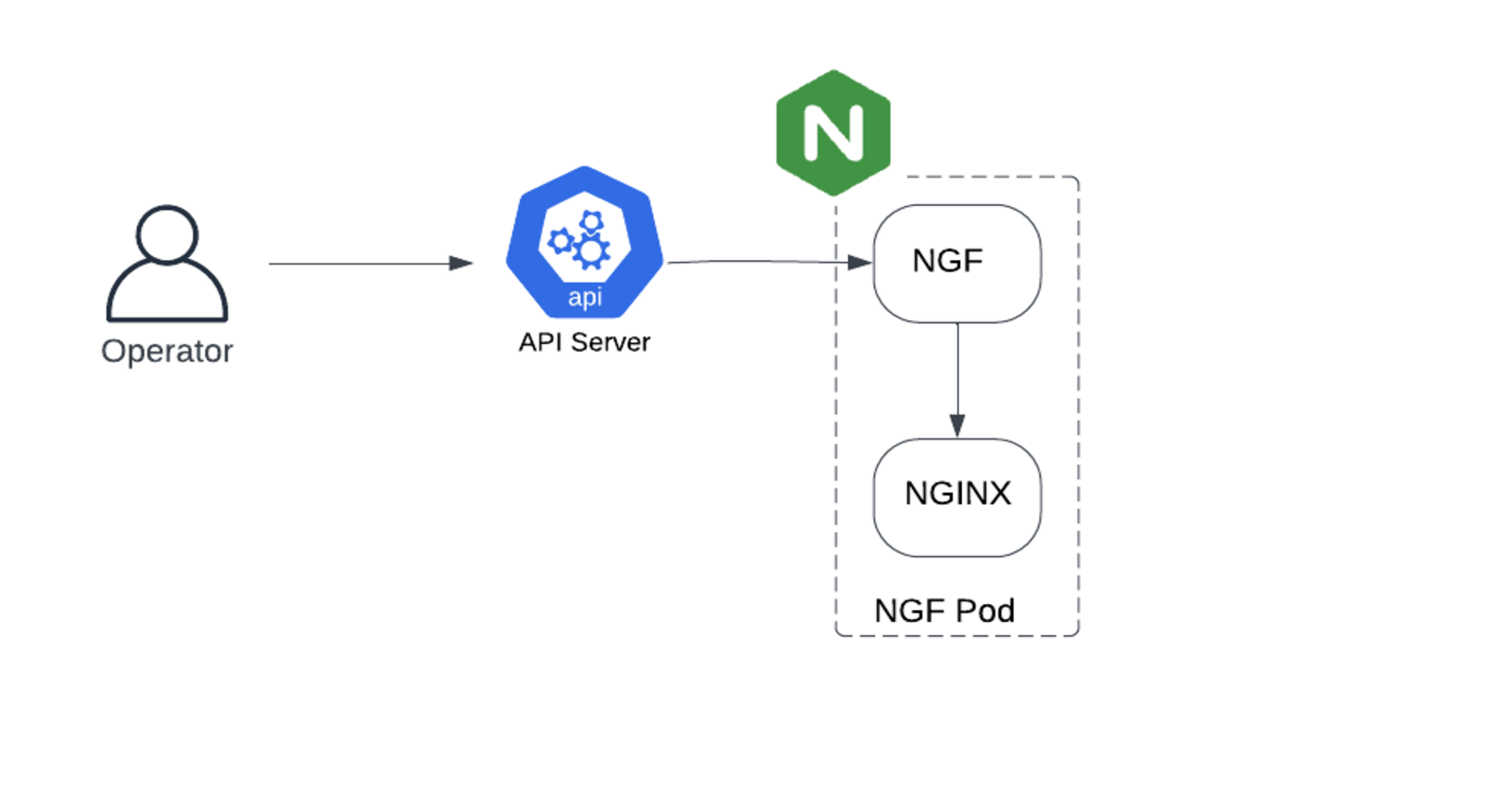

We also need to install the vendor implementation, i.e. the NGINX Gateway Fabric Pod and all its associated objects (Deployments, ReplicaSets, Service Account, ClusterRoleBindings, etc.). A key point is that the NGINX Gateway Fabric Pod (NGF Pod) consists of two containers: NGF and NGINX. You can think of NGF as a component that watches the API Server for configuration changes to the three main Gateway API objects: GatewayClass, Gateway and Routes (where Routes encompasses HTTPRoutes and TLSRoutes). NGF then programs the NGINX container accordingly. It therefore acts as a translator between the API Server and the native NGINX configuration. For more details on installation, you can refer to the NGINX Gateway Fabric docs here.

Scenarios

Simple Routing Configuration

Traffic Flow

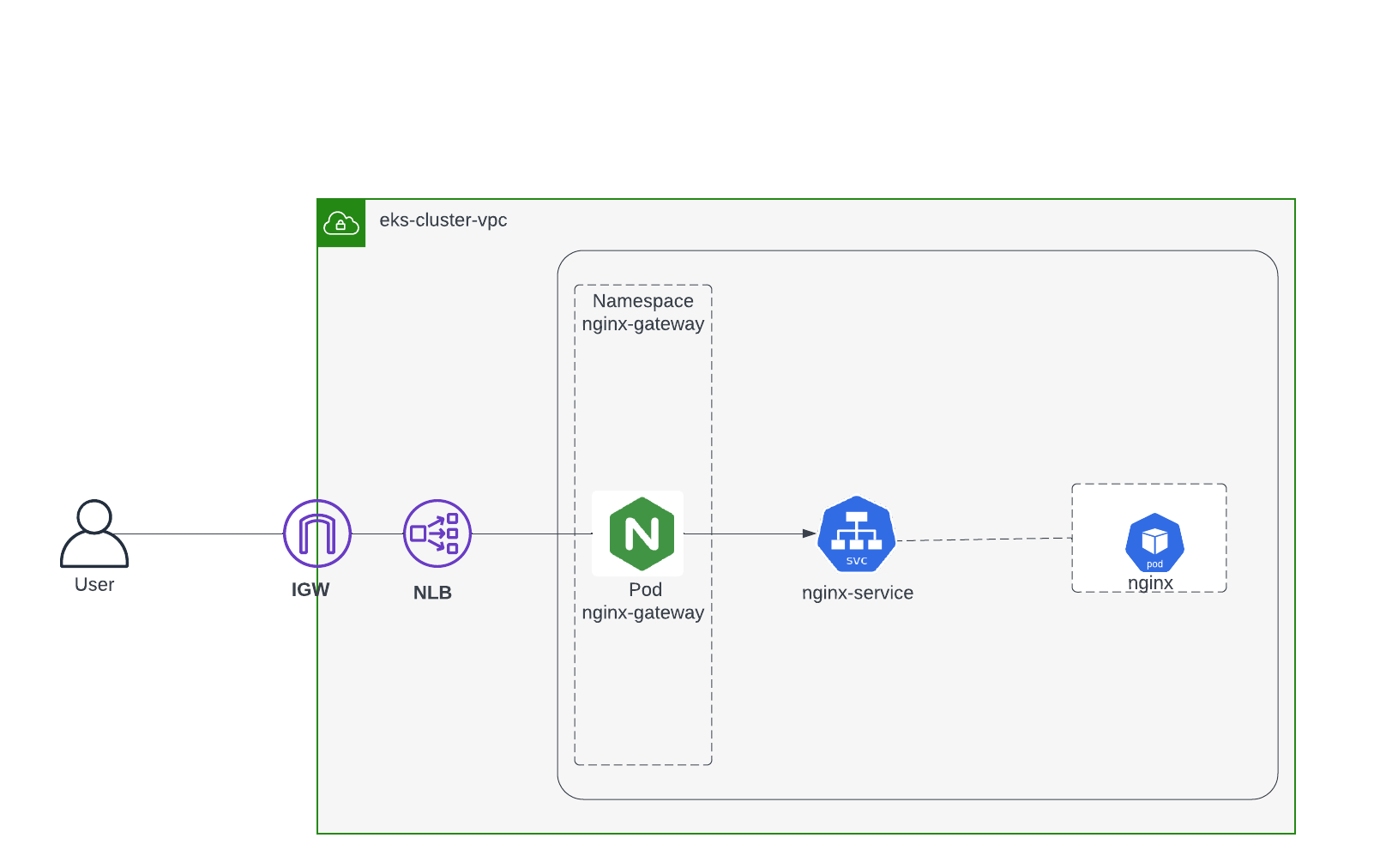

This is the simplest of all scenarios:

The client resolves the DNS name of the NLB to an IP address.

The client sends traffic to the NLB's public address, which the Internet Gateway (IGW) translates (DNAT) to the NLB's private address.

Note that this NLB was provisioned by a Kubernetes Service of type LoadBalancer in AWS, which we use to provide public access to the NGINX Gateway Fabric Pod. More details on the NLB service can be found here.

NGINX is configured via the HTTPRoute to send traffic for any path to the nginx Service backend, which in turn forwards it to the NGINX Pod. It is worth noting that the nginx Service and Pod simply run NGINX web servers and could be any other workload.
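For reference, a Service of type LoadBalancer that fronts the NGF Pod might look like the sketch below. NGF's install manifests or Helm chart typically create such a Service for you, so treat this as illustrative. The name, namespace and selector labels are assumptions, with the labels mirroring those on the GatewayClass shown later. The `service.beta.kubernetes.io/aws-load-balancer-type: nlb` annotation requests an NLB from the in-tree AWS cloud provider. Clusters running the AWS Load Balancer Controller use different annotations.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-gateway               # assumed name; your NGF install may use another
  namespace: nginx-gateway          # assumed namespace
  annotations:
    # Ask the AWS cloud provider for a Network Load Balancer instead of a Classic LB.
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
spec:
  type: LoadBalancer
  selector:                         # assumed labels on the NGF Pod
    app.kubernetes.io/name: nginx-gateway
    app.kubernetes.io/instance: nginx-gateway
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
```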

Configuration Snippets

It is important to understand that the Route object references the Gateway, and the Gateway references the GatewayClass. Comments are included inline in each output.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  labels:
    app.kubernetes.io/instance: nginx-gateway
    app.kubernetes.io/name: nginx-gateway
    app.kubernetes.io/version: 1.1.0
  name: nginx
spec:
  controllerName: gateway.nginx.org/nginx-gateway-controller # the domain is fixed by the vendor; the path can vary, e.g. if you want to run multiple implementations
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: mygateway
  namespace: default
spec:
  gatewayClassName: nginx # ties the Gateway to the class, i.e. this is an NGINX Gateway (similar to IngressClass)
  listeners:
  - allowedRoutes: # by default, only Routes from the same namespace may use this Gateway,
      namespaces:  # which in our case is the Gateway's namespace (default)
        from: Same
    name: http # listening on TCP port 80 for HTTP; can also be locked down to particular hostnames
    port: 80
    protocol: HTTP # the protocol also filters Routes, e.g. TLSRoutes will not attach here
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: nginx-default
  namespace: default
spec:
  parentRefs: # tying the Route to the Gateway
  - group: gateway.networking.k8s.io
    kind: Gateway
    name: mygateway
  rules:
  - backendRefs: # backend Service to send the traffic to
    - group: ""
      kind: Service
      name: nginx
      port: 80
      weight: 1
    matches: # any traffic
    - path:
        type: PathPrefix
        value: /
```

In a nutshell, the objects tie together as follows:

The Route (HTTPRoute/TLSRoute) ties to the Gateway.

The Gateway has an allowedRoutes field that acts as a filter on which Routes to accept. By default, it accepts Routes within the same namespace as the Gateway object, but it can be configured to allow Routes from other namespaces. The Gateway, in turn, ties to the GatewayClass. Gateways also use listeners as another way of filtering Routes. For instance, if a Gateway listens for HTTP traffic, a TLSRoute will not attach to it. Similarly, if the Gateway listens on a particular hostname (*.acme.com), it will ignore any Routes outside the ACME domain. These filtering capabilities matter because they set boundaries on publishing applications, agreed upon between developers and infrastructure owners.
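Both kinds of filtering can be combined in a single listener. In this sketch, the Gateway and listener names are hypothetical. The hostname restricts attachment to Routes for `*.acme.com`, `kinds` limits it to HTTPRoutes, and `from: All` widens the namespace filter beyond the `Same` default.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: acme-gateway          # hypothetical name
  namespace: default
spec:
  gatewayClassName: nginx
  listeners:
  - name: http-acme           # hypothetical listener name
    port: 80
    protocol: HTTP
    hostname: "*.acme.com"    # Routes whose hostnames fall outside *.acme.com will not attach
    allowedRoutes:
      kinds:
      - kind: HTTPRoute       # accept HTTPRoutes only
      namespaces:
        from: All             # accept Routes from any namespace (Same is the default)
```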

The GatewayClass ties to the controller name. You might vary the controller name if you need dual implementations of NGINX Gateway Fabric, for example one instance handling production workloads and the other handling development workloads.
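The following sketch defines two GatewayClasses, one per NGF instance. The `-prod` and `-dev` controller names are hypothetical. Each NGF deployment would have to be configured to use the matching name, and how this is set depends on the NGF version and install method, so check the NGF documentation. Gateways then select an instance through `gatewayClassName`.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: nginx-prod
spec:
  controllerName: gateway.nginx.org/nginx-gateway-controller-prod   # hypothetical controller name
---
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: nginx-dev
spec:
  controllerName: gateway.nginx.org/nginx-gateway-controller-dev    # hypothetical controller name
```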

Leveraging Paths

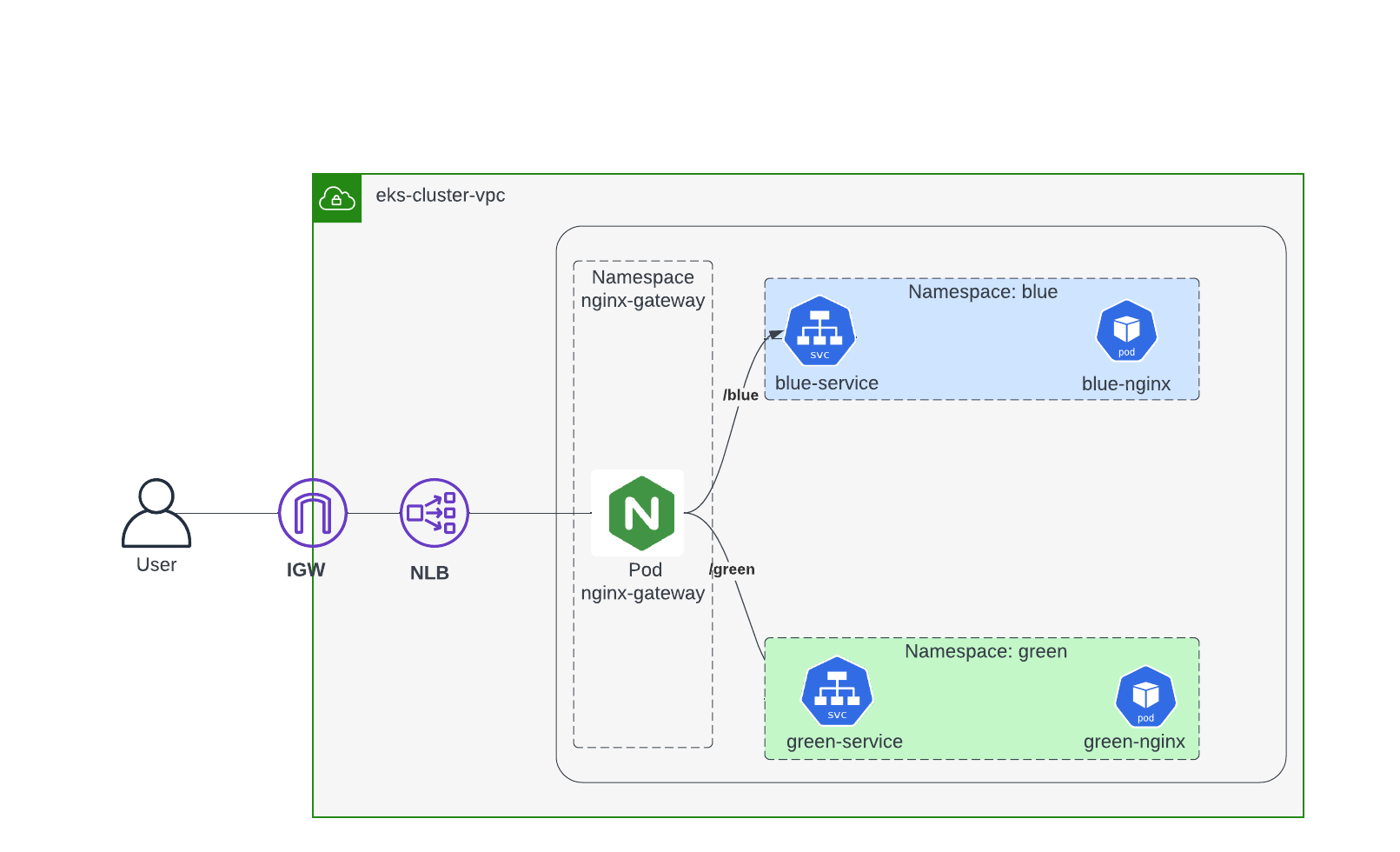

Traffic Flow

The flow follows the same logic as the simple routing configuration, so we only note the differences. NGINX is configured via the HTTPRoute as follows:

When traffic matches /blue, send it to the blue nginx deployment.

When traffic matches /green, send it to the green nginx deployment.

This example can be taken further by matching on the HTTP Host header and then, within each host, on paths. The objective is to publish many applications behind the NGINX proxy in an enterprise deployment. For example:

App1: app1.acme.com, with paths such as app1.acme.com/internal and app1.acme.com/customer

App2: app2.acme.com, with paths such as app2.acme.com/internal and app2.acme.com/customer
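The App1 example could be expressed as a single HTTPRoute that matches on the hostname first and then on the path. The Service names `app1-internal` and `app1-customer` are hypothetical, as is the use of `mygateway` as the parent. App2 would be a second HTTPRoute with `app2.acme.com` as its hostname.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: app1
  namespace: default
spec:
  parentRefs:
  - name: mygateway
  hostnames:
  - app1.acme.com             # first match on the Host header...
  rules:
  - matches:                  # ...then on the path within that host
    - path:
        type: PathPrefix
        value: /internal
    backendRefs:
    - name: app1-internal     # hypothetical Service
      port: 80
  - matches:
    - path:
        type: PathPrefix
        value: /customer
    backendRefs:
    - name: app1-customer     # hypothetical Service
      port: 80
```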

Configuration Snippets

The difference in this scenario is that the HTTPRoute objects don't reside in the same namespace as the Gateway object. We therefore need to configure the Gateway to allow Routes in the blue and green namespaces to attach to it. This is done with the allowedRoutes block under the Gateway object, which selects namespaces carrying a particular label (in our example, access-gateway: "true"). Both the blue and green namespaces must carry that label. Together, these steps ensure that the Gateway object in the default namespace accepts HTTPRoutes from the blue and green namespaces.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: mygateway
  namespace: default # the Gateway object resides in the default namespace
spec:
  gatewayClassName: nginx
  listeners:
  # In this scenario, the two Services we expose live in other namespaces, so the
  # HTTPRoutes in those namespaces must be allowed to attach to the Gateway;
  # otherwise they would be left floating. This is done via labels on those
  # namespaces that are accepted by the Gateway object.
  - allowedRoutes:
      namespaces:
        from: Selector
        selector:
          matchLabels:
            access-gateway: "true"
    name: http
    port: 80
    protocol: HTTP
```

```text
NAME    STATUS   AGE    LABELS
blue    Active   2d1h   access-gateway=true,kubernetes.io/metadata.name=blue
green   Active   2d1h   access-gateway=true,kubernetes.io/metadata.name=green
```
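The label can be added with `kubectl label namespace blue access-gateway=true` (and likewise for green). It can also be declared in the Namespace manifests, as in this sketch:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: blue
  labels:
    access-gateway: "true"   # matched by the Gateway's allowedRoutes selector
---
apiVersion: v1
kind: Namespace
metadata:
  name: green
  labels:
    access-gateway: "true"   # matched by the Gateway's allowedRoutes selector
```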

In this case, the route differs slightly because we need to differentiate the /blue and /green paths. The green route is omitted for brevity.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: blue-nginx
  namespace: blue
spec:
  parentRefs:
  - group: gateway.networking.k8s.io
    kind: Gateway
    name: mygateway
    namespace: default # the Gateway lives in another namespace, so it is referenced explicitly
  rules:
  - backendRefs:
    - group: ""
      kind: Service
      name: blue-nginx-deployment
      port: 80
      weight: 1
    matches:
    - path:
        type: PathPrefix
        value: /blue
```

To tell the NGINX web server deployments apart, here is a simple ConfigMap that can be attached to the NGINX deployment. With it, the NGINX instances in different namespaces are easily distinguishable in your browser.

```yaml
apiVersion: v1
data:
  nginx.conf: |
    server {
      listen 80;
      location / {
        default_type text/html;
        set $nginx_ip $server_addr;
        set $client_ip $remote_addr;
        return 200 '<html>
          <head>
            <style>
              body {
                background-color: blue;
                color: white;
                font-size: 72px;
                display: flex;
                align-items: center;
                justify-content: center;
                height: 100vh;
                margin: 0;
              }
            </style>
          </head>
          <body>
            <div style="text-align: center;">
              <p>Server Name: Blue NGINX</p>
              <p>Server IP Address: $nginx_ip</p>
              <p>Client IP Address: $client_ip</p>
            </div>
          </body>
        </html>';
      }
    }
kind: ConfigMap
metadata:
  name: blue-nginx-config
  namespace: blue
```
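One way to attach the ConfigMap is to mount it into the Deployment, as in the sketch below. The Deployment name, labels, replica count and image tag are assumptions. The Service name matches the `blue-nginx-deployment` backend referenced by the blue HTTPRoute. The ConfigMap contains only a `server` block, so it is mounted over `/etc/nginx/conf.d/default.conf`, which the official image's `nginx.conf` includes from its http context, rather than over `/etc/nginx/nginx.conf`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue-nginx-deployment     # assumed Deployment name
  namespace: blue
spec:
  replicas: 2                     # hypothetical replica count
  selector:
    matchLabels:
      app: blue-nginx             # hypothetical label
  template:
    metadata:
      labels:
        app: blue-nginx
    spec:
      containers:
      - name: nginx
        image: nginx:stable       # assumption: any recent official nginx image
        ports:
        - containerPort: 80
        volumeMounts:
        - name: config
          # The ConfigMap holds a server{} block, so it replaces the default
          # server config in conf.d rather than the top-level nginx.conf.
          mountPath: /etc/nginx/conf.d/default.conf
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: blue-nginx-config
---
apiVersion: v1
kind: Service
metadata:
  name: blue-nginx-deployment     # the backend name used by the blue HTTPRoute
  namespace: blue
spec:
  selector:
    app: blue-nginx
  ports:
  - port: 80
    targetPort: 80
```

Files mounted with `subPath` are not refreshed when the ConfigMap changes, so the Pods need a restart to pick up edits.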

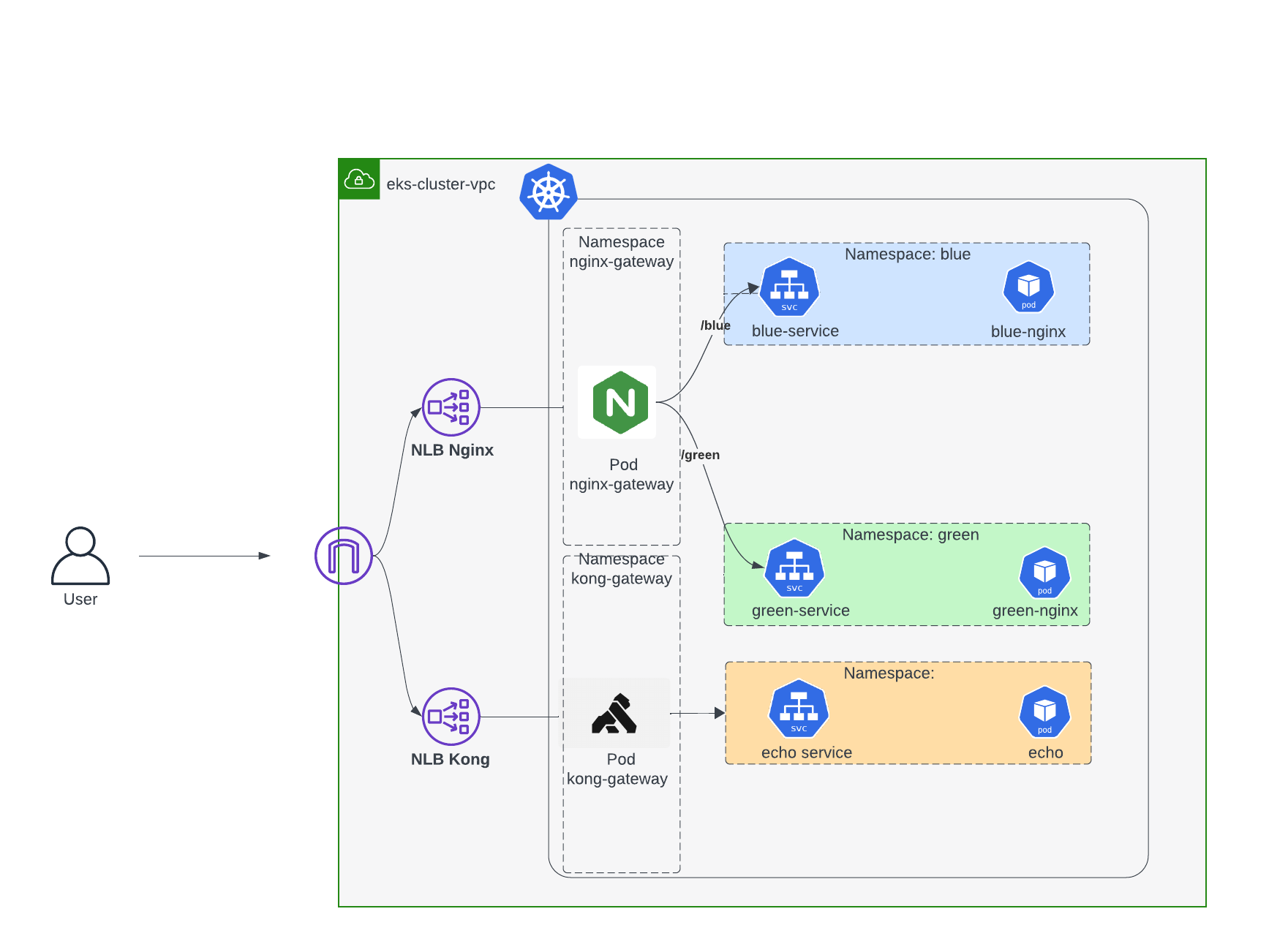

Dual Ingress Implementation

In this setup, we extend the model by adding a second Gateway API implementation, this time from Kong. We now have two ingress points to our Kubernetes cluster, one through NGINX Gateway Fabric and the other through Kong. Some applications are published via NGINX and others via Kong. The NGINX setup is left intact in this scenario.

Traffic Flow

The echo service is accessible via Kong, which in turn has its own NLB for access from outside the Kubernetes cluster.

Configuration Snippets

We need to create the GatewayClass and Gateway for Kong, similar to what we did for NGINX Gateway Fabric. Note that the HTTPRoute references the Kong Gateway.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: kong
spec:
  controllerName: konghq.com/kic-gateway-controller # again a domain name specified by the vendor
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: kong
  namespace: default
spec:
  gatewayClassName: kong
  listeners:
  - allowedRoutes: # by default we only allow Routes from the same namespace (default)
      namespaces:
        from: Same
    name: proxy
    port: 80
    protocol: HTTP
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  annotations:
    konghq.com/strip-path: "true" # Kong-specific: strip the matched /echo prefix before proxying
  name: echo
  namespace: default
spec:
  parentRefs: # referencing the Kong Gateway
  - group: gateway.networking.k8s.io
    kind: Gateway
    name: kong
  rules:
  - backendRefs:
    - group: ""
      kind: Service
      name: echo
      port: 1027 # the port the echo service is running on
      weight: 1
    matches:
    - path:
        type: PathPrefix
        value: /echo
```

References:

NGINX Gateway Fabric documentation: https://docs.nginx.com/nginx-gateway-fabric/

Kong Documentation: https://docs.konghq.com/kubernetes-ingress-controller/3.0.x/gateway-api/


Written by

Karim El Jamali


Self-directed and driven technology professional with 15+ years of experience in designing & implementing IP networks. I had roles in Product Management, Solutions Engineering, Technical Account Management, and Technical Enablement.