Understanding Tensors in TensorFlow: The Building Blocks of Higher-Dimensional Data

Gyanendra Vardhan

TensorFlow, as the name suggests, revolves around the concept of tensors. Tensors serve as the fundamental building blocks upon which TensorFlow, one of the most powerful and widely used deep learning frameworks, is built. But what exactly is a tensor, and how does it relate to the computations in TensorFlow? Let's explore the core concept of tensors: their definition and their properties, with some examples.

What is a Tensor?

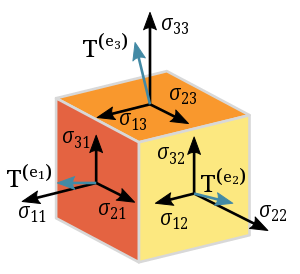

To begin with, let's demystify the term "tensor." At first glance, it might seem like a complex concept, but in reality, it's quite simple. In essence, a tensor is a generalization of a vector to higher dimensions. If you're familiar with linear algebra or basic vector calculus, you have likely encountered vectors before. Think of a vector as a data point whose number of coordinates depends on the space it lives in. For instance, in a two-dimensional space, a vector consists of an (x, y) pair, while in a three-dimensional space, it has three components (x, y, z). Tensors extend this idea further, allowing for an arbitrary number of dimensions.

According to the official TensorFlow documentation, a tensor is defined as follows:

A tensor is a generalization of vectors and matrices to potentially higher dimensions. Internally, TensorFlow represents tensors as n-dimensional arrays of base datatypes.

Let's break this down further. Tensors can hold numerical data, strings, or other datatypes, and they can have varying shapes and sizes. They serve as the primary objects for storing and manipulating data within TensorFlow.

Creating Tensors

Now that we have a basic understanding of tensors, let's see how we can create them in TensorFlow. Below are some examples of creating tensors using TensorFlow's API:

import tensorflow as tf

# Creating a string tensor

string_tensor = tf.Variable("Hello, TensorFlow!", dtype=tf.string)

# Creating a number tensor

number_tensor = tf.Variable(123, dtype=tf.int16)

# Creating a floating-point tensor

float_tensor = tf.Variable(3.14, dtype=tf.float32)

In the above code snippets, we use TensorFlow's tf.Variable to create tensors of different datatypes. The datatype is passed with the dtype keyword, because the second positional argument of tf.Variable is trainable rather than dtype. Each tensor has an associated datatype (tf.string, tf.int16, tf.float32) and an initial value. These tensors represent scalar values since they contain only one element.
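
A tf.Variable is a mutable container whose value is a tensor, whereas tf.constant creates an immutable tensor directly. The following is a minimal sketch of that difference, assuming TensorFlow 2.x with eager execution; the names counter and fixed are illustrative and do not appear in the examples above.

```python
import tensorflow as tf

# A variable holds a tensor whose value can be updated in place.
counter = tf.Variable(123, dtype=tf.int16)
counter.assign(124)      # overwrite the stored value
counter.assign_add(1)    # increment the stored value by 1

# A constant is an immutable tensor created directly from a value.
fixed = tf.constant(3.14, dtype=tf.float32)

print(counter.dtype.name, counter.numpy())   # int16 125
print(fixed.dtype.name, fixed.shape)         # float32 ()
```

Variables are typically used for values that change during training, such as model weights, while constants suit fixed inputs.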

Rank of Tensors

The rank, also known as the degree, of a tensor refers to the number of dimensions it possesses. Let's explore the concept of rank with some examples:

Rank 0 Tensor (Scalar): This tensor represents a single value without any dimensions.

Rank 1 Tensor (Vector): It consists of a one-dimensional array of values.

Rank 2 Tensor (Matrix): This tensor contains a two-dimensional array of values.

Higher Rank Tensors: Tensors with more than two dimensions follow the same pattern.

Here's how we determine the rank of a tensor using TensorFlow's tf.rank function:

# Determining the rank of a tensor

print("Rank of string_tensor:", tf.rank(string_tensor).numpy())

print("Rank of number_tensor:", tf.rank(number_tensor).numpy())

print("Rank of float_tensor:", tf.rank(float_tensor).numpy())

In the above code, tf.rank(tensor) returns the rank as a scalar integer tensor, and the .numpy() method converts that result to a NumPy value for printing. All three tensors created earlier are scalars, so each line prints 0.
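
To see ranks other than 0, the same check can be applied to tensors with more dimensions. This is a small sketch assuming TensorFlow 2.x; the tensors scalar, vector, matrix, and cube are made up for illustration.

```python
import tensorflow as tf

scalar = tf.constant(7)                   # rank 0
vector = tf.constant([1.0, 2.0, 3.0])     # rank 1
matrix = tf.constant([[1, 2], [3, 4]])    # rank 2
cube = tf.zeros([2, 3, 4])                # rank 3

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # tf.rank returns a tensor; len(t.shape) gives the same number as a Python int.
    print(name, tf.rank(t).numpy(), len(t.shape))
```

Each line prints the tensor's name followed by its rank twice, once from tf.rank and once from the length of the shape.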

Shape of Tensors

At its core, a tensor is a multidimensional array. The shape of a tensor describes the number of elements along each dimension, so it defines the structure of that array. The number of entries in the shape equals the tensor's rank. Let's illustrate this concept with examples:

For a rank 0 tensor (scalar), the shape is empty since it has no dimensions.

For a rank 1 tensor (vector), the shape corresponds to the length of the array.

For a rank 2 tensor (matrix), the shape represents the number of rows and columns.

Let's see this with an example:

import tensorflow as tf

# Tensor with Rank 0 (Scalar)

tensor_rank_0 = tf.constant(42)

print("Tensor with Rank 0 (Scalar):")

print("Tensor:", tensor_rank_0)

print("Shape:", tensor_rank_0.shape)

print("Value:", tensor_rank_0.numpy())

print()

# Tensor with Rank 1 (Vector)

tensor_rank_1 = tf.constant([1, 2, 3, 4, 5])

print("Tensor with Rank 1 (Vector):")

print("Tensor:", tensor_rank_1)

print("Shape:", tensor_rank_1.shape)

print("Values:", tensor_rank_1.numpy())

print()

# Tensor with Rank 2 (Matrix)

tensor_rank_2 = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

print("Tensor with Rank 2 (Matrix):")

print("Tensor:", tensor_rank_2)

print("Shape:", tensor_rank_2.shape)

print("Values:")

print(tensor_rank_2.numpy())

Output

Tensor with Rank 0 (Scalar):

Tensor: tf.Tensor(42, shape=(), dtype=int32)

Shape: ()

Value: 42

Tensor with Rank 1 (Vector):

Tensor: tf.Tensor([1 2 3 4 5], shape=(5,), dtype=int32)

Shape: (5,)

Values: [1 2 3 4 5]

Tensor with Rank 2 (Matrix):

Tensor: tf.Tensor(

[[1 2 3]

[4 5 6]

[7 8 9]], shape=(3, 3), dtype=int32)

Shape: (3, 3)

Values:

[[1 2 3]

[4 5 6]

[7 8 9]]

In these examples:

tensor_rank_0 is a scalar tensor with rank 0 and no dimensions.

tensor_rank_1 is a vector tensor with rank 1 and a shape of (5,).

tensor_rank_2 is a matrix tensor with rank 2 and a shape of (3, 3).
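
The same pattern continues beyond matrices. The sketch below, which assumes TensorFlow 2.x, shows how shape, rank, and total element count relate for a rank 3 tensor; the tensor batch is a hypothetical example, not part of the code above.

```python
import tensorflow as tf

# Hypothetical rank 3 tensor: 2 blocks, each with 3 rows and 4 columns.
batch = tf.zeros([2, 3, 4])

print(batch.shape)              # (2, 3, 4)
print(len(batch.shape))         # 3, the rank
print(batch.shape[0])           # 2, size of the first dimension
print(batch.shape[-1])          # 4, size of the last dimension
print(tf.size(batch).numpy())   # 24, the product 2 * 3 * 4
```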

Tensor Manipulation

Tensor manipulation forms the backbone of many TensorFlow operations, enabling us to reshape, transpose, concatenate, and manipulate tensors in various ways. Understanding tensor manipulation techniques is crucial for data preprocessing, model building, and optimization. Let's delve into some key tensor manipulation operations:

Reshaping Tensors

Reshaping changes the dimensions of a tensor without changing its data: the elements keep their row-major order, and the new shape must contain the same total number of elements as the old one. Whether it's converting between 1D, 2D, or higher-dimensional arrays, reshaping is a fundamental operation in data preprocessing and model preparation.

import tensorflow as tf

# Create a tensor

tensor = tf.constant([[1, 2, 3], [4, 5, 6]])

# Reshape the tensor

reshaped_tensor = tf.reshape(tensor, [3, 2])

print("Original Tensor:")

print(tensor.numpy())

print("Reshaped Tensor:")

print(reshaped_tensor.numpy())

Output

Original Tensor:

[[1 2 3]

[4 5 6]]

Reshaped Tensor:

[[1 2]

[3 4]

[5 6]]
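
tf.reshape also accepts -1 for one dimension, in which case TensorFlow infers that size from the element count. The following sketch assumes TensorFlow 2.x with eager execution; the names flat, column, and cube are illustrative.

```python
import tensorflow as tf

tensor = tf.constant([[1, 2, 3], [4, 5, 6]])   # shape (2, 3), 6 elements

flat = tf.reshape(tensor, [-1])        # size inferred: shape (6,)
column = tf.reshape(tensor, [-1, 1])   # size inferred: shape (6, 1)
cube = tf.reshape(tensor, [1, 2, 3])   # add a leading dimension of size 1

print(flat.numpy())    # [1 2 3 4 5 6]
print(column.shape)    # (6, 1)
print(cube.shape)      # (1, 2, 3)

# A target shape with a different element count is rejected.
try:
    tf.reshape(tensor, [4, 2])         # asks for 8 elements, only 6 exist
except (tf.errors.InvalidArgumentError, ValueError) as err:
    print("reshape failed:", type(err).__name__)
```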

Slicing Tensors

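Slicing operations allow us to extract specific subsets of data from tensors along one or more dimensions. TensorFlow uses Python's slice syntax: for each dimension we give start and end indices, where the start is included, the end is excluded, and an omitted index means "from the beginning" or "to the end". In the example below, tensor[:, 1:] keeps every row but only the columns from index 1 onward.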

# Slice the tensor

sliced_tensor = tensor[:, 1:]

print("Original Tensor:")

print(tensor.numpy())

print("Sliced Tensor:")

print(sliced_tensor.numpy())

Output

Original Tensor:

[[1 2 3]

[4 5 6]]

Sliced Tensor:

[[2 3]

[5 6]]
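
A few more indexing patterns follow the same Python conventions. This is a brief sketch assuming TensorFlow 2.x; the variable names are illustrative.

```python
import tensorflow as tf

tensor = tf.constant([[1, 2, 3], [4, 5, 6]])

first_row = tensor[0]          # a single index drops that dimension: shape (3,)
last_column = tensor[:, -1]    # negative indices count from the end: shape (2,)
every_other = tensor[:, ::2]   # start:stop:step with a step of 2: shape (2, 2)
single = tensor[1, 2]          # one index per dimension gives a scalar

print(first_row.numpy())     # [1 2 3]
print(last_column.numpy())   # [3 6]
print(every_other.numpy())   # rows [1 3] and [4 6]
print(single.numpy())        # 6
```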

Concatenating Tensors

Concatenating tensors involves combining multiple tensors along an existing dimension, selected with the axis argument; the tensors must have matching sizes in every other dimension. This operation is useful for merging datasets, assembling model inputs, and creating batches of data.

# Create tensors for concatenation

tensor_a = tf.constant([[1, 2], [3, 4]])

tensor_b = tf.constant([[5, 6], [7, 8]])

# Concatenate tensors along axis 0

concatenated_tensor = tf.concat([tensor_a, tensor_b], axis=0)

print("Tensor A:")

print(tensor_a.numpy())

print("Tensor B:")

print(tensor_b.numpy())

print("Concatenated Tensor:")

print(concatenated_tensor.numpy())

Output

Tensor A:

[[1 2]

[3 4]]

Tensor B:

[[5 6]

[7 8]]

Concatenated Tensor:

[[1 2]

[3 4]

[5 6]

[7 8]]
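
Changing the axis changes how the pieces are joined, and tf.stack offers a related operation that creates a new dimension instead of extending an existing one. The sketch below assumes TensorFlow 2.x; side_by_side and stacked are illustrative names.

```python
import tensorflow as tf

tensor_a = tf.constant([[1, 2], [3, 4]])
tensor_b = tf.constant([[5, 6], [7, 8]])

# axis=1 joins the tensors column-wise, so each row gets longer.
side_by_side = tf.concat([tensor_a, tensor_b], axis=1)

# tf.stack adds a new leading dimension and keeps the inputs separate.
stacked = tf.stack([tensor_a, tensor_b], axis=0)

print(side_by_side.numpy())   # rows [1 2 5 6] and [3 4 7 8]
print(side_by_side.shape)     # (2, 4)
print(stacked.shape)          # (2, 2, 2)
```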

Broadcasting

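Broadcasting is a powerful technique that enables element-wise operations between tensors of different shapes. TensorFlow follows NumPy-style broadcasting: shapes are compared starting from the last dimension, and two dimensions are compatible when they are equal or when one of them is 1. The smaller tensor is treated as if it were repeated along the missing or size-1 dimensions, without materializing the expanded copy in memory, which simplifies mathematical operations and keeps them efficient.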

# Perform broadcasting operation

tensor_a = tf.constant([[1, 2], [3, 4]])

tensor_b = tf.constant([5, 6])

result = tensor_a + tensor_b

print("Tensor A:")

print(tensor_a.numpy())

print("Tensor B:")

print(tensor_b.numpy())

print("Result after Broadcasting:")

print(result.numpy())

Output

Tensor A:

[[1 2]

[3 4]]

Tensor B:

[5 6]

Result after Broadcasting:

[[ 6 8]

[ 8 10]]
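
The shape of a broadcast result can be worked out by comparing the two shapes from the last dimension backwards: sizes must be equal, or one of them must be 1, and a missing dimension counts as 1. The following plain-Python sketch illustrates that rule; broadcast_shape is a hypothetical helper written for this article, not a TensorFlow function.

```python
from itertools import zip_longest

def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    result = []
    # Walk both shapes from the last dimension backwards; a missing one counts as 1.
    for a, b in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if a == b or b == 1:
            result.append(a)
        elif a == 1:
            result.append(b)
        else:
            raise ValueError(f"cannot broadcast {shape_a} with {shape_b}")
    return tuple(reversed(result))

print(broadcast_shape((2, 2), (2,)))     # (2, 2), the shapes used in the example above
print(broadcast_shape((3, 1), (1, 4)))   # (3, 4)
print(broadcast_shape((2, 3), ()))       # (2, 3), a scalar broadcasts to any shape
```

Shapes such as (2, 3) and (2,) are incompatible under this rule, because the last dimensions 3 and 2 differ and neither is 1.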

Transposing Tensors

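Tensor transposition reorders the dimensions of a tensor. For a matrix, it swaps rows and columns; for higher-rank tensors, tf.transpose reverses the order of all dimensions by default, and its perm argument selects a different ordering. Transposing is commonly needed to line up shapes for matrix multiplication or to convert data between layouts.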

import tensorflow as tf

# Create a tensor

tensor = tf.constant([[1, 2, 3], [4, 5, 6]])

# Transpose the tensor

transposed_tensor = tf.transpose(tensor)

print("Original Tensor:")

print(tensor.numpy())

print("Transposed Tensor:")

print(transposed_tensor.numpy())

Output

Original Tensor:

[[1 2 3]

[4 5 6]]

Transposed Tensor:

[[1 4]

[2 5]

[3 6]]
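
For tensors with more than two dimensions, the perm argument of tf.transpose controls the new order. This sketch assumes TensorFlow 2.x; the images tensor and its (batch, height, width, channels) layout are hypothetical and chosen only for illustration.

```python
import tensorflow as tf

# Hypothetical batch of images laid out as (batch, height, width, channels).
images = tf.zeros([8, 32, 64, 3])

# perm lists, for each output dimension, which input dimension it is taken from.
channels_first = tf.transpose(images, perm=[0, 3, 1, 2])
print(channels_first.shape)          # (8, 3, 32, 64)

# Without perm, the order of all dimensions is reversed.
print(tf.transpose(images).shape)    # (3, 64, 32, 8)
```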

In conclusion, tensors and TensorFlow form the foundational elements of modern machine learning and deep learning workflows. Tensors, as multidimensional arrays, provide a flexible framework for representing data in various forms, from scalars to complex multi-dimensional structures. TensorFlow, with its powerful suite of libraries and tools, harnesses the computational capabilities of tensors to build and train sophisticated machine learning models efficiently. Together, tensors and TensorFlow empower researchers, developers, and data scientists to tackle diverse challenges in artificial intelligence, enabling groundbreaking innovations across a wide range of domains. As the field of machine learning continues to evolve, the understanding and mastery of tensors and TensorFlow remain essential skills for driving advancements and unlocking the full potential of AI technologies.
