Lakehouse: Securing data access

Stefan Starke

Stefan Starke

In this article, we'll delve into best practices for securing data access when using Microsoft Fabric Lakehouses. The goal is ensuring that your valuable data remains protected and enable people from within your organization to see subsets of the data that is relevant for their field of work. In the end we will have a look into sharing data with report creating users.

Key Takeaways

Data access restriction is important.

Do not use data masking as a security measure.

Create reports based on shared semantic models.

Starting Point

Let's assume we look at our Microsoft Fabric platform. We see fancy data pipelines that grep data from whatever sources you can think of, transform the hell out of the data leaving us with perfect, golden data inside a Lakehouse. Of course in form of nice delta tables.

Now how can we give data access to people within our organization and ensure that they

Only see tables that are relevant for them? (Object Level Security)

Only see columns that are relevant for them? (Column Level Security)

Only see data (rows) that is relevant for them? (Row Level Security)

Basically how can we enable them to query the data they are allowed to see, understand the data model and build reports on their own? Just in case you need it, the fancy buzzword is Fostering self-service reporting while safeguarding data.

Strategy

To realize this goal we adopted the following strategy:

Read-Only SQL Connection: Users are granted read-only access to the SQL endpoint of the lakehouse. By default, they are shielded from access to underlying tables, minimizing the risk of inadvertent alterations or unauthorized access.

Row-Level Security (RLS): RLS mechanisms are deployed to dynamically filter data at the row level based on user identities or membership in Microsoft 365 groups. This granular access control ensures that users only interact with data relevant to their designated roles or responsibilities.

Column-Level Security (CLS): Complementing RLS, CLS configurations restrict access to specific columns within tables, safeguarding sensitive information from unauthorized disclosure.

Data Masking: As an additional layer of defense, data masking techniques are employed to obfuscate sensitive information displayed to users. Primarily cosmetic, this approach must not be compared to CLS as the risk of data leakage via brute-force queries is a given fact. See example lateron.

Semantic Data Models for Self-Service Reporting: Empowering users with semantic data models serves as a structured abstraction layer, enabling them to navigate and interpret complex datasets intuitively. This approach fosters self-sufficiency in report creation while ensuring adherence to organizational data standards.

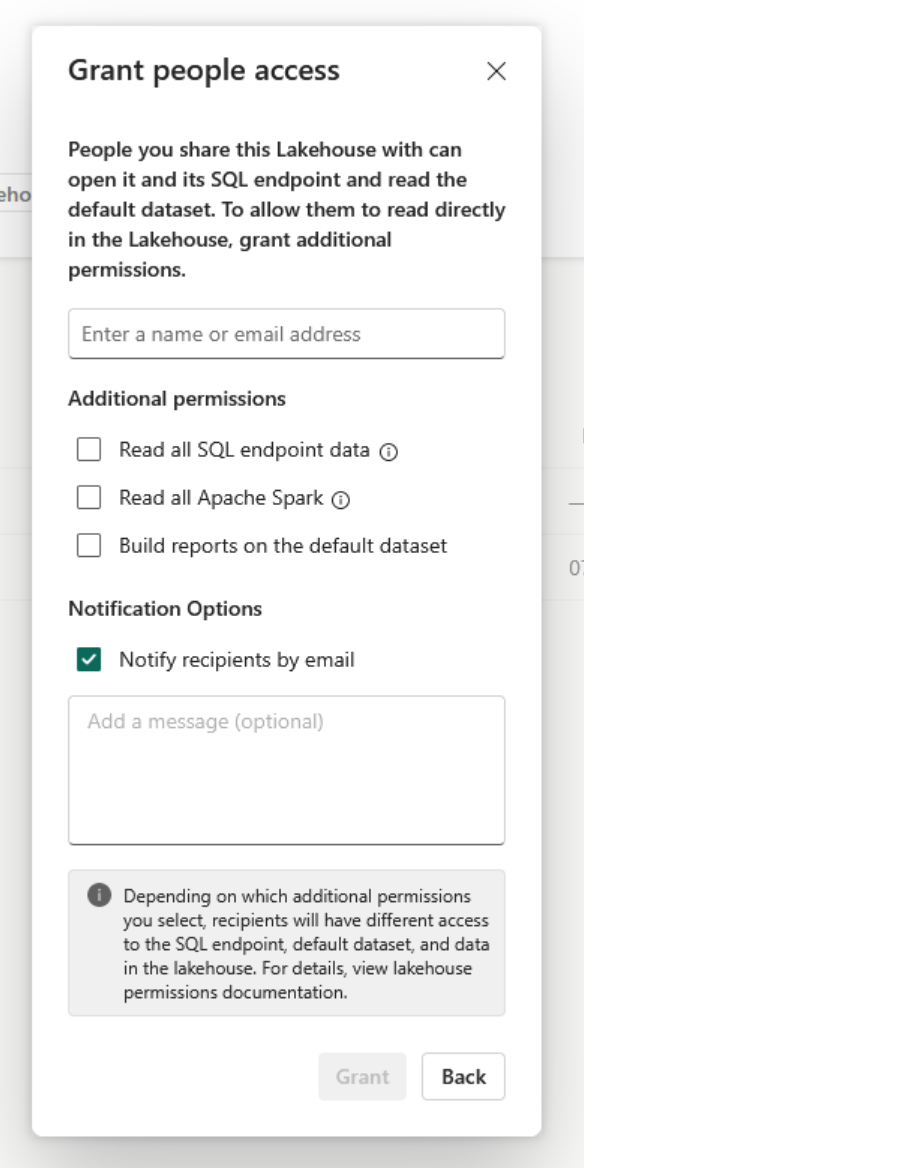

Share SQL Endpoint

Sharing the SQL endpoint of a lakehouse with recipients or groups is straight forward.

To give recipient a basic Connect permission like in an SQL server be sure to NOT select any of the available sharing options. By doing so per default no data is available to be read and GRANT permissions need to be explicity defined. Some kind of a trust nobody strategy.

For more details see MS Data Warehouse Sharing.

Row-Level Security

Row-level security ensures that user can only access rows they are allowed to see.

These restrictions are applied directly inside the data tier (database) every time data is accessed. This makes it more reliable, robust and less error prone than restrictions that are applied inside an application tier.

To enable row-level security two things are needed

a schema containing an inline table-valued function

a security policy using the created function as a predicate

Let' have a look at a simple example.

First creating a new schema including a function that returns 1 when the value of the email is the same as the user executing the query. The value of the user is read via USER_NAME(). To make it more robust all values are converted to lowercase.

CREATE SCHEMA sec;

GO

CREATE FUNCTION sec.customers_validate_rlssecurity(@email as VARCHAR(500))

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN SELECT 1 AS validate_rlssecurity_result

WHERE LOWER(@email) = LOWER(USER_NAME())

GO

Now all that is left to do is create a security policy, make the connection to the corresponding table (dbo.customers) and set its STATE to ON.

CREATE SECURITY POLICY CustomersFilter

ADD FILTER PREDICATE sec.customers_validate_rlssecurity(Email)

ON dbo.customers

WITH (STATE=ON);

GO

During development it can be useful to disable the policy temporarily. Just set the STATE to OFF.

ALTER SECURITY POLICY CustomersFilter

WITH (STATE = OFF);

Looking into performance of RLS is most likely an additonal blog post on its own. Roughly the impact will be comparable to using a view. Two simple, basic recommendations to keep in mind

Keep the predicate function simple. The more joins the worse the performance.

Ensure there are indices on referenced tables.

Column-Level Security

Nothing fancy here. Grant access to users, groups as needed.

GRANT SELECT ON customers(FirstName, LastName, Email) TO [restricted.robert@cloudflight.dev];

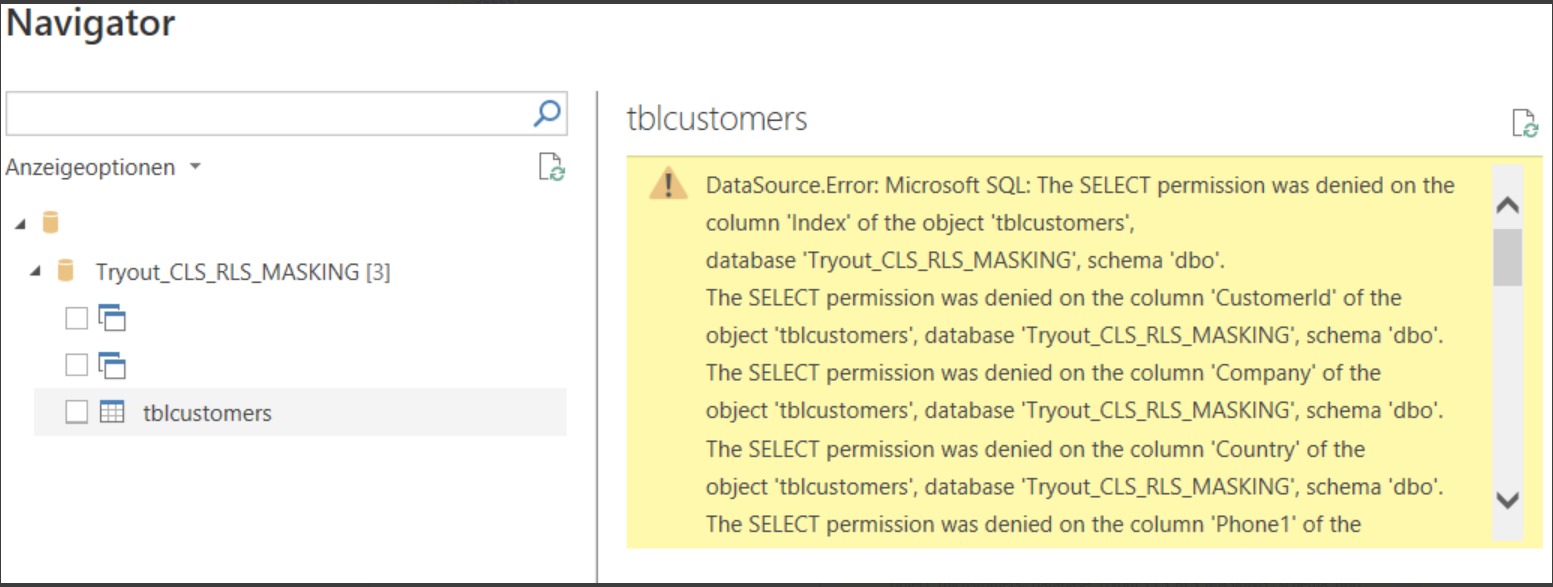

It is important to know, that CLS in place will prevent restricted users from executing SELECT * statements like the one below as they will fail with an error message. Wanted columns need to be specified explicitly in the SELECT statement.

SELECT * from customers;

Msg 230, Level 14, State 1, Line 12

The SELECT permission was denied on the column 'Age' of the object 'customers', database '****', schema 'dbo'.

In a way this may be seen as kind of leaking information as it tells the user that there are further columns and how they are named BUT at least they are not accessible.

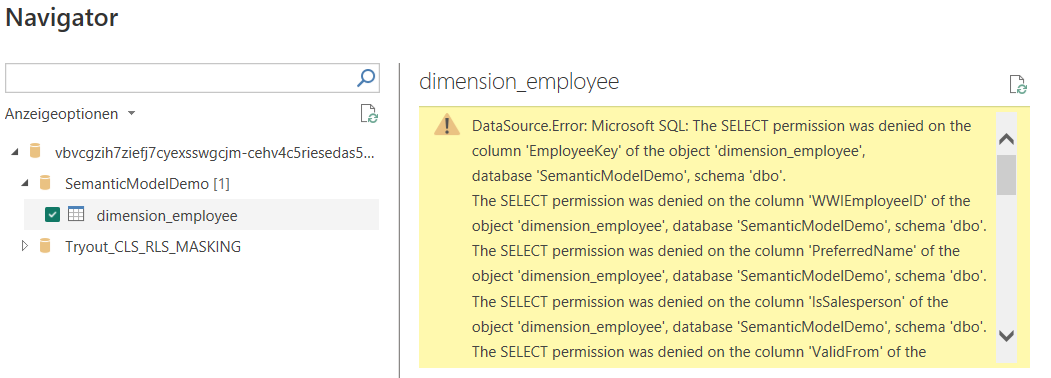

It does however result in the direct SQL endpoint not being usable when creating a Power BI report. It will fail connecting to the endpoint with the same exceptions as above.

This issue can be solved by creating a semantic model of the lakehouse and use it as the base for creating a report. We will come to that later. Moreover it is the recommended approach when speaking about performance.

For more details see MS Column-Level-Security.

Data Masking

The main use case of data masking is to hide sensitive data in the result of a query.

It can be applied easily on columns by using a command like

ALTER TABLE dbo.customers;

ALTER COLUMN City ADD MASKED WITH (FUNCTION = 'default()');

GO

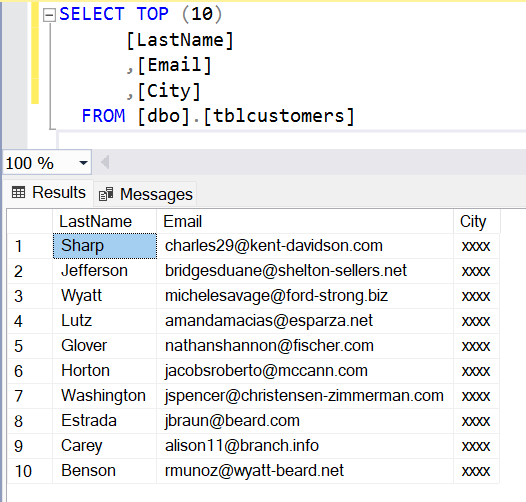

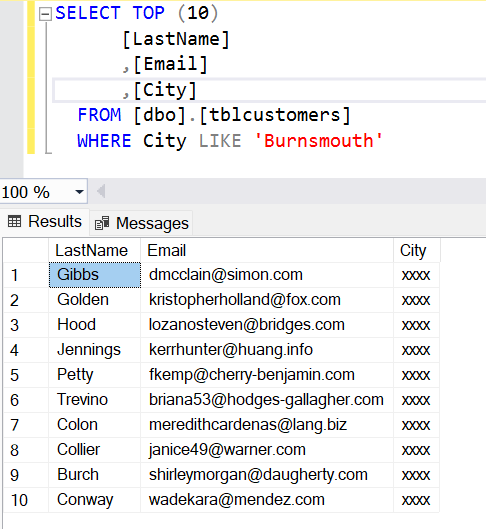

Resulting, as expected, in queried data being masked.

BUT there is a - from our point of view - rather alarming fact. One should never every use data masking as a replacement for column-level security. Data masking is applied once the data has been queried. This makes it possible to perform (automated) brute force queries for gathering insights into the masked data.

This is how - although data masking is in place - you can find out users from the marvelous, imaginary city Burnsmouth.

With having proper column-level security in place the user would not be able to get such insights as access to the city column would be denied completly.

Fore more details see MS Dynamic Data Masking.

Building reports with semantic models

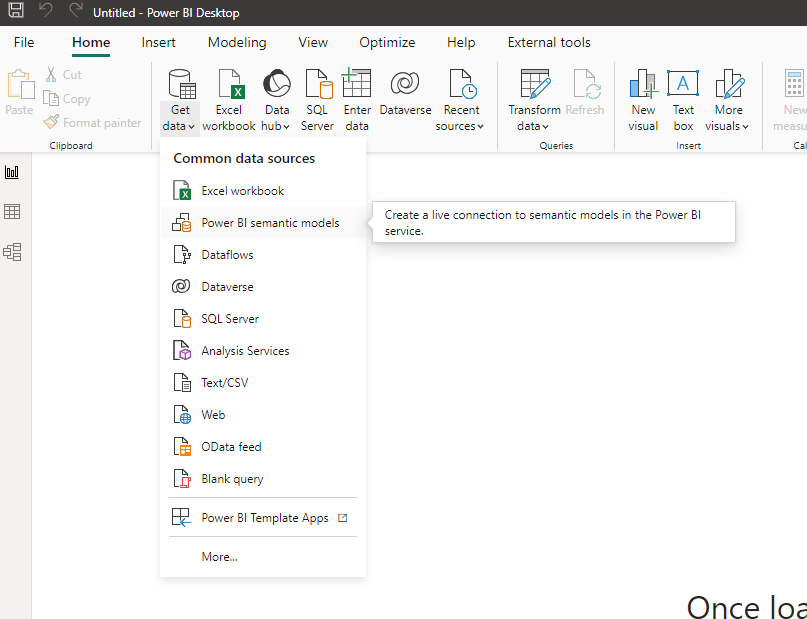

Now that everything is secure, how can we best share data - including an easy to grasp data model - with someone eager to create reports?

The best way to achieve this is by sharing semantic models.

Let's look at some of the reasons why.

✔️ Firstly we have seen above, that with column-level security in place Power BI will fail accessing data from just an SQL endpoint as it is unable to query the structure of the tables. Most likely Power BI is doing a SELECT * FROM table to get all available columns which fails with proper column-level security in place.

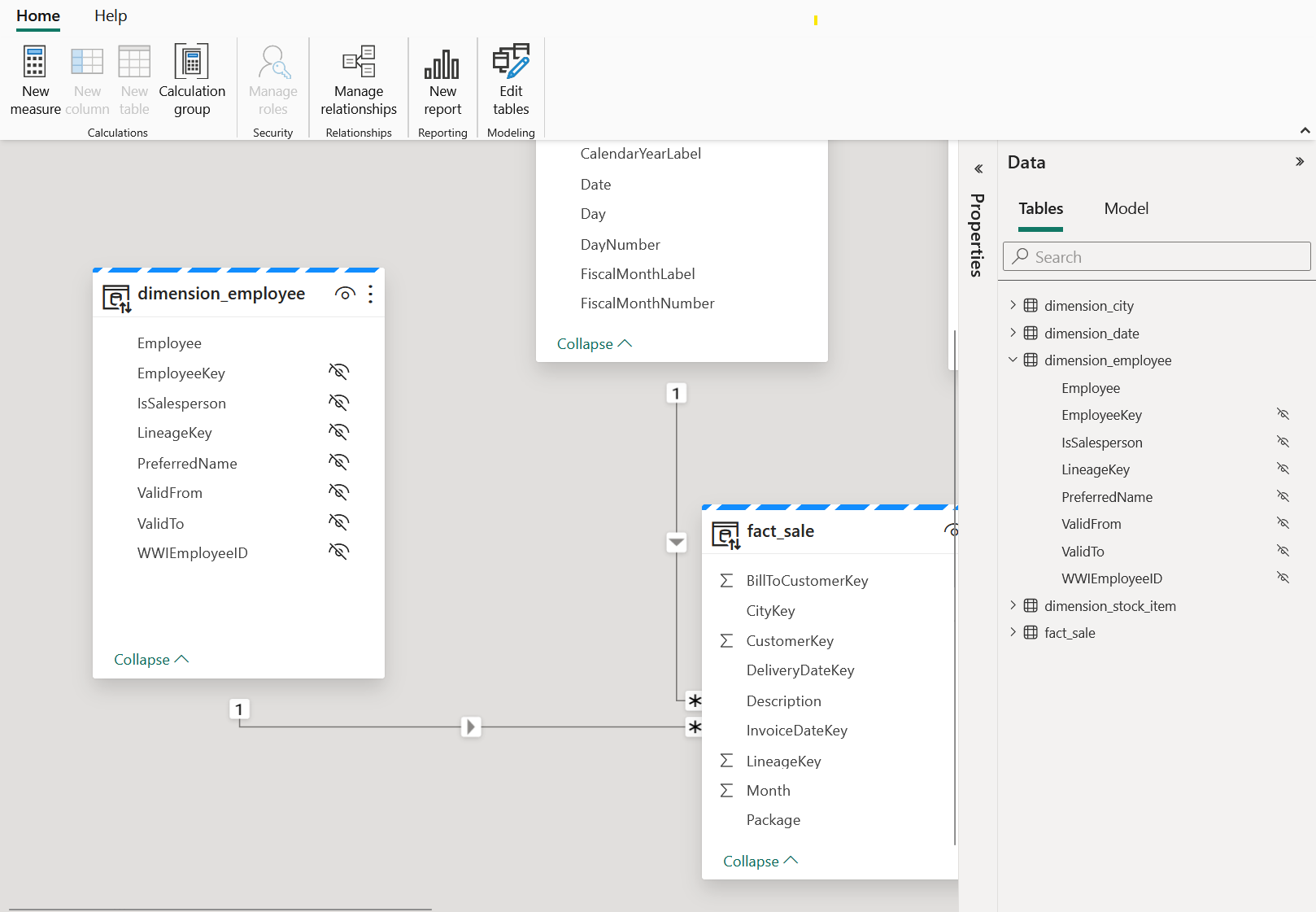

✔️ Secondly a semantic model is a logical description of an extract of your lakehouse, most likely from a specific domain (e.g. Sales, HR). Most of the times it will be a star schema and can help report creating users easily understand the data model.

✔️ Thirdly the default connection type when using semantic models is the so called Direct Lake mode. It combines the existing connection types - Direct Query and Import - by combining their advantages. This means it is fast and there is no need for periodically updating your reports dataset to reflect the latest data changes. Going into detail would be a blog post on its own. For now you can find out more details here.

Every lakehouse creates a default semantic model but the recommendation is to create specific ones for individual use cases, narrowed down to the tables, relationships and columns the users need and are allowed to access (combined with column-level security).

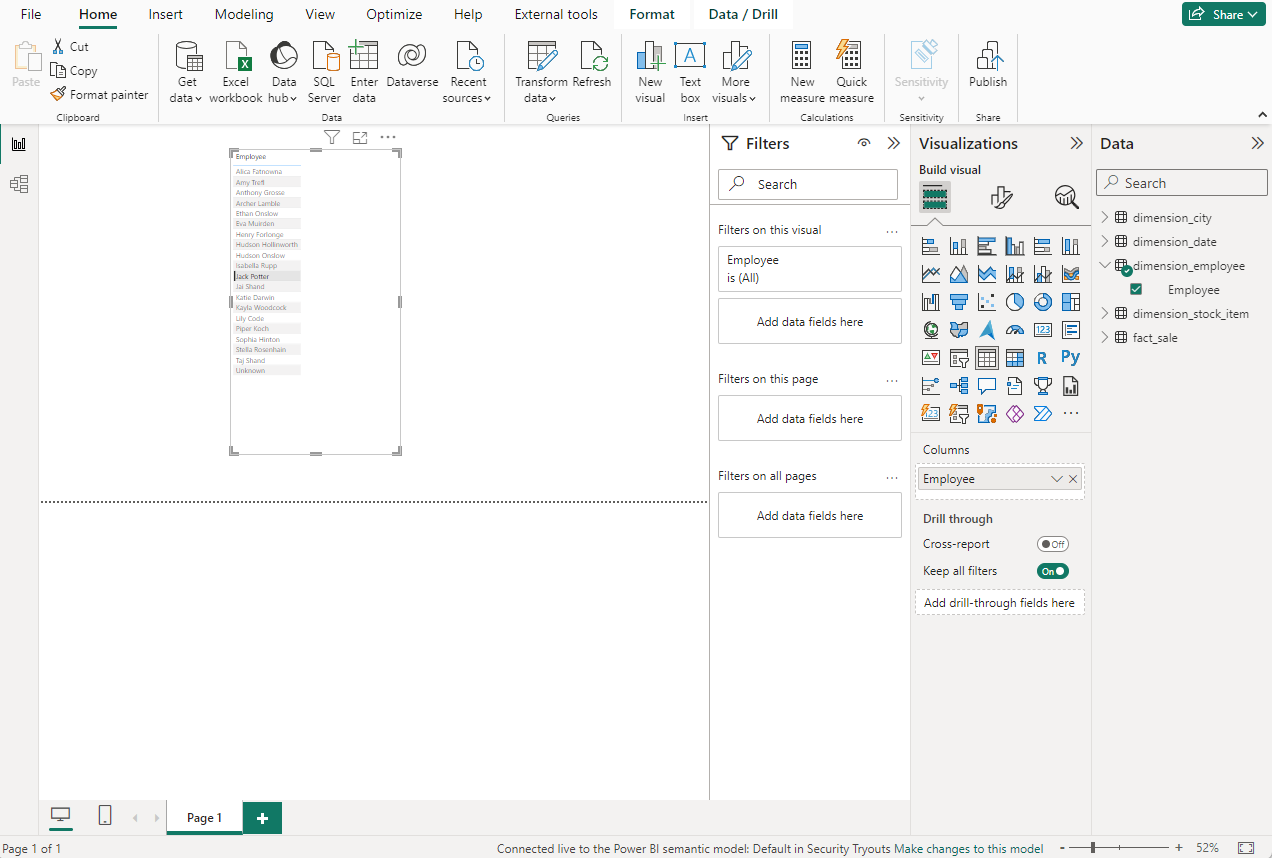

Let's illustrate this process.

Create a semantic model for a lakehouse

Include needed tables and model their relationships

Hide all columns that are not visible to the user because of column-level security (everything but the employee name from the dimension table)

Share the semantic model with a group of users

Let them import the semantic model in Power BI to build reports on top of it

After connecting we see the expected result. The employee table only shows the employee column. Job done.

Remember, with column-level security in place connecting to the SQL endpoint would fail during import.

That is it for now.

Stay tuned for more posts in the area of data engineering with Microsoft Fabric. Especially the important topic of how to automatically test granted permission and security in general might be an interesting next read.

Subscribe to my newsletter

Read articles from Stefan Starke directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by