Intents, Solvers, and Everything Aggregators

Tannr Allard

Blockchains are the Best Substrate for Intents

The goal of intent systems is to outsource the process of state transition generation. State transition generation is performed on-chain in traditional smart contract architectures. Yet, everybody who interacts with a smart contract system always has in mind a particular goal they wish to achieve. This goal - or intent - is the real reason for the smart contract system’s existence. The efficacy with which it achieves this goal on behalf of the end user is the metric by which the system is judged.

In the general case, this can be said of any software system or indeed any tool at all: we choose the tools that best allow us to achieve the outcome we have in mind. If we had no outcome in mind, we’d have no reason to use any tools at all.

So why are intents particularly intriguing in the blockchain context? Blockchains provide a globally accessible “shared” space; any software or data on the chain can interact (in some fashion) with any other software or data. There are no restrictions on the origins of the software that exists on chain. Blockchains are the best system for intents because they provide the best substrate for generating and utilizing common knowledge.

Common Knowledge is the Foundation of Intents

Intent systems stand to benefit greatly from blockchains because blockchains are common knowledge systems. Common knowledge is a unique form of knowledge held among a collection of participants. Informally, it is knowledge wherein each participant not only knows some fact, but can also:

Conclude whether or not any other participant knows that fact, and

Conclude whether or not any other participant knows who knows that fact
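The informal definition above has a standard formalization in epistemic logic. Writing $K_i\varphi$ for “participant $i$ knows $\varphi$” and $E_G\varphi$ for “everyone in group $G$ knows $\varphi$”, common knowledge $C_G\varphi$ is the infinite conjunction of ever-deeper levels of “everyone knows that everyone knows”. This is the textbook definition, not one specific to this post:

```latex
E_G\varphi \;\equiv\; \bigwedge_{i \in G} K_i\varphi,
\qquad
E_G^{1}\varphi \;\equiv\; E_G\varphi,
\qquad
E_G^{k+1}\varphi \;\equiv\; E_G\!\left(E_G^{k}\varphi\right),
\qquad
C_G\varphi \;\equiv\; \bigwedge_{k \ge 1} E_G^{k}\varphi
```

Informally, chain state is the kind of public fact this hierarchy describes: everyone can read it, and everyone knows that everyone else can read it.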

This type of knowledge is useful for intent systems because it enables collective action and preserves the possibility of optimal selection. Collective action requires coordination, which in turn requires participants to operate within a shared set of base assumptions and to share a common protocol for communication and decision making. Optimal selection (especially in a decentralized and trust-minimized setting) requires that a decision maker have all options available and a means to choose any of them. Common knowledge meets both of these requirements.

Some blockchain designs are better at facilitating common knowledge generation & stewardship than others. Knowledge about the full state of the system is what allows an end-user (or application) to choose the best means by which to achieve their goal. The fact that the knowledge is common is what preserves and guarantees the freedom for users to choose.

Common knowledge is the bedrock of interoperability

Smart contracts distinguish themselves from traditional software through their inherent design for open access and direct interaction within a decentralized, globally shared environment. This architecture fosters interoperability in the form of common knowledge about the capabilities and interfaces of smart contracts. Despite access control mechanisms, and despite the semantic opacity of contract code to other smart contracts, smart contract systems are materially more interoperable than traditional software. The level of interoperability (and the work required to achieve it) is a direct result of a particular blockchain architecture’s ability to facilitate this sort of common knowledge.

The critical relationship between common knowledge and interoperability lies in the mutual awareness among blockchain participants that any smart contract is, in principle, accessible and capable of integration, within the bounds of its designed access permissions. This environment engenders a competitive and cooperative ecosystem where the design and development of smart contracts are inherently informed by their potential for integration within a larger network of independently developed contract systems.

Current technology fails to harness the power of common knowledge

The fact that all smart contract systems are equally visible to an end user has an important implication for smart contract developers: their users (other smart contracts, front ends, etc.) always have immediate access to alternative software that may do the job better. The goal which a user has in mind when interacting with a smart contract system may, at any time, be better achieved either by an alternative smart contract system or by an ad-hoc combination of independent systems, connected by a transaction that spans them all.

The best smart contracts for an end-user are the contracts that are maximally useful to them according to their goals. Since all smart contract systems exist out in the open for anybody to use, end-users are free to use whichever contracts (including combinations of independent contracts) best suit their needs in the moment.

This sounds great, yet it is extremely challenging for any end-user to make this decision. Decision making is a significant computational or cognitive burden in this setting, and the mechanisms required for efficient discovery and selection across a global set of combinations of choices do not exist.

This is why services like exchange aggregators are useful and desirable: they help users find possible trade paths according to their preferences and they help users choose the best one out of multiple viable options. Of course, a completely universal protocol for this would be ideal, but it can be hard to imagine how it might look or function. Let’s begin with a look at exchange aggregators and iteratively consider ways in which their design might be made more universal or generic.

Aggregators are Search & Selection Services

Exchange aggregators search and select across a multitude of potential exchange systems to identify the best one for the end user. Of course, the aggregator itself is then the limiting factor on what counts as “best”; which systems are worthy of consideration; and how much say the user has in defining what factors are considered when deciding what is “best”.

Aggregators can facilitate trades between disconnected markets

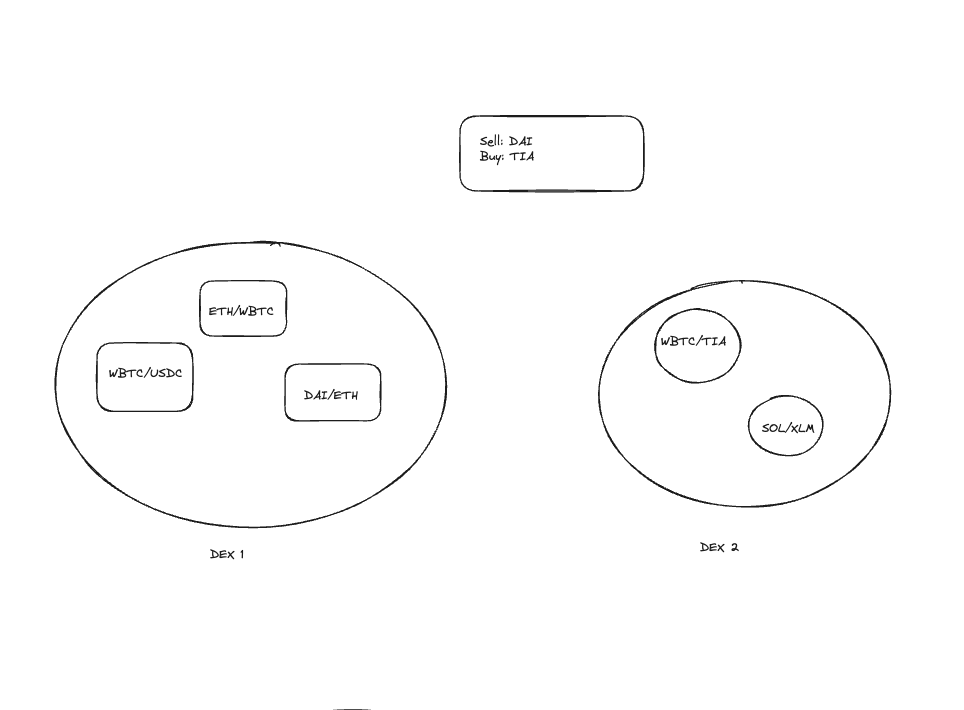

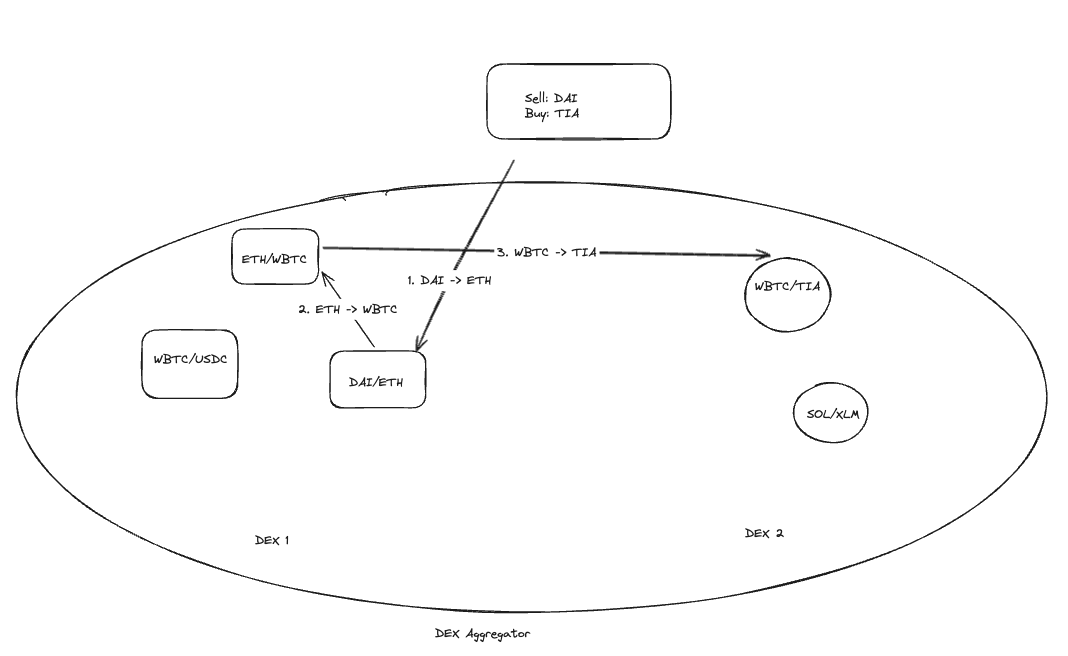

DEX aggregators can provide multiple advantages over single-exchange interactions when it comes to order routing. First, they can facilitate trades in cases where no single DEX has a route from the token being sold to the token being purchased. As an example, consider a purchase of $TIA with $DAI given the two DEXs in the figures. DEX1 has three liquidity pools, and DEX2 has two liquidity pools.

DEX1 is the only exchange providing a market for $DAI, while DEX2 is the only exchange providing a market for $TIA. A user limited to either single exchange would be unable to complete this trade atomically; they’d have to manually discover a path from $DAI to $TIA and then traverse that path by submitting transactions one after another.
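To make the routing gap concrete, the sketch below models each DEX as a set of pools and runs a breadth-first search over the resulting token graph. The pool layout is hypothetical (the figures’ exact pools are not reproduced here), as are the names `POOLS`, `find_route`, and `pools_on`; the only property carried over from the text is that $DAI trades only on DEX1 and $TIA only on DEX2.

```python
from collections import deque

# Hypothetical pool layout; the pools in the figures may differ.
# Each pool is (venue, token_a, token_b) and can be traded in either direction.
POOLS = [
    ("DEX1", "DAI", "USDC"),
    ("DEX1", "USDC", "ETH"),
    ("DEX1", "ETH", "WBTC"),
    ("DEX2", "ETH", "ATOM"),
    ("DEX2", "ATOM", "TIA"),
]


def find_route(pools, sell, buy):
    """Breadth-first search for the fewest-hop route from `sell` to `buy`.

    Returns a list of (venue, from_token, to_token) hops, or None if no route exists.
    """
    graph = {}
    for venue, a, b in pools:
        graph.setdefault(a, []).append((venue, b))
        graph.setdefault(b, []).append((venue, a))

    queue = deque([(sell, [])])
    visited = {sell}
    while queue:
        token, path = queue.popleft()
        if token == buy:
            return path
        for venue, nxt in graph.get(token, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(venue, token, nxt)]))
    return None


def pools_on(venue):
    """Restrict the search to a single exchange's pools."""
    return [p for p in POOLS if p[0] == venue]


# Neither exchange alone can route DAI -> TIA ...
print(find_route(pools_on("DEX1"), "DAI", "TIA"))  # None
print(find_route(pools_on("DEX2"), "DAI", "TIA"))  # None
# ... but the union of both exchanges' pools can, crossing from DEX1 to DEX2 at ETH.
print(find_route(POOLS, "DAI", "TIA"))
# [('DEX1', 'DAI', 'USDC'), ('DEX1', 'USDC', 'ETH'), ('DEX2', 'ETH', 'ATOM'), ('DEX2', 'ATOM', 'TIA')]
```

Real aggregators weigh prices, fees, and gas rather than hop count; BFS here only shows that a route exists in the union of the venues and in neither venue alone.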

Requiring the user to discover the route manually and then execute it is undoubtedly a poor user experience. All of the liquidity required to facilitate this trade exists within the same shared space, so a $DAI/$TIA trade should really be no different from, say, an $ETH/$WBTC trade. But in the simple market structure above, it is different, and this difference has a material impact on the trader.

The liquidity sources are split amongst different exchanges. The partitioning of assets across exchanges introduces boundaries between DEX1’s pools and DEX2’s pools. These boundaries add friction to the user experience by introducing additional financial costs (through fees and slippage accrual) as well as costs in the form of labor, time & opportunity.

The Web3 community often critiques the heavily siloed nature of Web2 infrastructure. Yet silos abound in Web3 as well. Exchange aggregators are partly motivated by the friction caused by economic silos.

DEX aggregators help ameliorate this problem by abstracting over the silos that DEXs create, unifying otherwise fragmented liquidity. Aggregators can also split a user’s trade across multiple markets in cases where splitting might ultimately be more cost-effective for the end user.
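Why splitting can help is easiest to see with a concrete pricing model. The sketch below assumes constant-product (x * y = k) pools with no fees; the post does not say which pricing model the DEXs use, and the reserves and function names are hypothetical. Because each pool’s price worsens as more volume flows through it, sending part of the order to a second pool can yield more total output.

```python
# Sketch: splitting one order across two pools.
# Assumes constant-product (x * y = k) pools and ignores fees; reserves are hypothetical.

def amount_out(reserve_in: float, reserve_out: float, amount_in: float) -> float:
    """Output of a constant-product swap, ignoring fees."""
    return reserve_out * amount_in / (reserve_in + amount_in)


POOL_A = (1_000.0, 1_000.0)  # (reserve of token sold, reserve of token bought)
POOL_B = (1_000.0, 1_000.0)


def split_output(total_in: float, fraction_to_a: float) -> float:
    """Total output when `fraction_to_a` of the order goes to pool A and the rest to pool B."""
    in_a = total_in * fraction_to_a
    in_b = total_in - in_a
    return amount_out(*POOL_A, in_a) + amount_out(*POOL_B, in_b)


def best_split(total_in: float, steps: int = 100) -> tuple[float, float]:
    """Brute-force search over split fractions 0, 1/steps, ..., 1."""
    candidates = [i / steps for i in range(steps + 1)]
    best = max(candidates, key=lambda f: split_output(total_in, f))
    return best, split_output(total_in, best)


order = 100.0
print(round(split_output(order, 1.0), 3))  # everything through pool A: 90.909
print(best_split(order))                   # for identical pools, the even split (0.5) wins
```

With fees and extra gas per additional pool the optimum shifts, which is part of why the split decision is worth delegating to an aggregator.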

Aggregator of Aggregators (higher order aggregators)

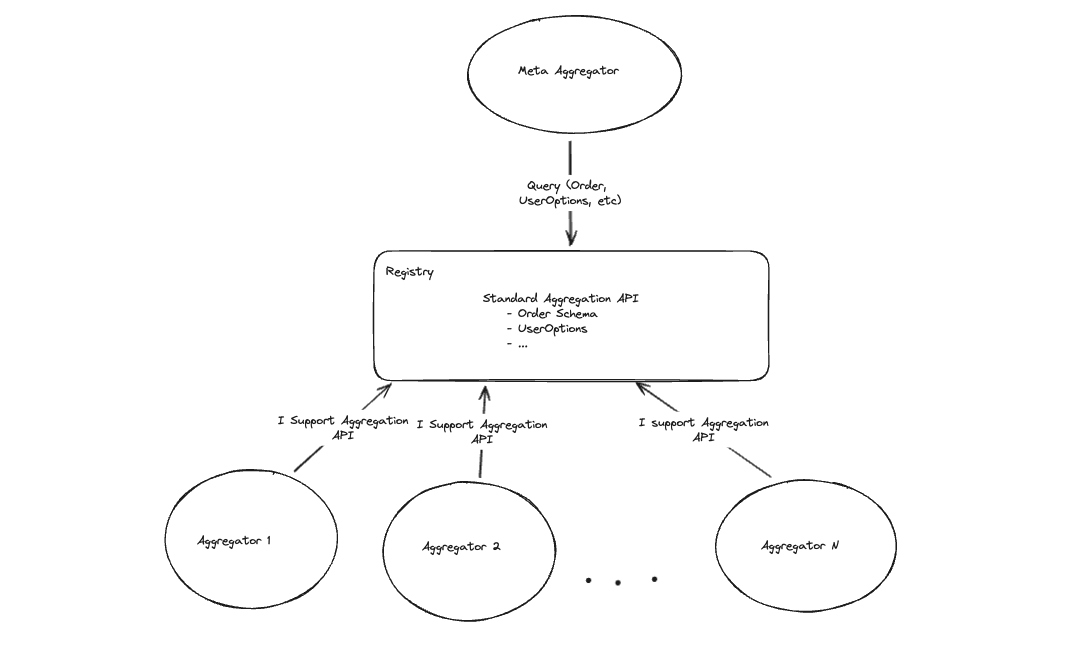

Further customizability might be achieved by a meta-aggregator, or first-order aggregator, that aggregates over the DEX aggregators (zero-order aggregators). The trading options that a meta-aggregator can provide to an end user are the union of all the options provided by the underlying zero-order aggregators.

A first-order aggregator could, in principle, provide two sorts of benefits beyond what zero-order aggregators could provide directly:

Greater order customization: Because aggregators are far less immutable than the on-chain DEXs they interact with, each is likely to offer its own set of unique benefits. A meta-aggregator can surface the benefits of all its underlying aggregators, giving the end-user greater control over how the final route for their trade is selected.

More options without more overhead: The first-order aggregator outsources the burdensome order-routing computations to the underlying zero-order aggregators; it need only provide search and selection among the routes these aggregators offer. Depending on the aggregators’ own architecture & interfaces, the first-order aggregator might even chart a trading path that spans multiple underlying aggregation systems. But even without that kind of sophisticated functionality, it has clear advantages over using any single underlying aggregator directly. In the simple case, the first-order aggregator just pits multiple aggregator services against each other. Each service tries to provide the best trading path to the end user, so together they act as a natural filtration system. The meta-aggregator then lets the user choose the best of these best candidates.
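The simple case described above (take the union of what each zero-order aggregator offers, then let the user’s own criteria select) can be sketched in a few lines. The `Aggregator` interface, the `Route` fields, `StaticAggregator`, and `meta_quote` are all hypothetical; no real aggregator API is assumed to look like this.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class Route:
    source: str              # which zero-order aggregator proposed the route
    hops: tuple[str, ...]    # venues the route passes through
    amount_out: float
    gas_cost: float          # denominated in the output token, for simplicity


class Aggregator(Protocol):
    """Hypothetical interface a zero-order aggregator exposes to the meta-aggregator."""
    name: str

    def quote(self, sell: str, buy: str, amount_in: float) -> list[Route]: ...


@dataclass
class StaticAggregator:
    """Stand-in aggregator returning canned quotes; a real one would search DEX state."""
    name: str
    routes: list[Route]

    def quote(self, sell: str, buy: str, amount_in: float) -> list[Route]:
        return list(self.routes)


def meta_quote(aggregators: list[Aggregator], sell: str, buy: str, amount_in: float,
               score: Callable[[Route], float]) -> Route:
    """Union of all candidate routes, then selection by a user-supplied scoring function."""
    candidates = [r for agg in aggregators for r in agg.quote(sell, buy, amount_in)]
    if not candidates:
        raise LookupError("no aggregator returned a route")
    return max(candidates, key=score)


aggs = [
    StaticAggregator("agg_x", [Route("agg_x", ("DEX1", "DEX2"), 101.0, 3.0)]),
    StaticAggregator("agg_y", [Route("agg_y", ("DEX3",), 99.5, 0.5)]),
]

# One user ranks by gross output; another nets out gas.
gross = meta_quote(aggs, "DAI", "TIA", 100.0, score=lambda r: r.amount_out)
net = meta_quote(aggs, "DAI", "TIA", 100.0, score=lambda r: r.amount_out - r.gas_cost)
print(gross.source, net.source)  # agg_x agg_y
```

The scoring function is where the greater order customization lives: two users facing the same candidate set can legitimately get different answers.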

First-order aggregators have the opportunity to provide greater customizability & expressiveness to the end-user. They can also offer a more comprehensive search process and a potentially more optimal selection process (than any single aggregator).

The higher-order aggregator concept does not fundamentally solve the silo problem, though. The complexity cost of economic silos (such as liquidity fragmentation) is internalized in some form or another. The user tends to pay for this in the end.

The use of a higher order aggregator does not guarantee that all viable trade routes for a user’s order will actually be explored. This is especially the case because the global state is constantly changing and no aggregator service can take all of these changes into account quickly enough to actually serve its users. Notably, aggregators are pure “search and selection” services with “best effort” level outcomes; there are no execution guarantees.
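The “best effort” caveat can be made concrete with a stale quote. The sketch again assumes a fee-less constant-product pool with hypothetical reserves: a quote is computed against one snapshot of state, another trader’s swap lands first, and the realized output diverges from the quoted one.

```python
# Sketch: a quote computed against one snapshot of pool state can be wrong by
# execution time. Constant-product pool, no fees, hypothetical numbers.

def amount_out(reserve_in: float, reserve_out: float, amount_in: float) -> float:
    """Output of a constant-product swap, ignoring fees."""
    return reserve_out * amount_in / (reserve_in + amount_in)


reserves_at_quote = (1_000.0, 1_000.0)
quoted = amount_out(*reserves_at_quote, 100.0)

# Before the user's transaction lands, another trader sells 200 of the same token
# into the pool, moving the reserves.
other_in = 200.0
other_out = amount_out(*reserves_at_quote, other_in)
reserves_at_execution = (reserves_at_quote[0] + other_in, reserves_at_quote[1] - other_out)

realized = amount_out(*reserves_at_execution, 100.0)
print(round(quoted, 2), round(realized, 2))  # 90.91 64.1
```

The aggregator’s quote carries no guarantee about the outcome; the user only learns the realized output once the transaction executes.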

The root problem at play here is: the discovery and selection mechanisms cannot keep pace with the available choices on the network, since the choices exist in a globally open system that anyone can update at any time.

New DEXs, new liquidity pools, new aggregators, new assets, and new financial systems are always emerging at the edge. Even the best aggregator cannot ensure that it routes over all of them. Because of this, no aggregator can guarantee truly optimal solution discovery.

Aggregators → Higher Order Aggregators → Open Aggregator Protocols

One possible remedy that starts to get at the heart of the problem is to invert the mechanism by which the meta-aggregator adds support for new dex aggregators. More precisely, the meta-aggregator could transition from actively integrating with specific dex aggregators to passively sourcing aggregators who opt in of their own volition.

In other words, we remove the bottleneck of an integrator by placing integration responsibility on the integrated system itself. This makes sense for the integrator because it doesn’t have to actively track all new developments. It makes sense for new systems as well, because it enhances their discoverability.

The shift of integration responsibility from the higher-order aggregator’s developer team to, well, anybody in the larger blockchain network certainly increases integration capacity. In this hypothetical system, aggregators (or independent parties) would “register” an aggregator as a viable source of order-routing information. This approach would allow the meta-aggregator to outsource the responsibility of keeping pace with the highly dynamic set of settlement destinations for the end user’s desired trade.

Following this approach would require standardization of the meta-aggregator<>dex-aggregator interface, of course. Due to the permissionless nature of this “dex aggregator registry”, verification of aggregator compliance would be required. At the least, something like ERC-165 would likely be used to programmatically enforce interface support. Checking for correct implementation of the semantics expected of an aggregator is a different story entirely.
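A sketch of the registry idea follows, with Python’s runtime-checkable protocols standing in for an ERC-165 `supportsInterface` check. All names (`DexAggregator`, `Registry`, and the example aggregators) are hypothetical. It illustrates the closing point above: an interface check admits anything with the right shape, including an aggregator whose quotes are meaningless.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class DexAggregator(Protocol):
    """Hypothetical standardized interface (loosely analogous to an ERC-165 interface ID)."""

    def quote(self, sell: str, buy: str, amount_in: float) -> float: ...


class Registry:
    """Permissionless registry: anyone may register, subject only to an interface check."""

    def __init__(self) -> None:
        self._entries: dict[str, DexAggregator] = {}

    def register(self, name: str, aggregator: object) -> None:
        # Structural check only: confirms a `quote` method exists, says nothing about its behavior.
        if not isinstance(aggregator, DexAggregator):
            raise TypeError(f"{name} does not implement the aggregator interface")
        self._entries[name] = aggregator

    def names(self) -> list[str]:
        return sorted(self._entries)


class HonestAggregator:
    def quote(self, sell: str, buy: str, amount_in: float) -> float:
        return amount_in * 0.99  # placeholder pricing


class MisleadingAggregator:
    def quote(self, sell: str, buy: str, amount_in: float) -> float:
        return amount_in * 1_000  # advertises an impossible price


class NotAnAggregator:
    pass


registry = Registry()
registry.register("honest", HonestAggregator())
registry.register("misleading", MisleadingAggregator())  # accepted: semantics are invisible to the check
try:
    registry.register("bogus", NotAnAggregator())
except TypeError as err:
    print(err)  # bogus does not implement the aggregator interface
print(registry.names())  # ['honest', 'misleading']
```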

Why require aggregator registration at all?

While this approach would mark an incremental improvement in search and selection of trade routes, it is also significantly more complex. Further, it is only a partial solution. The existence of a registry creates a hard boundary between the meta-aggregator’s search space and… the rest of the global network. Furthermore, it is possible (and likely) that one aggregator or another provides special features, configuration options, or even workflows that fall outside the standardized aggregator interface. Standardization would hide these uniquely valuable features. This forces developers to choose between compatibility & discoverability on one hand and innovation & competitiveness on the other.

The evolution from DEX → Aggregators → Meta-aggregators is one of expanding the search space, the search criteria, and the selection criteria. Higher-order aggregators do not inherently provide access to the globally optimal set of trading routes. It is conceivable that some other route, not discoverable by the underlying aggregators individually nor collectively, might exist.

In common smart contract architectures, developers must always choose between uniqueness on one hand and integration & discoverability on the other; dapps can’t route over opaque EVM bytecode that they don’t already understand a priori.

Let’s continue on the journey of hypothetically expanding the aggregator concept to understand its limitations. Let’s move beyond “aggregators of aggregators of…” models and consider the hypothetical super aggregator.

A Super-DEX Aggregator for Global Trade Routing (and how current blockchain architecture blocks us from having one)

While the registry-based model reduces the overhead of supporting new aggregators, it is still not all-things-considered search. To step beyond the higher-order aggregator model, we’d need a way to identify every possible trading path across the entire global system. Computing such a set seems completely infeasible for multiple reasons: the dynamic state of the chain, the combinatorial explosion of choices, semantic opacity, and the inability to programmatically reason about the entire state transition that results from submitting the trade transaction.

Dynamic State of the Chain: The set of possible routes is undergoing constant change. By the time a candidate route is chosen, it may very well be obsolete.

Combinatorial Explosion: The routing problem suffers from combinatorial explosion because the path through which the trade is routed may have multiple steps, each of which is only valid to consider based on its predecessors. The set of possible choices quickly becomes a massively unwieldy decision tree. A naive approach, such as backtracking over a massive & ever-changing data set, is not promising.

Contracts can’t express useful rules about other contracts: Such super-aggregator routing couldn’t be permissionless even if we wanted it to. The reason is semantic opacity. Smart contracts only have access to so much information. Even if a smart contract has a target address and knows the interface of a target, it does not have access to the target’s semantics. The logic that’s triggered when contract A calls contract B’s function is opaque to contract A at runtime. The semantic opacity of the traditional smart contract programming paradigm significantly inhibits integration automation because contracts cannot detect erroneous or malicious behavior.

Contracts can’t reason about the state transition in which they are taking part: Finally, even without the problem of semantic opacity, the on-chain component of the meta-aggregator does not have access to the information it would need to ensure safe integrations in a permissionless & trustless environment. The trader’s transaction may result in a long sequence of contract calls across different contract systems. Each contract in the execution sequence lacks access to the entire state change. It therefore cannot enforce important sets of invariants without relying on overly restrictive methods like explicit whitelisting & blacklisting. Smart contract systems ensure safe interoperation with third party code by relying on manual human intervention (such as admin permissions or governance mechanisms) to approve or deny access to such untrusted software for which the authors’ identities may not even be known.
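The combinatorial-explosion point can be quantified under a deliberately simplified, hypothetical model: every pair of tokens has exactly one pool, and we count the simple (no repeated token) routes between two fixed tokens. A route with k hops picks an ordered sequence of k - 1 intermediate tokens from the n - 2 others, so the count is a sum of permutations; the DFS enumeration below cross-checks that closed form.

```python
from math import perm


def count_paths_dfs(n_tokens: int, source: int = 0, target: int = 1) -> int:
    """Count simple paths from `source` to `target` in a complete graph on n_tokens
    (one pool per token pair) by explicit depth-first enumeration."""
    def dfs(node: int, visited: frozenset) -> int:
        if node == target:
            return 1
        return sum(
            dfs(nxt, visited | {nxt})
            for nxt in range(n_tokens)
            if nxt not in visited
        )
    return dfs(source, frozenset({source}))


def count_paths_formula(n_tokens: int) -> int:
    """Closed form: a k-hop path orders k-1 intermediates chosen from the n-2 other tokens."""
    return sum(perm(n_tokens - 2, k - 1) for k in range(1, n_tokens))


for n in range(3, 11):
    print(n, count_paths_formula(n))  # grows roughly like e * (n-2)!; n = 10 already gives 109601
```

This undercounts the real problem: actual markets have several pools per pair, and each hop’s output depends on the amount produced by the previous hop and on constantly changing state.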

The ideal super-aggregator would be safe, permissionless, and trustless. Safety is absolutely non-negotiable in blockchain applications and is the one property we can’t compromise on. Due to fundamental limitations of the programming models we use today, we can’t have complete safety without also adding some form of permission system that’s operated by some trusted party (the “party” in this context is an abstraction and refers not only to individual operators but also to trusted sets of presumably incentive-aligned operators such as DAO members, for example).

Revisiting the concept of common knowledge, we can interpret this problem as a lack of common knowledge at the smart contract (programming model) level. In current smart contract systems based on the account model, contracts do not share common knowledge about the transaction in which they are executing, nor about how to interpret the meaning of other contracts. The interoperability of modern smart contract systems is consequently superficial, and its ability to facilitate intent-centric interactions is fundamentally limited. This is a great example of how the design decisions at the base layer of a system cannot be perfectly abstracted away.

The Everything-Aggregator (a generalized intents protocol)

For the sake of discussion, assume that we could remove the need for an explicit registry in our hypothetical meta-aggregator. Let’s assume that it can discover all possible trading routes and then select the best one for the end-user. Given a system with such powerful search and selection capabilities, perhaps systems other than DEXs should be included in the meta-aggregator’s search space. Perhaps we’d call this system the “Everything Aggregator”.

This would make a lot of sense for the end user. After all, other financial services could be quite useful in the context of identifying the best sequence of actions to fulfill a user’s trade. A simple & well-known example of this is flash lending. Flash lending services enable users to take out extremely short-term loans (“flash loans”) that they pay back by the end of the same transaction in which their debt was initially created.

Flash loans enable traders to make trades they would otherwise be unable to execute by temporarily granting them access to capital. Flash loans introduce distinct parties who play distinct roles in a trade: traders discover and capitalize on profit opportunities, while lenders provide the capital required to exploit those opportunities. In a way, the trader and the lender are engaging in a permissionless, collaborative trading process.
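The defining property of a flash loan (repay within the same transaction, or the loan never happened) rests on transaction atomicity. The sketch models a transaction as a function run against a copy of state that is committed only if it finishes without reverting. `run_atomically`, `flash_loan`, the fee, and the balances are all hypothetical; on an EVM chain, revert semantics provide the rollback.

```python
import copy


class Revert(Exception):
    """Raised to abort a transaction; all of its state changes are discarded."""


def run_atomically(state: dict, tx) -> dict:
    """Execute `tx` against a copy of `state`; commit only if it completes without reverting."""
    working = copy.deepcopy(state)
    try:
        tx(working)
    except Revert:
        return state  # all-or-nothing: nothing the transaction did persists
    return working


FEE_BPS = 9  # hypothetical flash-loan fee of 0.09%


def flash_loan(state: dict, trader: str, amount: int, use_funds) -> None:
    """Lend `amount` from the pool, let the trader act, and require repayment plus fee in the same tx."""
    fee = amount * FEE_BPS // 10_000
    state["pool"] -= amount
    state[trader] += amount
    use_funds(state)
    if state[trader] < amount + fee:
        raise Revert("loan not repaid")
    state[trader] -= amount + fee
    state["pool"] += amount + fee


def profitable_arbitrage(state: dict) -> None:
    state["alice"] += 50  # stand-in for a trade that captures a price discrepancy


def failed_arbitrage(state: dict) -> None:
    state["alice"] -= 5  # the opportunity vanished and the trade lost money


start = {"pool": 1_000_000, "alice": 0}
ok = run_atomically(start, lambda s: flash_loan(s, "alice", 10_000, profitable_arbitrage))
bad = run_atomically(start, lambda s: flash_loan(s, "alice", 10_000, failed_arbitrage))
print(ok)   # {'pool': 1000009, 'alice': 41}
print(bad)  # {'pool': 1000000, 'alice': 0}
```

The lender takes no credit risk because an unrepaid loan simply reverts, which is what lets the two roles cooperate without trusting each other.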

An Everything Aggregator can search & select over a broader class of financial applications than can the aggregators we’ve discussed in preceding sections.

The hypothetical Everything Aggregator takes us beyond the “exchange” use case; it is a general purpose matching protocol. Given user requirements, it will search for relevant systems across an indeterminate set of possibilities. It will then construct potential state transitions made possible by various combinations of protocols (such as lending and trading protocols). It then identifies state transitions enabled by such combinations and selects the best of these according to the goals expressed by the end user’s request.

This general purpose aggregator is really a decentralized orchestration engine. It not only searches for “pieces of the puzzle”; it identifies and classifies the ways in which those pieces fit together. It relies heavily on the ability to decompose a user request into subproblems as well as compose different decentralized services into a final solution.

It would be extremely challenging to implement something like this on Ethereum. One would hope that this system would be fair in its consideration of potential solutions. One would also hope that it is efficient in its ability to home in on the relevant systems that are likely to be useful in facilitating the user’s end goal. This generic protocol would also need to effectively identify combinations that span a multitude of decentralized applications. It should guarantee safety invariants (such as “no user should lose funds unintentionally”) without being overly restrictive by way of whitelisting or other forms of permissioned integrations.
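The Everything Aggregator’s core loop (simulate combinations of services, discard any that violate a safety invariant, select the best by the user’s goal) can be sketched as a brute-force search. Every service, rate, and function name here is hypothetical, and the invariant (“no asset other than the one the user chose to spend may decrease”) is just one illustrative reading of “no user should lose funds unintentionally”. Note that the sketch inspects the entire before-and-after state of each candidate, exactly the whole-transaction view that individual contracts lack today.

```python
from itertools import product
from typing import Callable, Optional

Balances = dict[str, float]
Step = Callable[[Balances], Optional[Balances]]


def swap_all(sell: str, buy: str, rate: float) -> Step:
    """Hypothetical service: convert the user's entire `sell` balance into `buy` at a fixed rate."""
    def step(bal: Balances) -> Optional[Balances]:
        amount = bal.get(sell, 0.0)
        if amount <= 0:
            return None  # infeasible at this point in the sequence
        new = dict(bal)
        new[sell] = 0.0
        new[buy] = new.get(buy, 0.0) + amount * rate
        return new
    return step


def drain(asset: str, inner: Step) -> Step:
    """Hypothetical malicious service: performs `inner`, then also takes all of `asset`."""
    def step(bal: Balances) -> Optional[Balances]:
        new = inner(bal)
        if new is not None:
            new[asset] = 0.0
        return new
    return step


SERVICES: dict[str, Step] = {
    "DEX1: DAI->ETH": swap_all("DAI", "ETH", 1 / 2000),
    "DEX2: ETH->TIA": swap_all("ETH", "TIA", 250.0),
    "OTC: DAI->TIA": swap_all("DAI", "TIA", 0.1),
    "Shady: DAI->TIA": drain("USDC", swap_all("DAI", "TIA", 0.2)),
}


def safe(before: Balances, after: Balances, spendable: str) -> bool:
    """Whole-transaction invariant: no asset other than `spendable` may decrease."""
    assets = set(before) | set(after)
    return all(after.get(a, 0.0) >= before.get(a, 0.0) for a in assets if a != spendable)


def everything_aggregate(start: Balances, spendable: str, goal: str, max_depth: int = 3):
    """Enumerate service sequences, simulate each, drop unsafe ones, keep the best by `goal`."""
    best_plan, best_value = None, float("-inf")
    for depth in range(1, max_depth + 1):
        for plan in product(SERVICES, repeat=depth):
            bal: Optional[Balances] = start
            for name in plan:
                bal = SERVICES[name](bal)
                if bal is None:
                    break
            if bal is None or not safe(start, bal, spendable):
                continue
            if bal.get(goal, 0.0) > best_value:
                best_plan, best_value = plan, bal.get(goal, 0.0)
    return best_plan, best_value


plan, tia = everything_aggregate({"DAI": 1000.0, "USDC": 500.0}, spendable="DAI", goal="TIA")
print(plan, tia)  # ('DEX1: DAI->ETH', 'DEX2: ETH->TIA') 125.0
```

The “Shady” service offers the highest headline rate but is rejected because it drains USDC, and the winning plan composes two venues. Brute force only works here because there are four services and three steps; with real state, the enumeration blows up as described under combinatorial explosion.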

The applications over which the Everything Aggregator… aggregates will also have important requirements of their own. These applications need safety guarantees as well, lest they become part of a larger, multi-step transaction that involves malicious third party code or fails due to externalities that were not accounted for.

This is too much to hope for. But it does highlight an important point: the optimal protocol for end users is one where the search and selection process for a particular intent is perfectly tailored to the user’s specific request while also offering guarantees about the final result. Achieving such a high degree of customization would require a fully open system that is semantically transparent enough to enable intelligent inter-application routing, combined with safety guarantees for the applications themselves.

The ideal system is one in which nothing is hardcoded or predefined, from the search process, to the selection process, to the execution or settlement process; one which busts open the solution space and the problem space for maximal expressiveness. Such a system would structure itself adaptively and just-in-time. The user’s input (a goal) would constrain the problem space, which would in turn automatically direct the search through the solution space. This dimensional reduction of the search space, driven by the user’s goal, enables a search and selection process that ignores exactly what is irrelevant to achieving the user’s goal and nothing more.

What this boils down to is: the end goal of intents is a system that is maximally expressive (of goals & capabilities), permissionless (to ensure anything that should be included in the search is included) and collaborative (so that many parties can each provide part of the final solution when the user’s goal is multi-domain or when no single system can meet all the user’s criteria).

A system that meets these goals would look significantly different from what we have seen from blockchains thus far. In fact, its capabilities would also extend far beyond the promises of current intent-based projects.

We are building such a system. We believe the future of blockchain technology is not about making blockchains scale: it’s about making blockchains smart.

Khalani will implicitly realize the full potential of intent-centric protocols. But for the world’s first blockchain of intelligence, intents are just the tip of the iceberg.

To follow our journey, please join our Telegram and Discord. Connect with us on X at Khalani Network and follow our founders Tannr and Kevin.
