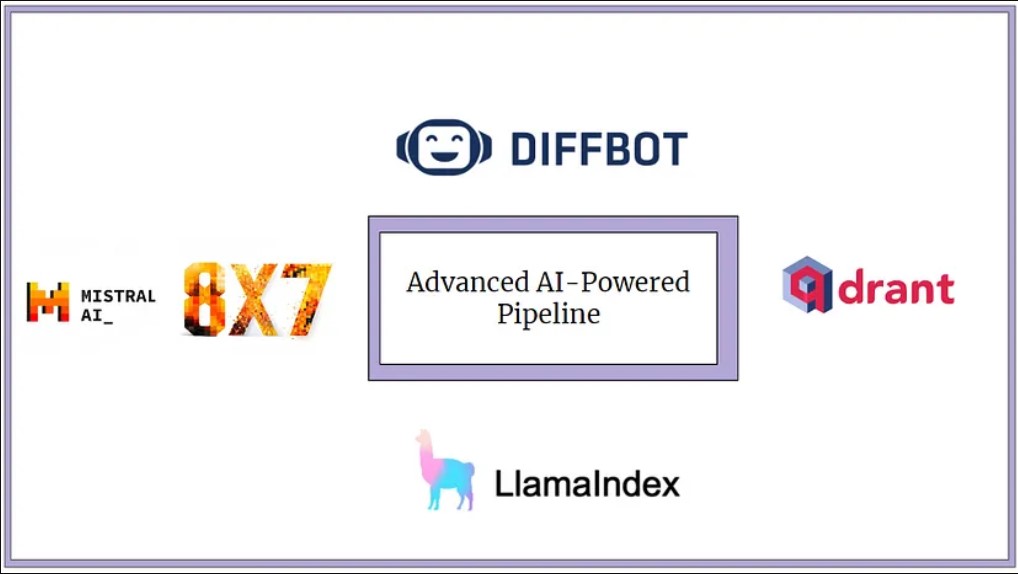

How to Build an Advanced AI-Powered Enterprise Content Pipeline Using Mixtral 8x7B and Qdrant

Akriti Upadhyay


Introduction

As the digital landscape rapidly evolves, enterprises face the challenge of managing and exploiting the exponential growth of their data. As content grows in volume and complexity, traditional content management approaches lack the agility and intelligence needed to scale and to extract valuable insights.

Combining vector databases with mixture-of-experts (MoE) language models such as Mixtral 8x7B gives enterprises a way to unlock the full potential of their content pipelines. In this blog post, we will explore the essential components and strategies for building an advanced AI-powered enterprise content pipeline using Mixtral 8x7B and Qdrant, a vector database that uses the HNSW (Hierarchical Navigable Small World) algorithm for approximate nearest neighbor search.

To build this AI-powered pipeline, we’ll implement Retrieval-Augmented Generation (RAG) in the following steps:

Loading the Dataset using LlamaIndex

Embedding Generation using Hugging Face

Building the Model using Mixtral 8x7B

Storing the Embedding in the Vector Store

Building a Retrieval pipeline

Querying the Retriever Query Engine

Enterprise Content Generation with Mixtral 8x7B

To build a RAG pipeline with Mixtral 8x7B, we’ll install the following dependencies:

%pip install -q llama-index==0.9.3 qdrant-client transformers[torch]

Loading the Dataset Using LlamaIndex

For the dataset, we used the Diffbot Knowledge Graph API. Diffbot is a web scraping and data extraction tool that uses artificial intelligence to automatically retrieve and structure data from web pages. Traditional web scraping relies on hand-written rules to extract specific data elements. Diffbot instead uses machine learning models to interpret the content and layout of a page. This allows it to identify and extract many types of data, including articles, product details, and contact information, from a wide range of websites.

One of Diffbot's standout features is Knowledge Graph Search, which lets you query the extracted data as a knowledge graph: a structured representation of interconnected entities that supports efficient searching, querying, and analysis. Rather than storing isolated data points, the Knowledge Graph links entities such as organizations, people, and articles to one another, forming a network of related information.

To get the API URL, create a Diffbot account, open Knowledge Graph, and go to Search. We selected Organization in the Visual search mode and filtered by Industries -> Pharmaceutical Companies.

From the results, we chose GSK, a well-known pharmaceutical company, and clicked Articles.

Diffbot then offers two options: export the articles as CSV or retrieve them through an API call.

We chose the API call and used its URL to access the data in Python.

import requests
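The following minimal sketch shows this step. It assumes the copied API URL returns JSON and that the response should be written into the "json" directory read in the next step. The function names and the file name `gsk_articles.json` are hypothetical and do not come from the original code.

```python
import json
from pathlib import Path


def fetch_articles(api_url: str, timeout: float = 60.0) -> dict:
    """Download the JSON returned by the API URL copied from the Diffbot dashboard."""
    import requests  # imported here so save_json() also works without requests installed

    response = requests.get(api_url, timeout=timeout)
    response.raise_for_status()  # stop on HTTP errors such as an invalid token
    return response.json()


def save_json(payload: dict, out_dir: str = "json", filename: str = "gsk_articles.json") -> Path:
    """Write the payload into the directory that SimpleDirectoryReader will read."""
    directory = Path(out_dir)
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / filename
    path.write_text(json.dumps(payload, indent=2), encoding="utf-8")
    return path
```

To use it, call `save_json(fetch_articles(DIFFBOT_API_URL))`, where `DIFFBOT_API_URL` is a placeholder for the URL copied from the dashboard. Diffbot API URLs usually carry your API token as a query parameter, so keep that URL out of version control.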

The data is now saved in a JSON file. Using LlamaIndex's SimpleDirectoryReader, we will load the data from the “json” directory.

from llama_index import SimpleDirectoryReader

Next, we split the documents into chunks using SentenceSplitter.

from llama_index.node_parser.text import SentenceSplitter
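SentenceSplitter packs text into chunks of a target size, tries to break at sentence boundaries, and overlaps consecutive chunks slightly. The plain-Python sketch below illustrates that idea. It only approximates the behavior and is not LlamaIndex's implementation: it counts words rather than tokens and uses a naive regex for sentence boundaries. The names `chunk_text`, `max_words`, and `overlap_sentences` are hypothetical.

```python
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    # Naive rule: a sentence ends at ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def word_count(sentences: List[str]) -> int:
    return sum(len(s.split()) for s in sentences)


def chunk_text(text: str, max_words: int = 200, overlap_sentences: int = 1) -> List[str]:
    """Greedily pack whole sentences into chunks of at most max_words words.

    The last `overlap_sentences` sentences of a chunk are repeated at the start
    of the next one. A single sentence longer than max_words becomes its own chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    for sentence in split_sentences(text):
        if current and word_count(current + [sentence]) > max_words:
            chunks.append(" ".join(current))
            tail = current[-overlap_sentences:] if overlap_sentences > 0 else []
            current = tail + [sentence]
            # Drop the overlap if keeping it would push the new chunk over the limit.
            if word_count(current) > max_words:
                current = [sentence]
        else:
            current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks
```

The overlap means a fact stated near a chunk boundary still appears with its surrounding context in at least one chunk. The cost is that some text is stored and embedded twice.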

After that, we create a TextNode for each chunk and assign the metadata of its source document to the node's metadata attribute. This keeps each chunk linked to the document it came from.

# Import the TextNode class from the llama_index schema module
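The pattern described above can be sketched without LlamaIndex: split each document, then copy the source document's metadata onto every resulting node. In this sketch, `Node` is a hypothetical stand-in for `TextNode`, and documents are plain dicts with `text` and `metadata` keys. The splitter is passed in as a function, such as a sentence splitter.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Node:
    """Hypothetical stand-in for llama_index's TextNode: a chunk plus metadata."""
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def build_nodes(documents: List[dict], split: Callable[[str], List[str]]) -> List[Node]:
    # Step 1: split every document and remember which document each chunk came from.
    text_chunks: List[str] = []
    doc_idxs: List[int] = []
    for doc_idx, doc in enumerate(documents):
        chunks = split(doc["text"])
        text_chunks.extend(chunks)
        doc_idxs.extend([doc_idx] * len(chunks))

    # Step 2: wrap each chunk in a node that carries its source document's metadata.
    nodes: List[Node] = []
    for chunk, doc_idx in zip(text_chunks, doc_idxs):
        src_metadata = documents[doc_idx]["metadata"]
        # Copy so that editing one node's metadata does not leak into its siblings.
        nodes.append(Node(text=chunk, metadata=dict(src_metadata)))
    return nodes
```

The sketch copies the metadata dict for each node. Assigning the same dict object to every node would be shorter, but an edit to one node's metadata would then appear in all nodes from that document.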

Embedding Generation Using Hugging Face

LlamaIndex supports many embedding integrations. Here we use HuggingFaceEmbedding, which runs a Hugging Face embedding model locally.

# Import the HuggingFaceEmbedding class from the llama_index embeddings module
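A Hugging Face embedding model produces one vector per token. To get a single vector per chunk, the token vectors are pooled, commonly by taking the [CLS] vector or the attention-mask-weighted mean. The result is often L2-normalized so that a dot product equals cosine similarity. The right pooling choice depends on the embedding model. The sketch below shows mean pooling and normalization in plain Python, with toy numbers standing in for real model outputs.

```python
import math
from typing import List, Sequence


def mean_pool(token_vectors: Sequence[Sequence[float]], attention_mask: Sequence[int]) -> List[float]:
    """Average the token vectors whose mask value is 1, ignoring padding tokens."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vec):
                total[i] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale the vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)
```

For example, `mean_pool([[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]], [1, 1, 0])` ignores the padded third token and returns `[2.0, 3.0]`.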

Building the Model Using Mixtral 8x7B

Mixtral 8x7B is a language model developed by Mistral AI. It is a sparse mixture-of-experts (SMoE) model with open weights, released under the Apache 2.0 license. It has 47B parameters in total, but only 13B of them are active for any given token. This keeps inference cost well below that of a dense model of the same total size.

Mixtral 8x7B is a decoder-only model. In its MoE architecture, each layer contains 8 feedforward blocks, referred to as experts. For every token at every layer, a router network selects two experts. The layer's output is the weighted sum of those two experts' outputs, with weights given by the router. The selected experts can differ from token to token and from layer to layer.
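The Mixtral paper expresses this routing as y = Σ_i G(x)_i · E_i(x), with G(x) = Softmax(Top2(x · W_g)). The plain-Python sketch below implements that formula for a single token vector. The router weights and toy experts are invented for illustration; in the real model each expert is a SwiGLU feedforward block.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[Sequence[float]], List[float]]


def top2_moe(x: Sequence[float],
             router: Sequence[Sequence[float]],
             experts: Sequence[Expert],
             k: int = 2) -> List[float]:
    """Sparse MoE layer for one token: route to the top-k experts and mix their outputs.

    router[e] is the router's weight vector for expert e, so the router logit
    for expert e is dot(x, router[e]). Each expert maps x to a vector of len(x).
    """
    logits = [sum(xi * wi for xi, wi in zip(x, w)) for w in router]
    # TopK: indices of the k largest router logits.
    chosen = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    # Softmax over the chosen logits only (subtracting the max for numerical stability).
    peak = max(logits[e] for e in chosen)
    exps = {e: math.exp(logits[e] - peak) for e in chosen}
    total = sum(exps.values())
    out = [0.0] * len(x)
    # Only the chosen experts run; the others cost nothing for this token.
    for e in chosen:
        gate = exps[e] / total
        for i, value in enumerate(experts[e](x)):
            out[i] += gate * value
    return out
```

Because unselected experts are never called, compute per token scales with k rather than with the number of experts. This is why Mixtral runs with roughly 13B active parameters despite its larger total size.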

Mixtral 8x7B performs strongly across diverse tasks. It was pre-trained on multilingual data with a context size of 32k tokens. According to the Mixtral paper, it outperforms or matches Llama 2 70B and GPT-3.5 on all evaluated benchmarks. It clearly outperforms Llama 2 70B on mathematics, code generation, and multilingual benchmarks.

Mixtral 8x7B is also available as Mixtral 8x7B Instruct. This version is trained with supervised fine-tuning on an instruction-following dataset, followed by Direct Preference Optimization (DPO). For more detail on Mixtral 8x7B, see the Mixtral paper listed in the References.
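According to its Hugging Face model card, the Instruct model expects conversations wrapped in [INST] ... [/INST] markers. The sketch below shows that layout. The exact spacing is best taken from the tokenizer's own chat template (`tokenizer.apply_chat_template` in transformers). If the tokenizer already prepends the BOS token, drop the leading `<s>` to avoid a duplicate. The function name is hypothetical.

```python
from typing import List, Optional, Tuple


def build_mixtral_prompt(turns: List[Tuple[str, Optional[str]]]) -> str:
    """Render (user, assistant) turns in the [INST] ... [/INST] layout.

    The last turn's assistant reply is normally None: that is the part the
    model is asked to generate.
    """
    prompt = "<s>"
    for user_msg, assistant_msg in turns:
        prompt += f"[INST] {user_msg} [/INST]"
        if assistant_msg is not None:
            # Completed assistant turns are closed with the end-of-sequence marker.
            prompt += f" {assistant_msg}</s>"
    return prompt
```

For a single question, `build_mixtral_prompt([("Write a paragraph about GSK's RSV vaccine.", None)])` produces `<s>[INST] Write a paragraph about GSK's RSV vaccine. [/INST]`.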

Using Hugging Face and LlamaIndex, we will load the model.

import torch
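Sparse routing reduces compute per token, but all eight experts must still be held in memory when the model is loaded. The estimate below covers the weights alone and ignores activations and the KV cache. It assumes about 46.7B total parameters, the figure Mistral AI gives (the paper rounds it to 47B).

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: ~46.7B total parameters. All experts must be resident,
# even though only 2 of 8 run for any given token.
MIXTRAL_TOTAL_PARAMS = 46.7e9

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(MIXTRAL_TOTAL_PARAMS, bits):.0f} GB")
```

That works out to roughly 93 GB at 16-bit precision, 47 GB at 8-bit, and 23 GB at 4-bit. This is why Mixtral is often loaded in a quantized form when only a single GPU is available.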

After that, we will create a service context with the loaded LLM and the embedding model.

from llama_index import ServiceContext

Storing the Embedding in the Vector Store

Here, we have used the Qdrant Vector Database to store the embeddings. Qdrant is a high-performance open-source vector search engine designed to efficiently index and search through large collections of high-dimensional vectors. It's particularly well-suited for use cases involving similarity search, where the goal is to find items that are most similar to a query vector within a large dataset.
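HNSW makes search fast by walking a layered proximity graph instead of comparing the query with every stored vector. The exact brute-force search it approximates is shown in the sketch below: score every vector by cosine similarity and keep the top k. The names are hypothetical. A brute-force check like this is also a convenient way to measure the recall of an approximate index on a small sample.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def exact_top_k(query: Sequence[float],
                vectors: Dict[str, Sequence[float]],
                k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best (O(N) per query)."""
    scored = ((doc_id, cosine(query, vec)) for doc_id, vec in vectors.items())
    return heapq.nlargest(k, scored, key=lambda item: item[1])
```

The linear scan is exact but grows with the collection size. HNSW trades a small loss in recall for search time that grows far more slowly.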

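We first initialize the Qdrant client and then create a collection with hybrid search enabled. Each node is then indexed with both a dense (semantic) vector and a sparse (keyword-based) vector.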

import qdrant_client

We add the nodes to the vector store and create a storage context. We then build an index from the documents, the service context, and the storage context.

# Add nodes to the vector store

We then embed a sample query with the same embedding model. The resulting query embedding is used in the next step.

query_str = "Can you update me about shingles vaccine?"

Using hybrid query mode, we build a LlamaIndex VectorStoreQuery from the query embedding, run it against the vector store, and save the query result.

from llama_index.vector_stores import VectorStoreQuery
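In hybrid mode, each node gets a dense (semantic) score and a sparse (keyword) score, and the two rankings are fused into one. The sketch below shows one common scheme: min-max normalize each set of scores, then blend them with a weight `alpha`. It is similar in spirit to the relative score fusion LlamaIndex offers for hybrid queries. It is an illustrative assumption, though, not the exact implementation used by LlamaIndex or Qdrant. The function names and sample scores are hypothetical.

```python
from typing import Dict, List, Tuple


def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to [0, 1] so that dense and sparse scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {key: 1.0 for key in scores}
    return {key: (value - lo) / (hi - lo) for key, value in scores.items()}


def fuse(dense: Dict[str, float], sparse: Dict[str, float],
         alpha: float = 0.5, top_k: int = 3) -> List[Tuple[str, float]]:
    """Blend normalized scores: alpha=1.0 is dense only, alpha=0.0 is sparse only."""
    dense_n, sparse_n = min_max(dense), min_max(sparse)
    node_ids = set(dense_n) | set(sparse_n)
    # A node missing from one result list contributes 0 for that side.
    combined = {i: alpha * dense_n.get(i, 0.0) + (1 - alpha) * sparse_n.get(i, 0.0) for i in node_ids}
    return sorted(combined.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
```

Normalizing first matters because raw sparse (keyword) scores and cosine similarities live on very different scales. Without it, one side would dominate regardless of `alpha`.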

Then, we convert the query result into a list of NodeWithScore objects, pairing each retrieved node with its similarity score.

from llama_index.schema import NodeWithScore
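The conversion pairs each returned node with its similarity score, when the store supplies one. The sketch below uses hypothetical stand-in classes: `QueryResult` for the vector store's query result and `ScoredNode` for `NodeWithScore`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QueryResult:
    """Hypothetical stand-in for a vector store query result."""
    nodes: List[str]
    similarities: Optional[List[float]] = None


@dataclass
class ScoredNode:
    """Hypothetical stand-in for llama_index's NodeWithScore."""
    node: str
    score: Optional[float] = None


def to_scored_nodes(result: QueryResult) -> List[ScoredNode]:
    scored: List[ScoredNode] = []
    for i, node in enumerate(result.nodes):
        # Some stores return no similarities; keep the node and leave the score empty.
        score = result.similarities[i] if result.similarities is not None else None
        scored.append(ScoredNode(node=node, score=score))
    return scored
```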

Building a Retrieval Pipeline

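To build the retrieval pipeline, we wrap the steps above in a custom retriever class. These steps are embedding the query, querying the vector store, and converting the result into scored nodes.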

from llama_index import QueryBundle
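In LlamaIndex, a custom retriever typically subclasses `BaseRetriever` and implements `_retrieve(query_bundle)`. The framework-free sketch below keeps the same flow: embed the query, search the store, and return scored results. It takes the embedding model and the search function as plain callables. The class and parameter names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


class SimpleVectorRetriever:
    """Hypothetical retriever: embed the query, search the store, return (text, score) pairs."""

    def __init__(self,
                 embed_query: Callable[[str], Vector],
                 search: Callable[[Vector, int], List[Tuple[str, float]]],
                 similarity_top_k: int = 2) -> None:
        self._embed_query = embed_query  # e.g. wraps the Hugging Face embedding model
        self._search = search            # e.g. wraps a vector store query
        self._top_k = similarity_top_k

    def retrieve(self, query_str: str) -> List[Tuple[str, float]]:
        query_embedding = self._embed_query(query_str)
        return self._search(query_embedding, self._top_k)
```

Injecting the two callables keeps the retriever independent of any particular embedding model or vector database. This also makes it easy to test with stubs.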

Finally, we pass the retriever to a RetrieverQueryEngine, which hands the retrieved nodes to Mixtral 8x7B to generate the final answer.

from llama_index.query_engine import RetrieverQueryEngine
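Conceptually, the query engine retrieves context, inserts it into a prompt, and asks the LLM to answer from that context. A simplified sketch of that flow follows. The prompt text is loosely modeled on LlamaIndex's default question-answering template. The real RetrieverQueryEngine's response synthesizer can also compact or refine context across several LLM calls. The names `answer`, `retrieve`, and `llm` are hypothetical; in practice, `llm` would wrap the Mixtral model loaded earlier.

```python
from typing import Callable, List, Tuple


def answer(query_str: str,
           retrieve: Callable[[str], List[Tuple[str, float]]],
           llm: Callable[[str], str]) -> str:
    """Retrieve context chunks, stuff them into one prompt, and ask the LLM."""
    hits = retrieve(query_str)
    context = "\n\n".join(text for text, _score in hits)
    prompt = (
        "Context information is below.\n"
        "---------------------\n"
        f"{context}\n"
        "---------------------\n"
        "Given the context information and not prior knowledge, answer the query.\n"
        f"Query: {query_str}\n"
        "Answer: "
    )
    return llm(prompt)
```

Putting all retrieved chunks into a single prompt is the simplest strategy. It works as long as the chunks fit in the model's context window, which for Mixtral is 32k tokens.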

Querying the Retriever Query Engine

With the query engine ready, it is time to pass it some queries and look at the results.

Question 1:

query_str = "Write a paragraph about GSK announcement about its shares."

The response will be:

GSK plc announced the completion of its share consolidation on July 18, 2022. This followed the demerger of the Consumer Healthcare business from the GSK Group to form Haleon. The consolidation of GSK shares became effective at 8.00 a.m. on July 19, 2022. As part of the consolidation, a ratio of 4 new ordinary shares was applied for every 5 existing ordinary shares. Fractional entitlements that arose from the consolidation were aggregated and sold in the open market, with the net proceeds paid to each relevant shareholder according to their entitlement. Following the issuance and consolidation, the total number of voting rights in GSK as of July 19, 2022, was 4,067,352,076.

Question 2:

query_str = "Write a paragraph about GSK's RSV vaccine."

The response will be:

GSK's Arexvy is the world's first respiratory syncytial virus (RSV) vaccine for older adults. The US Food and Drug Administration (FDA) approved Arexvy for the prevention of lower respiratory tract disease (LRTD) caused by RSV in individuals 60 years of age and older. This groundbreaking approval enables adults aged 60 years and older to be protected from RSV disease for the first time. The approval is based on data from the positive pivotal AReSVi-006 phase III trial that showed exceptional efficacy in older adults, including those with underlying medical conditions, and in those with severe RSV disease. The US launch was planned before the 2023/24 RSV season. RSV is a common, contagious virus that can lead to potentially serious respiratory illness. It causes approximately 177,000 hospitalizations and an estimated 14,000 deaths in the US in adults aged 65 years and older each year.

Question 3:

query_str = "Write a paragraph about GSK's Endrometrial Cancer Drug Development."

The response will be:

GSK has made significant improvement in the development of drugs for endometrial cancer. Their drug, Jemperli (dostarlimab), has been approved by the European Commission and the US Food and Drug Administration (FDA) for the treatment of adult patients with mismatch repair-deficient (dMMR)/microsatellite instability-high (MSI-H) primary advanced or recurrent endometrial cancer. Jemperli, in combination with carboplatin and paclitaxel (chemotherapy), is the first and only frontline immuno-oncology treatment in the European Union for this type of endometrial cancer. The FDA has also granted accelerated approval for Jemperli as a monotherapy for treating adult patients with dMMR/MSI-H recurrent or advanced endometrial cancer that has progressed on or following prior treatment with a platinum-containing regimen. This approval is based on the results from the dMMR/MSI-H population of Part 1 of the RUBY/ENGOT-EN6/GOG3031/NSGO phase III trial. GSK continues to evaluate Jemperli in the hopes of further expansion for the drug as data mature.

Question 4:

query_str = "Write a paragraph about GSK's Hepatocellular Carcinoma Drug Development."

The response will be:

GSK is making significant progress in the development of drugs for hepatocellular carcinoma (HCC). One of their drugs, Cobolimab, is currently in Phase II clinical trials for HCC. Cobolimab is a humanized monoclonal IgG4 antibody that inhibits T cell immunoglobulin mucin-3 (TIM-3), and is under development for the treatment of solid tumors including melanoma, squamous and non-squamous non-small cell lung carcinoma, HCC, and colorectal cancer. It is administered through the intravenous route. The drug's phase transition success rate (PTSR) and likelihood of approval (LoA) are being closely monitored. GSK's efforts in this area demonstrate their commitment to advancing treatments for HCC.

Question 5:

query_str = "Write a paragraph about GSK's Uncomplicated Cervical And Urethral Gonorrhea Drug Development."

The response will be:

GSK is currently developing a potential first-in-class antibiotic, Gepotidacin, for the treatment of uncomplicated cervical and urethral gonorrhea. This drug is in Phase III of clinical development. Gepotidacin is the first in a new chemical class of antibiotics called triazaacenaphthylene bacterial topoisomerase inhibitors. It is being investigated for use in uncomplicated urinary tract infection and urogenital gonorrhea, two infections not addressed by new oral antibiotics in 20 years. The Phase III programme comprises two studies, EAGLE-1 and EAGLE-2, testing Gepotidacin in two common infections caused by bacteria identified as antibiotic-resistant threats. The development of Gepotidacin is the result of a successful public-private partnership between GSK, the US government's Biomedical Advanced Research and Development Authority (BARDA), and Defense Threat Reduction Agency (DTRA).

Final Words

Using the LlamaIndex framework and the Diffbot API, we extracted enterprise content about the pharmaceutical company GSK. We then built a retrieval pipeline with Hugging Face embeddings, the Qdrant vector store, and Mixtral 8x7B. For each of our five questions, the retriever query engine returned a detailed answer drawn from the GSK articles. Together, these tools make building an advanced AI-powered enterprise content pipeline with Mixtral 8x7B far more approachable.

References

https://arxiv.org/pdf/2401.04088.pdf

https://docs.diffbot.com/docs/getting-started-with-diffbot-knowledge-graph

https://docs.llamaindex.ai/en/stable/examples/low_level/oss_ingestion_retrieval.html

This article was originally published here: https://blog.superteams.ai/how-to-build-an-advanced-ai-powered-enterprise-content-pipeline-using-mixtral-8x7b-and-qdrant-b01aa66e3884
