A Quick Look at AWS Batch

Cloud Tuned

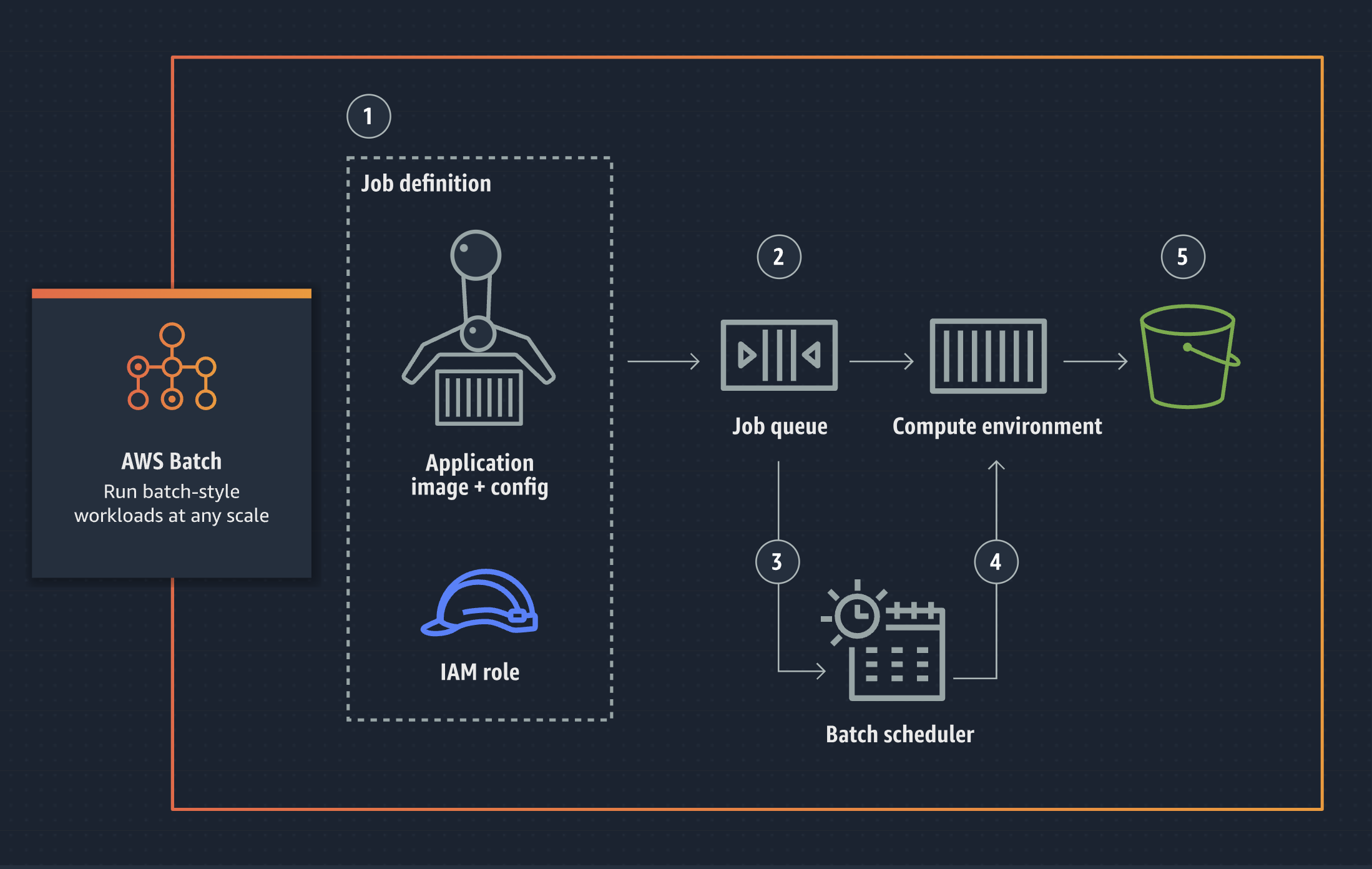

AWS Batch is a container-based, fully managed service that allows you to run batch-style workloads at any scale. The following flow describes how AWS Batch runs each job.

- Create a job definition that specifies how jobs are to be run, supplying a container image, an IAM role, memory and CPU requirements, and other configuration options.

- Submit jobs to a managed AWS Batch job queue, where jobs reside until they are scheduled onto a compute environment for processing.

- AWS Batch evaluates the CPU, memory, and GPU requirements of each job in the queue and scales the compute resources in a compute environment to process the jobs.

- The AWS Batch scheduler places jobs in the appropriate AWS Batch compute environment for processing.

- Each job exits with a status (such as SUCCEEDED or FAILED) and writes its results to storage you define, such as Amazon S3.
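The flow above can be sketched with the boto3 Batch client (`register_job_definition`, `submit_job`, `describe_jobs`). This is a minimal sketch, not a production workflow: it assumes a job queue already exists, the client is passed in (normally `boto3.client("batch")`) so the logic can be tried without AWS credentials, and every name, image, role ARN, and the `process.py` entry point are hypothetical placeholders.

```python
"""Minimal sketch of the AWS Batch job flow using a boto3-style Batch client.

The client is injected (e.g. boto3.client("batch")), so the functions can be
exercised with a stub. All names, images, and ARNs are hypothetical.
"""
import time
from typing import Any

# Statuses after which a job will not change state again.
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def register_definition(batch: Any, name: str, image: str, role_arn: str) -> str:
    """Step 1: describe how the job runs (image, IAM role, vCPU and memory)."""
    resp = batch.register_job_definition(
        jobDefinitionName=name,
        type="container",
        containerProperties={
            "image": image,
            "command": ["python", "process.py"],  # hypothetical entry point
            "jobRoleArn": role_arn,  # role the job assumes to reach S3, DynamoDB, ...
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},  # MiB
            ],
        },
    )
    return resp["jobDefinitionArn"]


def submit_and_wait(batch: Any, queue: str, definition: str,
                    poll_seconds: float = 30.0) -> str:
    """Step 2: submit to a queue; Batch scales compute and schedules the job.

    Then poll describe_jobs until the job reaches a terminal status.
    """
    job_id = batch.submit_job(
        jobName="example-job", jobQueue=queue, jobDefinition=definition
    )["jobId"]
    while True:
        status = batch.describe_jobs(jobs=[job_id])["jobs"][0]["status"]
        if status in TERMINAL_STATES:
            return status
        time.sleep(poll_seconds)


# Usage against real AWS (placeholders must be replaced):
#   import boto3
#   batch = boto3.client("batch")
#   arn = register_definition(batch, "example-def", "<image-uri>", "<job-role-arn>")
#   print(submit_and_wait(batch, "example-queue", arn))
```

Polling keeps the sketch self-contained; in practice, job state changes are also published as Amazon EventBridge events, which avoids a busy client loop.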

Benefits and features

Fully Managed

AWS Batch eliminates the need to operate third-party commercial or open source batch processing solutions. There is no batch software to install and there are no servers to manage. AWS Batch manages the infrastructure for you, avoiding the complexities of provisioning, managing, monitoring, and scaling the compute that runs your batch jobs.

Integrated with AWS

AWS Batch is natively integrated with the AWS platform, allowing you to leverage the scaling, networking, and access management capabilities of AWS. This makes it easy to run jobs that safely and securely retrieve and write data to and from AWS data stores such as Amazon S3 or Amazon DynamoDB.
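As an illustration of the job side of this integration, the sketch below reads an input object from Amazon S3 and writes a result back with boto3's `get_object` and `put_object`. It assumes the code runs inside a Batch container whose job role grants S3 access, in which case boto3's default credential chain should pick up the role's credentials without any keys in code. The bucket, keys, and the upper-casing "transformation" are hypothetical placeholders.

```python
"""Job-side sketch: read an object from S3, transform it, write the result back.

The S3 client is injected (normally boto3.client("s3")). Bucket and key names
are hypothetical, and the transformation is a placeholder.
"""
from typing import Any


def process_object(s3: Any, bucket: str, in_key: str, out_key: str) -> int:
    """Upper-case each line of s3://bucket/in_key into s3://bucket/out_key.

    Returns the number of lines processed.
    """
    # Read the whole object into memory (fine for small inputs; stream large ones).
    body = s3.get_object(Bucket=bucket, Key=in_key)["Body"].read()
    lines = body.decode("utf-8").splitlines()
    # Placeholder transformation standing in for real processing.
    result = "\n".join(line.upper() for line in lines)
    s3.put_object(Bucket=bucket, Key=out_key, Body=result.encode("utf-8"))
    return len(lines)


# Usage inside a Batch container (placeholders must be replaced):
#   import boto3
#   process_object(boto3.client("s3"), "<bucket>", "input/data.txt", "output/data.txt")
```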

Cost optimized resource provisioning

AWS Batch provisions compute resources and optimizes the job distribution based on the volume and resource requirements of the submitted batch jobs. AWS Batch dynamically scales compute resources to the quantity required to run your batch jobs, freeing you from the constraints of fixed-capacity clusters. When you configure a Spot compute environment, AWS Batch can also provision Spot Instances on your behalf, further reducing the cost of running your batch jobs.
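To make the Spot and scaling behavior concrete, here is a sketch of a request body for boto3's `batch.create_compute_environment(**request)`. It is an assumption-laden example rather than a recommended configuration: the subnet, security group, and instance role values are hypothetical, and `minvCpus=0` is chosen so the environment can scale down to nothing when no jobs are queued, while `maxvCpus` caps how far it can scale up.

```python
"""Sketch of a managed Spot compute environment request.

Intended for boto3's batch.create_compute_environment(**request).
Subnet, security group, and instance role values are hypothetical.
"""
from typing import Any, Dict, List


def spot_compute_environment(name: str, subnets: List[str],
                             security_groups: List[str], instance_role: str,
                             max_vcpus: int = 256) -> Dict[str, Any]:
    """Build a managed compute environment that runs on Spot Instances."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch provisions and scales the instances
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "minvCpus": 0,  # scale to zero when the queue is empty
            "maxvCpus": max_vcpus,  # upper bound on scaling
            "instanceTypes": ["optimal"],  # let Batch choose instance types
            "subnets": subnets,
            "securityGroupIds": security_groups,
            "instanceRole": instance_role,  # ECS instance profile for the hosts
        },
    }


# Usage (placeholders must be replaced):
#   import boto3
#   req = spot_compute_environment("spot-ce", ["subnet-..."], ["sg-..."], "ecsInstanceRole")
#   boto3.client("batch").create_compute_environment(**req)
```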

Support for both tightly coupled and massively parallel workloads

Access the breadth and depth of the AWS cloud, with compute resource management handled by AWS Batch. Scale across thousands of compute nodes with AWS Batch managing Spot capacity, or run multi-node parallel jobs across a tightly coupled node group for HPC or deep learning workloads.
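For the massively parallel case, AWS Batch array jobs let one `submit_job` call (with `arrayProperties`) fan out into many child jobs, each of which receives its index in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below shows both halves; the queue and definition names are hypothetical, and the round-robin sharding scheme is just one possible way to split the work. Tightly coupled workloads use multi-node parallel job definitions instead, which this sketch does not cover.

```python
"""Sketch of an AWS Batch array job: submit once, shard work by array index.

Queue/definition names and the sharding scheme are hypothetical.
"""
import os
from typing import Any, List, Mapping, Optional


def submit_array(batch: Any, queue: str, definition: str, size: int) -> str:
    """Submit one parent job that Batch expands into `size` child jobs."""
    if not 2 <= size <= 10_000:
        raise ValueError("array size must be between 2 and 10,000")
    resp = batch.submit_job(
        jobName="shard-job",
        jobQueue=queue,
        jobDefinition=definition,
        arrayProperties={"size": size},
    )
    return resp["jobId"]


def my_shard(items: List[str], size: int,
             env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Inside a child job, return the slice of `items` this child should process."""
    env = os.environ if env is None else env
    # Batch sets this to 0 .. size-1 in each child job.
    index = int(env["AWS_BATCH_JOB_ARRAY_INDEX"])
    # Round-robin sharding: child i takes items i, i+size, i+2*size, ...
    return items[index::size]
```

Round-robin slicing keeps every child's share within one item of the others without needing to know the item count in advance.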

Architecture of AWS Batch

The architecture of AWS Batch consists of the following components:

- Compute Environments: EC2-based or Fargate-based environments where batch computing jobs are executed.

- Job Definitions: Specifications for individual batch computing jobs, including container image, resource requirements, and execution parameters.

- Job Queues: Queues used to organize and prioritize batch computing jobs based on user-defined criteria.

- Scheduler: The AWS Batch scheduler, responsible for orchestrating job execution, allocating resources, and managing job queues.

- Compute Resources: EC2 instances or AWS Fargate containers provisioned by AWS Batch to execute batch computing jobs.

- Integration Points: Integration with other AWS services such as Amazon S3 for input/output data storage, Amazon CloudWatch for monitoring, and AWS Identity and Access Management (IAM) for access control.
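To show how job queues tie into compute environments, here is a sketch of a request body for boto3's `batch.create_job_queue(**request)`. The scheduler tries compute environments in ascending `order`, and when several queues share a compute environment, the queue with the higher `priority` value is evaluated first. The queue and environment names (for example, a Spot environment listed before an On-Demand fallback) are hypothetical.

```python
"""Sketch of a job queue request that maps a queue to ordered compute environments.

Intended for boto3's batch.create_job_queue(**request). Names are hypothetical.
"""
from typing import Any, Dict, List


def job_queue_request(name: str, priority: int,
                      environments: List[str]) -> Dict[str, Any]:
    """Build a queue whose jobs try `environments` in the listed order."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        # Higher value = evaluated first among queues sharing a compute environment.
        "priority": priority,
        # Lower 'order' values are tried first for job placement.
        "computeEnvironmentOrder": [
            {"order": i + 1, "computeEnvironment": ce}
            for i, ce in enumerate(environments)
        ],
    }


# Usage (placeholders must be replaced):
#   import boto3
#   req = job_queue_request("high-priority", 10, ["spot-ce", "ondemand-ce"])
#   boto3.client("batch").create_job_queue(**req)
```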

Use Cases of AWS Batch

AWS Batch is suitable for a wide range of batch processing workloads, including but not limited to:

- Data Processing: Batch processing of large datasets for analytics, data transformation, and ETL (Extract, Transform, Load) operations.

- Scientific Computing: Parallel processing of computational tasks in scientific research, simulations, and modeling.

- Media Processing: Encoding, transcoding, and rendering of multimedia content at scale.

- Genomics and Life Sciences: Analysis of genetic data, sequencing, and bioinformatics workflows.

- Financial Analytics: Calculation of risk models, financial simulations, and portfolio analysis.
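Many of these workloads are multi-stage pipelines. As an example for the ETL case, `submit_job` accepts a `dependsOn` list, so a job is held until the jobs it depends on have succeeded. The sketch below chains extract, transform, and load steps this way; the queue name, job names, and per-step job definitions are hypothetical, and the client is injected as in a boto3 setup.

```python
"""Sketch of an ETL pipeline chained with AWS Batch job dependencies.

Each step is submitted with dependsOn pointing at the previous step's job ID.
Queue, job, and definition names are hypothetical.
"""
from typing import Any, Dict, List


def submit_etl_chain(batch: Any, queue: str, definitions: List[str]) -> List[str]:
    """Submit one job per definition, each depending on the previous job."""
    job_ids: List[str] = []
    for step, definition in enumerate(definitions):
        kwargs: Dict[str, Any] = {
            "jobName": f"etl-step-{step}",
            "jobQueue": queue,
            "jobDefinition": definition,
        }
        if job_ids:
            # Hold this step until the previous step has succeeded.
            kwargs["dependsOn"] = [{"jobId": job_ids[-1]}]
        job_ids.append(batch.submit_job(**kwargs)["jobId"])
    return job_ids


# Usage (placeholders must be replaced):
#   import boto3
#   submit_etl_chain(boto3.client("batch"), "etl-queue", ["extract", "transform", "load"])
```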

Conclusion

AWS Batch simplifies running batch computing workloads at scale by providing managed compute environments, automatic scaling, and integration with other AWS services. Because AWS Batch handles provisioning, scheduling, and scaling, and can draw on Spot capacity to lower costs, users can run batch processing workflows efficiently and reliably while focusing on the jobs themselves rather than the clusters that run them. By using AWS Batch, organizations can shorten time-to-insight, improve resource utilization, and streamline batch processing operations in the cloud.
