Efficient AWS S3 Management with Boto3 Functions in Python

Vedant Thavkar


Introduction

Amazon Simple Storage Service (S3) is a widely used cloud storage service provided by Amazon Web Services (AWS). Boto3, the AWS SDK for Python, lets developers interact with S3 programmatically. In this article, we'll cover the basics of AWS S3, discuss common S3 management tasks, and demonstrate how to manage S3 resources using custom functions implemented with Boto3 in Python.

Understanding AWS S3

Amazon S3 is an object storage service designed to store and retrieve any amount of data from anywhere on the web. It offers high availability, durability, and scalability, making it suitable for a wide range of use cases, including data backup, archival, and content distribution.

Key concepts of Amazon S3 include:

Buckets:

A bucket is a container for objects stored in Amazon S3.

Each bucket must have a globally unique name, adhering to DNS naming conventions.

Buckets are used to organize and manage objects within S3.
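To make the naming constraint concrete, here is a minimal sketch of a local check against a subset of the S3 bucket naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; a letter or digit at each end; no adjacent dots; not shaped like an IP address). The function name `is_plausible_bucket_name` is hypothetical and the check is not exhaustive. It also cannot tell whether a name is already taken, since global uniqueness is only known to S3 itself.

```python
import re

# Subset of the S3 general-purpose bucket naming rules; not an exhaustive check.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_ADDRESS_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if `name` passes a basic local check of S3 naming rules."""
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False  # wrong length, bad characters, or bad first/last character
    if ".." in name:
        return False  # adjacent periods are not allowed
    if _IP_ADDRESS_RE.fullmatch(name):
        return False  # must not be formatted like an IPv4 address
    return True


for candidate in ["pfd-bucket123", "My_Bucket", "ab", "192.168.5.4"]:
    print(candidate, is_plausible_bucket_name(candidate))
```

A check like this only catches obvious mistakes before a request is sent; the create call can still fail if another account already owns the name.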

Objects:

An object is the fundamental entity stored in Amazon S3.

Objects consist of data (the file itself) and metadata (information about the file).

Each object is identified by a unique key within a bucket.

Regions:

Amazon S3 is a regional service, meaning data is stored in a specific geographical region.

Users can choose the region where their buckets and data will reside.

Selecting the appropriate region can impact latency, compliance, and cost.

Access Control:

S3 provides fine-grained access control mechanisms to manage permissions on buckets and objects.

Access control lists (ACLs) and bucket policies can be used to control who can access and manipulate S3 resources.
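As an illustration of what a bucket policy looks like, the sketch below builds a JSON policy document that lets a single principal read objects from a bucket. The helper name `build_read_only_policy`, the `Sid` value, and the account ID `123456789012` are placeholders chosen for this example, not values from a real account.

```python
import json


def build_read_only_policy(bucket_name: str, principal_arn: str) -> str:
    """Return a JSON bucket policy letting one principal read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowObjectRead",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                # Object-level actions apply to keys, hence the trailing /*
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder account ID, for illustration only
policy_json = build_read_only_policy(
    "pfd-bucket123", "arn:aws:iam::123456789012:root"
)
print(policy_json)
```

With a resource object created by `boto3.resource('s3')`, a document like this could then be attached using `s3_obj.BucketPolicy('pfd-bucket123').put(Policy=policy_json)`, assuming the caller is allowed to change the bucket's policy.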

Implementing Boto3 Functions for S3 Management

Using Boto3, developers can create custom functions to perform various S3 operations efficiently. Here's a brief overview of common S3 management tasks and their corresponding Boto3 functions:

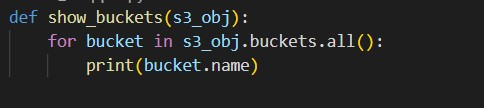

Listing S3 Buckets:

Function: list_s3_buckets(), implemented below as show_buckets(s3_obj)

Purpose: Retrieve a list of all existing S3 buckets associated with the AWS account.

Practical Work: Use this function to display a list of S3 buckets in the AWS account.

    import boto3

    def show_buckets(s3_obj):
        # Print the name of every bucket owned by the account
        for bucket in s3_obj.buckets.all():
            print(bucket.name)

    # Example usage (the functions in this article live in aws_wrapper.py):
    import boto3
    from aws_wrapper import show_buckets

    s3_obj = boto3.resource('s3')
    show_buckets(s3_obj)

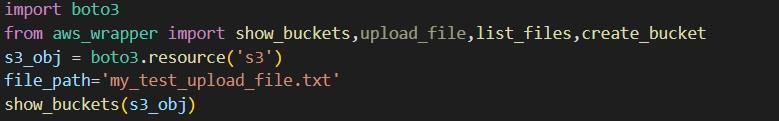

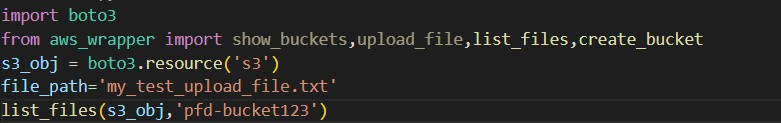
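Printing is convenient for a quick check, but other code usually needs the names as data. The sketch below is one possible variant that returns them instead; `get_bucket_names` and `bucket_exists` are hypothetical helpers, not part of Boto3 or of the article's `aws_wrapper` module. They rely only on the same `buckets.all()` collection that `show_buckets` iterates over.

```python
def get_bucket_names(s3_obj):
    """Return the account's bucket names as a sorted list instead of printing them."""
    return sorted(bucket.name for bucket in s3_obj.buckets.all())


def bucket_exists(s3_obj, bucket_name):
    """Return True if the account owns a bucket with this exact name."""
    return bucket_name in get_bucket_names(s3_obj)
```

Called as `get_bucket_names(boto3.resource('s3'))`, this gives a list that can be filtered, compared, or passed to the other functions in this article. Note that `bucket_exists` only sees buckets owned by the calling account, so it says nothing about names taken by other accounts.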

Listing Files in a Specific S3 Bucket:

Function: list_files_in_bucket(bucket_name), implemented below as list_files(s3_obj, bucket_name)

Purpose: List all objects (files) stored within a specific S3 bucket.

Practical Work: Call this function to display the list of files in a selected S3 bucket.

    def list_files(s3_obj, bucket_name):
        print(f"The files in {bucket_name} are:")
        # objects.all() iterates over every object in the bucket
        for obj in s3_obj.Bucket(bucket_name).objects.all():
            print(obj.key)

    # Example usage:
    import boto3
    from aws_wrapper import list_files

    s3_obj = boto3.resource('s3')
    list_files(s3_obj, 'pfd-bucket123')

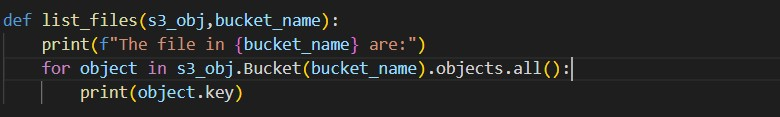
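For buckets that hold many objects, listing everything is often more than is needed. As a sketch, the variant below narrows the listing to keys that start with a given prefix by using the `objects.filter(Prefix=...)` collection method, and returns each key with its size. The name `list_files_with_prefix` and the `reports/` prefix mentioned afterwards are assumptions made for illustration.

```python
def list_files_with_prefix(s3_obj, bucket_name, prefix=""):
    """Return (key, size in bytes) pairs for objects whose key starts with prefix."""
    bucket = s3_obj.Bucket(bucket_name)
    # objects.filter(Prefix=...) passes the prefix to S3, so the filtering
    # happens on the service side rather than in this loop
    return [(obj.key, obj.size) for obj in bucket.objects.filter(Prefix=prefix)]
```

For example, `list_files_with_prefix(s3_obj, 'pfd-bucket123', 'reports/')` would return only the objects stored under a hypothetical `reports/` prefix, while the default empty prefix matches every key in the bucket.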

Creating an S3 Bucket:

Function: create_s3_bucket(bucket_name), implemented below as create_bucket(s3_obj, bucket_name)

Purpose: Create a new S3 bucket with the specified name.

Practical Work: Implement a user interface to allow users to create new S3 buckets on-demand.

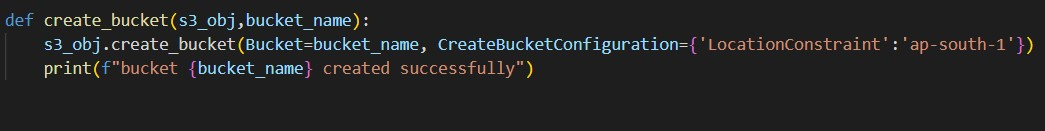

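    def create_bucket(s3_obj, bucket_name):
        # The region is hard-coded to ap-south-1 (Mumbai); s3_obj is
        # expected to be configured for the same region
        s3_obj.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={'LocationConstraint': 'ap-south-1'},
        )
        print(f"Bucket {bucket_name} created successfully")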

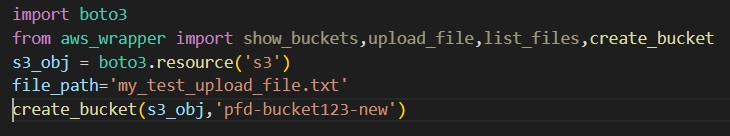

    # Example usage:
    import boto3
    from aws_wrapper import list_files, create_bucket

    s3_obj = boto3.resource('s3')
    list_files(s3_obj, 'pfd-bucket123')
    create_bucket(s3_obj, 'pfd-bucket123-new')
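The `create_bucket` function above always targets `ap-south-1`. One way to generalize it, sketched here under the assumption that the region is passed in by the caller, is to build the request arguments separately. The helper names are hypothetical. The special case reflects the fact that `us-east-1` is the default location and is requested by leaving out `CreateBucketConfiguration` rather than naming it as a `LocationConstraint`.

```python
def bucket_creation_kwargs(bucket_name, region):
    """Build the keyword arguments for create_bucket in the given region."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and must be left unspecified;
    # every other region is passed as a LocationConstraint
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket_in_region(s3_obj, bucket_name, region):
    """Variant of create_bucket that takes the region as a parameter."""
    return s3_obj.create_bucket(**bucket_creation_kwargs(bucket_name, region))


print(bucket_creation_kwargs("pfd-bucket123-new", "ap-south-1"))
```

When calling `create_bucket_in_region`, the resource object should normally be created for the same region, for example `boto3.resource('s3', region_name='ap-south-1')`, so that the request and the location constraint agree.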

Uploading File to S3 Bucket:

Function: upload_file_to_bucket(file_path, bucket_name, object_key), implemented below as upload_file(s3_obj, bucket_name, file_path, key_name)

Purpose: Upload a file from the local machine to a specified S3 bucket.

Practical Work: Integrate this function into your application to allow users to upload files directly to S3.

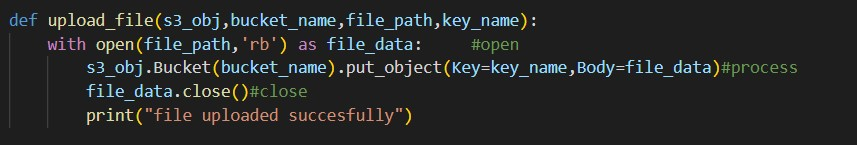

    def upload_file(s3_obj, bucket_name, file_path, key_name):
        # Open in binary mode; the with block closes the file automatically,
        # so no explicit close() call is needed
        with open(file_path, 'rb') as file_data:
            s3_obj.Bucket(bucket_name).put_object(Key=key_name, Body=file_data)
        print("File uploaded successfully")

# Example usage:

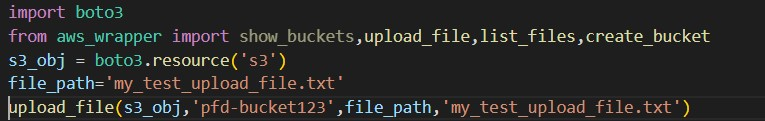

    import boto3
    from aws_wrapper import upload_file

    s3_obj = boto3.resource('s3')
    file_path = 'my_test_upload_file.txt'
    upload_file(s3_obj, 'pfd-bucket123', file_path, 'my_test_upload_file.txt')
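The `upload_file` function above sends the file in a single `put_object` request. As an alternative sketch, the variant below uses the bucket's own `upload_file` method, Boto3's managed transfer, which can switch to multipart uploads for large files. The name `upload_local_file`, the local existence check, and the default of using the file's base name as the object key are assumptions made for this example.

```python
import os


def upload_local_file(s3_obj, bucket_name, file_path, key_name=None):
    """Upload file_path to bucket_name and return the object key that was used."""
    # Fail early with a clear message before any request is sent
    if not os.path.isfile(file_path):
        raise FileNotFoundError(f"No such local file: {file_path}")
    # Default the object key to the file's base name
    key = key_name or os.path.basename(file_path)
    # Bucket.upload_file opens and closes the file itself and handles
    # multipart uploads for large files
    s3_obj.Bucket(bucket_name).upload_file(file_path, key)
    return key
```

Calling `upload_local_file(s3_obj, 'pfd-bucket123', 'my_test_upload_file.txt')` would store the file under the key `my_test_upload_file.txt`. Returning the key makes it easy to log or reuse in a later call such as `list_files`.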

Conclusion

Boto3 functions provide a convenient and efficient way to manage AWS S3 resources programmatically in Python. By encapsulating common S3 operations into reusable functions, developers can streamline S3 management tasks and integrate them seamlessly into their applications and workflows. Understanding the basics of AWS S3 and leveraging the power of Boto3 empowers developers to build robust and scalable cloud storage solutions.


Written by

Vedant Thavkar


"DevOps enthusiast and aspiring engineer. Currently honing skills in streamlining development workflows and automating infrastructure. Learning AWS, Docker, Kubernetes, Python, and Ansible. Eager to contribute and grow within the DevOps community."