How to AI code on your PC locally and privately (even with an AMD laptop!)

Kris F

1. Introduction

This is going to be a quick article, as I thought a lot of people could benefit from it.

AI coding is not the future. It's the present! If you haven't tried it yet, then what are you waiting for? 🙂🤖

There are various paid tools available to get started, but the stack I'll describe below, my favourite so far, has a lot of advantages over the others, mainly price and privacy.

2. Why not ChatGPT?

For 3 reasons:

In my experience, ChatGPT has recently been "dumbed down", as you may have heard elsewhere. It has generally become lazier at writing longer text (a cost-saving measure, I'd guess), which matters a lot for long pieces of code.

ChatGPT has no context of your code.

ChatGPT isn't private, and OpenAI may use your input to train its models.

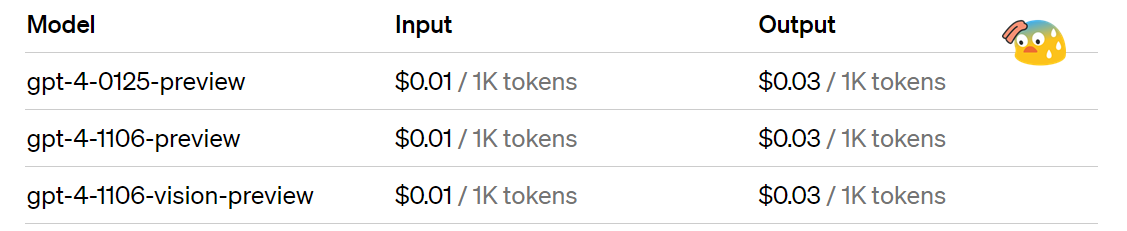

Of course, you can use the ChatGPT API instead, which would resolve issues #1 and #3, but that's still a pretty dang expensive tool for coding.

3. So what's the alternative?

Well, your own PC!

If you have a half-decent CPU/GPU, it's worth giving it a go to see how much "speed" you'll get.

You'll VERY LIKELY NOT be able to run bigger models (like a 70-billion-parameter one), as those require a lot of GPU VRAM, which your laptop won't have.
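To get a feel for why, here's a rough back-of-the-envelope sketch (my own approximation, not something jan.ai reports): the weights alone take about parameters × bits per weight ÷ 8 bytes. It ignores the KV cache, activations and runtime overhead, so real usage is higher. The 16-bit and 4-bit settings are illustrative assumptions; quantized formats like the Q2_K model used later have their own effective bits per weight.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Rough estimate only: ignores the KV cache, activations and runtime overhead.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # 16-bit ~ unquantized fp16 weights; 4-bit ~ a typical quantized download
    for params in (6.7, 33, 70):
        for bits in (16, 4):
            size = weight_memory_gb(params, bits)
            print(f"{params:>5}B @ {bits:>2}-bit: ~{size:.1f} GB")
```

For a 70B model that works out to roughly 140 GB at 16-bit and 35 GB at 4-bit, far more VRAM than a typical laptop GPU has.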

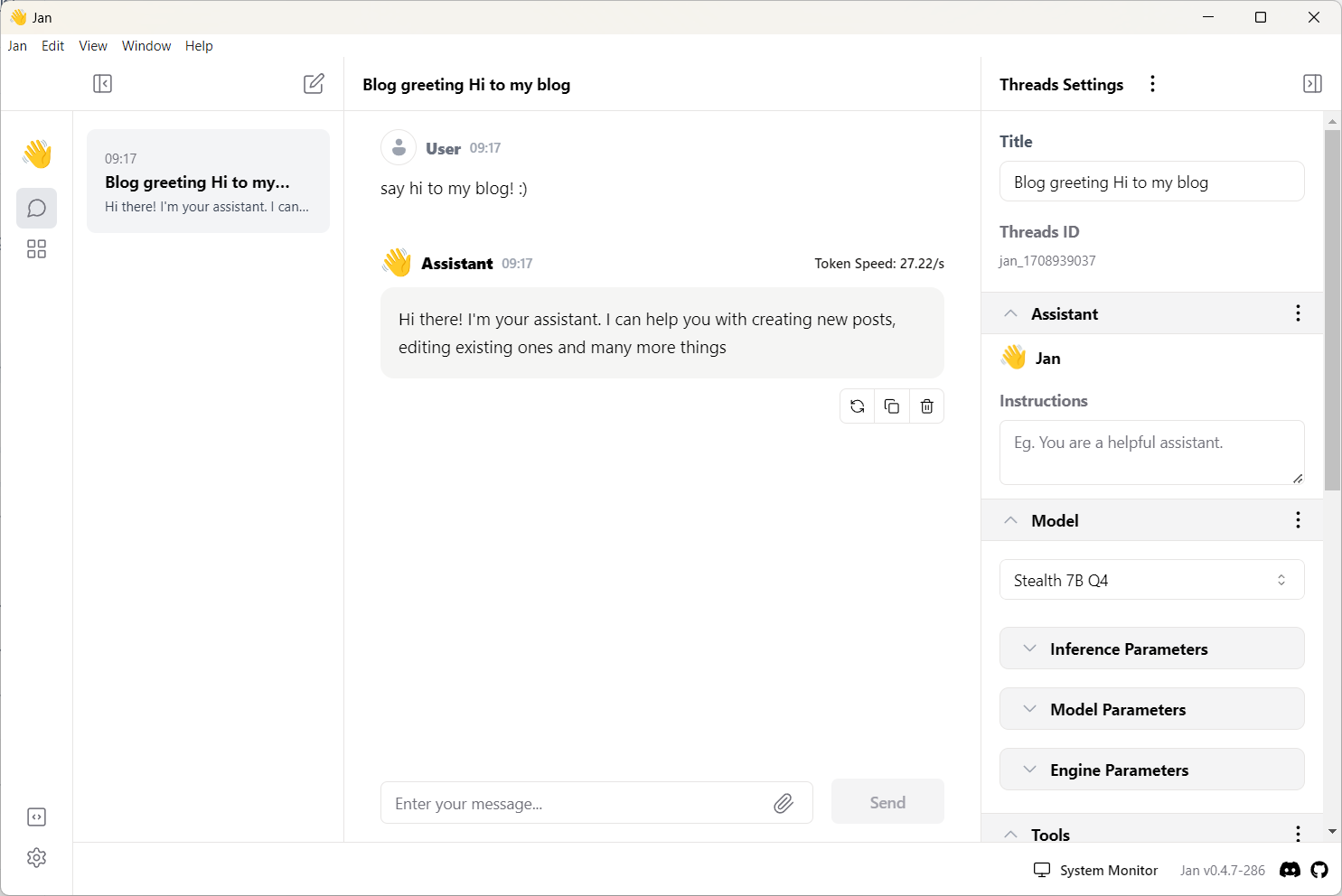

Anyway, to get started, go ahead and download Jan from https://jan.ai/

This is a tool that allows you to run all those juicy LLMs locally!

The dudes maintaining the application are amazing! They have their own Discord channel where all development work is OPEN TO THE PUBLIC (along with the source code). You can ask them questions, help them test or contribute to the project. They're very responsive.

Additionally, as far as I know, jan.ai is the ONLY solution that works on laptops with AMD GPUs (for desktop PCs there are other options).

4. What if I have a crappy PC?

Don't worry, you still have plenty of options.

There are a LOT of serverless infrastructure providers that give you access to an API you can use.

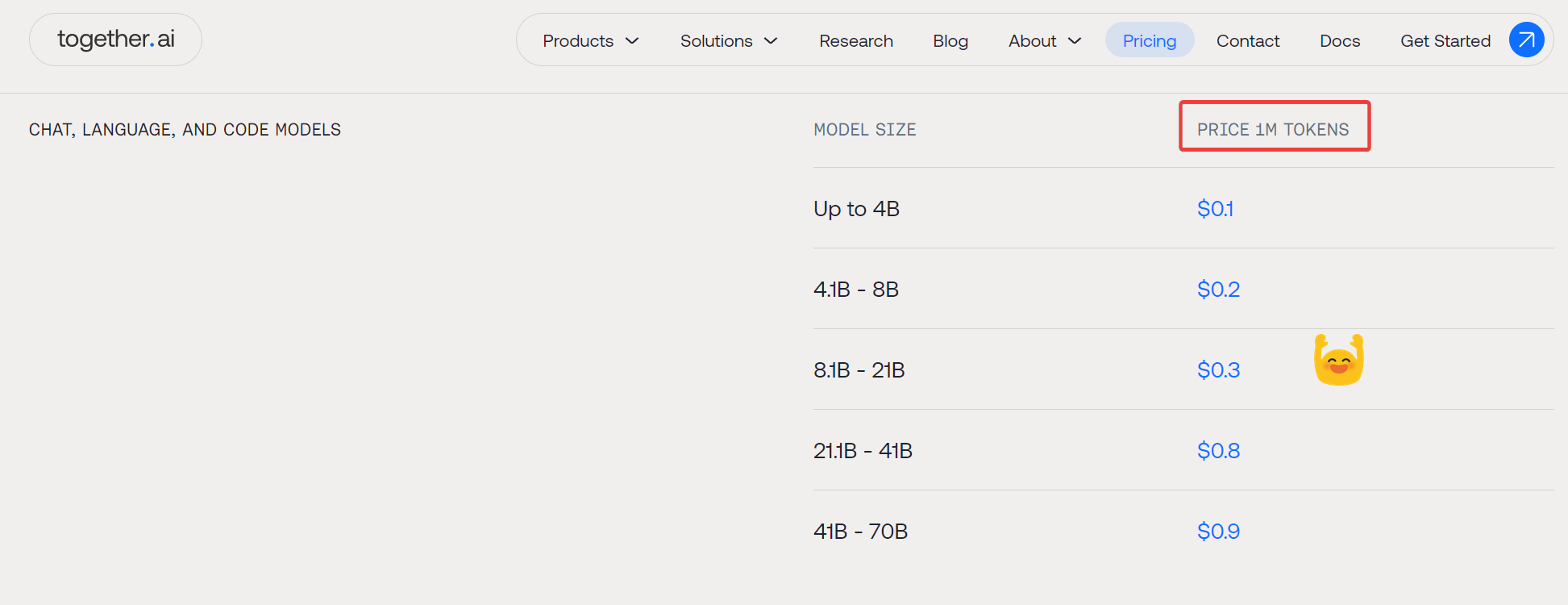

Places like mystic.ai, Predibase and my favourite, together.ai. And there are more!

They all give you $20-25 of free credit, so there's no harm in trying them.

Do remember the word "serverless": those are the offerings that charge you per token or per second of inference (the other option is "dedicated").

The best part of it?

Much better pricing!

Yes, you read that right: the price is per 1M tokens, not per 1K!! That makes this solution about 500-1000 times cheaper than OpenAI's GPT-4 Turbo!
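The trap when comparing prices like these is the unit: OpenAI quoted GPT-4 Turbo per 1K tokens (at launch, $0.01 per 1K input tokens and $0.03 per 1K output tokens), while providers like together.ai quote per 1M. Here's a minimal sketch that normalizes a quote to a per-1M price and estimates the cost of one per-token ("serverless") request. The request size in the example is a hypothetical placeholder, and prices change, so check each provider's current pricing page.

```python
def price_per_million(price: float, per_tokens: int) -> float:
    """Convert a price quoted per `per_tokens` tokens into a price per 1M tokens."""
    return price * 1_000_000 / per_tokens


def request_cost(prompt_tokens: int, completion_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request billed per token, with prices given per 1M tokens."""
    return (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000


if __name__ == "__main__":
    # GPT-4 Turbo launch list prices, quoted per 1K tokens, normalized to per 1M
    gpt4t_in = price_per_million(0.01, 1_000)
    gpt4t_out = price_per_million(0.03, 1_000)
    # Hypothetical request: 2,000 tokens of code context in, 500 tokens out
    print("GPT-4 Turbo, per 1M in/out:", gpt4t_in, gpt4t_out)
    print("GPT-4 Turbo, one request: $", request_cost(2_000, 500, gpt4t_in, gpt4t_out))
```

To compare, call request_cost again with the per-1M prices shown on the serverless provider's pricing page; since those are already per 1M, they need no conversion.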

5. What about open-source model performance?

Good question.

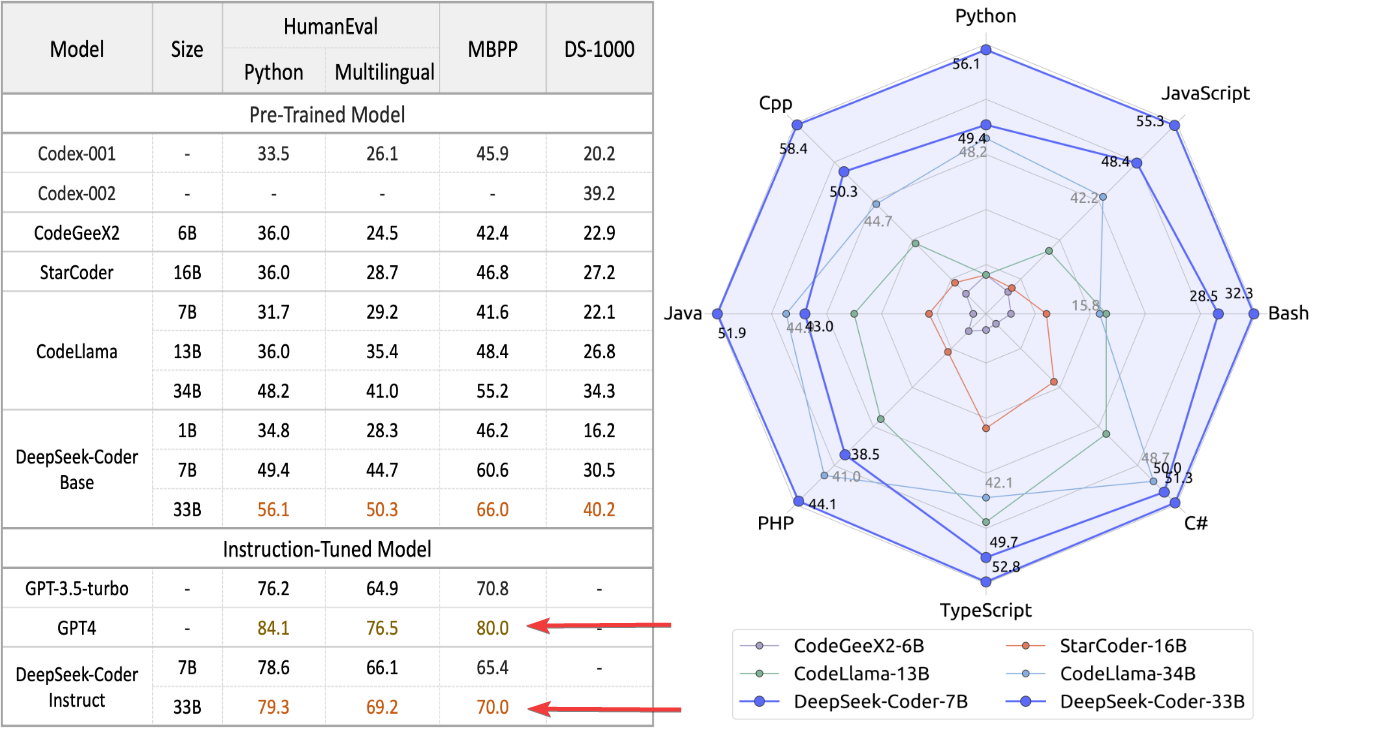

Let me show you a little screenshot:

(source: https://deepseekcoder.github.io/)

It isn't bad at all!

On these benchmarks, DeepSeek-Coder-Instruct 33B is about as good as GPT-4!

Now, you probably won't be able to run a 33B model on your local PC (unless you have a beefy machine at home), and that's where together.ai comes in!

- "Ok, so these are the models. What about the IDE?"

6. IDE and extensions

Here, you have more than one option. VS Code and JetBrains have their own AI assistants, but they're paid. There are probably others too.

Then you have more niche IDEs like "Cursor", an AI-driven IDE built from the ground up (using VS Code as its basis). Its GUI is excellent!

This means it's smoother and more integrated, and AI-related tasks are easier to do within the IDE. I used it for a while, but as you may have guessed, it's paid as well (unless you use your own OpenAI key).

Then I recently discovered an extension called "Continue", and that's where I stopped looking!

With Continue, you can use either VS Code or JetBrains IDEs.

So to get going, install the extension and modify the config file.

Here are 2 example entries you can add to the "models" list in the config file, one local (jan.ai) and one remote (together.ai):

```json
{
  "title": "deepseek-coder-6.7b-instruct.Q2_K",
  "model": "deepseek-coder-6.7b-instruct.Q2_K",
  "apiBase": "http://127.0.0.1:1337/v1",
  "completionOptions": {},
  "provider": "openai"
},
{
  "title": "deepseek-ai/deepseek-coder-33b-instruct",
  "model": "deepseek-ai/deepseek-coder-33b-instruct",
  "apiKey": "your secret API key for together.ai",
  "completionOptions": {},
  "provider": "together"
}
```

As you can see, the first entry has its provider set to "openai". This is because jan.ai runs a local web server with an OpenAI-compatible API, so any model it serves can be accessed the same way as OpenAI's models (which makes switching between them easy).
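To illustrate what "OpenAI-compatible" buys you, here's a minimal sketch using only Python's standard library. It builds a standard /chat/completions request, and switching between Jan and together.ai is just a different base URL (plus an API key for the remote one). The Jan URL and model names come from the config above; the together.ai base URL is my assumption about its OpenAI-compatible endpoint, so double-check it against their docs.

```python
import json
import urllib.request

JAN_BASE = "http://127.0.0.1:1337/v1"          # Jan's local server, as in the config above
TOGETHER_BASE = "https://api.together.xyz/v1"  # assumption: together.ai's OpenAI-compatible base URL


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (but don't send) an OpenAI-style chat completion request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # the local Jan server needs no key; remote providers do
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request, timeout=120):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With Jan's local API server running, passing JAN_BASE and "deepseek-coder-6.7b-instruct.Q2_K" to build_chat_request and handing the result to send should print the model's reply; for together.ai, swap in TOGETHER_BASE, "deepseek-ai/deepseek-coder-33b-instruct" and your API key. Nothing else changes, which is the whole point of the shared API shape.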

And honestly? That's it! That's all you need to set this up.

If you want, you can download additional models from Hugging Face and just copy each one into its own folder, like this: C:\Users\youruser\jan\models\deepseek-coder-6.7b-instruct.Q2_K

Make sure the model file and its containing folder have identical names.
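If a model doesn't show up, a small script can check the naming for you. This is a sketch under an assumption: each model lives in jan\models\<name>\ as a file called <name> plus a single extension (for example a .gguf file), and any other files in the folder are ignored. It lists every folder without a matching file.

```python
from pathlib import Path


def mismatched_model_folders(models_dir: Path) -> list:
    """Return names of model folders that contain no file named after the folder.

    Assumes a layout like models/<name>/<name>.gguf; extra files are ignored.
    """
    mismatched = []
    for folder in sorted(p for p in models_dir.iterdir() if p.is_dir()):
        # Path.stem drops only the last suffix, so "x.Q2_K.gguf" -> "x.Q2_K"
        stems = {f.stem for f in folder.iterdir() if f.is_file()}
        if folder.name not in stems:
            mismatched.append(folder.name)
    return mismatched


if __name__ == "__main__":
    # On Windows this resolves to C:\Users\<you>\jan\models
    models = Path.home() / "jan" / "models"
    if models.is_dir():
        print(mismatched_model_folders(models) or "All model folders look fine")
```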

So to summarize, the tools we used:

jan.ai

continue.dev

VS Code (or VSCodium, as I did above)

an LLM

together.ai

Let me know if you're stuck or have a question, and good luck AI-coding!

Written by

Kris F

I am a dedicated, double-certified AWS Engineer with 10 years of experience, deeply immersed in cloud technologies. My expertise lies in a broad array of AWS services, including EC2, ECS, RDS, and CloudFormation. I am adept at safeguarding cloud environments, facilitating migrations to Docker and ECS, and improving CI/CD workflows with Git and Bitbucket. While my primary focus is on AWS and cloud system administration, I also have a history in software engineering, particularly in JavaScript (Node.js, React) and SQL. In my free time, I like to use no-code/low-code tools to create AI-driven automations on my self-hosted home lab.