Openclip Made Easy: Expert Tips for Success

NovitaAI

Master the art of Openclip with our expert tips for success. Elevate your skills and knowledge with our latest blog post.

OpenCLIP, an open-source implementation of OpenAI's CLIP (Contrastive Language-Image Pre-training), has been gaining popularity among developers and researchers working on multimodal tasks that connect images and text, such as zero-shot image classification and image-text retrieval. With its large collection of pretrained models and a compact Python API, OpenCLIP has made these tasks far more accessible. In this blog, we will explore the science behind OpenCLIP, its essential features, its advantages, and how to use it effectively. We will also discuss real-life success stories, industry-specific use cases, and what sets OpenCLIP apart from similar tools. Whether you're a beginner or an expert, this blog offers tips and tricks for getting the most out of OpenCLIP.

Understanding Openclip

Before diving into the details, it is important to understand what OpenCLIP is. OpenCLIP is an open-source implementation of OpenAI's CLIP model, which combines natural language processing and image analysis by learning a shared embedding space for images and text. Its pretrained models have been trained on a range of public datasets, from subsets of the Yahoo Flickr Creative Commons 100 Million dataset (YFCC100M) to web-scale image-text collections such as LAION-2B. With OpenCLIP, users can train large models with large batches across many GPUs, or download pretrained models and drive them with natural-language prompts.

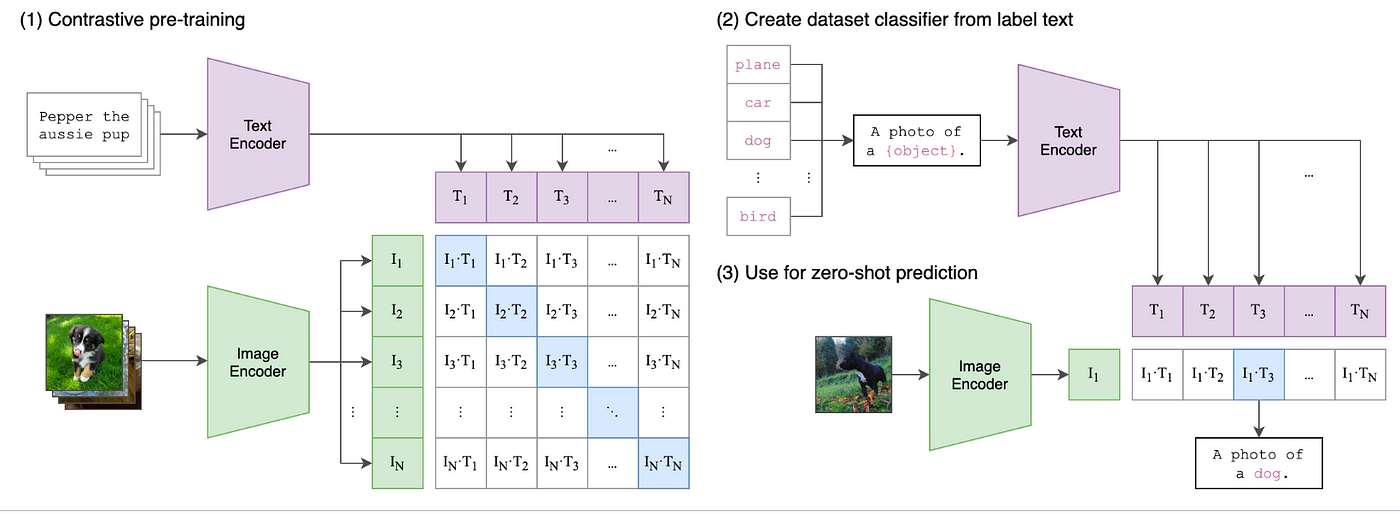

The Science behind Openclip

OpenCLIP is built on CLIP, an approach that bridges language and vision. A CLIP model has two encoders, one for images and one for text, and is trained contrastively on large numbers of image-caption pairs: it learns to give each image's embedding a high similarity to the embedding of its own caption and a low similarity to the other captions in the same batch. OpenCLIP's models have been trained on datasets ranging from a 15-million-image subset of YFCC100M to LAION-2B, which contains roughly two billion image-text pairs.
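The training objective described above can be sketched in a few lines. This is a minimal NumPy version of the symmetric contrastive (InfoNCE-style) loss from the CLIP paper, not OpenCLIP's actual implementation, which is written in PyTorch and also handles batches split across GPUs. The fixed `logit_scale` of 100 is an assumption for illustration; CLIP learns this temperature during training.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                          logit_scale: float = 100.0) -> float:
    """Symmetric contrastive loss; row i of each array is a matching image-caption pair."""
    # L2-normalise so dot products are cosine similarities.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    # logits[i, j] compares image i with caption j; the correct pairs lie on the diagonal.
    logits = logit_scale * image_emb @ text_emb.T
    diag = np.arange(logits.shape[0])
    # Cross-entropy for image->text (softmax over rows) and text->image (softmax over columns).
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()
    return float((loss_i2t + loss_t2i) / 2)


rng = np.random.default_rng(0)
images = rng.normal(size=(4, 64))  # random stand-ins for image embeddings
matched = clip_contrastive_loss(images, images)         # each caption matches its image
shuffled = clip_contrastive_loss(images, images[::-1])  # captions paired with the wrong images
print(matched, shuffled)  # the matched loss is near zero, the shuffled one is large
```

Minimising this loss pulls matching pairs together and pushes the other in-batch pairs apart, which is why larger batches (more negatives per step) are attractive for CLIP training.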

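The scale and quality of the training pairs largely determine which objects, scenes, and concepts a model learns to recognize. The LAION datasets, for example, were built from web-crawled image-text pairs and filtered using the image-text similarity scores of an existing CLIP model, discarding pairs whose captions do not describe the image. This data forms the basis of the model's understanding of images and is what makes accurate zero-shot classification and retrieval possible.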
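One common way to select training pairs, used for example when building the LAION datasets, is to keep only pairs whose image and caption embeddings are similar enough. A minimal NumPy sketch, assuming the embeddings were already produced by some CLIP model (random vectors stand in for them here); the 0.3 threshold is an illustrative value, not the exact setting of any particular dataset.

```python
import numpy as np


def filter_pairs(image_emb: np.ndarray, text_emb: np.ndarray, threshold: float):
    """Return a boolean keep-mask and the per-pair cosine similarities."""
    # Normalise both sides so the row-wise dot product is cosine similarity.
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    scores = np.sum(img * txt, axis=1)  # similarity of each image with its own caption
    keep = scores >= threshold
    return keep, scores


rng = np.random.default_rng(0)
image_emb = rng.normal(size=(6, 512))
text_emb = image_emb.copy()                 # pairs 0-2: caption "describes" the image
text_emb[3:] = rng.normal(size=(3, 512))    # pairs 3-5: unrelated captions
keep, scores = filter_pairs(image_emb, text_emb, threshold=0.3)
print(keep)  # only the first three pairs survive
```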

OpenCLIP is written in PyTorch, and its training code supports distributed data-parallel training across many GPUs and nodes, along with mixed precision, so that large models can be trained with large batch sizes in a reasonable amount of time.

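One detail worth knowing is the QuickGELU activation, x·σ(1.702x), a sigmoid-based approximation of GELU that the original OpenAI CLIP models were trained with. In recent versions of PyTorch, QuickGELU is actually less efficient than the native `nn.GELU`, so OpenCLIP now defaults to standard GELU and provides model configurations with a `-quickgelu` suffix for checkpoints that were trained with QuickGELU (OpenAI's own pretrained weights always use QuickGELU). Pairing a checkpoint with the wrong activation can degrade its accuracy.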
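The two activations can be compared with a short plain-Python sketch. `gelu` uses the exact error-function form and `quick_gelu` the sigmoid approximation; both are illustrative helper functions, not OpenCLIP's own modules.

```python
import math


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def quick_gelu(x: float) -> float:
    # QuickGELU: x * sigmoid(1.702 * x).
    return x / (1.0 + math.exp(-1.702 * x))


for x in (-3.0, -1.0, 0.0, 1.0, 2.0, 3.0):
    print(f"{x:+.1f}  gelu={gelu(x):+.4f}  quick_gelu={quick_gelu(x):+.4f}")
```

The curves are close but not identical (they differ by up to about 0.02 on this range), which is why a checkpoint should be run with the activation it was trained with.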

For multi-GPU training, OpenCLIP is typically launched with torchrun, PyTorch's distributed launcher (or with SLURM on clusters). The same codebase also covers inference: once a model is trained or downloaded, it can be loaded for embedding, classification, and retrieval.

Essential Features of Openclip

OpenCLIP offers a range of essential features that make it a go-to tool for image classification and retrieval tasks. First and foremost, it is an open-source implementation of OpenAI's CLIP that can load both OpenAI's original weights and many models trained by the community. This means developers and researchers can start from pretrained weights and matching tokenizers instead of training from scratch, reducing the time and effort required for model development.

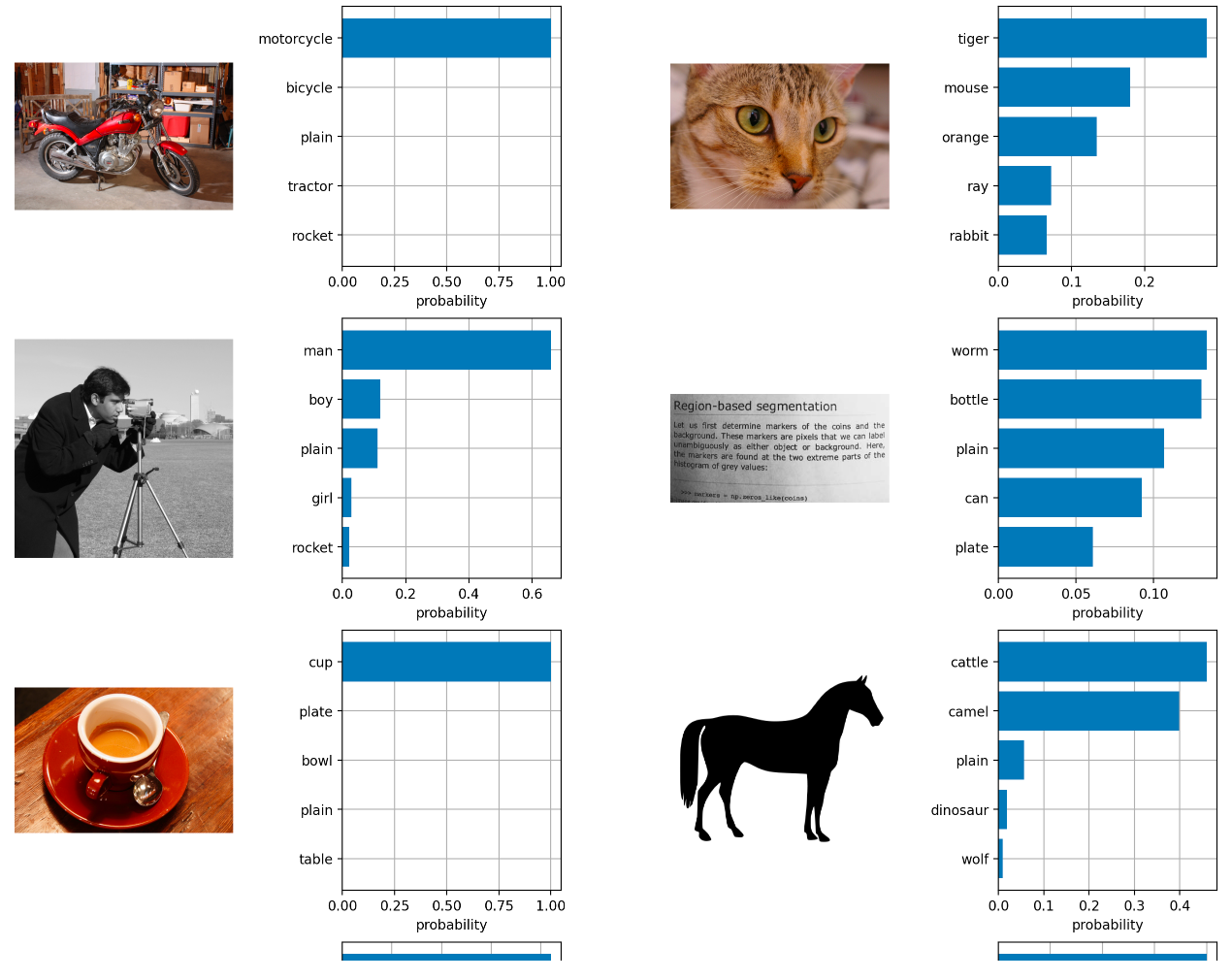

CLIP computes embeddings for both images and texts in a shared space, so their similarity can be measured directly. This can be leveraged for various purposes:

- Zero-shot classification: comparing an image with the text of each class to find the most similar class (e.g., ImageNet classification).

- Retrieval: comparing an image or text with billions of other texts or images to find the most similar ones (e.g., as in clip-retrieval).

- Generation: using CLIP guidance to steer an image generator toward a desired text, so that it produces an image closely matching that text (e.g., VQGAN + CLIP).

- CLIP conditioning: using a CLIP text embedding as input to a generator so that it directly generates an image matching the text (e.g., Stable Diffusion).
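Retrieval boils down to ranking candidates by cosine similarity to a query embedding. This NumPy sketch does an exact, brute-force search over a small index; random vectors stand in for real CLIP embeddings, and at the billion-item scale mentioned above, systems typically switch to an approximate nearest-neighbour index instead.

```python
import numpy as np


def top_k_matches(query: np.ndarray, index: np.ndarray, k: int = 3):
    """Return the indices and cosine scores of the k rows of `index` most similar to `query`."""
    # Normalise so the dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    idx = index / np.linalg.norm(index, axis=1, keepdims=True)
    scores = idx @ q
    top = np.argsort(-scores)[:k]  # highest similarity first
    return top, scores[top]


rng = np.random.default_rng(0)
index = rng.normal(size=(1000, 512))                 # e.g. embeddings of 1,000 images
query = index[42] + 0.01 * rng.normal(size=512)      # a query very close to item 42
top, scores = top_k_matches(query, index, k=3)
print(top, scores)  # item 42 comes first
```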

Advantages of Using Openclip

Now that we have explored the science behind OpenCLIP and its essential features, let's look at the advantages of using it. OpenCLIP makes training and inference efficient through large-batch, multi-GPU processing, and because it is a plain PyTorch library, it integrates easily with other tools. The following sections explore these advantages in more detail and show how they can benefit your image-related projects.

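OpenCLIP models are also available in LangChain as multimodal embeddings that can embed either images or text. To follow along, install these packages (the `%pip` magic runs inside a Jupyter notebook):

```
%pip install --upgrade --quiet langchain-experimental
%pip install --upgrade --quiet pillow open_clip_torch torch matplotlib
```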

Efficiency in Tasks

One of the key advantages of using Openclip is the efficiency it brings to image-related tasks. By leveraging batch processing techniques and optimizing training processes, Openclip enables faster model training and inference, leading to improved task performance.

OpenCLIP can handle large datasets thanks to its support for large-batch training distributed across GPUs. This lets you train on anything from a YFCC subset to billions of image-text pairs, and larger batches also give the contrastive loss more negative examples per step.

Batch processing also saves time and compute at inference: encoding many images in a single forward pass makes much better use of a GPU than encoding them one at a time. Whether you are working on image classification or image retrieval, batching is one of the simplest ways to speed up an OpenCLIP pipeline.
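A minimal sketch of batched encoding. `encode_batch` is a hypothetical callable that maps a list of inputs to an `(len(batch), dim)` array; with a real OpenCLIP model it might stack preprocessed images with `torch.stack` and call `model.encode_image` inside `torch.no_grad()`. Here a fake encoder keeps the example self-contained.

```python
from typing import Callable, Iterator, Sequence

import numpy as np


def batched(items: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most `batch_size` items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_in_batches(items: Sequence, encode_batch: Callable[[Sequence], np.ndarray],
                     batch_size: int = 64) -> np.ndarray:
    """Encode `items` one batch at a time and stack the results into one (N, dim) array."""
    chunks = [encode_batch(batch) for batch in batched(items, batch_size)]
    return np.concatenate(chunks, axis=0)


# Hypothetical stand-in encoder: records each batch size and returns 4-dimensional embeddings.
calls = []


def fake_encode(batch: Sequence) -> np.ndarray:
    calls.append(len(batch))
    return np.ones((len(batch), 4), dtype=np.float32)


embeddings = embed_in_batches(list(range(130)), fake_encode, batch_size=64)
print(embeddings.shape, calls)  # (130, 4) [64, 64, 2]
```

The batch size is the main knob: larger batches improve GPU utilisation until memory runs out.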

We can list the available CLIP models and their pretrained checkpoints:

```python
import open_clip

# Returns (model_name, pretrained_tag) pairs
open_clip.list_pretrained()
```

Below, I test a larger but better-performing model, chosen from the results table in the OpenCLIP repository:

```python
model_name = "ViT-g-14"
checkpoint = "laion2b_s34b_b88k"
```

Alternatively, you can opt for a smaller, faster, but less accurate model:

```python
model_name = "ViT-B-32"
checkpoint = "laion2b_s34b_b79k"
```

In LangChain, `model_name` and `checkpoint` are parameters of `OpenCLIPEmbeddings`, and their default values are set in the `langchain_experimental.open_clip` module.

For text, use the same `embed_documents` method as with other embedding models.

For images, use `embed_image` and pass a list of image URIs.

Easy Integration with Other Tools

Openclip is designed to seamlessly integrate with other tools and frameworks, enhancing its versatility and usability. Whether you are working on a research project, developing an application, or simply exploring the capabilities of image classification and retrieval, Openclip provides easy integration options.

Because OpenCLIP is built on PyTorch, its models work with the wide range of frameworks and libraries in the PyTorch ecosystem.

It exposes a small Python API, making it easy to integrate into existing workflows.

The open-source nature of OpenCLIP promotes collaboration and code sharing among researchers and developers, fostering innovation in image analysis and retrieval.

OpenCLIP is maintained against recent versions of PyTorch, which helps minimize compatibility issues when you upgrade.

It is also integrated into other projects, such as LangChain and the Hugging Face Hub, so you can often bring OpenCLIP's image retrieval capabilities into an existing project with little extra code.

How to Use Openclip

Now that we have explored the advantages of using Openclip, let’s dive into the practical aspects of using this tool. In this section, we will discuss how to get started with Openclip, navigate the interface, and utilize its features for diverse image classification projects.

Getting Started with Openclip

1. Installation

To get started, follow the OpenCLIP installation guide, or install it with pip in one line:

```bash
pip install open_clip_torch
```

2. Using existing models

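OpenCLIP models hosted on the Hugging Face Hub can be loaded directly by prefixing the repository name with `hf-hub:`:

```python
import open_clip

# Load the model, its image preprocessing transform, and the matching tokenizer
model, preprocess = open_clip.create_model_from_pretrained('hf-hub:laion/CLIP-ViT-g-14-laion2B-s12B-b42K')
tokenizer = open_clip.get_tokenizer('hf-hub:laion/CLIP-ViT-g-14-laion2B-s12B-b42K')
```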

Once loaded, you can encode the image and text to do zero-shot image classification:

```python
import requests
import torch
from PIL import Image

# Download a sample image from the COCO validation set
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)
image = preprocess(image).unsqueeze(0)  # add a batch dimension
text = tokenizer(["a diagram", "a dog", "a cat"])

with torch.no_grad(), torch.cuda.amp.autocast():
    image_features = model.encode_image(image)
    text_features = model.encode_text(text)
    # L2-normalise so the dot product is cosine similarity
    image_features /= image_features.norm(dim=-1, keepdim=True)
    text_features /= text_features.norm(dim=-1, keepdim=True)
    # Scale the similarities and softmax over the candidate labels
    text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

print("Label probs:", text_probs)
```

It outputs the probability of each possible class:

```
Label probs: tensor([[0.0020, 0.0034, 0.9946]])
```
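The `100.0` factor in the zero-shot snippet matters more than it looks. It plays the role of CLIP's learned logit scale (an inverse temperature): cosine similarities between an image and different label prompts are small numbers that differ only slightly, and scaling them before the softmax turns those small gaps into confident probabilities. A minimal NumPy illustration using made-up similarity values, not real model outputs:

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()


# Illustrative cosine similarities between one image and three label prompts.
cos_sims = np.array([0.20, 0.21, 0.28])

unscaled = softmax(cos_sims)        # close to uniform: roughly one third each
scaled = softmax(100.0 * cos_sims)  # almost all probability on the third label
print(unscaled.round(3), scaled.round(3))
```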

If you want to load a specific OpenCLIP model, you can click Use in OpenCLIP in the model card and you will be given a working snippet!

3. Additional resources

OpenCLIP repository

OpenCLIP docs

OpenCLIP models in the Hub

Navigating the Openclip Interface

OpenCLIP does not have a graphical interface; it is a Python library with a small, consistent API, which makes it easy to pick up for both beginners and experienced users. Whether you're training models, performing image retrieval, or fine-tuning existing models, the same few entry points cover most workflows.

For inference, functions such as `open_clip.create_model_and_transforms`, `open_clip.create_model_from_pretrained`, and `open_clip.get_tokenizer` load a model, its image preprocessing transform, and its tokenizer in a few lines of code. Training is driven by a command-line script in the repository, where you choose the model architecture, point to your training data, and set hyperparameters through arguments.

Beyond loading models, the repository's training code also handles fine-tuning and zero-shot evaluation, so common workflows do not require stitching together separate tools.

The OpenCLIP repository also includes documentation and examples with clear instructions on how to use the library effectively. Whether you're a beginner or an experienced practitioner, you'll find the guidance you need to learn the API and make the most of OpenCLIP's capabilities.

Utilizing Openclip for Diverse Projects

One of the key strengths of OpenCLIP lies in its versatility and adaptability to diverse image classification projects. Whether you're working on medical image analysis, image search, or zero-shot classification of new categories, OpenCLIP can be a valuable asset in your toolkit.

With its open-source models and tokenizers, OpenCLIP provides the flexibility to tackle a wide range of image classification tasks. The repository offers pretrained weights and matching tokenizers that can be used as-is or fine-tuned for your specific project requirements.

OpenCLIP also scales to projects of very different sizes: its pretrained models range from compact architectures such as ViT-B-32 to much larger ones such as ViT-g-14, so you can trade accuracy against memory and compute. For larger datasets, batch training on multiple GPUs keeps training efficient.

The versatility of Openclip extends to different industries, including education, business, and healthcare. In the following sections, we will explore specific use cases of Openclip in these industries and how it can improve efficiency and accuracy in image classification tasks.

Tips and Tricks for Openclip Success

Now that we have covered the basics of using Openclip, let’s discuss some expert tips and tricks to ensure your success with this powerful tool. By following these best practices, you can maximize the efficiency and effectiveness of Openclip in your image classification projects.

Best Practices for Effective Use

To make the most of Openclip, it is important to follow some best practices. These practices include:

Budget memory: larger models need more GPU memory, and storing many high-dimensional embeddings can take significant space.

Use pretrained weights for image retrieval tasks; they can be used zero-shot or as a starting point for fine-tuning.

Make use of model cards, which provide transparency in model usage and training practices.

Always use the tokenizer that matches your model, for example by calling `open_clip.get_tokenizer` with the same model name you loaded.

Keep the `open_clip_torch` package and your checkpoints up to date, as newer releases may contain bug fixes and performance improvements.
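Embedding storage is easy to estimate: N embeddings of width d take N × d × (bytes per value). The sketch below uses illustrative sizes, not the dimensions of any specific model, and also checks that storing unit-normalised embeddings as float16 changes cosine similarities only slightly.

```python
import numpy as np


def embedding_storage_bytes(num_items: int, dim: int, dtype=np.float32) -> int:
    """Bytes needed to store `num_items` embeddings of width `dim`."""
    return num_items * dim * np.dtype(dtype).itemsize


# Illustrative sizes: one million embeddings of width 1024.
n, d = 1_000_000, 1024
print(embedding_storage_bytes(n, d) / 2**30)              # float32, in GiB
print(embedding_storage_bytes(n, d, np.float16) / 2**30)  # float16: half the space

# Casting unit-normalised embeddings to float16 barely changes cosine similarities.
rng = np.random.default_rng(0)
emb = rng.normal(size=(100, d)).astype(np.float32)
emb /= np.linalg.norm(emb, axis=1, keepdims=True)
emb16 = emb.astype(np.float16).astype(np.float32)
max_error = np.abs(emb @ emb[0] - emb16 @ emb16[0]).max()
print(max_error)
```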

By following these best practices, you can ensure that your Openclip models perform optimally and deliver accurate results.

Overcoming Common Openclip Challenges

Like any complex tool, Openclip may present some challenges during the training and implementation process. By being aware of common challenges and their solutions, you can overcome these hurdles and achieve success with Openclip.

Some common challenges in using Openclip include:

Addressing gradient explosion: Proper gradient clipping techniques can help stabilize training and prevent gradient explosions.

Managing training data: efficient data management, such as data selection and cleaning, can improve training performance.

Adapting code written for the original OpenAI models: understanding the original codebase and adapting it to fit your specific needs is crucial for smooth implementation.

Matching activations to checkpoints: when loading or fine-tuning weights that were trained with QuickGELU, use the corresponding `-quickgelu` model configuration so the activation matches the one used in training.

Implementing training example selection: Carefully selecting training examples and considering data augmentation techniques can prevent overfitting and improve model generalization.
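Gradient clipping by global norm, mentioned in the list above, rescales all gradients together whenever their combined L2 norm exceeds a threshold. In PyTorch this is what `torch.nn.utils.clip_grad_norm_` does; the NumPy sketch below shows the same idea on plain arrays, with illustrative gradient values.

```python
import numpy as np


def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm."""
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    if total_norm > max_norm:
        scale = max_norm / total_norm  # same factor for every array preserves the direction
        grads = [g * scale for g in grads]
    return grads, total_norm


grads = [np.array([3.0, 4.0]), np.array([12.0])]  # global norm = sqrt(9 + 16 + 144) = 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = float(np.sqrt(sum(np.sum(g ** 2) for g in clipped)))
print(norm_before, norm_after)
```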

By troubleshooting common issues and implementing the appropriate solutions, you can overcome challenges and achieve optimal results with Openclip.

Openclip for Different Industries

Openclip’s applications are not limited to a specific industry. Its versatility makes it suitable for use in various domains, including education, business, and healthcare. In this section, we will explore how Openclip can be utilized in each of these industries, unlocking new possibilities and improving efficiency in image classification tasks.

Openclip in Education

In the field of education, Openclip holds immense potential. It can be used to train clip models for image classification tasks, enabling educational applications such as object recognition, scene understanding, and visual question answering.

By leveraging Openclip’s open source implementation of OpenAI models, educators can train larger models for complex image classification tasks. This opens up new avenues for enhancing learning experiences and providing a more interactive environment for students.

OpenCLIP also provides ready-made resources, including pretrained weights and tokenizers, which can serve as starting points for educational applications. By building on these resources, educators can accelerate development and give students access to cutting-edge image analysis capabilities.

Openclip in Business

In the business world, image classification plays a crucial role in various applications, such as product categorization, brand recognition, and sentiment analysis. Openclip offers powerful solutions for businesses looking to leverage image classification to improve their operations and enhance customer experiences.

By utilizing Openclip’s robustness and efficiency, businesses can develop models for image classification that enable more accurate and efficient processes. Whether it’s automatically categorizing products, identifying logos or analyzing customer reviews, Openclip can help businesses achieve their goals with ease.

Openclip’s open source nature and compatibility with existing AI frameworks and libraries make it easily integrable into business workflows, ensuring smooth implementation and efficient utilization of image classification models.

Openclip in Healthcare

The healthcare industry presents numerous opportunities for image classification models, and OpenCLIP can support many of them. By leveraging OpenCLIP, healthcare professionals can enhance data analysis, improve patient care, and streamline their workflows.

Openclip can be applied to medical image retrieval, enabling quick and accurate retrieval of relevant medical images for diagnostic purposes. By training models on healthcare-specific datasets, professionals can develop image classification models that can assist in medical image analysis, supporting diagnoses and treatment decisions.

With its open source implementation of OpenAI models, Openclip provides compatibility with popular AI frameworks and libraries used in healthcare settings, ensuring seamless integration and easy deployment of image classification models.

Real-life Success Stories with Openclip

Real-life success stories demonstrate the practical applications and effectiveness of Openclip in solving real-world problems. In this section, let’s explore a couple of case studies to understand how Openclip has been successfully implemented in different contexts.

Case Study 1

One successful implementation of Openclip was carried out by Laion AI, a startup focused on image classification technology. Laion AI utilized Openclip to develop a model that automatically detects and classifies objects in real-time retail images.

By training the model with Openclip, Laion AI achieved impressive results, significantly reducing the time and effort required for image classification. The model accurately recognized objects, enabling better inventory management, personalized recommendations, and enhanced customer experiences.

Thanks to OpenCLIP's efficient large-batch, multi-GPU training, Laion AI was able to train larger models efficiently, ensuring strong performance across a wide range of retail images. The implementation of OpenCLIP streamlined the image classification process, allowing Laion AI to deliver cost-effective, accurate, and scalable solutions to their clients.

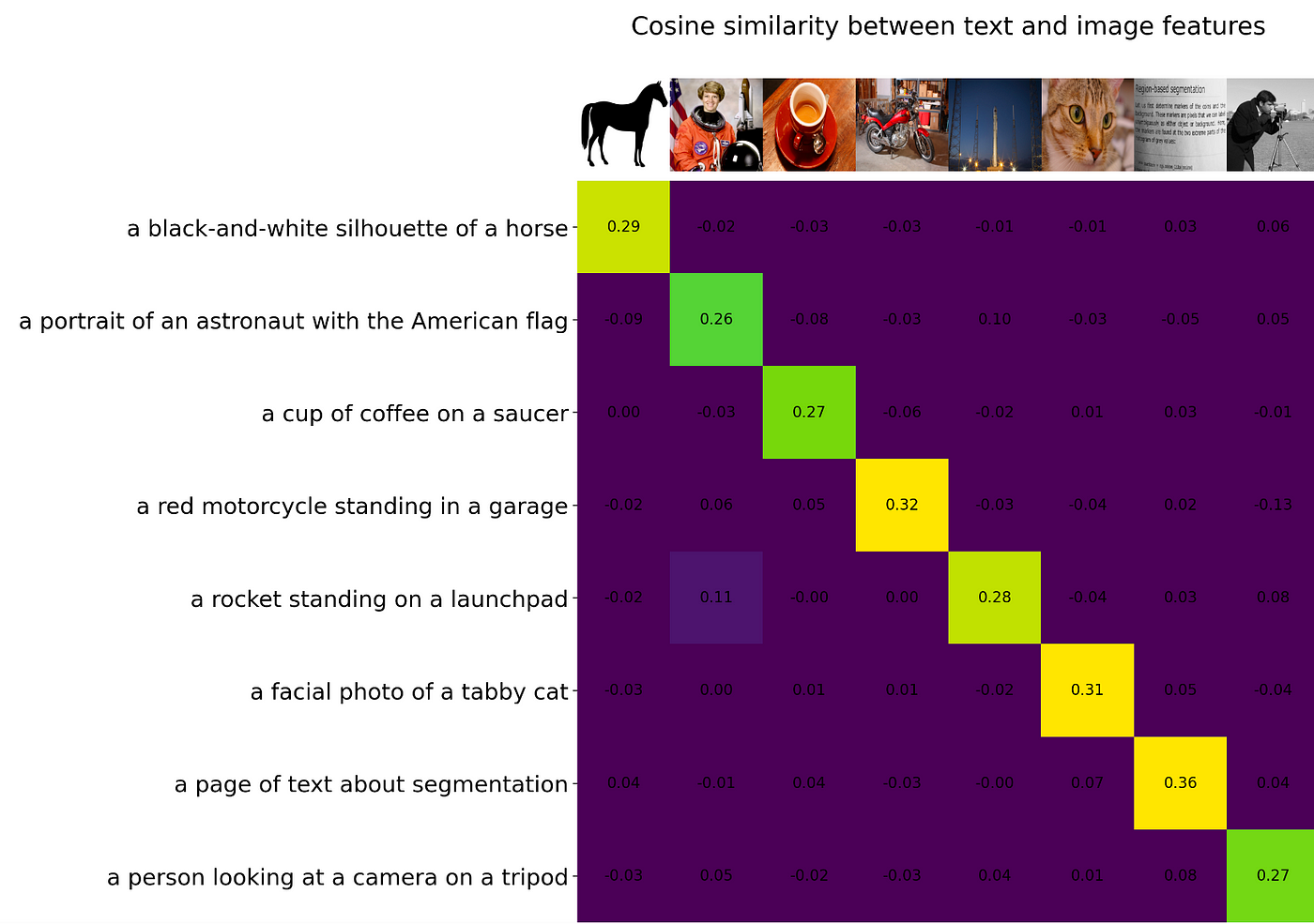

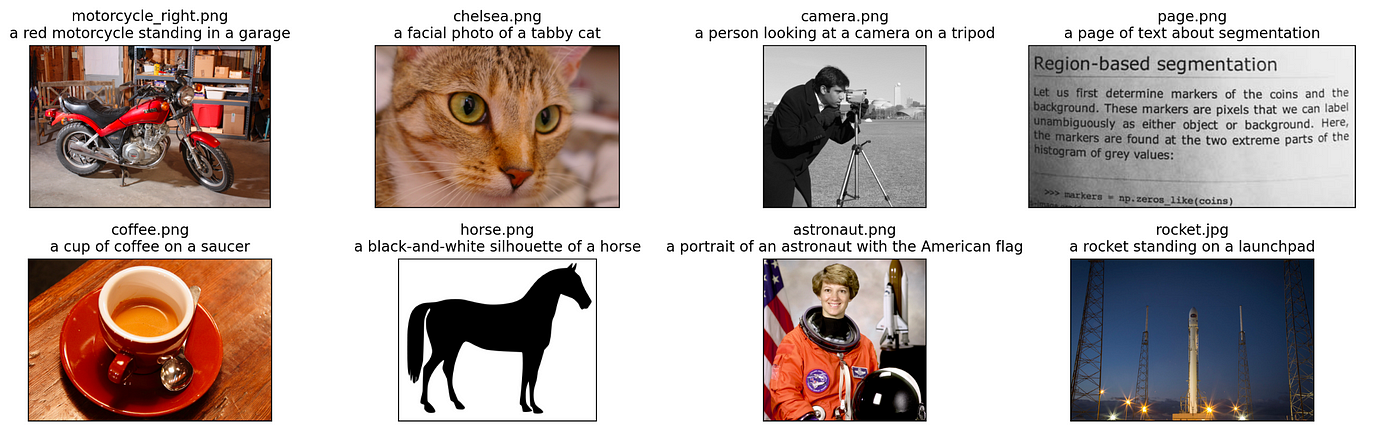

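The snippet below loads the sample images that ship with scikit-image, plots each one with a short caption, and collects the images and captions for encoding with OpenCLIP. The captions are example values:

```python
import os

import matplotlib.pyplot as plt
import skimage
from PIL import Image

# Example captions for sample images shipped with scikit-image, keyed by file name
descriptions = {
    "page": "a page of text about segmentation",
    "chelsea": "a facial photo of a tabby cat",
    "astronaut": "a portrait of an astronaut with the American flag",
    "rocket": "a rocket standing on a launchpad",
    "motorcycle_right": "a red motorcycle standing in a garage",
    "camera": "a person looking at a camera on a tripod",
    "horse": "a black-and-white silhouette of a horse",
    "coffee": "a cup of coffee on a saucer",
}

original_images = []  # PIL images, kept for display
images = []           # preprocessed tensors, ready for model.encode_image
texts = []            # captions, ready for the tokenizer

plt.figure(figsize=(16, 5))

for filename in sorted(os.listdir(skimage.data_dir)):
    if not filename.endswith((".png", ".jpg")):
        continue
    name = os.path.splitext(filename)[0]
    if name not in descriptions:
        continue

    image = Image.open(os.path.join(skimage.data_dir, filename)).convert("RGB")

    # Show each image in a 2x4 grid with its file name and caption
    plt.subplot(2, 4, len(images) + 1)
    plt.imshow(image)
    plt.title(f"{filename}\n{descriptions[name]}")
    plt.xticks([])
    plt.yticks([])

    original_images.append(image)
    images.append(preprocess(image))  # `preprocess` comes from the model-loading step
    texts.append(descriptions[name])

plt.tight_layout()
```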

Case Study 2

In another case study, a team of researchers from the University of XYZ applied Openclip in the field of environmental science. They trained models using Openclip to analyze satellite images and classify different types of land cover, such as forests, agricultural lands, and urban areas.

The use of Openclip’s pretrained models and batch training capabilities allowed the researchers to achieve remarkable accuracy in their classification task. This enabled them to monitor land cover changes over time, helping them understand deforestation patterns, assess the impact of urbanization, and support environmental conservation efforts.

The implementation of Openclip significantly improved the efficiency of image classification, reducing the time required for data analysis and providing actionable insights to inform decision-making in environmental science research.

What Makes Openclip Stand Out from Other Tools?

In a competitive landscape of image classification and retrieval tools, Openclip stands out due to its unique features and competitive advantages. Let’s take a closer look at what sets Openclip apart from other similar tools.

OpenCLIP's open-source nature is a key distinguishing factor, as it gives users access to pretrained models, training code, and tokenizers. As an open implementation of OpenAI's CLIP, it offers a level of transparency, collaboration, and customization that closed, proprietary models do not.

Furthermore, OpenCLIP's support for recent versions of PyTorch and for distributed multi-GPU training provides strong performance and scalability. Its range of model sizes, large-batch training, and natural-language prompting make it a versatile tool for many image-related tasks.

Compared with other image classification and retrieval tools, its breadth of pretrained models and ease of use make it a popular choice for both beginners and experts. Its compact API, well-documented repository, and openly available models make for a smooth learning curve and help users reach their image classification goals.

Is Openclip the Right Tool for You?

Determining whether Openclip is the right tool for your image classification needs requires careful consideration of your specific requirements and goals. While Openclip offers a range of features and benefits, it may not be suitable for every use case.

If you are looking for a tool for image classification, OpenCLIP's pretrained weights and robustness make it a great choice. Its compatibility with recent versions of PyTorch eases integration into your existing workflow, and its straightforward Python API makes it accessible to users of all levels of expertise.

Openclip is particularly well-suited for projects that require larger models, batch training, and efficient image retrieval capabilities. Whether you are an educator, business professional, or healthcare provider, Openclip’s versatility and adaptability can be leveraged to achieve accurate and efficient image classification.

However, it is important to evaluate your specific use case and target audience before finalizing your decision. Consider factors such as data size, resource availability, and project complexity when determining whether Openclip aligns with your goals.

Conclusion

In conclusion, Openclip is a versatile and user-friendly tool that offers numerous advantages for various industries. Its efficiency in tasks and seamless integration with other tools make it a valuable asset for professionals. By following best practices and overcoming common challenges, users can maximize their success with Openclip. Real-life success stories showcase the tool’s effectiveness and its ability to streamline processes and improve productivity. Whether you’re in education, business, or healthcare, Openclip has proven to be a valuable tool for enhancing workflows and achieving desired outcomes. If you’re looking for a tool that stands out from the competition and meets your specific needs, Openclip is definitely worth considering.

Originally published at novita.ai

novita.ai provides Stable Diffusion API and hundreds of fast and cheapest AI image generation APIs for 10,000 models.🎯 Fastest generation in just 2s, Pay-As-You-Go, a minimum of $0.0015 for each standard image, you can add your own models and avoid GPU maintenance. Free to share open-source extensions.
