2 of 2: Setting Up AnythingLLM for Work.

Brian King


TL;DR.

This post details the setup process for AnythingLLM, a versatile document chatbot. It walks through updating the system, starting the Llama 2 and ChromaDB services, and running AnythingLLM. It also explains how to prepare, customize, and add documents to AnythingLLM workspaces, using a Systems Analyst Workspace as an example. This post highlights the potential of AnythingLLM to provide a personalized ChatGPT experience with complete privacy.

Attributions:

https://docs.anaconda.com/free/miniconda/index.html,

https://www.docker.com/, and

An Introduction.

In my last post, I described how to install a Dockerized instance of AnythingLLM. This time, I show how to set it up as a private, specialised LLM experience.

The purpose of this post is to show how to set up AnythingLLM for custom results.

The Big Picture.

AnythingLLM runs natively on Windows and macOS. A native Linux release has yet to emerge, but that didn't stop me (mostly because the developers created a Dockerized version) from installing it on an Ubuntu system. Now that AnythingLLM is installed, I will show how to set it up as a powerful, personal assistant.

Prerequisites.

A Debian-based Linux distro (I use Ubuntu),

Updating the System.

- I update my system:

```bash
sudo apt clean && \
sudo apt update && \
sudo apt dist-upgrade -y && \
sudo apt --fix-broken install && \
sudo apt autoclean && \
sudo apt autoremove -y
```

Starting the Services.

In my previous post, I set up the following services. Now I can start them whenever it's time to get to work.

Starting Llama 2.

- In the first terminal, I activate the Ollama environment:

```bash
conda activate Ollama
```

- I run Llama 2:

```bash
ollama run llama2
```

- I can now access Llama 2 through port 11434, e.g.:

http://192.168.188.41:11434

NOTE: The IP address above points to the PC that is running Ollama.

Starting ChromaDB.

- In the second terminal, I activate the ChromaDB environment:

```bash
conda activate ChromaDB
```

- I run ChromaDB:

```bash
chroma run
```

NOTE: ChromaDB saves its data to the ~/ChromaDB/chroma_data/chroma.sqlite3 file.

- I can now access ChromaDB through port 8000, e.g.:

http://192.168.188.41:8000

NOTE: The IP address above points to the PC that is running ChromaDB.

Starting AnythingLLM.

NOTE: At this stage:

Ollama is running Llama 2 in the Ollama environment, and

ChromaDB is running in the ChromaDB environment.

Both environments are managed by conda (the package manager installed with Miniconda), and each is running in its own terminal.
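Before moving on, I could confirm that both services are actually listening. The following is a minimal sketch, not part of Ollama, ChromaDB, or AnythingLLM: the helper names are hypothetical, and it assumes the LAN address and default ports used above. It relies only on bash's built-in /dev/tcp redirection and the timeout command, so nothing extra needs installing.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check: are Ollama and ChromaDB accepting TCP connections?
# Uses bash's /dev/tcp pseudo-device, so no curl or netcat is required.

# port_open HOST PORT: succeed if a TCP connection opens within 2 seconds.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# check_services [HOST]: report each service's status; return 1 if any is down.
# The default HOST is the LAN address used in this post (an assumption for other setups).
check_services() {
  local host="${1:-192.168.188.41}" status=0 entry name port
  for entry in "Ollama:11434" "ChromaDB:8000"; do
    name="${entry%%:*}"
    port="${entry##*:}"
    if port_open "$host" "$port"; then
      echo "UP   ${name} (${host}:${port})"
    else
      echo "DOWN ${name} (${host}:${port})"
      status=1
    fi
  done
  return "$status"
}

# Example: check_services 192.168.188.41
```

If either line reads DOWN, the matching terminal is the first place I would look before starting AnythingLLM.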

- From the third terminal, I check whether any containers are using port 3001:

```bash
docker container ls
```

- I remove any other container that is using port 3001:

```bash
docker rm -f <container-name>
```

- I start the AnythingLLM container:

```bash
docker start AnythingLLM
```
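The three steps above could be wrapped into one helper. This is a sketch under two assumptions: the container is named AnythingLLM (as in the docker start command), and any other running container that publishes port 3001 is safe to delete. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: free port 3001 for AnythingLLM, then start its container.
# Assumes the container is named "AnythingLLM", as in the `docker start` command above.

# exclude_container NAME: read container names on stdin, print every name except NAME.
exclude_container() {
  grep -vxF -- "$1" || true   # grep exits 1 when nothing is printed; that is not an error here
}

# start_anythingllm [PORT]: remove other running containers publishing PORT, then start AnythingLLM.
start_anythingllm() {
  local port="${1:-3001}" name
  docker ps --filter "publish=${port}" --format '{{.Names}}' \
    | exclude_container "AnythingLLM" \
    | while read -r name; do
        echo "Removing ${name} (publishes port ${port})"
        docker rm -f "$name"
      done
  docker start AnythingLLM
}

# Example: start_anythingllm 3001
```

docker ps lists only running containers, which are the only ones that can hold the port, so a stopped AnythingLLM container is never removed; it is simply started.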

What is AnythingLLM?

AnythingLLM is a free, easy-to-use, and versatile document chatbot. It is made for people who want to chat with, or create, a custom knowledge base using existing documents and websites. It relies on RAG (Retrieval-Augmented Generation): relevant passages are retrieved from the custom knowledge base and supplied to the LLM (Large Language Model) along with each query, which conveniently sidesteps the need to fine-tune the model with LoRA (Low-Rank Adaptation) or QLoRA (Quantised LoRA). The information from the documents and websites is converted into embeddings and saved to a vector database, so the LLM can use this data to improve its answers to my queries. AnythingLLM can work with many users, or just a single user, from one installation. This makes AnythingLLM great for those who want a ChatGPT experience and complete privacy, while supporting multiple users in the same setup.
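To make the retrieval step of RAG more concrete, here is a toy sketch. AnythingLLM itself embeds text with its embedder and stores the vectors in ChromaDB; this sketch swaps in simple keyword overlap as the similarity score, purely for illustration. The function name and scoring rule are hypothetical.

```bash
#!/usr/bin/env bash
# Toy retrieval step of RAG: score each text chunk against a query, best match first.
# Keyword overlap stands in for the vector similarity a real vector database computes.

# score_chunks QUERY < chunks: print "score<TAB>chunk" for each input line, highest score first.
# The score is the fraction of distinct query words found as whole words in the chunk (0.00 to 1.00).
score_chunks() {
  LC_ALL=C awk -v q="$1" '
    BEGIN {
      n = split(tolower(q), raw, "[^a-z0-9]+")
      for (i = 1; i <= n; i++)
        if (raw[i] != "" && !(raw[i] in seen)) { seen[raw[i]] = 1; words[++k] = raw[i] }
    }
    {
      line = " " tolower($0) " "
      gsub(/[^a-z0-9]+/, " ", line)   # normalise punctuation to single spaces
      hits = 0
      for (i = 1; i <= k; i++)
        if (index(line, " " words[i] " ")) hits++
      printf "%.2f\t%s\n", (k ? hits / k : 0), $0
    }' | LC_ALL=C sort -t "$(printf '\t')" -k1,1nr
}

# Example:
# printf 'Office opening hours\nSalary data for systems analysts\n' | score_chunks "systems analyst salary"
```

In a real RAG pipeline, the top-scoring chunks would then be passed to the LLM together with the question.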

Preparing AnythingLLM.

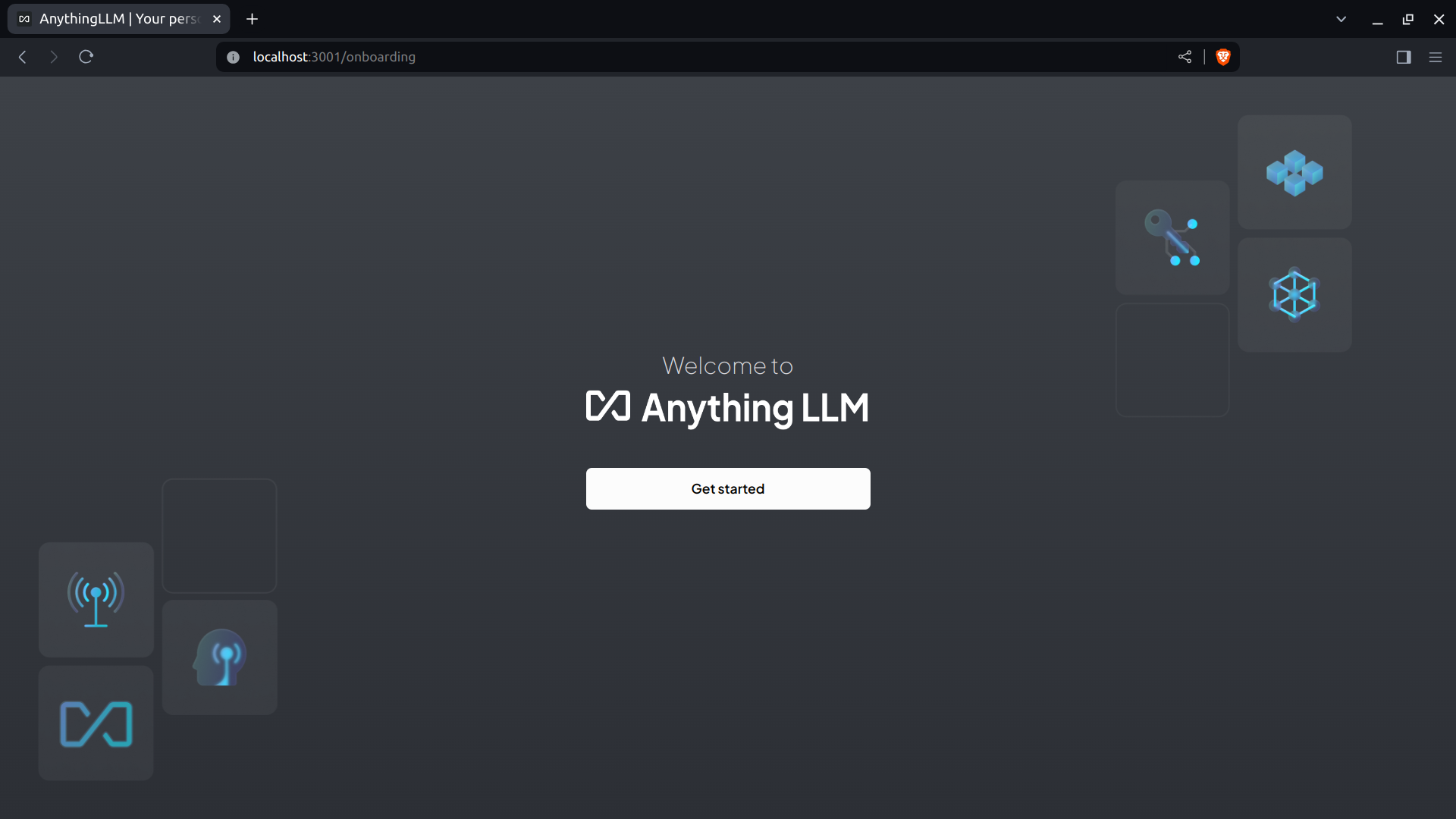

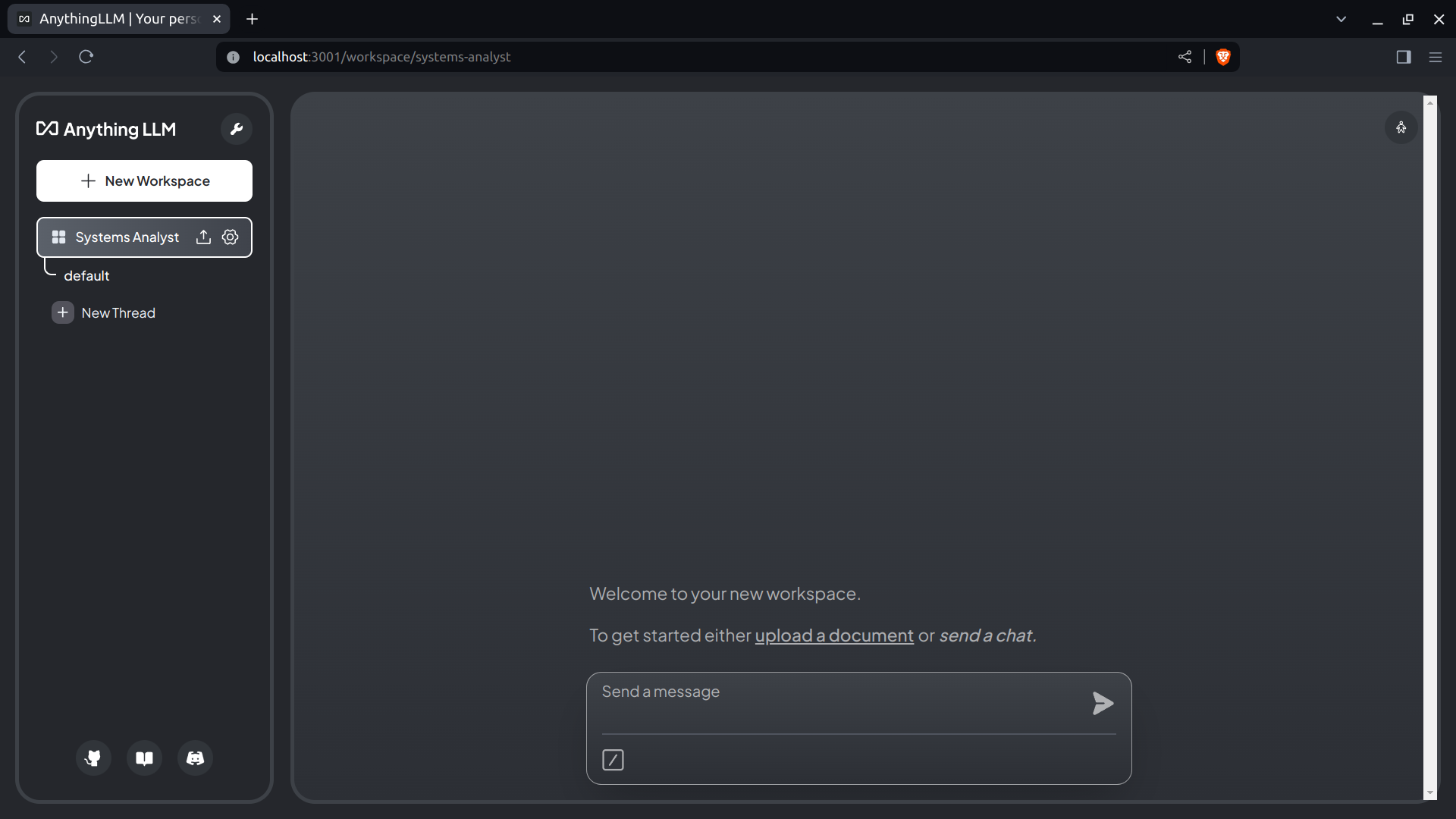

- I open AnythingLLM in a browser:

http://localhost:3001

- On the "Welcome to AnythingLLM" screen, I click the "Get started" button:

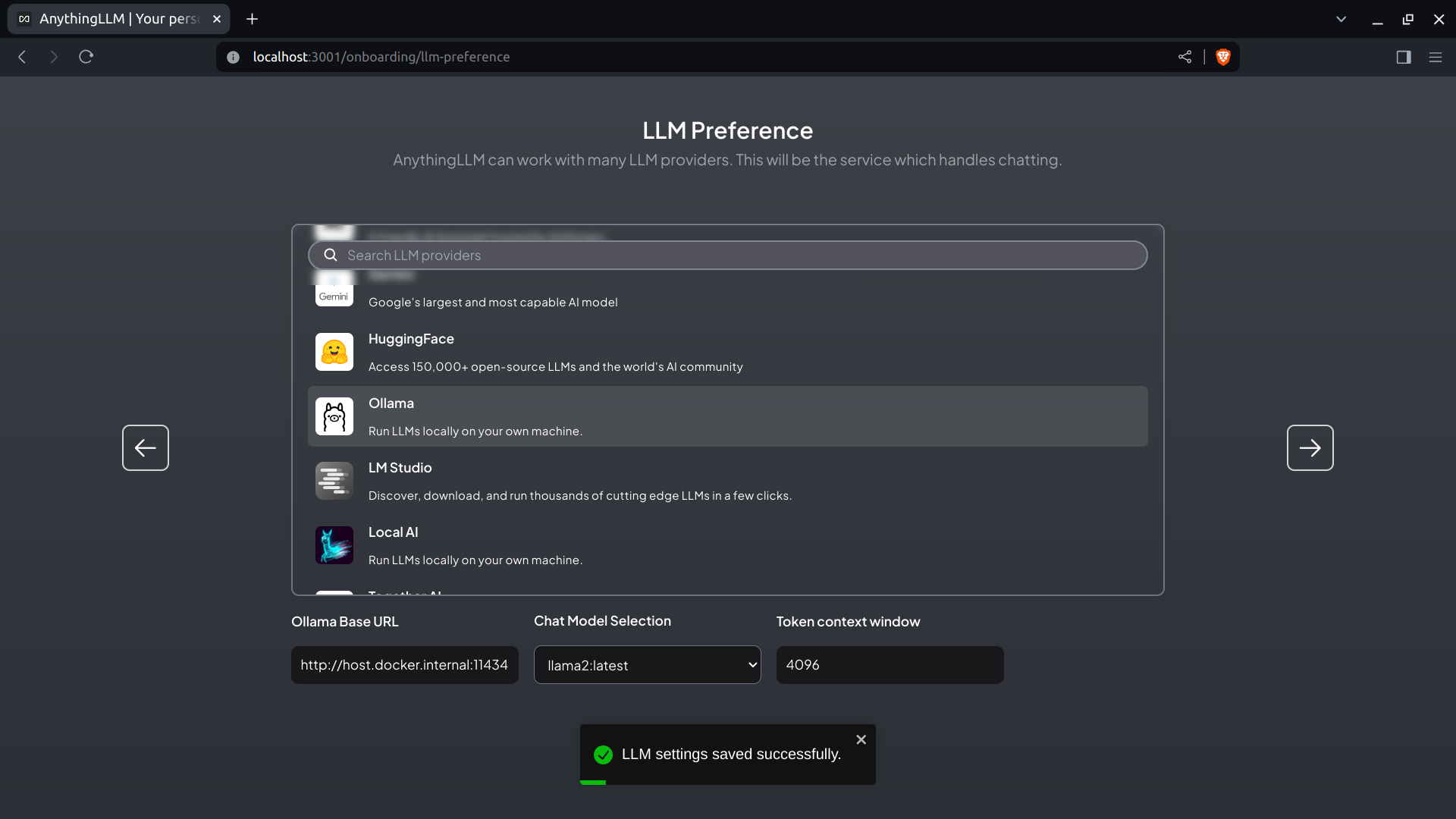

- On the "LLM Preference" screen, I select "Ollama", specify the "Ollama Base URL", and set the "Token context window":

NOTE: The "Chat Model Selection" chooses the Llama2 model by default.

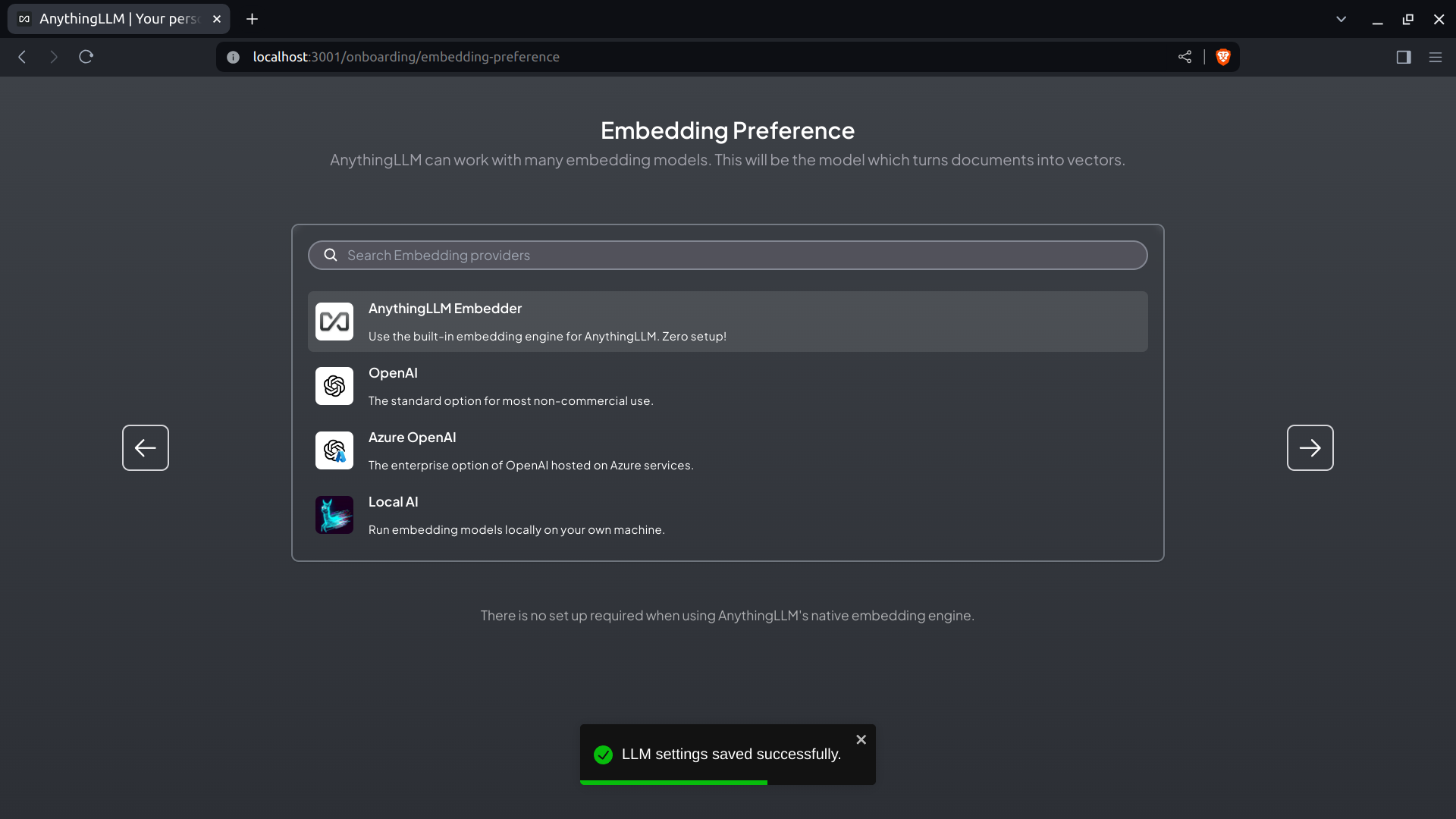

- On the "Embedding Preference" screen, I select the default "AnythingLLM Embedder":

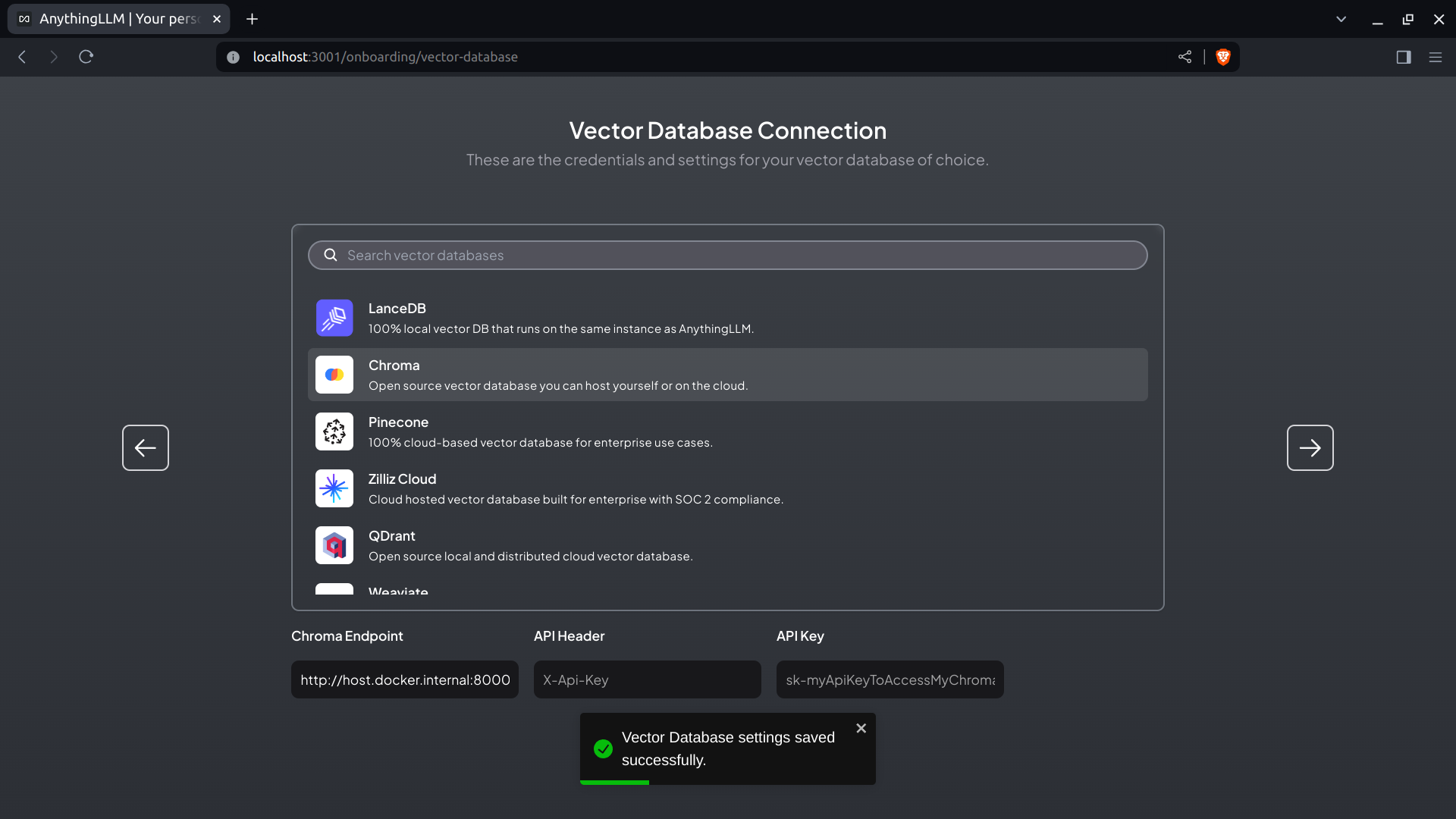

- On the "Vector Database Connection" screen, I select "Chroma" and set the "Chroma Endpoint":

- On the "Custom Logo" screen, I leave the "AnythingLLM" logo:

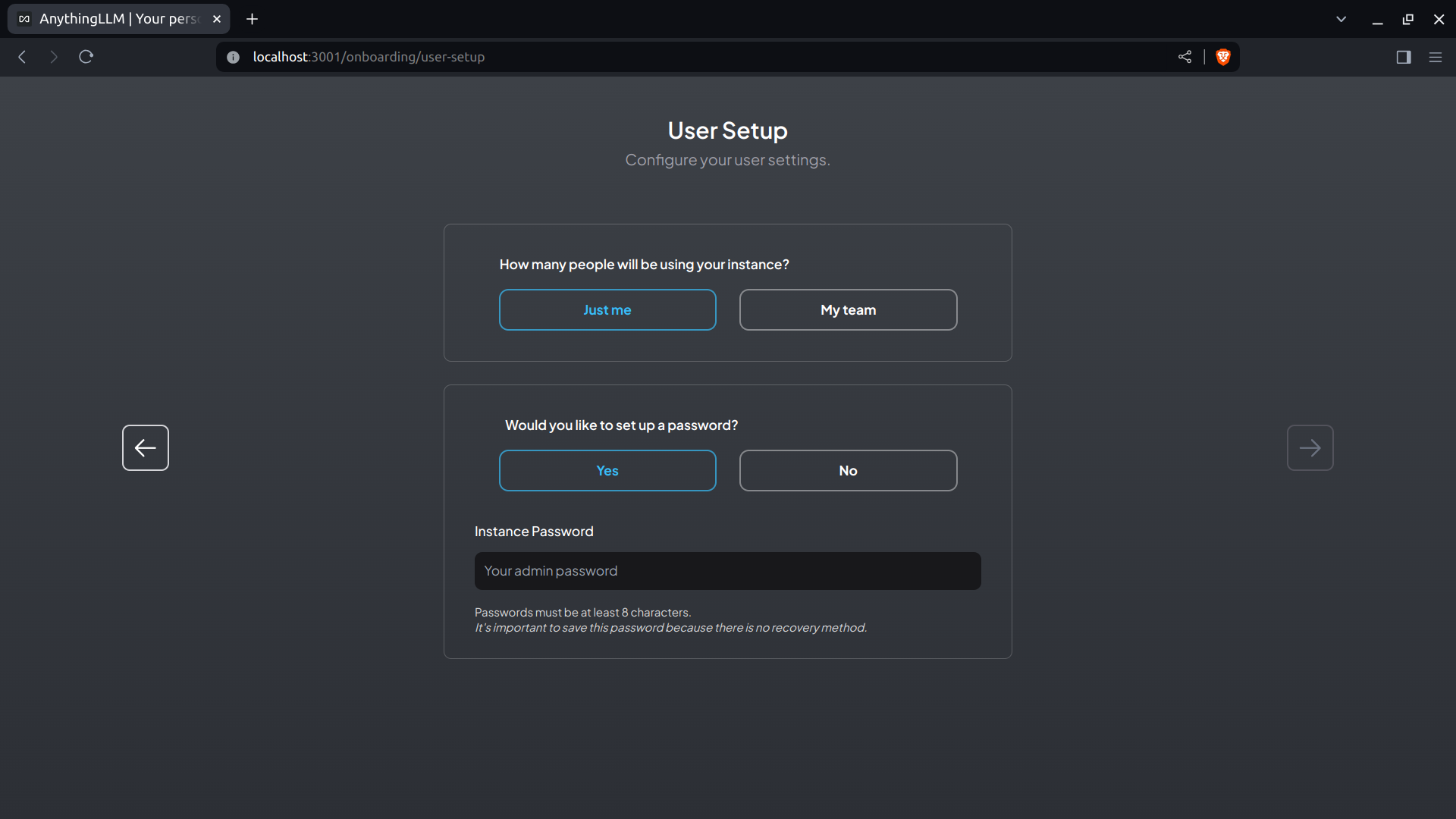

- On the "User Setup" screen, I click the "Just me" button and set a password:

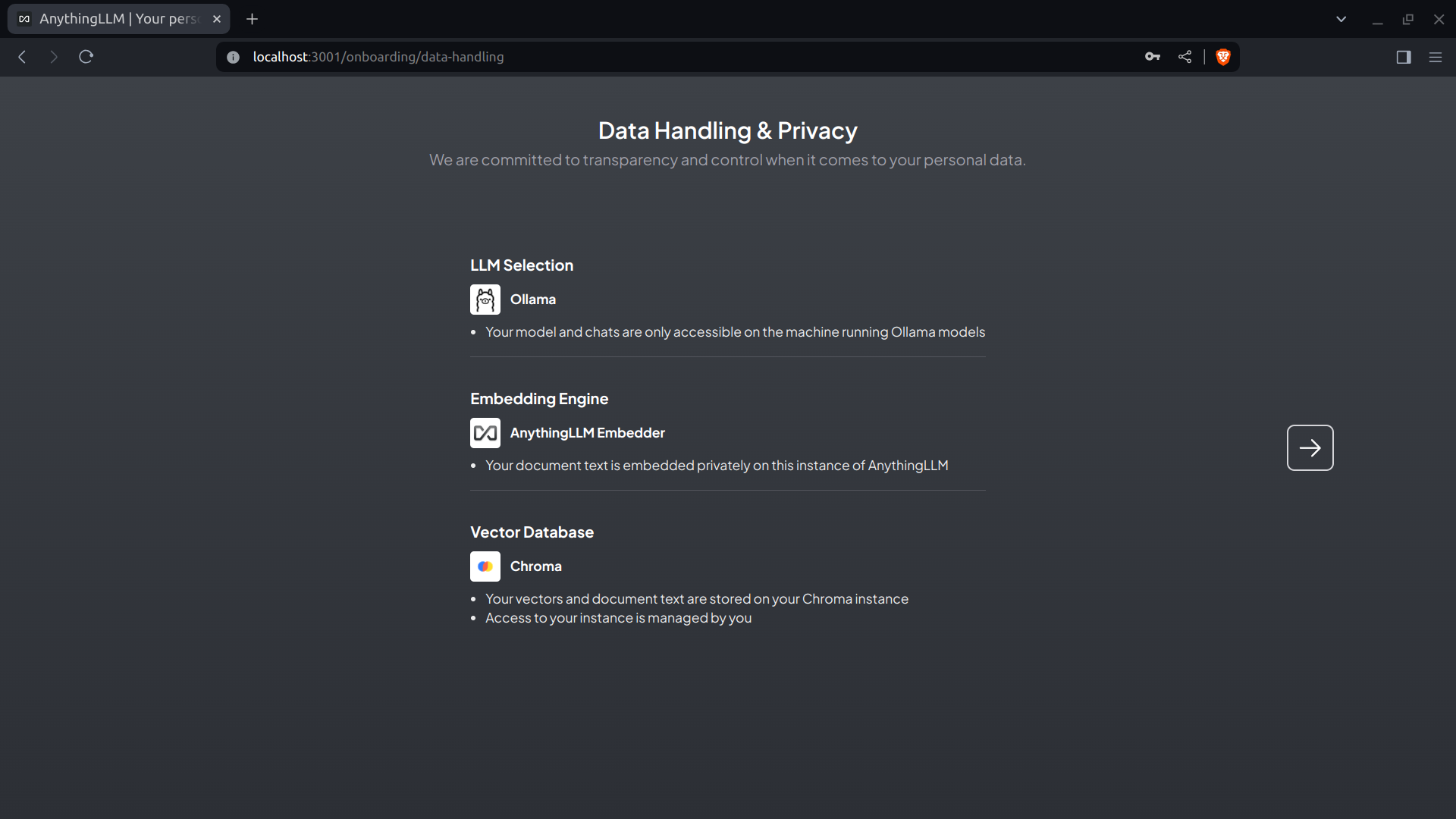

- On the "Data Handling & Privacy" screen, I check my chosen settings:

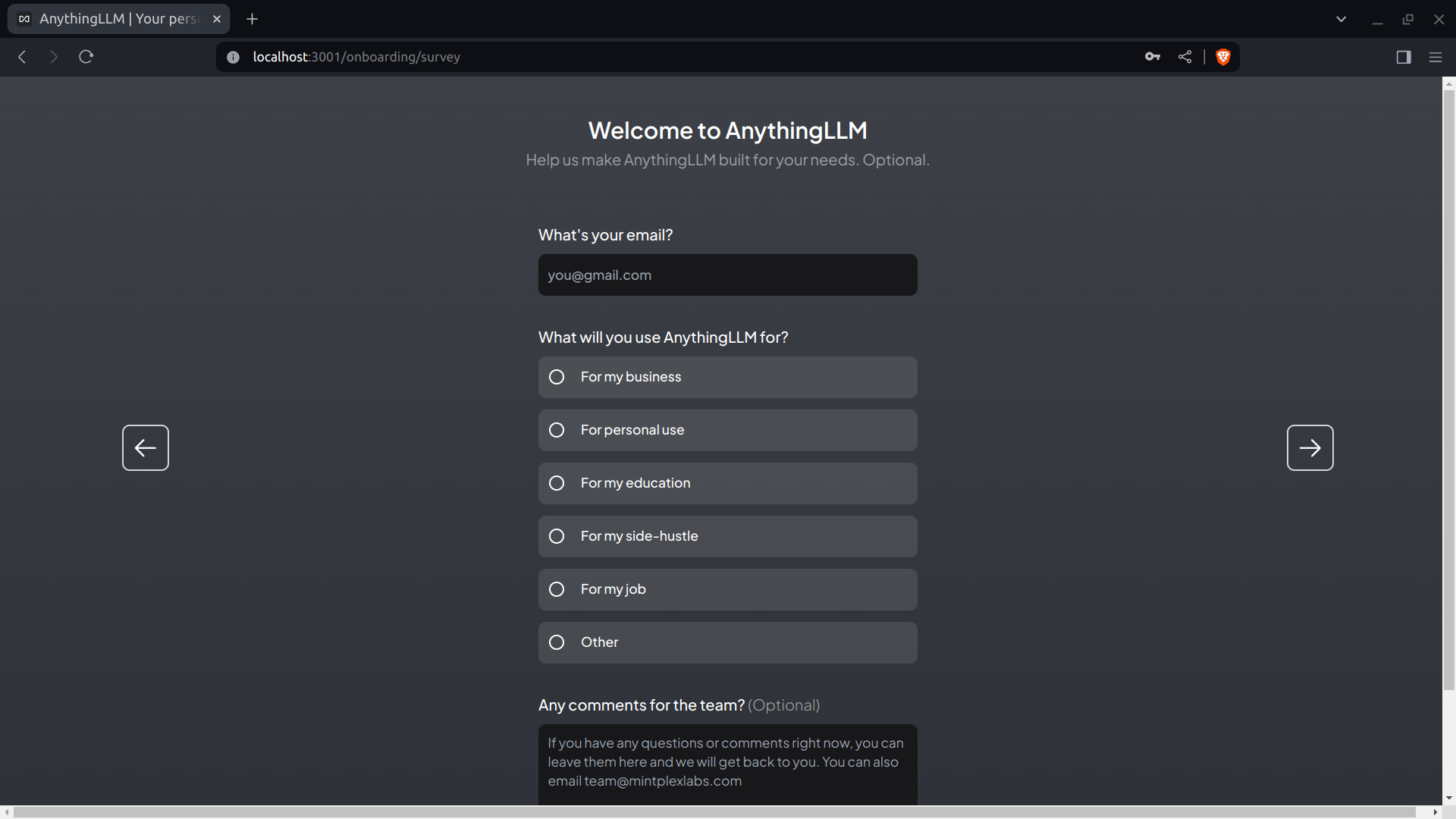

- On the "Welcome to AnythingLLM" screen, I complete the survey:

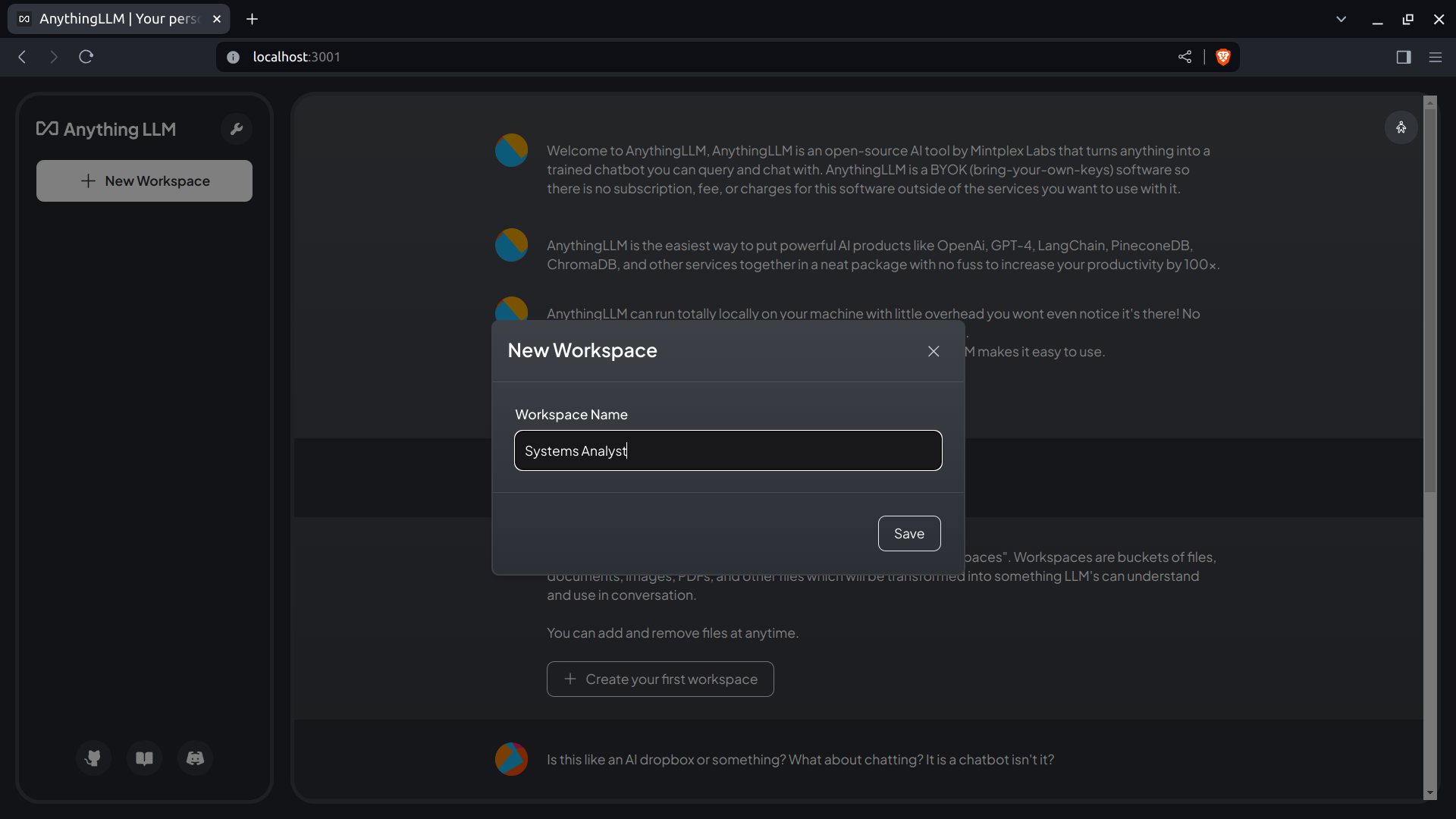

- In the "New Workspace" modal window, I call my first workspace "Systems Analyst":
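On the "LLM Preference" and "Vector Database Connection" screens, the Ollama Base URL and the Chroma Endpoint must reach the host PC from inside the AnythingLLM container. With Docker's default bridge networking, localhost inside a container refers to the container itself, so the host's LAN address is needed instead. A small, hypothetical helper that prints both values, assuming the default ports used in this post:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the endpoint values to enter during AnythingLLM setup.
# Inside a Docker container on the default bridge network, "localhost" is the container,
# so the host PC's LAN IP is used instead. Ports 11434 and 8000 are the defaults used above.

# endpoints_for IP: print the Ollama Base URL and the Chroma Endpoint for a host IP.
endpoints_for() {
  local ip="$1"
  echo "Ollama Base URL: http://${ip}:11434"
  echo "Chroma Endpoint: http://${ip}:8000"
}

# On Debian/Ubuntu, `hostname -I` lists the host's addresses; the first is often the LAN IP.
# Example: endpoints_for "$(hostname -I | awk '{print $1}')"
```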

Tuning the Systems Analyst Workspace.

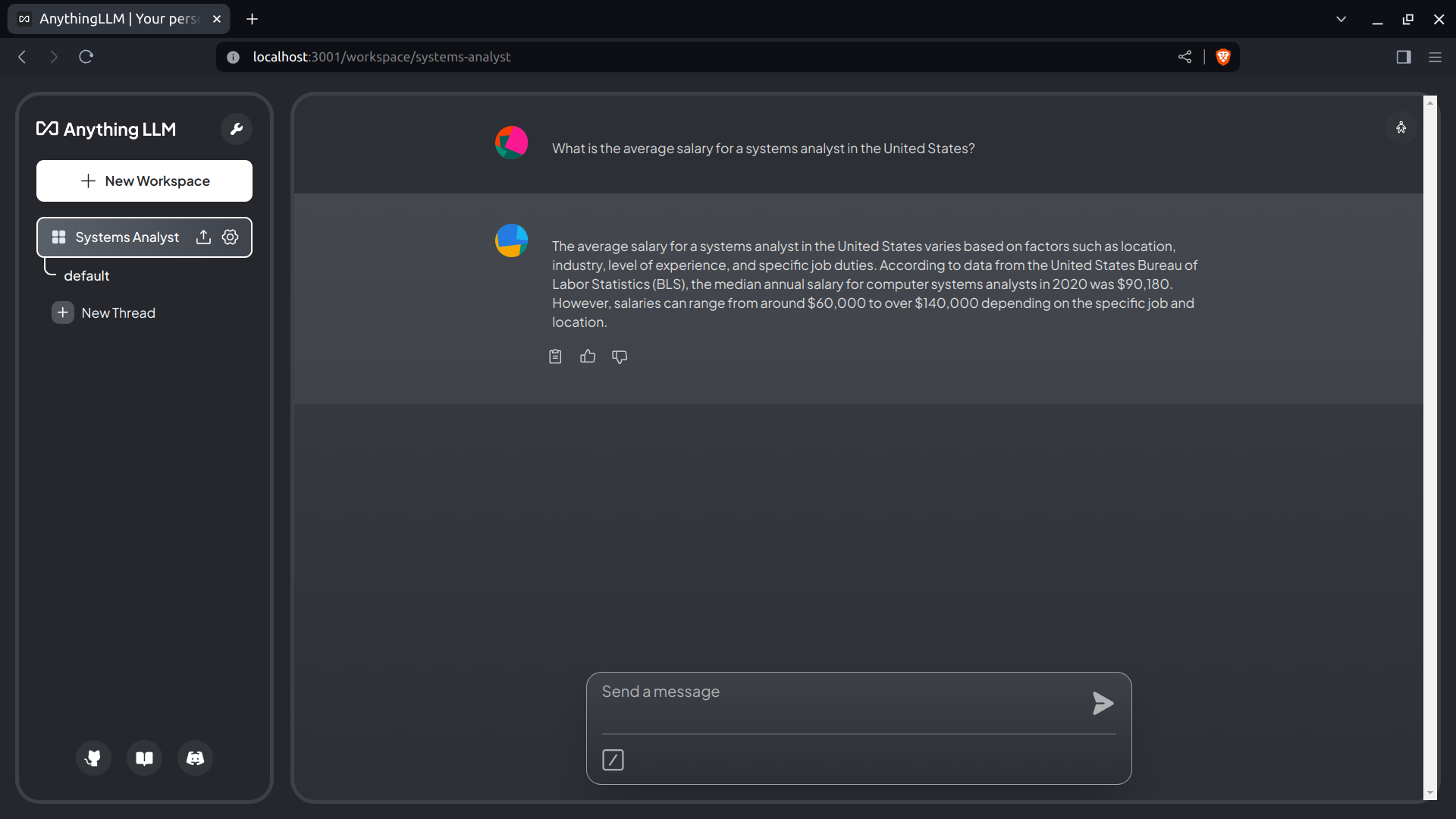

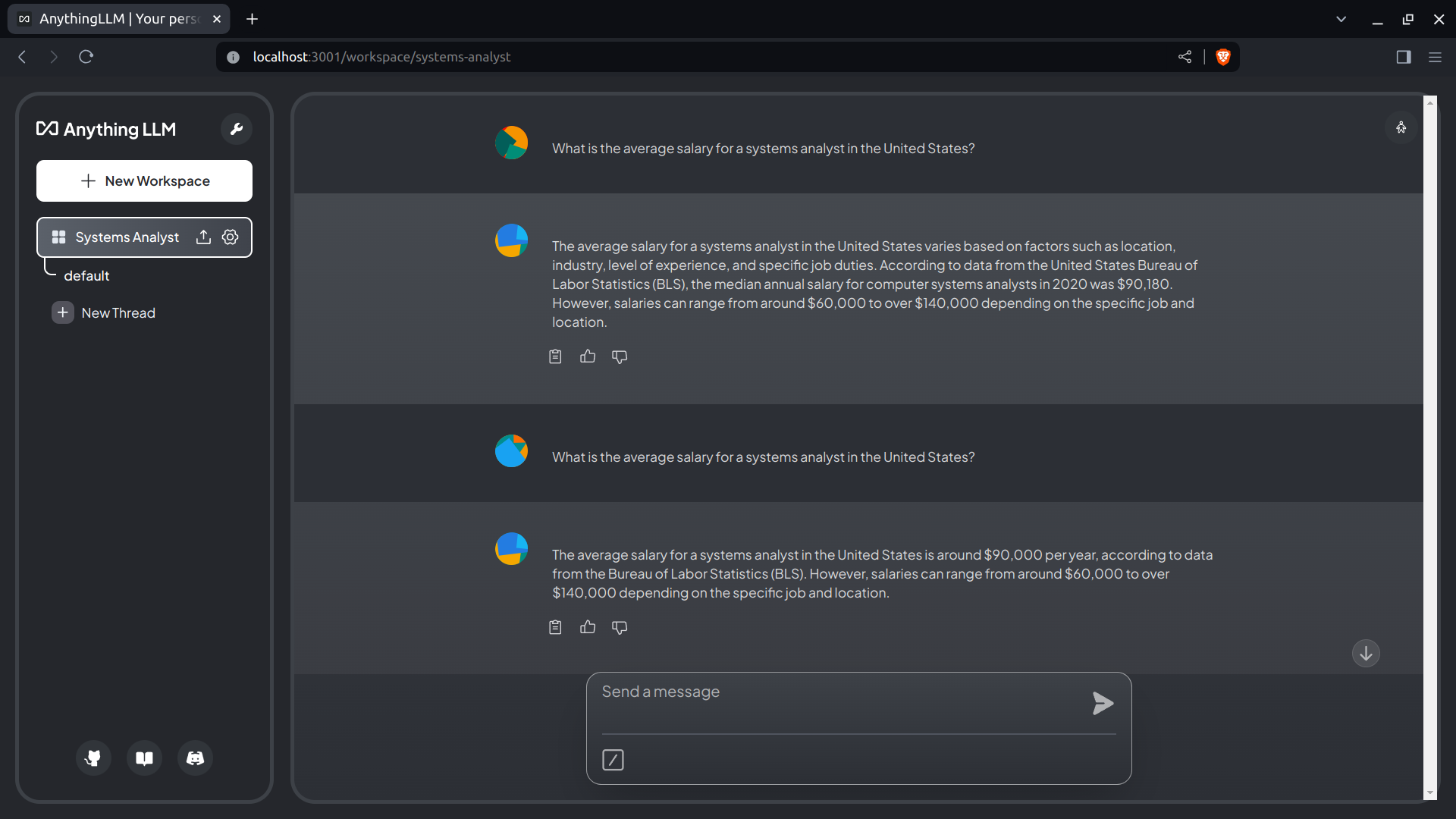

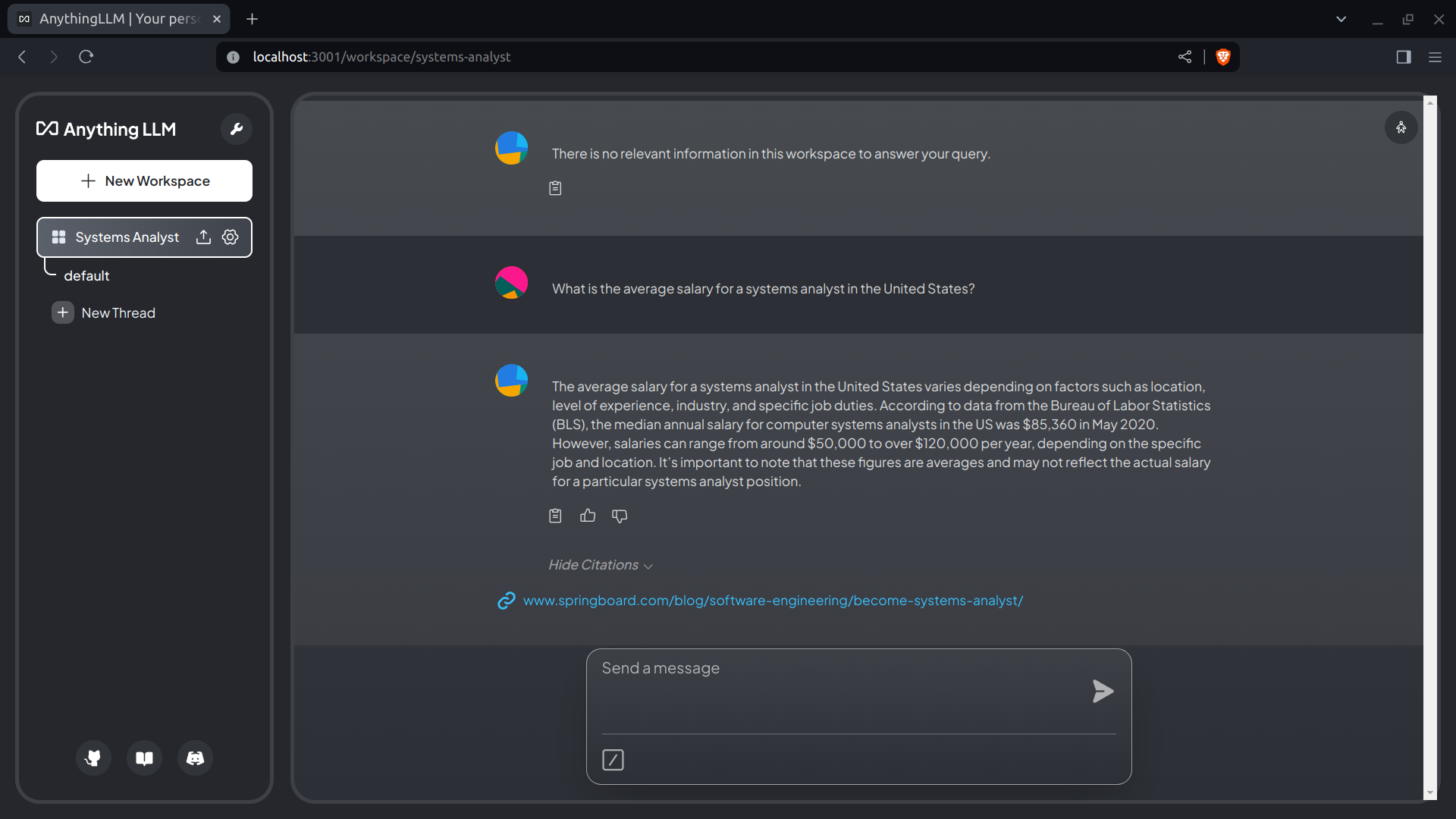

- I ask the following: What is the average salary for a systems analyst in the United States?

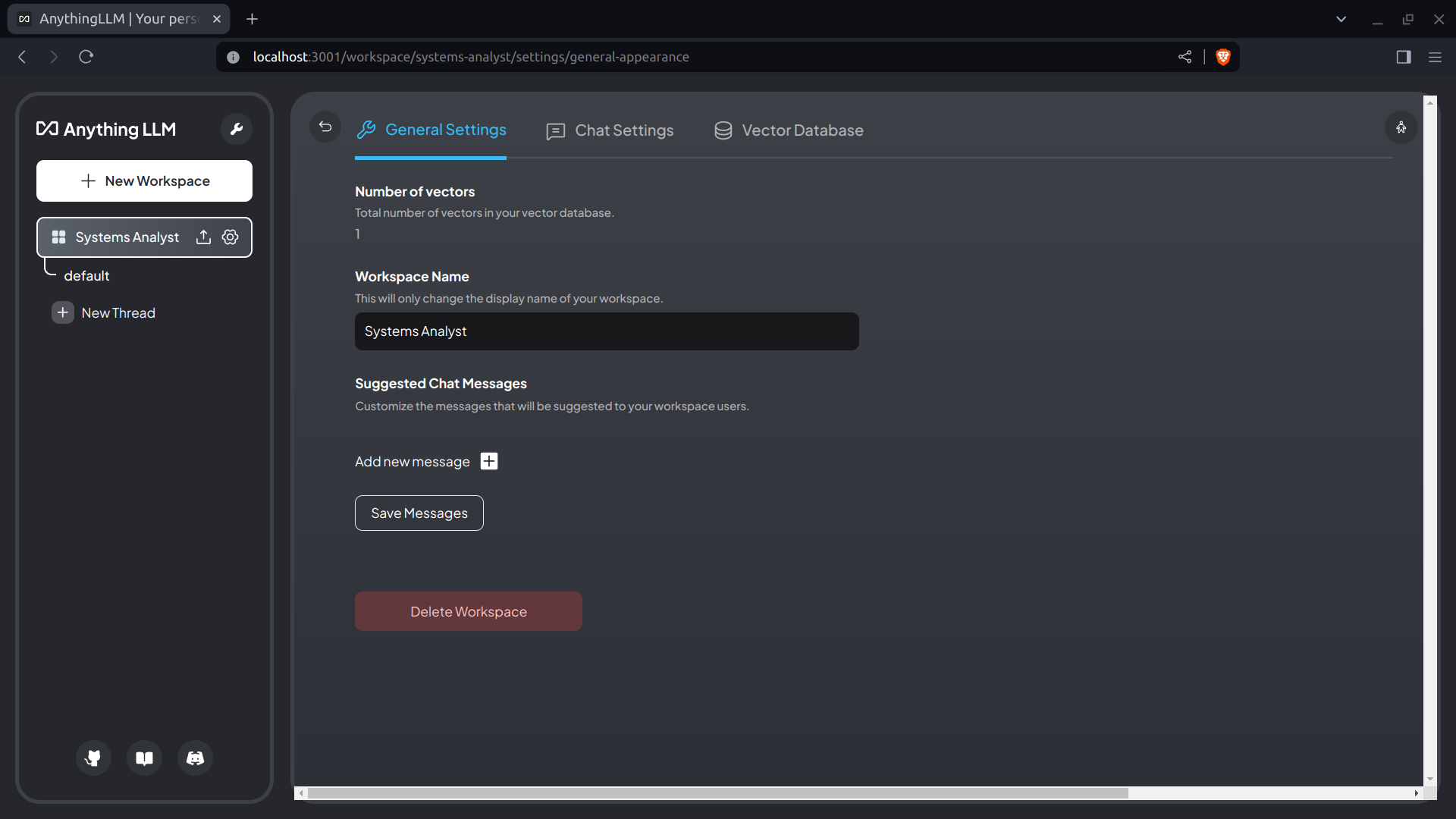

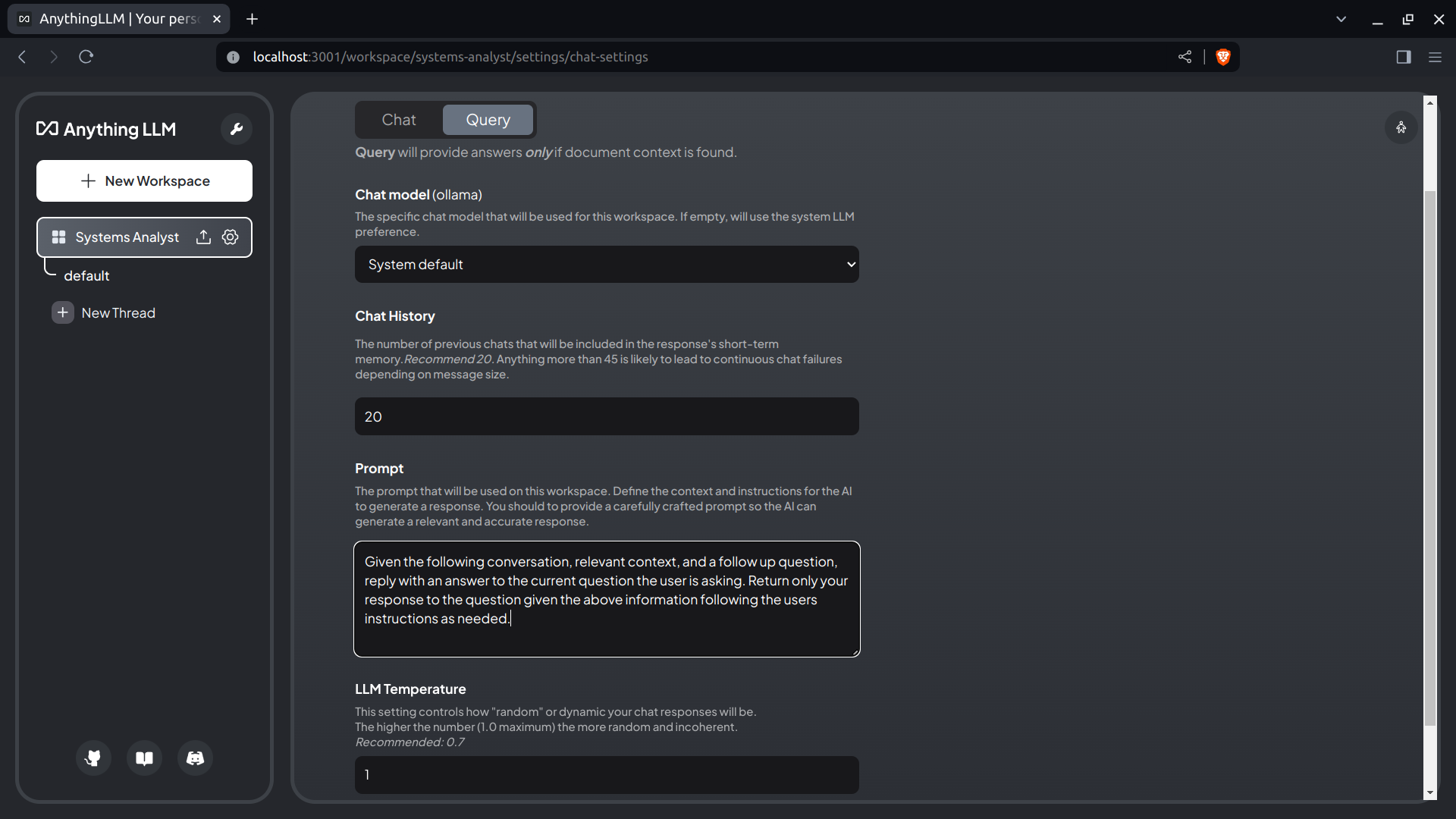

- In the left pane, I click the gear icon next to "Systems Analyst":

NOTE: Along the top of the General Appearance Settings are 3 tabs: General Settings, Chat Settings, and Vector Database.

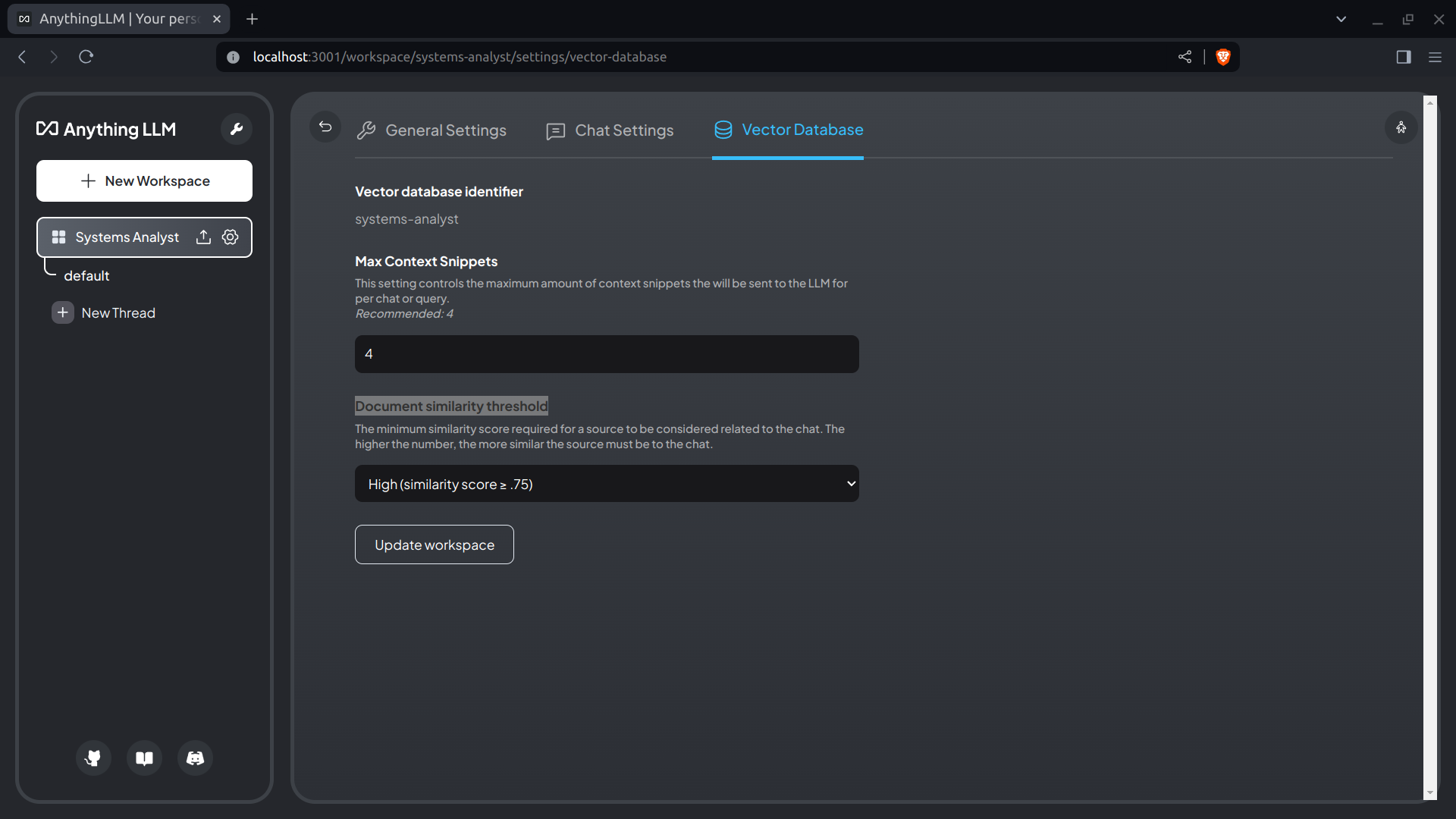

- In the Vector Database tab, I change the "Document similarity threshold" from low to high and click the "Update workspace" button:

- I click the return button (to the left of the tabs) and, on the main screen, I ask the same question again:

NOTE: The answer is essentially the same as last time.

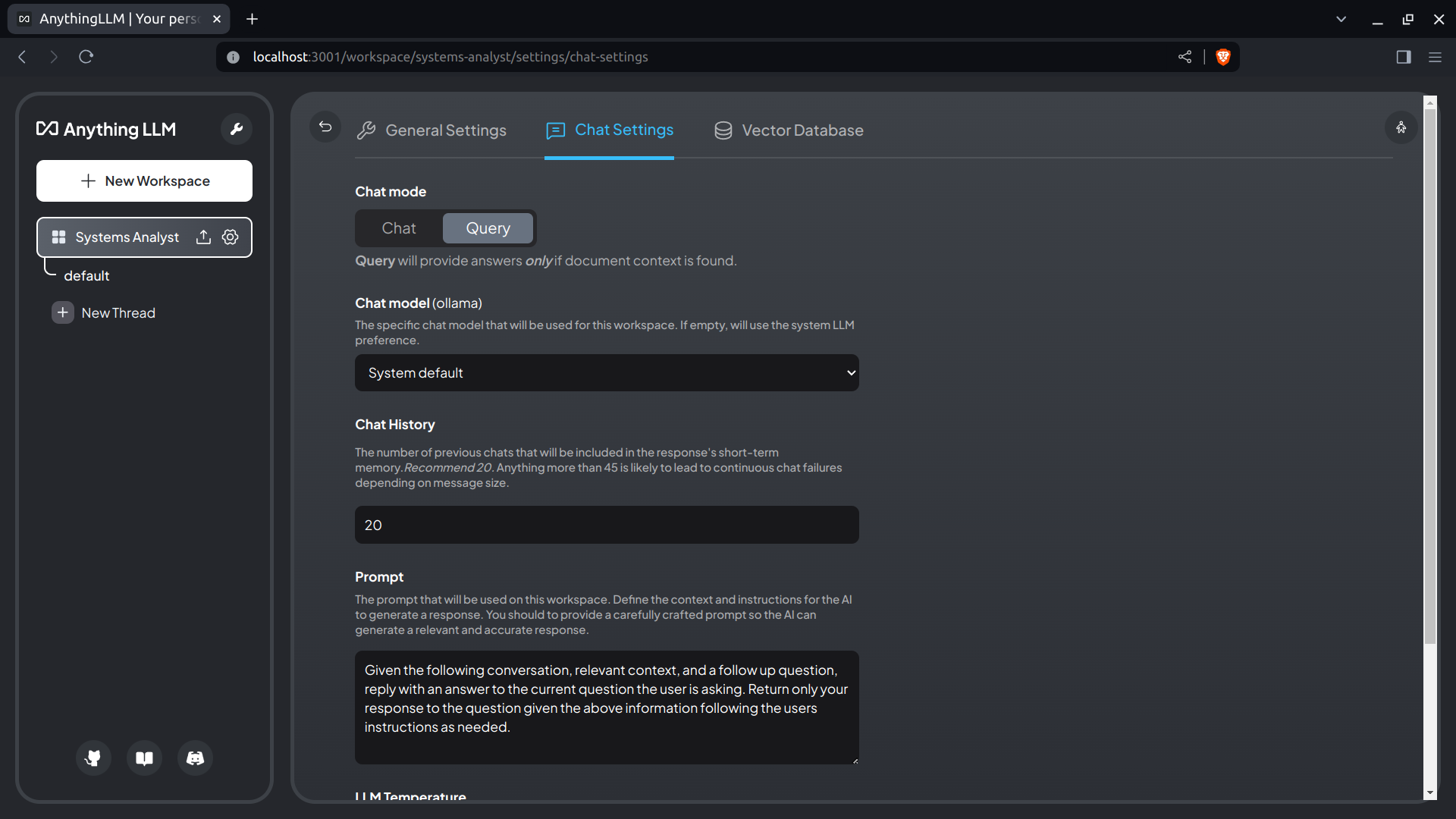

- I click the gear icon again, click the "Chat Settings" tab, change the "Chat mode" from "Chat" to "Query", click the "Update workspace" button at the bottom of the screen, and return to the main screen:

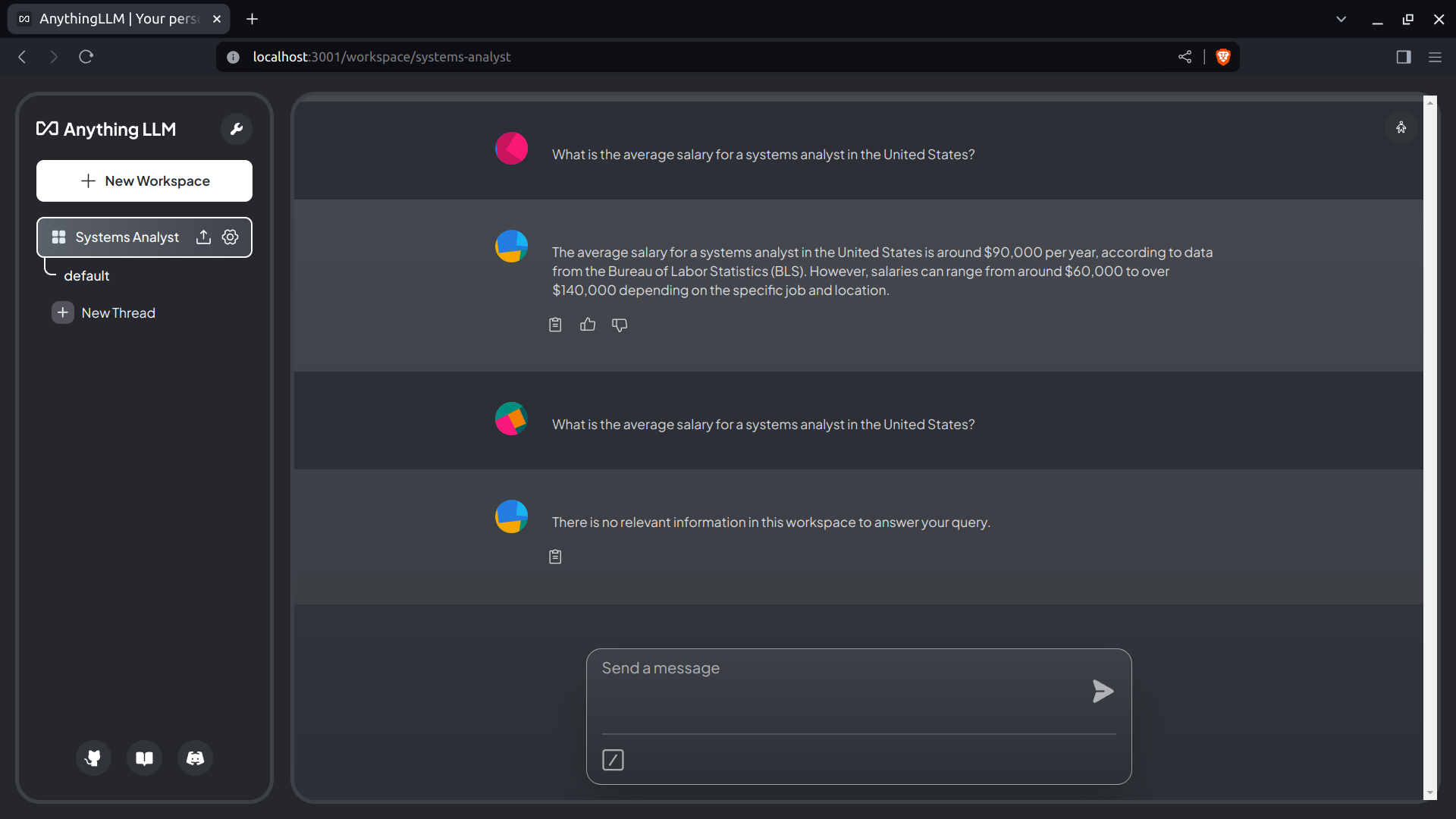

- I ask the same question for a third time:

NOTE: Query mode only works if there are documents available.
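Why did raising the threshold change nothing, and why does Query mode need documents? A hedged sketch of the selection logic as I understand it: retrieved chunks scoring below the similarity threshold are discarded; in Chat mode the LLM can still answer from its general training, but in Query mode the workspace declines when nothing survives. Because the workspace had no documents yet, the threshold had nothing to filter. This is an illustration, not AnythingLLM's code: the function name, the refusal text, and the 0.75 figure for a "high" threshold are assumptions.

```bash
#!/usr/bin/env bash
# Sketch of how a similarity threshold and the chat mode could decide what the LLM sees.
# Input lines are "score<TAB>chunk"; the threshold value and refusal text are illustrative.

# select_context MODE THRESHOLD < scored_chunks
#   Prints the chunks scoring at or above THRESHOLD.
#   In "query" mode with no surviving chunks, prints a refusal and returns 1.
#   In "chat" mode an empty result is fine: the LLM answers from its own training.
select_context() {
  local mode="$1" threshold="$2" kept
  kept="$(LC_ALL=C awk -F '\t' -v t="$threshold" '($1 + 0) >= (t + 0) { print $2 }')"
  if [ -n "$kept" ]; then
    printf '%s\n' "$kept"
  elif [ "$mode" = "query" ]; then
    echo "No relevant information in this workspace."
    return 1
  fi
}

# Example (0.75 is an assumed value for a "high" threshold):
# printf '0.82\tSalary survey excerpt\n0.40\tOffice hours\n' | select_context query 0.75
```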

Adding Documents to the Systems Analyst Workspace.

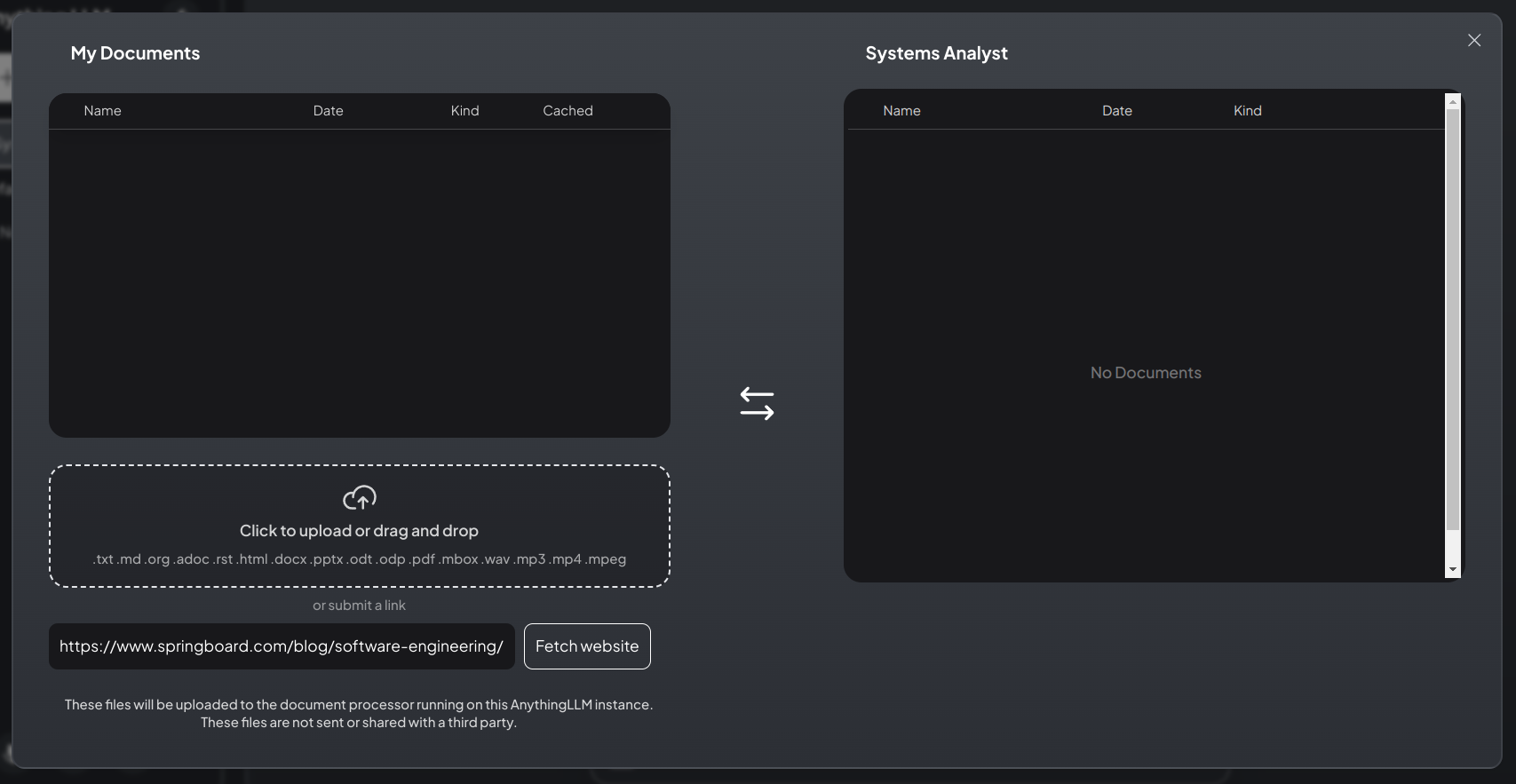

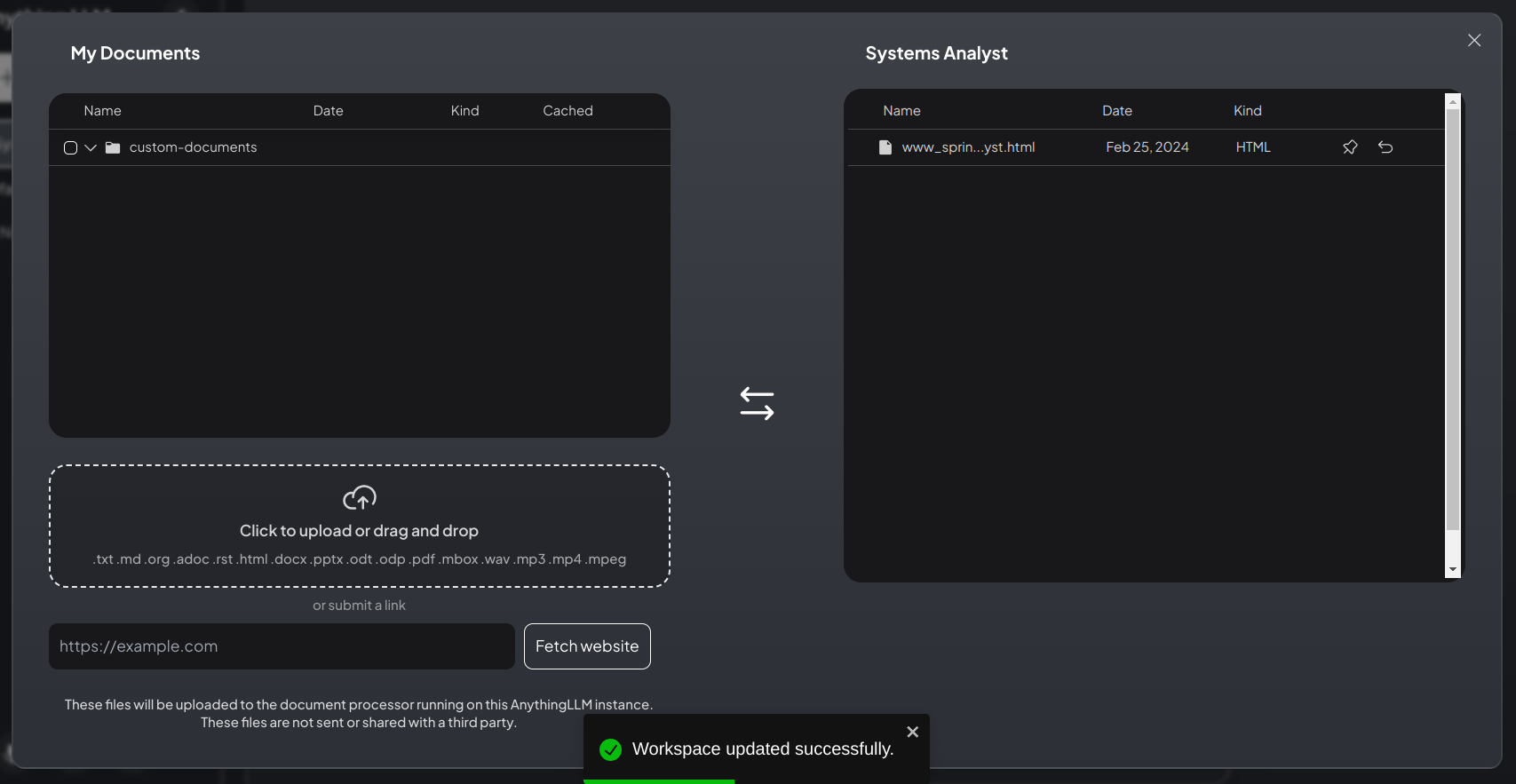

- In the left pane, I click the upload icon next to "Systems Analyst" (to the left of the gear icon):

- At the bottom of the modal window, I add a URL and click the "Fetch website" button:

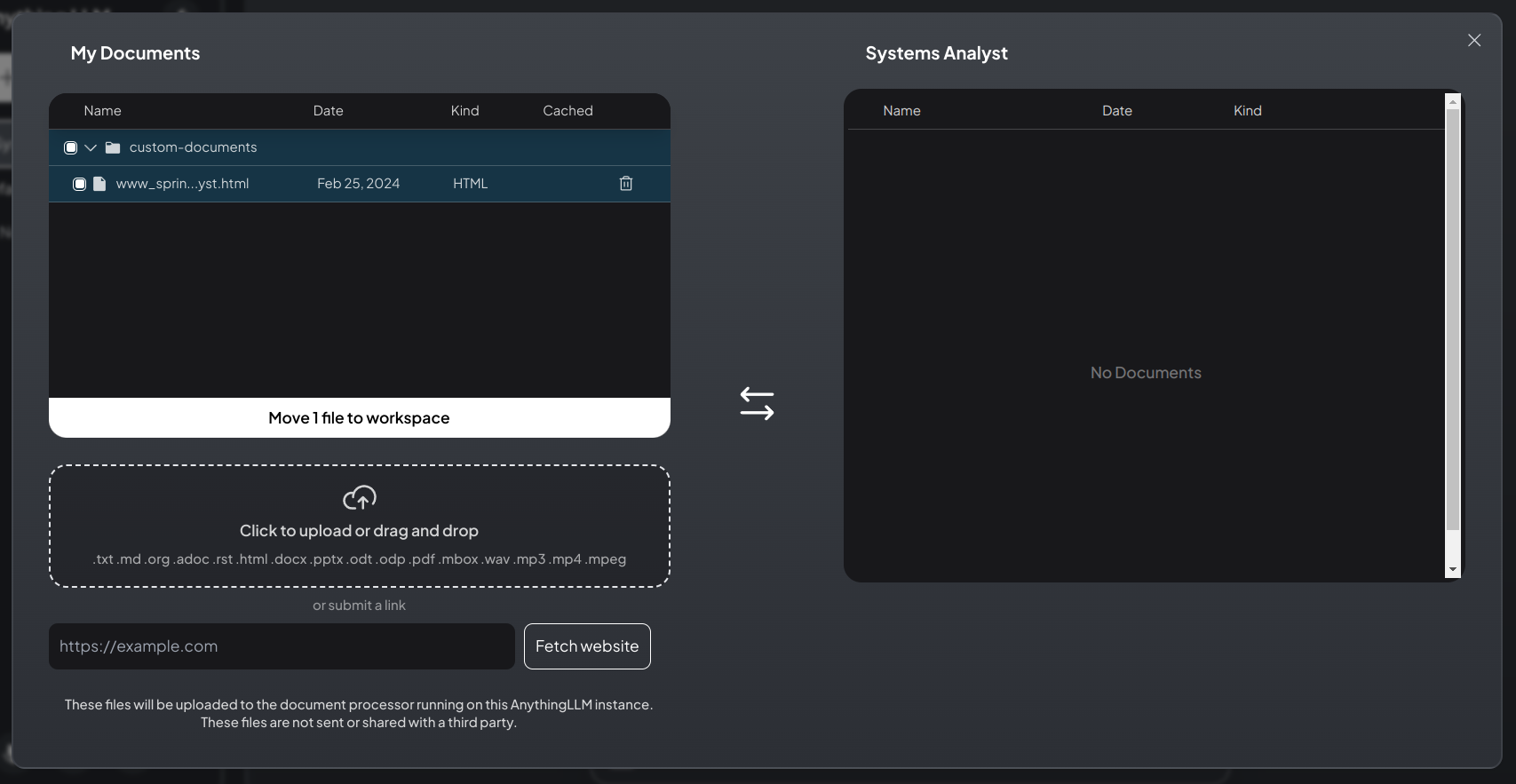

- In the "My Documents" panel, I select the new file and click the "Move 1 file to workspace" button:

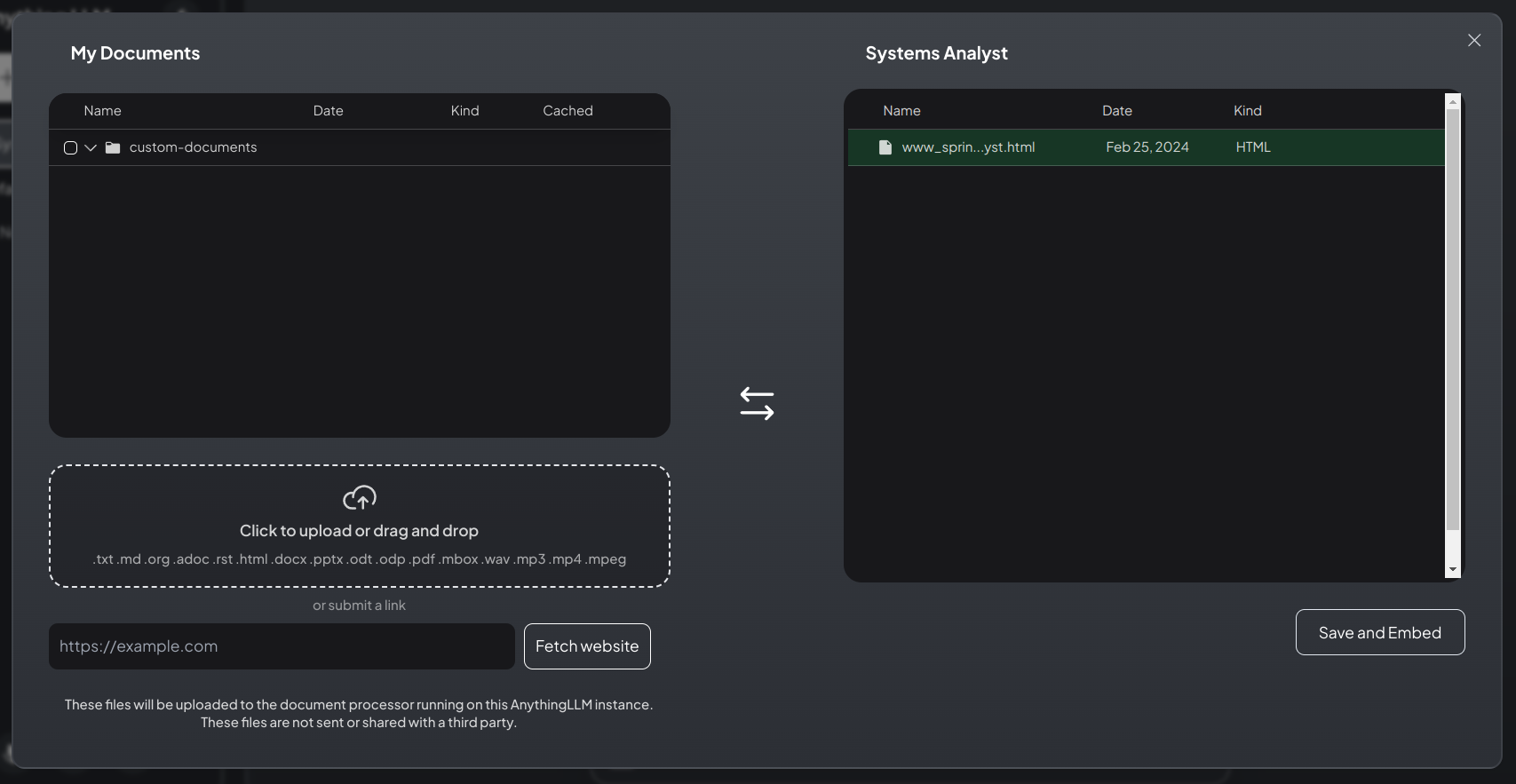

- Under the "Systems Analyst" panel, I click the "Save and Embed" button:

- After the Workspace is updated, I close the modal window:

- Back at the main screen, I ask the same question a fourth time:

NOTE: The workspace is still in Query mode, but now that it contains a document, Llama 2 provided an answer and included a citation from the added website.
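"Save and Embed" is the step that makes the fetched page searchable: broadly, the text is split into chunks, each chunk is embedded, and the vectors are stored in ChromaDB. The sketch below illustrates only the splitting step, using fixed-size, overlapping chunks. The chunk size and overlap are arbitrary placeholders, not AnythingLLM's actual text-splitter settings, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of fixed-size chunking with overlap, the step that precedes embedding.
# SIZE and OVERLAP defaults are placeholder values, not AnythingLLM's real settings.

# chunk_text TEXT [SIZE] [OVERLAP]: print TEXT as chunks of up to SIZE characters,
# each followed by a newline; every chunk repeats the last OVERLAP characters of the previous one.
chunk_text() {
  local text="$1" size="${2:-1000}" overlap="${3:-20}" start=0
  local step=$(( size - overlap ))
  if (( step <= 0 )); then
    echo "chunk_text: OVERLAP must be smaller than SIZE" >&2
    return 1
  fi
  while (( start < ${#text} )); do
    printf '%s\n' "${text:start:size}"
    (( start + size >= ${#text} )) && break   # this chunk reached the end of the text
    start=$(( start + step ))
  done
}

# Example: chunk_text "abcdefghij" 4 1   # abcd, defg, ghij
```

The overlap keeps a sentence that straddles a chunk boundary partly visible in both chunks, which can help retrieval.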

Adding a System Prompt to the Systems Analyst Workspace.

- I click the gear icon, then I click the "Chat Settings" tab:

- In the "Prompt" field is the following:

```text
Given the following conversation, relevant context, and a
follow up question, reply with an answer to the current
question the user is asking. Return only your response to
the question given the above information following the users
instructions as needed.
```

- The "Prompt" field is where I describe the "Systems Analyst" role:

```text
You are a systems analyst. Your skill is the ability to translate
business domain problems into technology domain problems. You will
provide the systems architect with the information she needs to
frame the technology problems for others to understand.
```
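Conceptually, the role description takes the place of the generic instruction, and the workspace then combines it with the retrieved context and my question before everything is sent to Llama 2. The sketch below shows one plausible way to assemble such a prompt; the function name, section labels, and layout are assumptions, not AnythingLLM's exact template.

```bash
#!/usr/bin/env bash
# Sketch: combine a workspace's system prompt, retrieved context, and the user's question
# into the single block of text an LLM receives. The labels are illustrative only.

# build_prompt SYSTEM_PROMPT CONTEXT QUESTION: print the assembled prompt.
build_prompt() {
  local system_prompt="$1" context="$2" question="$3"
  printf '%s\n\nContext:\n%s\n\nQuestion: %s\n' \
    "$system_prompt" "${context:-(no relevant documents found)}" "$question"
}

# Example (retrieved_chunks.txt is a hypothetical file of retrieved text):
# build_prompt "You are a systems analyst. ..." "$(cat retrieved_chunks.txt)" \
#   "What is the average salary for a systems analyst in the United States?"
```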

The Systems Analyst Workspace, when combined with quality documentation, will become a powerful point of contact where business entities can engage with our services.

Other Workspaces.

A Systems Analyst Workspace is just the beginning. There are other roles that need filling, too. Workspaces are a great way to delineate these roles, ensuring each one has access to quality, role-specific documents and is defined by a finely tuned prompt.

The Next Step.

When the Linux version of AnythingLLM is released, the first thing I'm going to do is dive into the APIs. Yes, I can start the ball rolling with the native Windows installation, but... Wait, I should start with the Windows installation!

Right, I've reached the end of this post.

'Bye.

The Results.

In this post, I've walked through the process of setting up AnythingLLM, a versatile document chatbot that can be tailored to specific use-cases and workspaces. I've explored how to start services, prepare the system, and customize workspaces according to specific roles like a Systems Analyst. I also showed how AnythingLLM can enhance its responses by using documents and websites to build a custom knowledge base. The ability to add documents to workspaces and fine-tune settings allows for a highly customized and efficient workflow. As I anticipate the Linux version of AnythingLLM, I can see the vast possibilities that this tool offers for creating interactive, personalized, and private ChatGPT experiences.

In Conclusion.

Have you ever dreamed of having a personalized AI-powered chatbot that can adapt to your specific needs? Let me introduce you to AnythingLLM. It is a free, versatile document chatbot that I can tailor with custom knowledge bases using documents and websites. It's like having a personalized ChatGPT experience that offers complete privacy.

Recently, I've set up AnythingLLM and let me tell you, the process is seamless. I can start services, prepare the system, and customize workspaces for specific roles like a Systems Analyst. AnythingLLM can enhance its responses by using documents and websites to build a custom knowledge base. Imagine the efficiency of having a tool that draws on the documents I provide! The ability to add documents to workspaces and fine-tune settings allows for a highly customized workflow, making it a game-changer for those who need an interactive, personalized, and private ChatGPT experience. As I await the Linux version of AnythingLLM, I can only imagine the vast possibilities that this tool offers.

Who else is excited about the potential of AI-powered chatbots like AnythingLLM? How would you use a personalized document chatbot in your workflow? Let's discuss!

Until next time: Be safe, be kind, be awesome.

#AnythingLLM, #Chatbot, #DocumentChatbot, #ChatGPT, #PersonalizedChatbot, #AI, #WorkspaceCustomization, #SystemsAnalyst, #KnowledgeBase, #AIChatbot


Written by

Brian King


Thank you for reading this post. My name is Brian and I'm a developer from New Zealand. I've been interested in computers since the early 1990s. My first language was QBASIC. (Things have changed since the days of MS-DOS.) I am the managing director of a one-man startup called Digital Core (NZ) Limited. I have accepted the "12 Startups in 12 Months" challenge so that DigitalCore will have income-generating products by April 2024. This blog will follow the "12 Startups" project during its design, development, and deployment, cover the Agile principles and the DevOps philosophy that is used by the "12 Startups" project, and delve into the world of AI, machine learning, deep learning, prompt engineering, and large language models. I hope you enjoyed this post and, if you did, I encourage you to explore some others I've written. And remember: The best technologies bring people together.