Unlocking Data Security: A Comprehensive Guide to Databricks Secret Scopes

Kiran Reddy


2 Easy Ways to Create & Manage Databricks Secret Scopes

Introduction

In today's data-driven landscape, where technology makes it easy to create and store vast volumes of information, data security matters more than ever. Cloud service platforms give users access to data from virtually anywhere, at any time, but that convenience makes robust security measures essential for organizations. Databricks addresses part of this problem with secret scopes: a secure store for the credentials and other sensitive values that notebooks and jobs need, so that they do not have to be written into code. A secret scope can be backed by Databricks itself or by an external secret manager, Azure Key Vault, from which Databricks reads the secrets whenever they are needed.

In this article, you will learn what secret scopes and secrets are, the different ways to create them, and how to work with them.

What is Databricks?

Databricks is a powerful analytics platform built on top of Apache Spark, a high-performance, open-source cluster computing framework. With Databricks, users can develop, run, and share Spark-based applications for efficient data analysis and manipulation. Beyond its Spark foundation, Databricks serves as a unified environment for data science and data engineering, streamlining machine learning workflows and collaboration across teams. By consolidating data operations into a single platform, Databricks removes the need for multiple separate environments, covering everything from data collection to application development.

Why Databricks Secret Scopes?

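```python
# EXAMPLE (not recommended): the storage account access key is hardcoded in the notebook
storage_account_name = 'bhanustoragedemo'
output_container_name = 'databricksdemo'
storage_account_access_key = 'uwN7Vn9KVf38eGLUW5+VZDJc5yTbvNDAB90oxqQRlnmdukqgOIZdrn75Zjg5uuHwgHqsaZgl5ATV+AStJ/04fg=='

# Let Spark authenticate to the ADLS Gen2 account with the account key
spark.conf.set('fs.azure.account.key.' + storage_account_name + '.dfs.core.windows.net', storage_account_access_key)

# List the files under the dump/ folder of the container
dbutils.fs.ls('abfss://' + output_container_name + '@' + storage_account_name + '.dfs.core.windows.net/dump/')
```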

In the example above, we access a file in cloud storage using the storage account access key, and the key is written directly in the code. Storing credentials and other sensitive information in code is inadvisable from a security and privacy standpoint: anyone with access to the code can obtain the key and use it to read or modify your data without your permission.

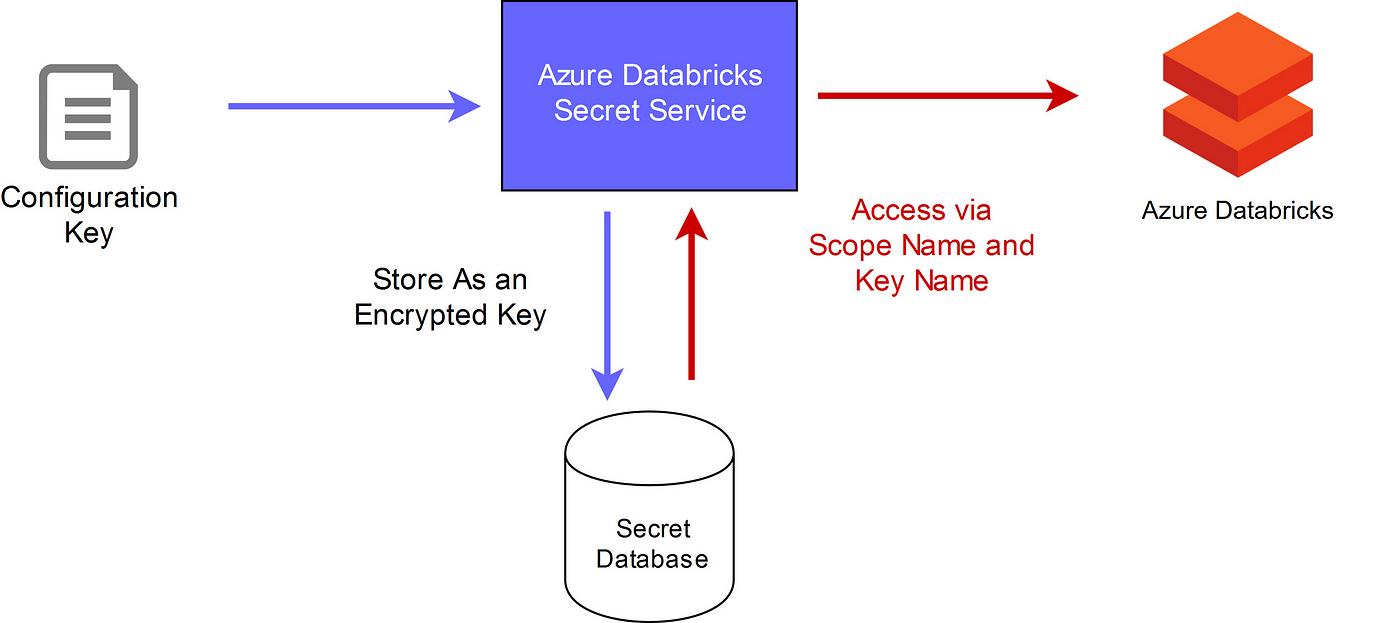

Secret scopes can be backed either by Azure Key Vault or by Databricks itself (created, for example, with the Databricks CLI). In either case, code running in a notebook reads secrets through the Databricks Utilities (dbutils.secrets), so the same notebook can be shared with different teams for different purposes without exposing the credentials it uses.
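One way to keep the key out of the example above is to look it up from a secret scope at run time. The sketch below is illustrative: the scope and secret names in it are hypothetical, and the secret getter is passed in as a parameter, so in a notebook you would pass `dbutils.secrets.get`, while outside Databricks the functions can be tried with a stand-in.

```python
from typing import Callable, Tuple


def storage_account_key_conf(
    storage_account_name: str,
    get_secret: Callable[..., str],
    scope: str,
    key: str,
) -> Tuple[str, str]:
    """Return the Spark config name and value for ADLS Gen2 account-key access.

    The key is fetched through get_secret (in a notebook: dbutils.secrets.get),
    so it never appears in the notebook source.
    """
    conf_name = f"fs.azure.account.key.{storage_account_name}.dfs.core.windows.net"
    return conf_name, get_secret(scope=scope, key=key)


def abfss_uri(container: str, storage_account_name: str, path: str = "") -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen2 container."""
    return f"abfss://{container}@{storage_account_name}.dfs.core.windows.net/{path.lstrip('/')}"


# Usage in a Databricks notebook ("storage_scope" and "storage-access-key" are hypothetical names):
# conf_name, value = storage_account_key_conf(
#     "bhanustoragedemo", dbutils.secrets.get, "storage_scope", "storage-access-key")
# spark.conf.set(conf_name, value)
# dbutils.fs.ls(abfss_uri("databricksdemo", "bhanustoragedemo", "dump/"))
```

Injecting the getter keeps the helper free of Databricks-only globals; the notebook code stays the same apart from where the key comes from.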

What Are Secret Scopes?

Managing secrets begins with creating a secret scope. A secret scope is a collection of secrets identified by a name. It lets you manage, in one place, the secrets used for API authentication or other connections in a workspace. Scope names are considered non-sensitive and are readable by all users in the workspace, so a name should not reveal anything confidential and should be easy for those users to recognize.

What Is a Secret?

Inside a secret scope, each secret is a key-value pair that stores sensitive information such as an access key, access ID, or reference key. The key is the secret's identifying name, unique within the scope, and the value is arbitrary data, interpreted as a string or as bytes.

Scopes are created with permissions controlled by secret ACLs. By default, scopes are created with MANAGE permission for the user who created the scope (the “creator”), which lets the creator read secrets in the scope, write secrets to the scope, and change ACLs for the scope.

Secret Management in Databricks

In Databricks, each workspace can contain secret scopes, and each scope holds one or more secrets used to access third-party data, integrate with applications, or fetch information. You can create as many secret scopes in a workspace as your applications require, up to the limits: a workspace can have at most 100 secret scopes, and a secret scope can hold at most 1000 secrets.

Types of Databricks Secret Scopes

Azure Key Vault-Backed Scope

Databricks-Backed Scope

1) Azure Key Vault-Backed Scope

Azure Key Vault-backed scopes add a level of security and control within the Databricks environment. Azure Key Vault is a cloud service for securely storing and managing sensitive information such as keys, secrets, and certificates. With a Key Vault-backed scope, the secrets stay in Azure Key Vault, where they are encrypted and managed, and Databricks reads them through the scope. This reduces the risk of unauthorized access or exposure and keeps credential management in one place, which makes Key Vault-backed scopes a common choice for organizations that already manage their credentials in Azure Key Vault.

However, Azure Key Vault-backed scopes are only supported on the Azure Databricks Premium plan. Because such a scope is a read-only interface to the vault, the secrets themselves are managed in Azure Key Vault, using the Azure Set Secret REST API or the Azure portal UI.

2) Databricks-Backed Scope

In this method, secrets are stored in an encrypted database owned and managed by the Databricks platform. You can create a Databricks-backed secret scope using the Databricks CLI (version 0.7.1 and above).

Permission Levels of Secret Scopes

There are three permission levels that can be assigned on a secret scope through its ACLs:

Manage: Allowed to change the scope's ACLs (access control lists) and to read and write secrets in the scope. With ACLs, you can grant fine-grained permissions to different users and groups on different secret scopes.

Write: Allowed to read and write secrets in the scope.

Read: Allowed to read secrets in the scope and list the secrets it contains.
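The three levels are cumulative: Manage includes everything Write allows, and Write includes everything Read allows. A minimal sketch of that ordering follows; the action names are hypothetical labels for the capabilities described above, not Databricks API values.

```python
from enum import IntEnum


class ScopePermission(IntEnum):
    """Secret scope permission levels, ordered from least to most privileged."""
    READ = 1
    WRITE = 2
    MANAGE = 3


# Minimum level each (hypothetical) action requires, per the descriptions above.
REQUIRED = {
    "read_secret": ScopePermission.READ,
    "list_secrets": ScopePermission.READ,
    "write_secret": ScopePermission.WRITE,
    "change_acls": ScopePermission.MANAGE,
}


def is_allowed(granted: ScopePermission, action: str) -> bool:
    """Return True if a principal holding `granted` may perform `action`."""
    return granted >= REQUIRED[action]
```

Modeling the levels as an ordered enum makes "at least this level" a single comparison.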

How to Create a Secret Scope & Secret?

To begin with, here are the requirements for creating Databricks secret scopes:

Azure Subscription

Azure Databricks Workspace (create an Azure Databricks workspace)

Azure Key Vault (Create a key vault using the Azure portal)

Azure Databricks Cluster (Runtime 4.0 or above)

Python 3 (3.6 and above)

Configure your Azure key vault instance for Azure Databricks

A) Creating Azure Key Vault-Backed Secret Scopes with Azure UI

In your web browser, visit the Azure Portal and log in to your Azure account.

Now, open your Azure Databricks Workspace and click on Launch Workspace.

Your Azure Databricks workspace URL appears in the address bar of your web browser. To open the page for creating a secret scope, append #secrets/createScope to that URL (for example, `https://<databricks-instance>#secrets/createScope`). The URL is case-sensitive: the S in createScope must be uppercase.

Now enter the required information: the scope name, plus the DNS name (Vault URI) and the resource ID of your key vault, both of which are shown on the key vault's Properties page in the Azure portal.
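The DNS name and the resource ID must refer to the same key vault. A small sketch for checking that before you click Create, assuming the public Azure cloud, where a vault's DNS name has the form https://<vault-name>.vault.azure.net/ (other Azure clouds use different suffixes). The function names are hypothetical.

```python
import re

# Resource ID shape:
# /subscriptions/<id>/resourceGroups/<rg>/providers/Microsoft.KeyVault/vaults/<name>
_RESOURCE_ID = re.compile(
    r"/subscriptions/[^/]+/resourceGroups/[^/]+"
    r"/providers/Microsoft\.KeyVault/vaults/(?P<name>[^/]+)",
    re.IGNORECASE,
)


def vault_dns_name(resource_id: str) -> str:
    """Derive the expected Key Vault DNS name (public Azure cloud assumed)."""
    match = _RESOURCE_ID.fullmatch(resource_id.strip())
    if match is None:
        raise ValueError(f"not a Key Vault resource ID: {resource_id!r}")
    return f"https://{match.group('name').lower()}.vault.azure.net/"


def dns_matches_resource_id(dns_name: str, resource_id: str) -> bool:
    """True if the DNS name and the resource ID refer to the same vault."""
    return dns_name.strip().rstrip("/").lower() + "/" == vault_dns_name(resource_id)
```

Vault names are compared in lowercase because DNS host names are case-insensitive.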

Then click Create. This creates the Azure Key Vault-backed secret scope and connects it to Databricks.

Next, to create secrets in the key vault that backs this scope, follow these steps:

Navigate to your new key vault in the Azure portal

On the Key Vault settings pages, select Secrets.

Select Generate/Import.

On the Create a secret screen choose the following values:

Upload options: Manual.

Name: Type a name for the secret. The secret name must be unique within a Key Vault. The name must be a 1-127 character string, starting with a letter and containing only 0-9, a-z, A-Z, and -. For more information on naming, see Key Vault objects, identifiers, and versioning

Value: Type a value for the secret. Key Vault APIs accept and return secret values as strings.

Leave the other values to their defaults. Select Create.

Once you receive the message that the secret was created successfully, you can select it in the list.

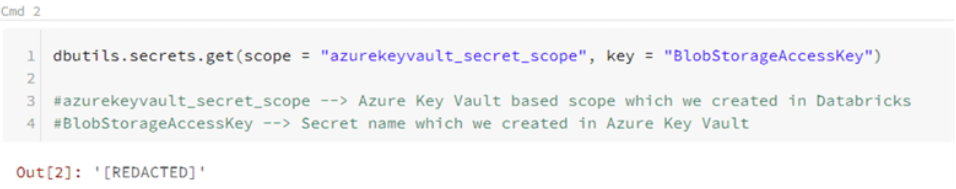
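The Key Vault naming rule above can be checked locally before you create the secret. A minimal sketch (the function name is hypothetical). Note that a name such as BLB_Strg_Access_Key, used later for a Databricks-backed scope, would be rejected here, because Key Vault secret names cannot contain underscores.

```python
import re

# 1-127 characters: a letter first, then only letters, digits, and hyphens.
_KV_SECRET_NAME = re.compile(r"[A-Za-z][A-Za-z0-9-]{0,126}")


def is_valid_key_vault_secret_name(name: str) -> bool:
    """Check a name against the Key Vault secret naming rule described above."""
    # fullmatch avoids the trailing-newline loophole that "$" would allow
    return _KV_SECRET_NAME.fullmatch(name) is not None
```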

To check whether Databricks Secret Scope and Secret are created correctly, we use them in a notebook:

In your Databricks workspace, click on Create Notebook.

Enter the Name of the Notebook and preferred programming language.

Click on the Create button, and the Notebook is created.

Enter the code in the notebook:

```python
dbutils.secrets.get(scope = "azurekeyvault_secret_scope", key = "BlobStorageAccessKey")
```

In the above code, azurekeyvault_secret_scope is the name of the secret scope you created in Databricks, and BlobStorageAccessKey is the name of the secret that you created in Azure Key Vault. If you display the returned value in the notebook, Databricks shows [REDACTED] instead of the secret itself.

B) Databricks-Backed Scopes

A Databricks-backed secret scope is stored in (backed by) an encrypted database owned and managed by Azure Databricks. The secret scope name:

Must be unique within a workspace.

Must consist of alphanumeric characters, dashes, underscores, @, and periods, and may not exceed 128 characters.

The names are considered non-sensitive and are readable by all users in the workspace.
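The scope-name rules above translate directly into a regular expression. A minimal sketch (hypothetical helper name); it treats the uniqueness check as case-sensitive, which is an assumption rather than documented behavior.

```python
import re
from typing import Iterable

# Alphanumerics, dashes, underscores, "@", and periods; 1 to 128 characters.
_SCOPE_NAME = re.compile(r"[A-Za-z0-9_.@-]{1,128}")


def check_scope_name(name: str, existing_scopes: Iterable[str] = ()) -> None:
    """Raise ValueError if `name` breaks the naming rules or is already taken.

    Uniqueness is checked case-sensitively (an assumption).
    """
    if _SCOPE_NAME.fullmatch(name) is None:
        raise ValueError(f"invalid secret scope name: {name!r}")
    if name in set(existing_scopes):
        raise ValueError(f"secret scope already exists: {name!r}")
```

In practice, existing_scopes could come from the output of databricks secrets list-scopes.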

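You can create a Databricks-backed secret scope with the Databricks CLI or, alternatively, with the Secrets API. The CLI commands in this article use the syntax of the legacy Databricks CLI (the databricks-cli package installed with pip below). The newer standalone Databricks CLI (version 0.205 and above) uses different syntax for some commands, for example `databricks secrets put-secret <scope> <key>`.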
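For the Secrets API route, each CLI operation corresponds to a REST call authenticated with a personal access token. The sketch below only builds requests for the Secrets API 2.0 endpoints secrets/scopes/create and secrets/put using Python's standard library; the host and token are placeholders and the helper names are hypothetical. Passing a request to urllib.request.urlopen would send it.

```python
import json
import urllib.request
from typing import Optional


def _post(host: str, token: str, endpoint: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST to the Databricks REST API."""
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/{endpoint}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def create_scope_request(host: str, token: str, scope: str,
                         initial_manage_principal: Optional[str] = None) -> urllib.request.Request:
    # Counterpart of: databricks secrets create-scope --scope <scope> [--initial-manage-principal users]
    body = {"scope": scope}
    if initial_manage_principal is not None:
        body["initial_manage_principal"] = initial_manage_principal
    return _post(host, token, "secrets/scopes/create", body)


def put_secret_request(host: str, token: str, scope: str, key: str,
                       value: str) -> urllib.request.Request:
    # Counterpart of: databricks secrets put --scope <scope> --key <key>
    return _post(host, token, "secrets/put", {"scope": scope, "key": key, "string_value": value})


# To send one: urllib.request.urlopen(create_scope_request(host, token, "BlobStorage"))
```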

Requirements

- Python Version >= 3.7

To install the CLI, run:

```shell
pip install --upgrade databricks-cli
```

This installs the Databricks CLI. Next, set up authentication with either a username and password or a personal access token; the CLI stores these credentials in ~/.databrickscfg.

Now, open the Databricks Workspace.

To access information through CLI, you have to authenticate.

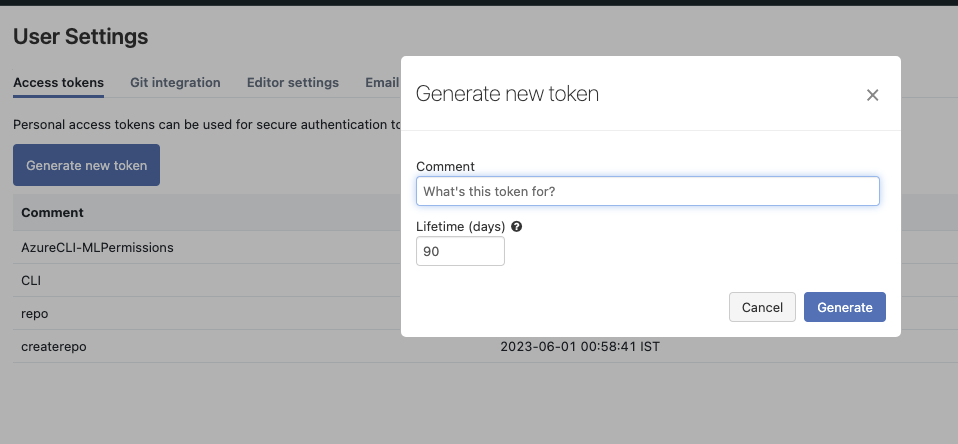

For authenticating and accessing the Databricks REST APIs, you have to use a personal access token.

Generate a new personal access token

To generate the access token, click the user profile icon in the top right corner of the Databricks workspace and select User Settings.

Select Generate New Token.

Enter a comment describing the token and its lifetime (the number of days the token remains valid).

Click Generate.

The personal access token is now generated. Copy it and store it somewhere safe, because it is not shown again.

Then configure the CLI with one of the following commands:

```shell
# Prompts for the host, username, and password
databricks configure
# Prompts for the host and a personal access token
databricks configure --token
```

After running databricks configure --token:

When prompted for the Databricks host, enter your workspace URL.

Enter your generated token to authenticate.

Now, you are successfully authenticated and all set for creating Secret Scopes and Secrets using CLI.

Multiple connection profiles are also supported:

```shell
databricks configure --profile <profile> [--token]
```

A connection profile can then be used like this:

```shell
databricks workspace ls --profile <profile>
```

To test that your authentication information works, try a quick command such as databricks workspace ls.
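With token authentication, the CLI writes one INI-style section per profile to ~/.databrickscfg ([DEFAULT] plus one section per --profile), each holding a host and a token. A sketch that reads a profile with Python's configparser, assuming that layout; the function name is hypothetical. Keep in mind that configparser merges keys from [DEFAULT] into every other section, so a profile missing a key inherits the default value.

```python
import configparser
from pathlib import Path
from typing import Dict, Optional


def read_profile(profile: str = "DEFAULT", text: Optional[str] = None) -> Dict[str, str]:
    """Return host and token for a profile from ~/.databrickscfg (or from `text`).

    A missing config file simply yields empty values for the DEFAULT profile.
    """
    parser = configparser.ConfigParser()
    if text is None:
        parser.read(Path.home() / ".databrickscfg")  # silently skips a missing file
    else:
        parser.read_string(text)
    # has_section() is always False for DEFAULT, so only check named profiles
    if profile != "DEFAULT" and not parser.has_section(profile):
        raise KeyError(f"profile not found: {profile}")
    section = parser[profile]
    return {
        "host": section.get("host", fallback=""),
        "token": section.get("token", fallback=""),
    }
```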

1) Create a Databricks-backed secret scope

To create a scope using the Databricks CLI:

```shell
databricks secrets create-scope --scope <scope-name>
```

By default, scopes are created with MANAGE permission for the user who created the scope. If your account does not have the Premium plan, you must override that default and explicitly grant the MANAGE permission to “users” (all users) when you create the scope:

```shell
databricks secrets create-scope --scope <scope-name> --initial-manage-principal users
```

After you run either of the above commands, the Databricks secret scope is created.

Creating Secrets Inside the Secret Scope using Databricks CLI

Enter the following command to create a secret inside the scope:

```shell
databricks secrets put --scope <scopeName> --key <SecretName>
```

For example:

```shell
databricks secrets put --scope BlobStorage --key BLB_Strg_Access_Key
```

Here, BlobStorage is the name of the secret scope and BLB_Strg_Access_Key is the name of the secret, so this command creates a secret called BLB_Strg_Access_Key inside the scope called BlobStorage.

When you run the command, a text editor (for example, Notepad on Windows) opens for you to enter the secret value. Enter the value, save the file, and close the editor. If no error is reported, the secret has been stored.

This is how you create a Databricks secret scope and secret using the Databricks CLI.

2.1) How to List Secret Scopes

This command lists all the secret scopes in the workspace:

```shell
databricks secrets list-scopes
```

2.2) Listing the Secrets inside a Secret Scope

This command lists the secrets present inside the secret scope named BlobStorage:

```shell
databricks secrets list --scope BlobStorage
```

3) How to Delete a Secret Scope

This command deletes the secret scope named BlobStorage that was created earlier:

```shell
databricks secrets delete-scope --scope BlobStorage
```
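The listing and deletion commands also have Secrets API 2.0 counterparts: GET secrets/scopes/list, GET secrets/list with a scope query parameter, and POST secrets/scopes/delete. A sketch that builds (but does not send) those requests with the standard library; the host, token, and helper names are placeholders.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def _api_request(host: str, token: str, endpoint: str,
                 query: Optional[dict] = None,
                 body: Optional[dict] = None) -> urllib.request.Request:
    """Build a Secrets API 2.0 request: POST when a JSON body is given, otherwise GET."""
    url = f"{host.rstrip('/')}/api/2.0/{endpoint}"
    if query:
        url += "?" + urllib.parse.urlencode(query)
    headers = {"Authorization": f"Bearer {token}"}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, headers=headers,
                                  method="POST" if data is not None else "GET")


def list_scopes_request(host: str, token: str) -> urllib.request.Request:
    # Counterpart of: databricks secrets list-scopes
    return _api_request(host, token, "secrets/scopes/list")


def list_secrets_request(host: str, token: str, scope: str) -> urllib.request.Request:
    # Counterpart of: databricks secrets list --scope <scope>
    return _api_request(host, token, "secrets/list", query={"scope": scope})


def delete_scope_request(host: str, token: str, scope: str) -> urllib.request.Request:
    # Counterpart of: databricks secrets delete-scope --scope <scope>
    return _api_request(host, token, "secrets/scopes/delete", body={"scope": scope})
```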

Creating a mount point using Databricks secrets

```python
# Define your storage account name, container name, and secret scope name
storage_account_name = "<storage-account-name>"
container_name = "<container-name>"
secret_scope_name = "<secret-scope-name>"

# Retrieve the storage account access key from the secret scope
# (key is the name of the secret that holds the access key)
access_key = dbutils.secrets.get(scope=secret_scope_name, key="<storage-account-access-key>")

# Define the path for the mount point
mount_point = "/mnt/<mount-point-name>"

# Define the URL with storage account name and container name
url = "abfss://" + container_name + "@" + storage_account_name + ".dfs.core.windows.net/"

# Mount the storage using the access key from the secret scope,
# skipping the call if something is already mounted at mount_point
if not any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
    dbutils.fs.mount(
        source=url,
        mount_point=mount_point,
        extra_configs={"fs.azure.account.key." + storage_account_name + ".dfs.core.windows.net": access_key},
    )
```

In our upcoming article, we'll delve deeper into the concept of mount points, exploring their functionality and significance within the Databricks environment.

Conclusion

The integration of Key Vaults, secret scopes, and secrets within Databricks gives developers a robust framework for securely managing and sharing sensitive information across their organizations. With these features, developers can grant access at different levels of their organization while keeping credentials out of their code.

Throughout this article, we have explored the fundamental concepts and practical implementation of Databricks secrets and secret scopes. By understanding how to create and work with these components, developers are equipped to improve the security and efficiency of their Databricks workflows.

As organizations continue to embrace data-driven strategies, the ability to manage secrets securely becomes increasingly critical. With Databricks Secret Scopes, developers can establish a solid foundation for safeguarding sensitive information, enabling collaboration and innovation within their teams while maintaining the highest standards of security.

Stay Connected

Stay tuned for more insightful discussions, and feel free to share your thoughts in the comments! We're also eager to hear what topics you'd like us to cover in upcoming articles.

Until then, keep exploring, practicing, and expanding your knowledge. The world of databases awaits your exploration!


Written by

Kiran Reddy


Passionate Data Engineer with a degree from Lovely Professional University. Enthusiastic about leveraging data to drive insights and solutions.