Day 23/100 100 Days of Code

Chris Douris


I found a bug where some text was not detected properly by the find() method, because find() is case-sensitive. I tried lowercasing the keyword with the following code:

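```cpp
#include <algorithm>
#include <cctype>

// To lower case
// Source: https://stackoverflow.com/questions/313970/how-to-convert-an-instance-of-stdstring-to-lower-case
// The lambda takes unsigned char because passing a negative char to std::tolower is undefined behavior
std::transform(keyword.begin(), keyword.end(), keyword.begin(),
               [](unsigned char c) { return std::tolower(c); });
```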
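Another way to get a case-insensitive find() without modifying the keyword is to compare characters through std::tolower inside std::search. This is a minimal sketch, and `find_ignore_case` is a hypothetical helper name, not part of the project. It folds case one byte at a time, just like the transform call, so it only helps with ASCII letters.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical helper: returns the position of `needle` in `haystack`,
// ignoring ASCII case, or std::string::npos if it is not found.
// Bytes are compared one at a time, so multi-byte UTF-8 characters must match exactly.
std::size_t find_ignore_case(const std::string& haystack, const std::string& needle) {
    if (needle.empty()) {
        return 0;  // same result std::string::find gives for an empty needle
    }
    auto equal_ignore_case = [](char a, char b) {
        // Cast to unsigned char: passing a negative char to std::tolower is undefined
        return std::tolower(static_cast<unsigned char>(a)) ==
               std::tolower(static_cast<unsigned char>(b));
    };
    auto it = std::search(haystack.begin(), haystack.end(),
                          needle.begin(), needle.end(), equal_ignore_case);
    if (it == haystack.end()) {
        return std::string::npos;
    }
    return static_cast<std::size_t>(it - haystack.begin());
}
```

For example, `find_ignore_case("Hello World", "WORLD")` returns 6, while `std::string("Hello World").find("WORLD")` returns `npos`.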

Unfortunately, lowercasing with std::tolower() didn't work as I wanted, because it converts one byte (char) at a time and cannot convert non-English characters, which UTF-8 encodes as several bytes. I tried using std::locale and converting the keyword string to a wide string, but that required the <codecvt> header, which has been deprecated since C++17. I decided to avoid codecvt because it wasn't working as intended either. The plan is to start using wstrings instead of strings, which should help the application support multiple languages properly.
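To illustrate the problem, here is a small sketch that lowercases a string byte by byte, the same way the transform call does, and compares how one non-English character is stored in a std::string and in a std::wstring. The Greek capital alpha (U+0391) is just an example character I picked; in the default "C" locale, std::tolower leaves every byte of a multi-byte UTF-8 character unchanged. Note that switching to wstrings alone does not lowercase anything: std::towlower would still be needed, and its result for non-ASCII characters depends on the current C locale.

```cpp
#include <algorithm>
#include <cctype>
#include <string>

// Lowercases a string one byte at a time, like the std::transform call.
// In the default "C" locale std::tolower only maps 'A'-'Z', so the bytes
// of a multi-byte UTF-8 character pass through unchanged.
std::string to_lower_bytes(std::string s) {
    std::transform(s.begin(), s.end(), s.begin(), [](unsigned char c) {
        return static_cast<char>(std::tolower(c));
    });
    return s;
}

// GREEK CAPITAL LETTER ALPHA (U+0391), chosen only as an example:
// as UTF-8 in a std::string it takes two bytes (0xCE 0x91),
// while a std::wstring stores it as a single wchar_t element.
const std::string alpha_utf8 = "\xCE\x91";
const std::wstring alpha_wide = L"\u0391";
```

Here `to_lower_bytes("HTML")` gives `"html"`, but `to_lower_bytes(alpha_utf8)` returns the same two bytes it was given.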

I also changed the ParseContent method so that it accepts only a string containing the website's HTML content and returns all the anchor links it finds.

```cpp
#include <algorithm>
#include <cctype>
#include <cstdlib>
#include <string>
#include <vector>

#include <lexbor/dom/dom.h>
#include <lexbor/html/html.h>

// serialize_node() and the urls member are presumably declared elsewhere
// in the Scraper class (not shown here).
std::vector<std::string> Scraper::ParseContent(std::string content) {
    lxb_status_t status;
    lxb_dom_element_t* body = nullptr;
    lxb_dom_element_t* gather_collection = nullptr;
    lxb_html_document_t* document = nullptr;
    lxb_dom_collection_t* collection = nullptr;
    lxb_html_parser_t* parser = nullptr;
    std::vector<std::string> output;

    // Initialize parser
    parser = lxb_html_parser_create();
    status = lxb_html_parser_init(parser);
    if (status != LXB_STATUS_OK) {
        exit(EXIT_FAILURE);
    }

    // Convert the content to lowercase, byte by byte (only ASCII letters change).
    // Note that this also lowercases the href values of the links.
    std::transform(content.begin(), content.end(), content.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });

    // Copy the content into a buffer of lxb_char_t; std::vector frees it automatically
    std::vector<lxb_char_t> html(content.begin(), content.end());

    // Pass the exact content length (the old "+ 1" sent an uninitialized extra byte)
    document = lxb_html_parse(parser, html.data(), html.size());
    if (document == nullptr) {
        exit(EXIT_FAILURE);
    }

    // Destroy parser now that the process has finished
    lxb_html_parser_destroy(parser);

    body = lxb_dom_interface_element(document->body);

    collection = lxb_dom_collection_make(&document->dom_document, 128);
    if (collection == nullptr) {
        exit(EXIT_FAILURE);
    }

    // Collect every <a> element inside <body>
    status = lxb_dom_elements_by_tag_name(body, collection,
                                          (const lxb_char_t *) "a", 1);
    if (status != LXB_STATUS_OK) {
        exit(EXIT_FAILURE);
    }

    size_t get_collection_length = lxb_dom_collection_length(collection);

    // Serialize each anchor element; the results are read from urls below
    for (size_t i = 0; i < get_collection_length; i++) {
        gather_collection = lxb_dom_collection_element(collection, i);
        serialize_node(lxb_dom_interface_node(gather_collection));
    }

    // Copy the collected links into the returned vector
    output.assign(urls.begin(), urls.end());

    // Destroy objects to avoid memory leaks
    lxb_dom_collection_destroy(collection, true);
    lxb_html_document_destroy(document);

    return output;
}
```
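One side effect of lowercasing the whole page before parsing is that the extracted links are lowercased too, and URL paths are case-sensitive on many servers. A possible alternative, sketched here under that assumption, is to leave the parsed links untouched and lowercase only temporary copies when matching a keyword. `FilterLinks` is a hypothetical helper, not part of the project, and it still folds ASCII case only.

```cpp
#include <algorithm>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical helper: returns the links that contain `keyword`, ignoring ASCII case.
// Only temporary copies are lowercased, so the returned links keep their original casing.
std::vector<std::string> FilterLinks(const std::vector<std::string>& links,
                                     const std::string& keyword) {
    auto lower = [](std::string s) {
        std::transform(s.begin(), s.end(), s.begin(), [](unsigned char c) {
            return static_cast<char>(std::tolower(c));
        });
        return s;
    };
    const std::string needle = lower(keyword);
    std::vector<std::string> matches;
    for (const std::string& link : links) {
        if (lower(link).find(needle) != std::string::npos) {
            matches.push_back(link);
        }
    }
    return matches;
}
```

With this approach, the lowercasing step inside ParseContent could be dropped, and the keyword search would happen on the returned vector instead.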


Written by

Chris Douris


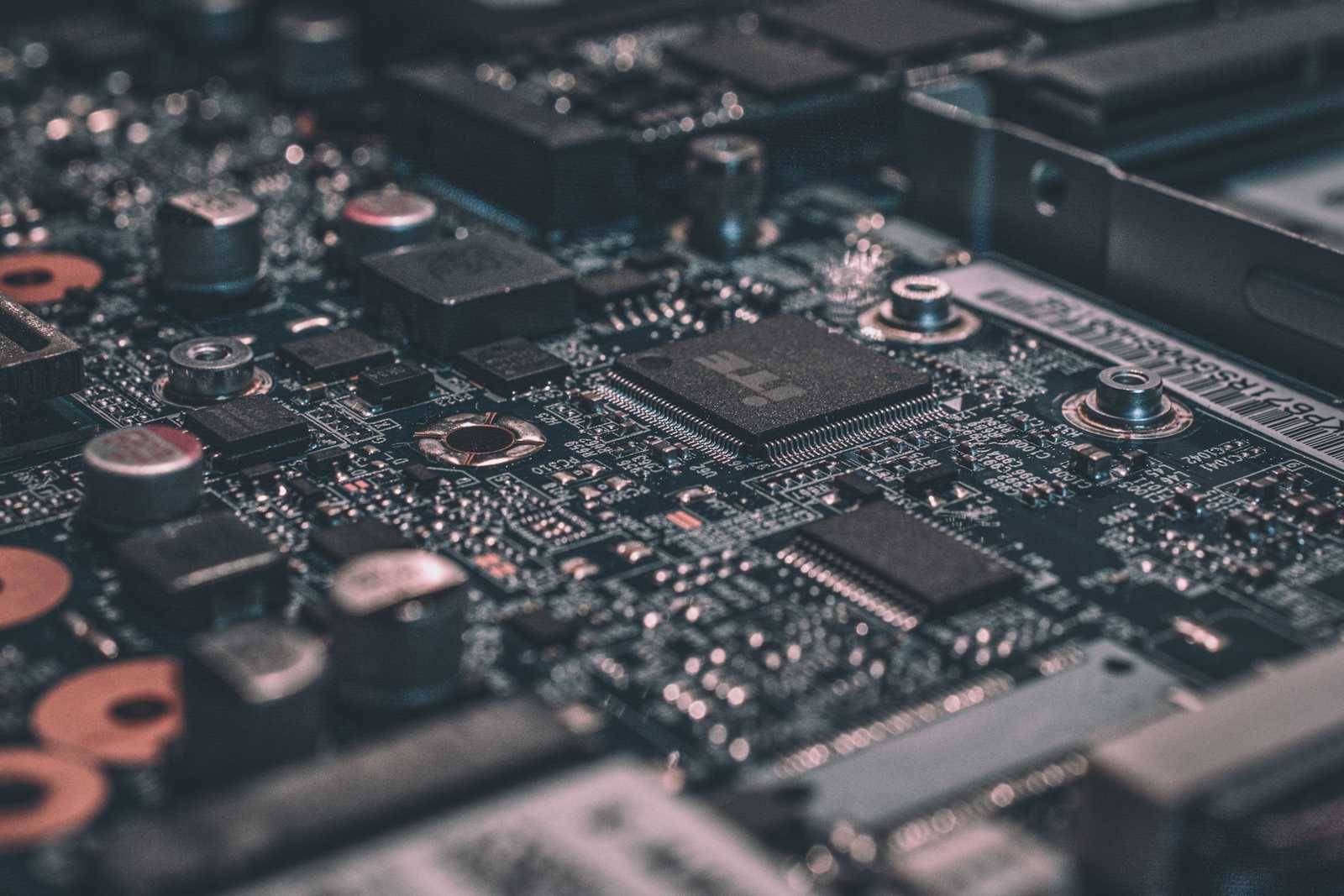

Also known as Chris, he is a software developer from Athens, Greece. He started programming with BASIC when he was very young. He lost interest in programming during his school years, but after an unsuccessful career in audio, he decided to focus on what he really loves: technology. He loves working with older languages like C and wants to start programming electronics and microcontrollers because he wants to get into embedded systems programming.