Day 19: Docker for DevOps Engineers

Prathmesh Vibhute

In the realm of DevOps, streamlining development workflows and ensuring seamless deployment processes are paramount. Docker has emerged as a pivotal tool for achieving these objectives, offering containerization solutions that enable developers and operations teams to build, deploy, and manage applications with ease. In this guide, we will delve into two key aspects of Docker – Docker Volume and Docker Network – and explore how they enhance the capabilities of DevOps engineers.

Docker Volume

Docker allows you to create volumes: storage areas that live outside any single container and can be accessed by containers. They let you store data, such as a database, outside the container so that it is not deleted when the container is removed. You can also mount the same volume into several containers so that they all work with the same data.

Docker Network

Docker allows you to create virtual networks that connect multiple containers (lightweight packages that hold everything a specific application needs to run). Containers on the same network can communicate with each other and with the host machine (the computer on which Docker is installed). Storage works differently: by default, each container has its own storage space that only that container can access and that other containers cannot share, which is the gap volumes fill.
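As a minimal sketch of container-to-container networking, the commands below create a user-defined bridge network and attach two containers to it. The network name `app-net`, the container names `web` and `cache`, and the `nginx:alpine` and `redis:alpine` images are illustrative assumptions, not part of the original setup.

```shell
# Create a user-defined bridge network (the name is an assumption).
docker network create app-net

# Start two containers attached to that network.
docker run -d --name web --network app-net nginx:alpine
docker run -d --name cache --network app-net redis:alpine

# On a user-defined network, containers can reach each other by name
# through Docker's built-in DNS.
docker exec web ping -c 1 cache

# Clean up the demo containers and the network.
docker rm -f web cache
docker network rm app-net
```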

Task 1: Multi-Container Deployment with Docker Compose

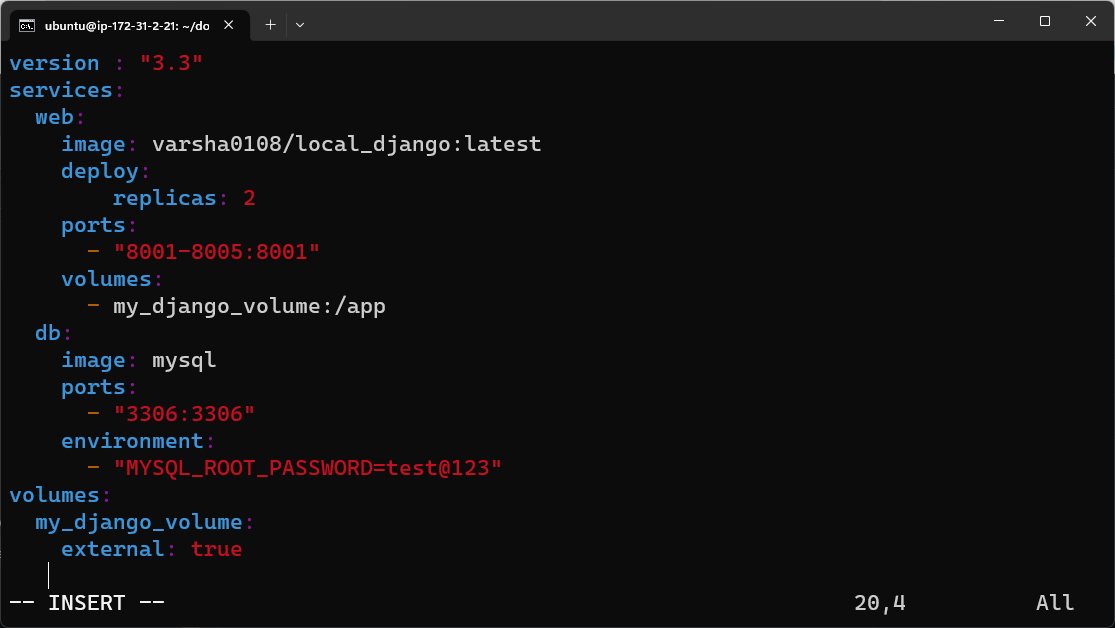

One of the most efficient ways to manage multi-container applications in Docker is through Docker Compose. By defining the configuration of multiple services in a single YAML file, DevOps engineers can orchestrate complex deployments with ease.

Define Services in docker-compose.yml: Specify the services, their dependencies, and configurations in the docker-compose.yml file.

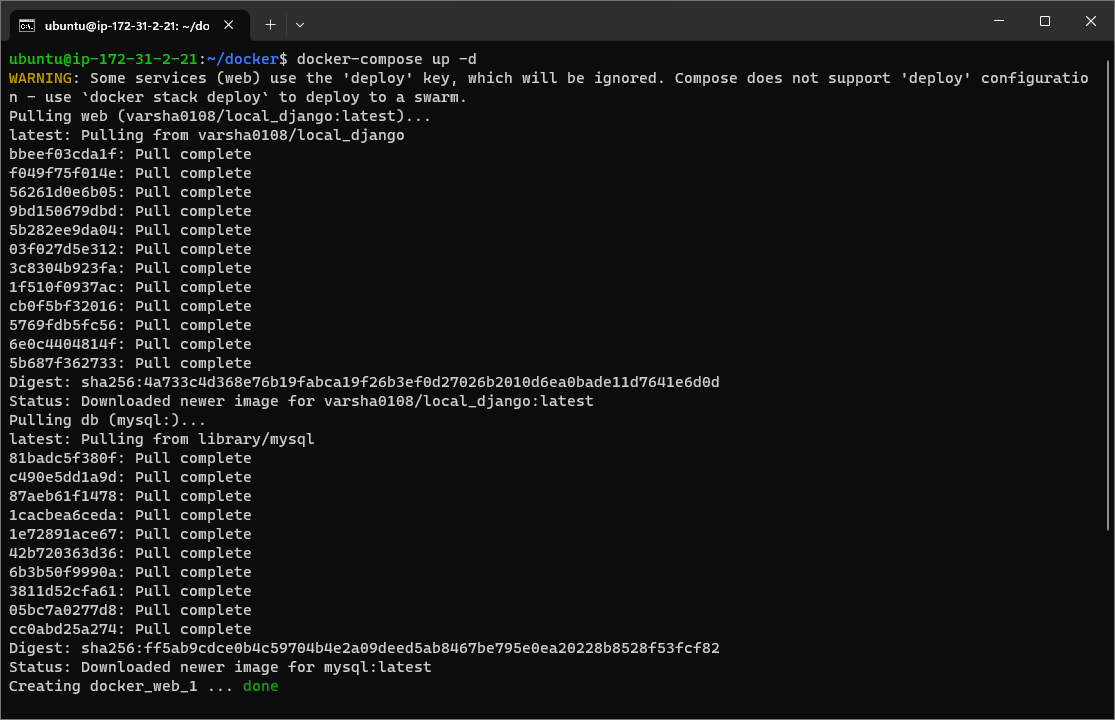
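For illustration, a minimal compose file could look like the sketch below, written to disk from the shell. The service names `web` and `cache`, the images, and the volume name `cache-data` are assumptions chosen for the example and are not the author's actual configuration.

```shell
# Write a hypothetical two-service compose file to the current directory.
cat > docker-compose.yml <<'EOF'
version: "3"            # optional (and ignored) in newer Compose releases
services:
  web:
    image: nginx:alpine
    ports:
      - "80"            # container port only; Docker picks a free host port
    depends_on:
      - cache           # start the cache service before web
  cache:
    image: redis:alpine
    volumes:
      - cache-data:/data   # named volume keeps Redis data across restarts
volumes:
  cache-data:
EOF

# Show the file that was written.
cat docker-compose.yml
```

Publishing only the container port lets `web` run as several replicas without host-port conflicts.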

Start Containers: Execute

docker-compose up -dto bring up the containers in detached mode.

Scale Services: Utilize

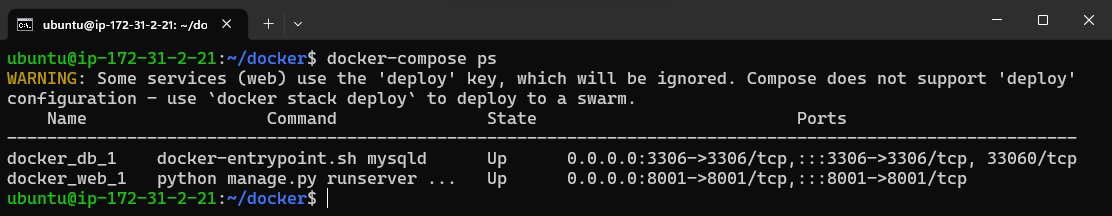

docker-compose scalecommand to adjust the number of replicas for specific services, enabling auto-scaling.Monitor and Manage Containers: Employ

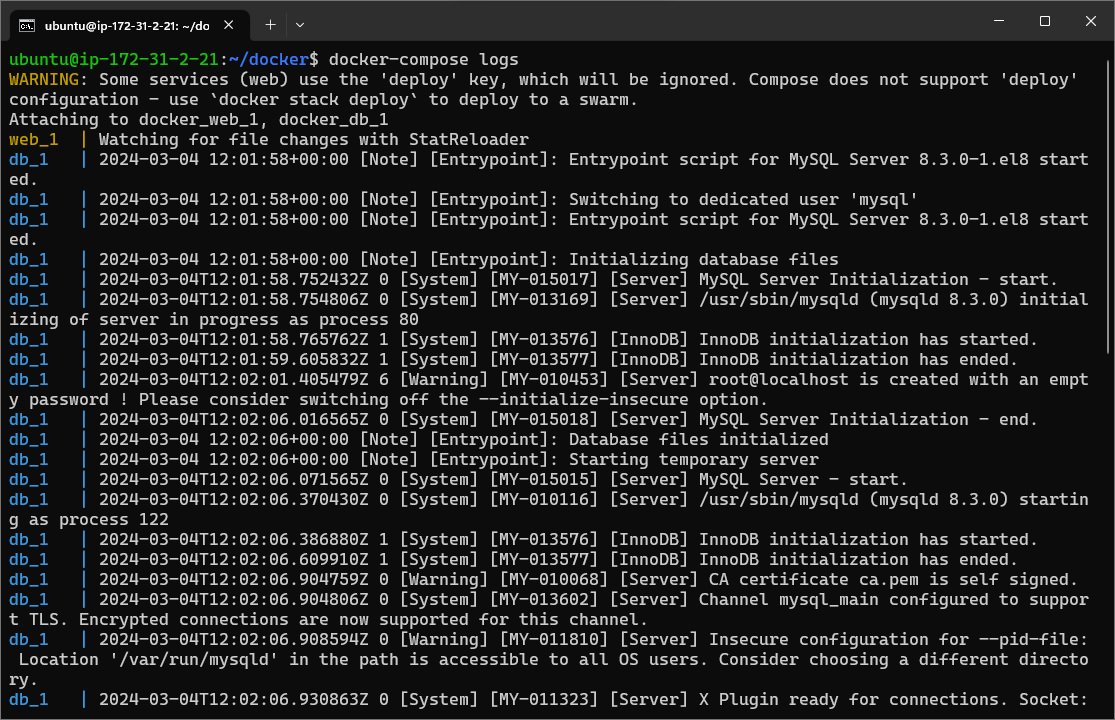

docker-compose psto monitor the status of containers anddocker-compose logsto view service-specific logs.

Stop and Remove Containers: Terminate the deployment and remove associated resources using

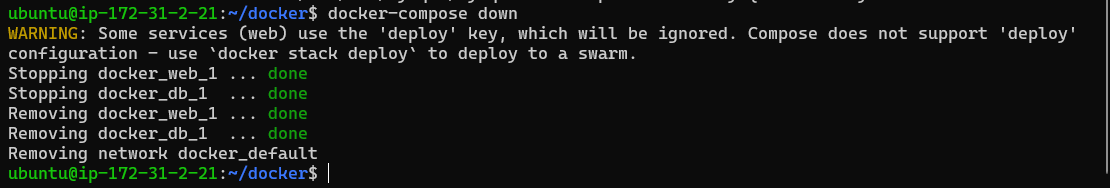

docker-compose down.
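Put together, the lifecycle above might look like the following session. It assumes a `docker-compose.yml` in the current directory that defines a service named `web` (a hypothetical name) without a fixed host port.

```shell
# Start all services in the background.
docker-compose up -d

# Run three replicas of the (hypothetical) web service.
docker-compose up -d --scale web=3

# Check container status, then view recent logs for one service.
docker-compose ps
docker-compose logs --tail=20 web

# Stop and remove the containers and the default network.
docker-compose down
```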

Task 2: Utilizing Docker Volumes for Data Sharing

Docker Volumes facilitate seamless data sharing between containers, ensuring consistency and accessibility across the application stack. Named Volumes, in particular, offer a convenient way to manage and reference volumes within Docker environments.

Here's how you can leverage Docker Volumes for data sharing:

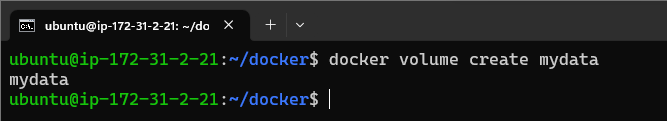

Create Docker Volumes: Use the `docker volume create` command to create named volumes.
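A minimal sketch of this step, using the volume name `mydata` that the rest of this task refers to:

```shell
# Create a named volume.
docker volume create mydata

# Confirm it exists and see where Docker stores it on the host.
docker volume ls
docker volume inspect mydata
```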

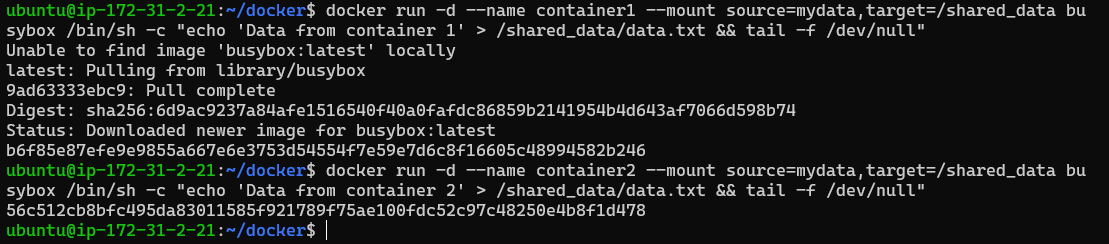

Now, let's create two containers that read and write data to this named volume.
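One way to do this is sketched below. The `alpine` image, the messages written, and the `sleep` that keeps each container alive for later inspection are assumptions.

```shell
# Start container1 with the mydata volume mounted at /shared_data,
# append a line to data.txt, then stay alive for later inspection.
docker run -d --name container1 \
  --mount type=volume,source=mydata,target=/shared_data \
  alpine sh -c 'echo "hello from container1" >> /shared_data/data.txt; sleep 3600'

# Start container2 with the same volume at the same path.
docker run -d --name container2 \
  --mount type=volume,source=mydata,target=/shared_data \
  alpine sh -c 'echo "hello from container2" >> /shared_data/data.txt; sleep 3600'
```

If `mydata` does not exist yet, Docker creates it when the first container starts.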

In the above commands:

We create two containers named `container1` and `container2`.

We mount the `mydata` volume to the `/shared_data` directory inside both containers.

Inside each container, we echo some data to a file named `data.txt` located in the `/shared_data` directory.

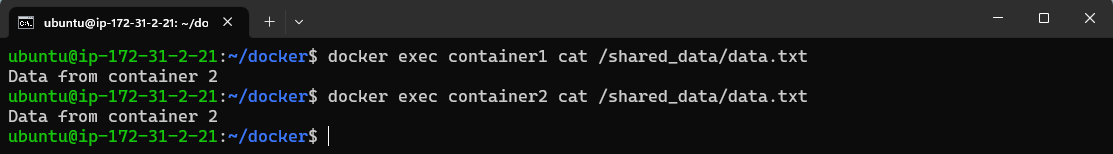

Now, let's verify that the data is the same in both containers:

Mount Volumes in Containers: Attach volumes to containers with the `--mount` option of `docker run`.

Verify Data Consistency: Check that both containers see the same data by running commands inside each one with `docker exec`.
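The check can be sketched as follows, assuming `container1` and `container2` are still running with the volume mounted at `/shared_data`:

```shell
# Read the shared file from each container.
data1=$(docker exec container1 cat /shared_data/data.txt)
data2=$(docker exec container2 cat /shared_data/data.txt)

echo "container1 sees: $data1"
echo "container2 sees: $data2"

# Both reads come from the same volume, so the contents should match.
if [ "$data1" = "$data2" ]; then
  echo "Both containers see the same data."
fi
```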

This confirms that both containers are accessing the same volume and can read and write data to it.

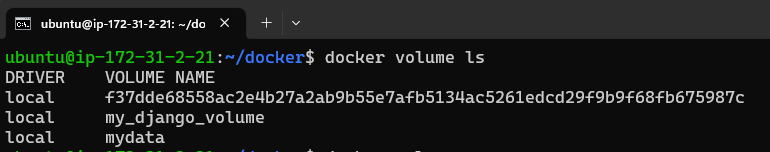

Manage Volumes: List existing volumes with `docker volume ls` and remove volumes with `docker volume rm` when they are no longer needed.

To list all volumes:

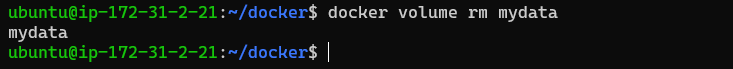

To remove the volume when you're done:
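A sketch of the cleanup, assuming the two containers from earlier still exist. Docker refuses to remove a volume that a container is still using, so the containers are removed first:

```shell
# Remove the containers that mount the volume (-f stops them if running).
docker rm -f container1 container2

# Now the volume can be removed.
docker volume rm mydata
```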

This will remove the named volume `mydata` along with any data stored in it. Make sure you no longer need the data before removing the volume.

Conclusion

Docker Volume and Docker Network are indispensable tools for DevOps engineers seeking to streamline development workflows and ensure efficient data management within containerized environments. By mastering these capabilities, teams can enhance collaboration, scalability, and reliability across their application deployments, ultimately driving greater efficiency and agility in their DevOps practices.

I hope this article proves valuable and helps you learn something new.

Thank you :)
