Day 37: Important Kubernetes Interview Questions

Yashraj Singh Sisodiya

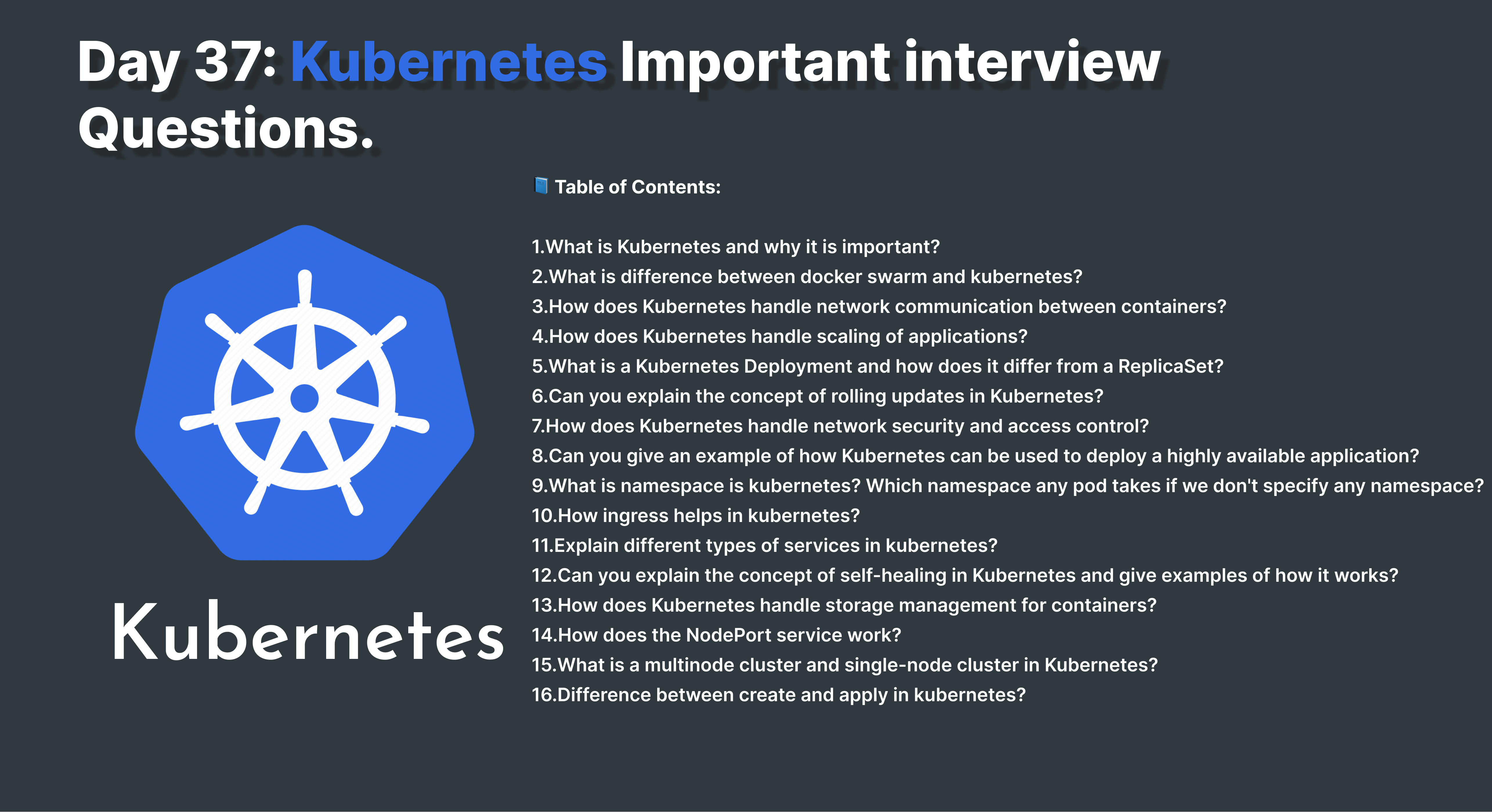

Table of contents

- Breaking Down Kubernetes: Must-Know Interview Questions

- What is Kubernetes and why is it important?

- What is the difference between Docker Swarm and Kubernetes?

- How does Kubernetes handle network communication between containers?

- How does Kubernetes handle scaling of applications?

- What is a Kubernetes Deployment and how does it differ from a ReplicaSet?

- Can you explain the concept of rolling updates in Kubernetes?

- How does Kubernetes handle network security and access control?

- Can you give an example of how Kubernetes can be used to deploy a highly available application?

- What is a namespace in Kubernetes? Which namespace does any pod take if we don't specify any namespace?

- How does Ingress help in Kubernetes?

- Explain different types of services in Kubernetes?

- Can you explain the concept of self-healing in Kubernetes and give examples of how it works?

- How does Kubernetes handle storage management for containers?

- How does the NodePort service work?

- What is a multinode cluster and single-node cluster in Kubernetes?

- Difference between create and apply in Kubernetes?

Breaking Down Kubernetes: Must-Know Interview Questions

Welcome to a comprehensive guide on Kubernetes interview questions! Whether you're a DevOps intern gearing up for interviews or a seasoned professional brushing up on your knowledge, these questions will help you navigate the intricate world of Kubernetes with ease.

What is Kubernetes and why is it important?

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It provides a robust framework for managing complex containerized workloads, offering features like automated scaling, load balancing, and service discovery. Kubernetes is crucial for modern cloud-native applications because it streamlines the deployment and management of microservice architectures, improving scalability, reliability, and efficiency.

What is the difference between Docker Swarm and Kubernetes?

Docker Swarm and Kubernetes are both container orchestration platforms, but they differ in architecture, features, and scalability. Docker Swarm is built into the Docker Engine and is simpler to set up and manage, making it suitable for smaller-scale deployments. Kubernetes has a steeper learning curve but offers a broader feature set, including built-in autoscaling, configurable rolling updates and rollbacks, and an extensible API, making it better suited to large-scale, production-grade deployments. Kubernetes also has a larger ecosystem and community support compared to Docker Swarm.

How does Kubernetes handle network communication between containers?

Kubernetes defines a flat networking model and relies on plugins that implement the Container Network Interface (CNI) to provide it. Each Pod gets its own unique IP address, and containers within the same Pod share a network namespace and communicate over localhost. Pods can reach one another directly by IP, without NAT, even across different nodes in the cluster. Because Pod IPs change as Pods are replaced, Services and cluster DNS provide stable names and virtual IPs for reaching a group of Pods.

How does Kubernetes handle scaling of applications?

Kubernetes offers two main scaling mechanisms: horizontal scaling (changing the number of Pods) and vertical scaling (changing the resources of individual Pods). Controllers like ReplicaSet and Deployment keep a fixed number of replicas running, which you can change manually with kubectl scale. The HorizontalPodAutoscaler (HPA) adjusts that replica count automatically based on resource utilization or user-defined metrics. Vertical scaling involves increasing or decreasing the CPU or memory requests and limits of individual Pods, either by hand or with the Vertical Pod Autoscaler add-on.
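The replica count that horizontal autoscaling converges on can be sketched with the formula documented for the HorizontalPodAutoscaler: desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The function name, the bounds, and the sample numbers below are illustrative assumptions, and the real controller also applies a tolerance band and stabilization windows that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Approximate the HorizontalPodAutoscaler scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, raw))


# 4 Pods averaging 90% CPU against a 60% target
print(desired_replicas(4, 90, 60))
```

With these numbers the function asks for 6 Pods; if the average dropped to 30%, the same formula would scale the workload down to 2.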

What is a Kubernetes Deployment and how does it differ from a ReplicaSet?

A Kubernetes Deployment is a higher-level abstraction that manages ReplicaSets and Pods, providing features like rolling updates and rollback functionality. A Deployment defines the desired state of a set of Pods and ensures that the desired number of Pods are running at all times. In contrast, a ReplicaSet is a lower-level controller that only ensures a specified number of identical Pods are running at any given time. Deployments add declarative updates, revision history, and rollbacks to an earlier revision on top of that, making them more suitable for managing application deployments in production environments. In practice you rarely create a ReplicaSet directly; you create a Deployment and let it manage the ReplicaSets for you.
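As a rough illustration of the desired state a Deployment declares, the sketch below builds a minimal `apps/v1` Deployment manifest as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The name `web`, the `app` label, the image tag, and the replica count are placeholder assumptions, not values from a real cluster.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest (illustrative values only)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.27", replicas=3)
print(json.dumps(manifest, indent=2))
```

The Deployment controller creates a ReplicaSet from `spec.template`, and that ReplicaSet in turn keeps `spec.replicas` Pods running.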

Can you explain the concept of rolling updates in Kubernetes?

Rolling updates in Kubernetes allow you to update a Deployment to a new version without causing downtime. Kubernetes gradually replaces old Pods with new ones, ensuring that the application remains available throughout the update process. This is achieved by creating a new ReplicaSet with the updated Pod template and gradually scaling it up while scaling the old one down. The maxSurge and maxUnavailable settings control how many extra Pods may be created and how many may be unavailable at any point during the rollout. Rolling updates ensure smooth transitions between different versions of an application, minimizing disruption to users.
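The scale-up and scale-down interplay can be sketched as a small simulation. This is a simplified model under stated assumptions: `max_surge` and `max_unavailable` are absolute Pod counts (Kubernetes also accepts percentages), and every new Pod is assumed to become ready immediately, which a real rollout does not guarantee.

```python
def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> list:
    """Return the (old_pods, new_pods) counts after each rollout step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding replicas + max_surge.
        room = replicas + max_surge - (old + new)
        new += min(room, replicas - new)
        # Scale down the old ReplicaSet while keeping at least
        # replicas - max_unavailable Pods available.
        removable = (old + new) - (replicas - max_unavailable)
        old -= min(old, max(removable, 0))
        steps.append((old, new))
    return steps


for old_pods, new_pods in rolling_update(3, max_surge=1, max_unavailable=0):
    print(f"old={old_pods} new={new_pods}")
```

With three replicas, a surge of one, and no unavailability allowed, the total never drops below three Pods and never exceeds four.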

How does Kubernetes handle network security and access control?

Kubernetes provides various mechanisms for network security and access control, including network policies, role-based access control (RBAC), and Pod security controls. Network policies allow you to define rules for controlling traffic between Pods based on Pod labels, namespaces, IP blocks, ports, and protocols; they take effect only if the cluster's network plugin enforces them. RBAC enables you to define granular permissions for accessing Kubernetes resources, such as Pods, Deployments, and namespaces. Pod security settings, such as restricting privileged access and enforcing security contexts, were historically handled by PodSecurityPolicy, which was removed in Kubernetes 1.25; current clusters use Pod Security Admission, which enforces the Pod Security Standards (privileged, baseline, restricted) at the namespace level.
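The way RBAC evaluates a request can be sketched as a rule lookup. This is a deliberately reduced model: it ignores API groups, resource names, namespaces, and the bindings that attach a Role to a user, and the `pod_reader_rules` list is a hypothetical Role written only for illustration.

```python
def is_allowed(rules: list, verb: str, resource: str) -> bool:
    """Return True if any rule grants the verb on the resource.

    RBAC rules are purely additive: there are no deny rules, so a request
    is rejected only when no rule matches.
    """
    for rule in rules:
        verb_ok = "*" in rule["verbs"] or verb in rule["verbs"]
        resource_ok = "*" in rule["resources"] or resource in rule["resources"]
        if verb_ok and resource_ok:
            return True
    return False


# Hypothetical "pod-reader" Role: read-only access to Pods.
pod_reader_rules = [{"resources": ["pods"], "verbs": ["get", "list", "watch"]}]

print(is_allowed(pod_reader_rules, "list", "pods"))    # granted by the rule
print(is_allowed(pod_reader_rules, "delete", "pods"))  # no rule matches
```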

Can you give an example of how Kubernetes can be used to deploy a highly available application?

Kubernetes can be used to deploy a highly available application by distributing application components across multiple Pods and nodes in the cluster. For example, you can deploy a stateless web application with multiple replicas spread across different nodes to ensure redundancy and fault tolerance. Kubernetes provides features like load balancing, auto-scaling, and rolling updates, ensuring that the application remains available and responsive even in the event of node failures or traffic spikes.

What is a namespace in Kubernetes? Which namespace does any pod take if we don't specify any namespace?

A namespace in Kubernetes is a logical partition within a cluster that provides a scope for the names of Kubernetes objects, such as Pods, Services, and Deployments. It allows you to create multiple virtual clusters within the same physical cluster, so that teams or environments can be separated and given their own resource quotas and access rules. If a namespace is not specified for a Pod, it is created in the namespace of the current kubectl context, which is the default namespace unless you have changed it.
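The fallback rule can be written down in a couple of lines. The helper name `effective_namespace` and the sample manifests are assumptions made for this sketch; it only models the lookup order, not what kubectl does internally.

```python
def effective_namespace(manifest: dict, context_namespace: str = "default") -> str:
    """Pick the namespace an object would land in.

    An explicit metadata.namespace wins; otherwise the namespace of the
    current context is used, which is "default" unless it was changed.
    """
    return manifest.get("metadata", {}).get("namespace") or context_namespace


print(effective_namespace({"metadata": {"name": "web"}}))
print(effective_namespace({"metadata": {"name": "web", "namespace": "staging"}}))
```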

How does Ingress help in Kubernetes?

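In Kubernetes, Ingress is an API object that manages external access to services within a cluster. It provides a centralized point for routing external traffic to different services based on HTTP or HTTPS rules, such as the request's host name and path. Ingress allows you to configure routing rules, TLS termination, and load balancing for HTTP-based applications, making it easier to expose services to external users or applications. An Ingress resource has no effect on its own: an Ingress controller (for example, an NGINX-based one) must be running in the cluster to implement the rules.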
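Host and path routing can be sketched as follows. The rule table, host names, and service names are made up for illustration; the matching follows the documented behaviour of the `Prefix` path type (matching whole path segments, with the longest matching path taking precedence), and an actual Ingress controller may add behaviour beyond this.

```python
def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Match on whole path segments, so /api matches /api/v1 but not /apiary."""
    rule_parts = [p for p in rule_path.split("/") if p]
    request_parts = [p for p in request_path.split("/") if p]
    return request_parts[:len(rule_parts)] == rule_parts


def route(rules: list, host: str, path: str):
    """Return the backend service for a request, or None if nothing matches."""
    candidates = [r for r in rules
                  if r["host"] == host and prefix_matches(r["path"], path)]
    if not candidates:
        return None
    # The longest matching path takes precedence.
    return max(candidates, key=lambda r: len(r["path"]))["service"]


# Hypothetical routing table for one host.
rules = [
    {"host": "shop.example.com", "path": "/", "service": "web"},
    {"host": "shop.example.com", "path": "/api", "service": "api"},
]

print(route(rules, "shop.example.com", "/api/v1/orders"))
print(route(rules, "shop.example.com", "/cart"))
```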

Explain different types of services in Kubernetes?

Kubernetes offers four Service types to expose applications: ClusterIP, NodePort, LoadBalancer, and ExternalName.

- ClusterIP: Exposes a service on a cluster-internal IP address, accessible only within the cluster. This is the default type.

- NodePort: Exposes a service on each node's IP address at a static port, making the service accessible from outside the cluster.

- LoadBalancer: Exposes a service externally using a cloud provider's load balancer, allowing access from outside the cluster.

- ExternalName: Maps a service to an external DNS name by returning a CNAME record, without proxying any traffic.

Ingress is often mentioned alongside these, but it is a separate API object rather than a Service type. It manages external HTTP and HTTPS access to services within a cluster and routes requests to the Services described above.

Can you explain the concept of self-healing in Kubernetes and give examples of how it works?

Self-healing in Kubernetes refers to the platform's ability to automatically detect and recover from failures without human intervention. Kubernetes continuously compares the observed state of Pods, nodes, and other components with the desired state. If a container crashes or fails its liveness probe, the kubelet restarts it according to the Pod's restart policy. If a Pod is lost entirely, for example because its node fails, the controller that owns it (such as a ReplicaSet) creates a replacement Pod on a healthy node. For example, if a container crashes due to a software bug or resource exhaustion, Kubernetes restarts it to restore the desired state of the application.
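The idea behind self-healing is a control loop that keeps comparing desired state with observed state. The sketch below is a toy version of one pass of such a loop; the `web-` naming scheme and the Pod phases used here are assumptions for illustration, not how the ReplicaSet controller is actually implemented.

```python
from itertools import count


def reconcile(desired: int, pods: dict, ids) -> dict:
    """Run one control-loop pass and return the resulting set of Pods.

    pods maps a Pod name to its phase; ids yields numbers for new Pod names.
    """
    healthy = [name for name, phase in pods.items() if phase == "Running"]
    # Create replacements for every Pod that is missing or not running.
    missing = max(desired - len(healthy), 0)
    replacements = [f"web-{next(ids)}" for _ in range(missing)]
    # Trim any surplus so the result matches the desired count.
    keep = (healthy + replacements)[:desired]
    return {name: "Running" for name in keep}


observed = {"web-1": "Running", "web-2": "Failed", "web-3": "Running"}
print(reconcile(3, observed, count(4)))
```

Here the failed Pod `web-2` is dropped and a new Pod takes its place, so three Pods are running again after the pass.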

How does Kubernetes handle storage management for containers?

Kubernetes manages storage for containers through PersistentVolumes (PVs), PersistentVolumeClaims (PVCs), and StorageClasses. A PersistentVolume represents a piece of storage in the cluster that is either created statically by an administrator or provisioned dynamically through a StorageClass. A PersistentVolumeClaim is a request for storage with a given capacity and access mode; Kubernetes binds each claim to a matching PersistentVolume, and Pods then mount the claim as a volume. Kubernetes supports various storage backends, including local storage, cloud storage, and network-attached storage (NAS), ensuring flexibility and scalability in storage management for containers.
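How a claim is matched against available volumes can be sketched like this. The dictionary fields and volume names are invented for the example, and picking the smallest sufficient volume is an assumption of this sketch rather than a statement about the exact binding algorithm.

```python
def find_matching_pv(claim: dict, volumes: list):
    """Return the smallest unbound volume that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if not pv["bound"]
        and pv["capacity_gi"] >= claim["request_gi"]
        # Every access mode the claim asks for must be offered by the volume.
        and set(claim["access_modes"]) <= set(pv["access_modes"])
    ]
    return min(candidates, key=lambda pv: pv["capacity_gi"], default=None)


# Hypothetical statically provisioned volumes.
volumes = [
    {"name": "pv-small", "capacity_gi": 5, "access_modes": ["ReadWriteOnce"], "bound": False},
    {"name": "pv-medium", "capacity_gi": 20, "access_modes": ["ReadWriteOnce"], "bound": False},
    {"name": "pv-large", "capacity_gi": 100,
     "access_modes": ["ReadWriteOnce", "ReadOnlyMany"], "bound": False},
]

claim = {"request_gi": 10, "access_modes": ["ReadWriteOnce"]}
match = find_matching_pv(claim, volumes)
print(match["name"] if match else "no volume available, claim stays Pending")
```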

How does the NodePort service work?

The NodePort service type in Kubernetes exposes a service on the same port on every node in the cluster. Unless you specify a nodePort yourself, Kubernetes allocates one from the default range 30000-32767. Traffic that arrives at that port on any node's IP address is forwarded to the service, which then load-balances it across the matching Pods, even if they run on a different node. This gives external clients a stable address of the form NodeIP:NodePort.
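Port allocation for a NodePort service can be modelled roughly as below. The function name and the `in_use` bookkeeping set are assumptions for this sketch; the range is the documented default, which cluster administrators can change.

```python
import random

# Default NodePort range; administrators can configure a different one.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def allocate_node_port(in_use: set, requested=None) -> int:
    """Reserve a NodePort, either the requested one or a free one from the range."""
    if requested is not None:
        if not NODE_PORT_MIN <= requested <= NODE_PORT_MAX:
            raise ValueError(f"port {requested} is outside the NodePort range")
        if requested in in_use:
            raise ValueError(f"port {requested} is already allocated")
        port = requested
    else:
        free = [p for p in range(NODE_PORT_MIN, NODE_PORT_MAX + 1) if p not in in_use]
        if not free:
            raise RuntimeError("no free NodePort left in the range")
        port = random.choice(free)
    in_use.add(port)
    return port


used = set()
print(allocate_node_port(used, requested=30080))  # explicit nodePort
print(allocate_node_port(used))                   # automatically chosen port
```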

What is a multinode cluster and single-node cluster in Kubernetes?

In Kubernetes, a multinode cluster consists of multiple nodes (physical or virtual machines) that form a distributed computing environment. Each node runs Kubernetes components such as the kubelet, kube-proxy, and a container runtime (e.g., containerd), allowing for workload distribution and fault tolerance. In contrast, a single-node cluster consists of one machine that hosts both the control plane and the application workloads, as tools like Minikube set up. Single-node clusters are suitable for development or testing purposes but lack the scalability and fault tolerance of multinode clusters.

Difference between create and apply in Kubernetes?

In Kubernetes, the `kubectl create` command is used to create new resources based on the configuration specified in a YAML or JSON file. If the resource already exists, `kubectl create` will return an error, indicating that the resource is already present in the cluster. On the other hand, the `kubectl apply` command is used to apply changes to existing resources or create new resources if they don't already exist. `kubectl apply` compares the desired state specified in the configuration file with the current state in the cluster and applies the necessary changes to make the two states consistent. `kubectl apply` is preferred for managing resources in Kubernetes as it supports declarative configuration and allows for easier management of changes and updates to resources.
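The behavioural difference can be mimicked with a dictionary standing in for the cluster. This is only a toy model under stated assumptions: the real `kubectl apply` merges changes into the live object rather than overwriting it wholesale, and the `create`, `apply`, and `AlreadyExistsError` names here are defined purely for this sketch.

```python
import copy


class AlreadyExistsError(Exception):
    """Raised when create() targets an object that is already stored."""


def _key(manifest: dict) -> tuple:
    return (manifest["kind"], manifest["metadata"]["name"])


def create(cluster: dict, manifest: dict) -> str:
    """Imperative: fail if the object already exists."""
    key = _key(manifest)
    if key in cluster:
        raise AlreadyExistsError(f"{key[0]} {key[1]!r} already exists")
    cluster[key] = copy.deepcopy(manifest)
    return "created"


def apply(cluster: dict, manifest: dict) -> str:
    """Declarative: create the object, or update it to match the manifest."""
    key = _key(manifest)
    if key not in cluster:
        result = "created"
    elif cluster[key] == manifest:
        result = "unchanged"
    else:
        result = "configured"
    cluster[key] = copy.deepcopy(manifest)
    return result


cluster = {}
web = {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 2}}
print(apply(cluster, web))   # first apply creates the object
web["spec"]["replicas"] = 3
print(apply(cluster, web))   # second apply updates it in place
```

Calling `create` a second time with the same manifest raises an error, whereas `apply` can be repeated safely, which is why it fits well with manifests kept in version control.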

Written by

Yashraj Singh Sisodiya

I am Yashraj Singh Sisodiya, a 3rd Year CSE student at SVVV, born and raised in Shujalpur. Currently residing in Indore, I'm passionate about pursuing a career in DevOps engineering. My tech journey began with an internship at Infobyte, honing my skills as an Android Developer intern. Alongside my academic pursuits, I actively participate in co-curriculars, holding roles as Technical Lead at Abhyudaya and Cloud Lead at GDSC SVVV, while also serving as an MLSA of my college. I have a keen interest in Cloud Computing, demonstrated through projects such as User management and Backup using shell scripting Linux, Dockerizing applications, CI/CD with Jenkins, and deploying a 3-tier application on AWS. Always eager to learn, I'm committed to expanding my knowledge and skills in the ever-evolving tech landscape.