📂Day 30-Kubernetes Architecture: A Comprehensive Guide

Sandeep Kale


➡️Kubernetes Overview

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It abstracts away the underlying infrastructure, so developers can focus on building and shipping applications instead of managing individual machines. Out of the box, it provides automated scaling, self-healing, service discovery, load balancing, and rolling updates with rollbacks. Together, these features make Kubernetes a practical foundation for running containerized applications at scale.

➡️What is Kubernetes, and why do we call it k8s?

Kubernetes is a powerful tool for managing containers, which are a way to package up software so it can run consistently across different computing environments. With Kubernetes, you can deploy, scale, and manage containerized applications with ease. It abstracts away many of the complexities of managing containers, such as networking and storage, making it easier for developers to focus on building and deploying their applications.

The name "Kubernetes" comes from the Greek word for "helmsman" or "pilot," reflecting its role in steering and managing containerized applications. The abbreviation "k8s" is derived by taking the first letter of "Kubernetes," followed by the number of letters between the first and last letters, and then the last letter. This shorthand is commonly used in the industry as a convenient and recognizable way to refer to Kubernetes.
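The same counting rule produces other well-known numeronyms, such as "i18n" for "internationalization". Here is a tiny Python sketch of the rule. The function name `numeronym` is just an illustrative label, not part of any Kubernetes tool:

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of middle letters + last letter."""
    if len(word) <= 3:
        # Nothing to shorten for very short words; return them unchanged.
        return word
    middle_count = len(word) - 2  # letters between the first and the last
    return f"{word[0]}{middle_count}{word[-1]}"


print(numeronym("kubernetes"))            # k8s
print(numeronym("internationalization"))  # i18n
```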

➡️Explain the Architecture of Kubernetes.

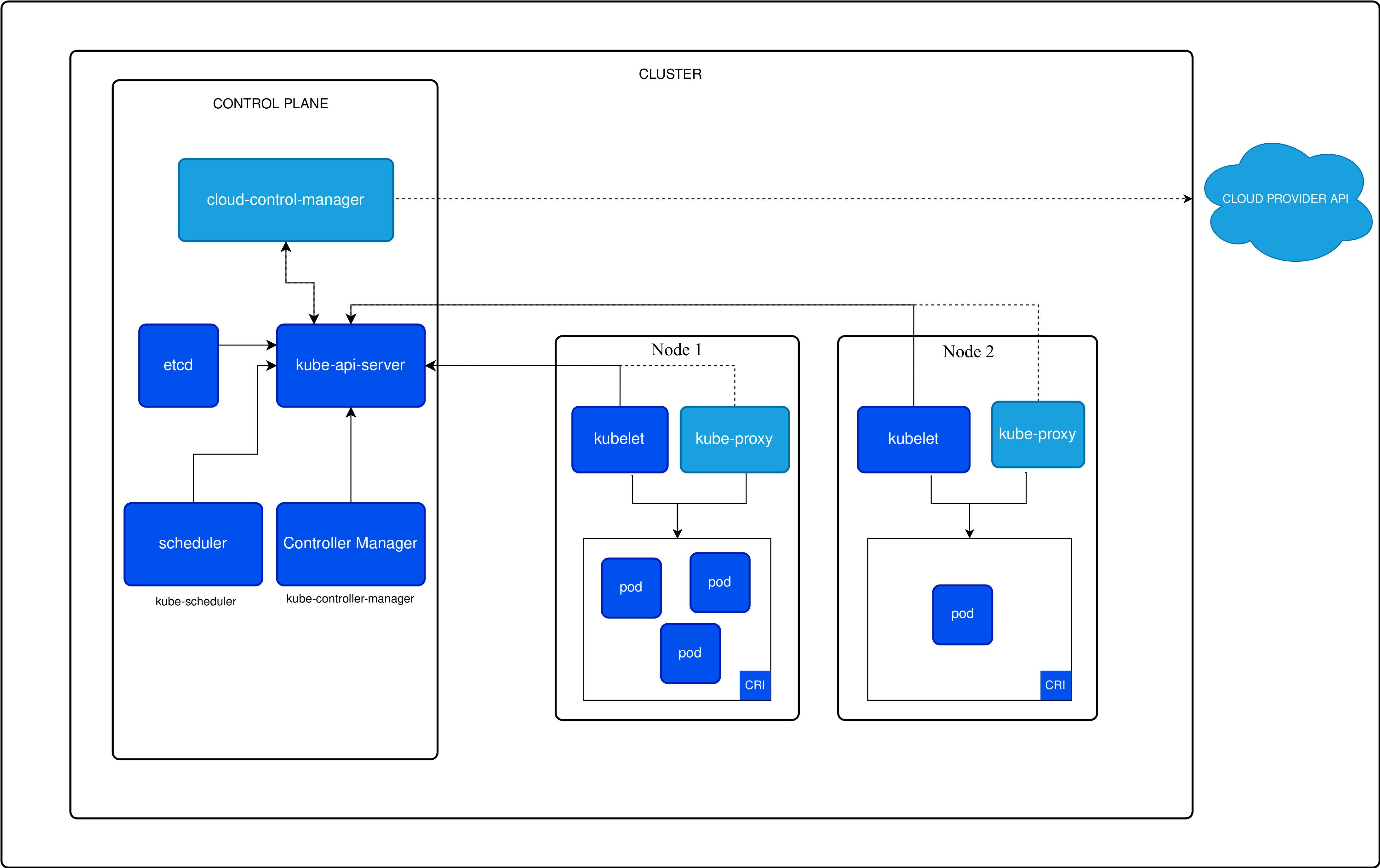

The architecture of Kubernetes is designed to provide a flexible and scalable platform for deploying, managing, and scaling containerized applications. At its core, Kubernetes consists of several key components that work together to manage the lifecycle of containers. Here's an overview of the main components:

Control Plane (formerly called the Master): The control plane node is responsible for managing the Kubernetes cluster. It runs several components:

API Server: The API server is the central management point for the Kubernetes cluster. It exposes the Kubernetes API, which allows users to interact with the cluster.

Scheduler: The scheduler assigns newly created pods to nodes in the cluster. It takes into account factors such as the pods' resource requests, each node's available capacity, and other constraints such as affinity rules.

Controller Manager: The controller manager runs the built-in controllers, such as the Node, Replication, and Endpoints controllers. Each controller watches the cluster's state and works to move the actual state toward the desired state.

etcd: etcd is a consistent, distributed key-value store that holds all cluster data, including configuration and the desired and current state of every object. Only the API server talks to etcd directly; other components read and update cluster state through the API server.
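To make the scheduler's job more concrete, here is a deliberately simplified Python sketch of its two main phases: filtering out nodes that cannot fit a pod, then scoring the remaining nodes and picking the best one. The node names, resource numbers, and the "keep the most capacity free" scoring rule are assumptions for illustration (loosely similar in spirit to the default "least allocated" resource scoring); the real kube-scheduler runs many configurable filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    alloc_cpu_m: int   # allocatable CPU in millicores
    alloc_mem_mi: int  # allocatable memory in MiB
    free_cpu_m: int    # CPU not yet requested by other pods
    free_mem_mi: int   # memory not yet requested by other pods


@dataclass
class Pod:
    name: str
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


def schedule(pod: Pod, nodes: list) -> Optional[str]:
    # Filter: keep only nodes with enough unrequested CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]
    if not feasible:
        return None  # no fit: the real scheduler leaves the pod Pending

    # Score: average fraction of capacity left free after placing the pod (higher wins).
    def score(n: Node) -> float:
        cpu_left = (n.free_cpu_m - pod.cpu_m) / n.alloc_cpu_m
        mem_left = (n.free_mem_mi - pod.mem_mi) / n.alloc_mem_mi
        return (cpu_left + mem_left) / 2

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 4000, 8192, free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", 4000, 8192, free_cpu_m=3000, free_mem_mi=6144),
    Node("node-c", 4000, 8192, free_cpu_m=100, free_mem_mi=8000),
]
print(schedule(Pod("web", cpu_m=250, mem_mi=512), nodes))     # node-b
print(schedule(Pod("batch", cpu_m=3500, mem_mi=512), nodes))  # None
```

Here node-c is filtered out because it lacks CPU, and node-b wins the scoring phase because it keeps the most headroom after placement.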

Worker Node (formerly called a Slave): A node is a worker machine in the Kubernetes cluster. It can be a physical machine or a virtual machine. Each node runs several components:

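Kubelet: The kubelet is an agent that registers the node with the API server, watches for pods assigned to its node, makes sure their containers are running and healthy, and reports their status back to the control plane.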

kube-proxy: kube-proxy maintains network rules on the node (for example, iptables or IPVS rules) so that traffic sent to a Service is forwarded to one of the pods backing it.

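Container Runtime: The container runtime is the software that actually runs containers on the node. Common examples are containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, since built-in Docker support (dockershim) was removed in Kubernetes 1.24.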
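At its heart, the kubelet's job is a repeated comparison: which containers should be running on this node, and which actually are? The Python sketch below models one pass of that comparison using plain dictionaries that map container names to images. It is a hypothetical simplification: the real kubelet gets pod specs from the API server and acts through the container runtime, with health checks and restart policies layered on top.

```python
def sync_node(desired: dict, running: dict) -> tuple:
    """One pass of a kubelet-style sync.

    Both arguments map container name -> image. Returns (to_start, to_stop).
    """
    to_start, to_stop = [], []
    for name, image in desired.items():
        if running.get(name) == image:
            continue  # already running the right image
        if name in running:
            to_stop.append(name)  # running, but with the wrong image: replace it
        to_start.append(name)
    for name in running:
        if name not in desired:
            to_stop.append(name)  # no pod on this node wants it any more
    return sorted(to_start), sorted(to_stop)


desired = {"web": "nginx:1.27", "sidecar": "busybox:1.36"}
running = {"web": "nginx:1.25", "old-job": "python:3.12"}
print(sync_node(desired, running))  # (['sidecar', 'web'], ['old-job', 'web'])
```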

Pod: A pod is the smallest deployable unit in Kubernetes. It represents a single instance of a containerized application. A pod can contain one or more containers that share a network namespace (a single IP address) and storage volumes, and that are always scheduled together onto the same node.

Service: A Service is an abstraction that selects a set of pods (usually by label) and defines a policy for accessing them. It gives those pods a stable virtual IP address and DNS name and load-balances traffic across them, even as individual pods come and go.
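A Service finds its pods by matching labels: a pod belongs to the Service if its labels include every key/value pair in the Service's selector. The Python sketch below shows that matching plus a simple round-robin over the matches. The pod names and labels are made up, and round-robin is only one possible policy; the real data path depends on the kube-proxy mode (for example, iptables mode picks a backend at random).

```python
from itertools import cycle


def select_pods(selector: dict, pods: dict) -> list:
    """Names of pods whose labels include every key/value pair in the selector."""
    return sorted(
        name for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "web-2": {"app": "web", "tier": "frontend"},
    "db-1": {"app": "db"},
}
endpoints = select_pods({"app": "web"}, pods)  # ['web-1', 'web-2']
balancer = cycle(endpoints)                     # round-robin over matching pods
print([next(balancer) for _ in range(4)])       # ['web-1', 'web-2', 'web-1', 'web-2']
```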

➡️What are the benefits of using K8s?

Using Kubernetes (k8s) offers several benefits for deploying and managing containerized applications:

Scalability: Kubernetes allows you to easily scale your applications up or down based on demand, ensuring optimal performance and resource utilization.

High availability: Kubernetes can run multiple replicas of an application and reschedule pods away from failed nodes, so your applications stay available even when individual nodes fail.

Resource efficiency: Kubernetes helps you make efficient use of your computing resources by scheduling pods onto nodes based on their resource requests.

Automated deployments: Kubernetes supports automated deployment strategies, such as rolling updates and rollbacks, making it easy to deploy new versions of your applications without downtime.

Portability: Kubernetes provides a consistent environment for running your applications, making it easy to move them between different cloud providers or on-premises environments.

Service discovery and load balancing: Kubernetes provides built-in mechanisms for service discovery and load balancing, making it easy to distribute traffic across your application instances.

Self-healing: Kubernetes can automatically detect and restart failed containers, ensuring that your applications remain available and responsive.
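Scaling "based on demand" is usually handled by the HorizontalPodAutoscaler, whose core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that arithmetic with a 10% tolerance band and min/max clamping. The function name and the replica bounds are example values, and the real controller also applies stabilization windows and pod-readiness handling, which are omitted here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # within tolerance of the target: leave the count alone
    desired = math.ceil(current * ratio)
    # Clamp to the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4
print(desired_replicas(4, current_metric=15, target_metric=60))  # 1
```

For example, 4 pods averaging 90% CPU against a 60% target gives ceil(4 × 1.5) = 6 replicas.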

➡️What is Control Plane?

The control plane in Kubernetes is responsible for managing the cluster and making decisions about the cluster's state. It consists of several components that work together to provide the cluster's functionality. These components include the API server, scheduler, controller manager, and etcd.
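The control plane's decisions follow a reconcile pattern: observe the current state, compare it with the desired state, and act to close the gap. The Python sketch below shows one pass of a ReplicaSet-style loop. The function and pod names are hypothetical, and the real ReplicaSet controller works through the API server and uses its own ranking to decide which pods to delete when scaling down.

```python
from itertools import count
from typing import Iterator


def reconcile(desired: int, observed: list, new_names: Iterator[str]) -> tuple:
    """One pass of a ReplicaSet-style control loop.

    Returns (to_create, to_delete) that move the observed pods toward `desired`.
    """
    gap = desired - len(observed)
    if gap > 0:
        return [next(new_names) for _ in range(gap)], []  # too few: create more
    if gap < 0:
        return [], observed[gap:]  # too many: delete the surplus (here, the last ones)
    return [], []  # already converged: nothing to do


names = (f"web-{i}" for i in count(4))
# Desired: 3 replicas. Observed: only 2, because one pod crashed and was removed.
print(reconcile(3, ["web-1", "web-2"], names))           # (['web-4'], [])
print(reconcile(1, ["web-1", "web-2", "web-4"], names))  # ([], ['web-2', 'web-4'])
```

Running this comparison over and over, rather than once, is what lets Kubernetes recover automatically when reality drifts from the desired state.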

➡️What is the difference between kubectl and kubelet?

| Feature | kubectl | kubelet |
| --- | --- | --- |
| Purpose | Command-line tool for interacting with the Kubernetes cluster through the API server. | Agent that runs on every node and manages the containers of the pods assigned to that node. |
| Usage | Used by administrators, developers, and operators to deploy and manage applications, inspect cluster resources, and perform administrative tasks. | Runs as a node-level service and is not typically used directly. It watches the API server for pods scheduled to its node and reports their status back. |
| Example | `kubectl get pods` | Starts or stops containers (through the container runtime) when pods are scheduled to or removed from its node. |

➡️Explain the Role of the API Server.

The API server in Kubernetes acts as the central management point for the entire cluster. It exposes the Kubernetes API, which users, administrators, and cluster components all use to read and change the cluster's state. As the front end of the control plane, it has several key responsibilities:

Exposes the Kubernetes API: It provides a way for users and components to interact with the cluster.

Authenticates and Authorizes Requests: It ensures that only authorized users and components can make changes to the cluster.

Validates and Admits Requests: It checks that each submitted object is valid and runs admission controllers, which can reject or modify a request before it is stored.

Persists the Cluster State: It is the only component that reads from and writes to etcd directly, so every change to the cluster's state goes through it and is stored consistently.

Hub of the Control Plane: Other components, such as the scheduler, controller manager, and kubelets, do not talk to each other directly. They watch the API server for changes and write their results back through it.
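The order of these steps matters: a request is authenticated, then authorized, then validated, and only then persisted. Here is a toy Python model of that pipeline. The token table, permission set, and in-memory `STORE` are hypothetical stand-ins for real authenticators, RBAC rules, and etcd, and the admission-control stage is left out for brevity. The status codes mirror the HTTP codes the real API server typically returns in these cases.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    token: str      # bearer token presented by the client
    verb: str       # e.g. "create"
    resource: str   # e.g. "pods"
    obj: dict = field(default_factory=dict)  # the object being submitted


# Hypothetical stand-ins for real authenticators, RBAC rules, and etcd.
TOKENS = {"abc123": "alice"}
ALLOWED = {("alice", "create", "pods")}
STORE: dict = {}


def handle(req: Request) -> tuple:
    user = TOKENS.get(req.token)
    if user is None:
        return 401, "Unauthorized"               # 1. authentication failed
    if (user, req.verb, req.resource) not in ALLOWED:
        return 403, "Forbidden"                  # 2. authorization failed
    name = req.obj.get("metadata", {}).get("name")
    if not name:
        return 422, "metadata.name is required"  # 3. validation failed
    # 4. Persist the object (simplified key; real etcd keys are laid out differently).
    STORE[f"/registry/{req.resource}/{name}"] = req.obj
    return 201, "Created"


print(handle(Request("bad-token", "create", "pods")))  # (401, 'Unauthorized')
print(handle(Request("abc123", "delete", "pods")))     # (403, 'Forbidden')
print(handle(Request("abc123", "create", "pods", {"metadata": {"name": "web"}})))  # (201, 'Created')
```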

🎯Conclusion

Overall, Kubernetes is a powerful platform for deploying, managing, and scaling containerized applications. It provides a flexible and scalable architecture, automated deployment and scaling, high availability, and self-healing capabilities. Kubernetes abstracts away the complexities of managing containers, making it easier to build and deploy applications at scale.

Happy Learning 😊
