Monitoring LLMs with Montelo & the Vercel AI SDK

Deactivated User


The Vercel AI SDK is a library designed to help developers build conversational streaming user interfaces in JavaScript and TypeScript. It makes it really easy to build ChatGPT-like interfaces.

If you haven't already, read the docs or watch a video walkthrough on setting up the Vercel AI SDK.

What it doesn't do

Out of the box, the AI SDK doesn't store any of your requests. The docs include a guide on saving a completion to a database after it has been streamed back to the user.

You can manually do it like this:

```typescript
import { OpenAIStream, StreamingTextResponse } from 'ai';

export async function POST(req: Request) {
  // ... (create the streaming OpenAI `response` and define `prompt` here)

  // Convert the response into a friendly text-stream
  const stream = OpenAIStream(response, {
    onStart: async () => {
      // This callback is called when the stream starts
      // You can use this to save the prompt to your database
      // (savePromptToDatabase is your own helper, not part of the SDK)
      await savePromptToDatabase(prompt);
    },
    onToken: async (token: string) => {
      // This callback is called for each token in the stream
      // You can use this to debug the stream or save the tokens to your database
      console.log(token);
    },
    onCompletion: async (completion: string) => {
      // This callback is called when the stream completes
      // You can use this to save the final completion to your database
      // (saveCompletionToDatabase is also your own helper)
      await saveCompletionToDatabase(completion);
    },
  });

  // Respond with the stream
  return new StreamingTextResponse(stream);
}
```

But you'll have to set up a database, design a schema, deploy it, write to it, debug it, maintain it, and more 🥱
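To make that concrete, here is a minimal sketch of the two helpers the snippet above calls. They are hypothetical: the AI SDK does not provide them, and an in-memory array stands in for a real database table, which is exactly the part you would still have to design, deploy, and maintain. It also exposes a subtle problem with the one-argument `saveCompletionToDatabase(completion)` call.

```typescript
// Hypothetical row shape for one logged request. A real schema would
// likely also need timestamps, user IDs, model name, token counts, etc.
interface ChatLogRow {
  id: number;
  prompt: string;
  completion: string | null;
}

// In-memory stand-in for a database table (lost on every restart or deploy).
const rows: ChatLogRow[] = [];
let lastId = 0;

// Hypothetical helper called from onStart: insert a row for the prompt.
async function savePromptToDatabase(prompt: string): Promise<number> {
  lastId += 1;
  rows.push({ id: lastId, prompt, completion: null });
  return lastId;
}

// Hypothetical helper called from onCompletion: attach the completion to
// the most recently inserted row. Under concurrent requests this can attach
// a completion to the wrong prompt, one of the details a real implementation
// would have to solve (for example by passing the row id through).
async function saveCompletionToDatabase(completion: string): Promise<void> {
  const row = rows.find((r) => r.id === lastId);
  if (row) {
    row.completion = completion;
  }
}
```

Even this toy version raises schema and correlation questions before you get to hosting, migrations, or a UI for browsing the logs.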

There's an easier way.

MonteloAI

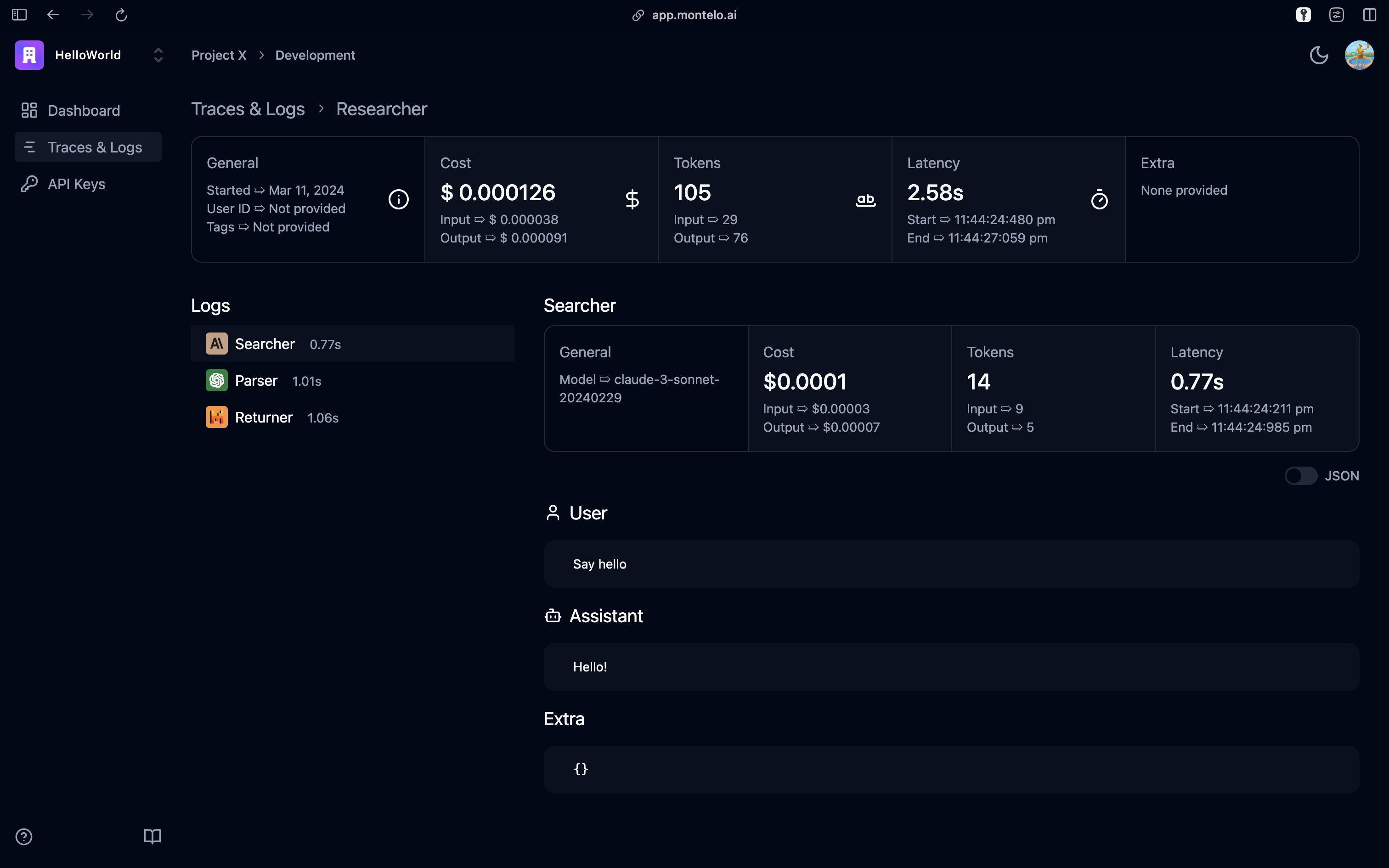

MonteloAI is the LLM DevOps platform for TypeScript. With one simple code change, Montelo will automatically log all your requests to a beautiful dashboard where you can track latency, cost, and token usage.
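As an illustration of what a cost column involves (not how Montelo computes it, which the post doesn't describe), cost is typically estimated from the token counts in the `usage` field of an OpenAI chat completion response, multiplied by per-token prices. The prices below are placeholders you supply; they are not real provider pricing.

```typescript
// Shape of the `usage` field on an OpenAI chat completion response.
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
  total_tokens: number;
}

// Placeholder prices in USD per 1,000 tokens. These are NOT real prices;
// check your provider's current pricing before relying on the result.
interface Pricing {
  inputPer1K: number;
  outputPer1K: number;
}

// Estimate the cost of one request: input and output tokens are usually
// priced differently, so each side is charged at its own rate.
function estimateCostUSD(usage: Usage, pricing: Pricing): number {
  const inputCost = (usage.prompt_tokens / 1000) * pricing.inputPer1K;
  const outputCost = (usage.completion_tokens / 1000) * pricing.outputPer1K;
  return inputCost + outputCost;
}
```

For example, 1,200 prompt tokens and 300 completion tokens at placeholder rates of 0.001 and 0.002 USD per 1K tokens come to 0.0012 + 0.0006 = 0.0018 USD.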

To get started, follow the steps below.

Create an account and a project

Visit Montelo to create a (free) account, then create a project.

Get And Set Your API Key

Open your project's dashboard and copy its API key. Add it to your `.env` file as `MONTELO_API_KEY`:

```
MONTELO_API_KEY=sk-...
```
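Next.js loads `.env` files into `process.env` for you. Since the examples in this post construct `new Montelo()` without arguments, it presumably picks the key up from the environment on its own; a missing key would then only surface on the first request. A small fail-fast check can catch that earlier. The `requireEnv` helper below is hypothetical and not part of Montelo.

```typescript
// Hypothetical helper: read a required environment variable or throw a
// clear error immediately, instead of failing later inside a request.
function requireEnv(name: string): string {
  const value = process.env[name];
  if (!value) {
    throw new Error(`Missing environment variable ${name}. Add it to your .env file.`);
  }
  return value;
}
```

Calling `requireEnv('MONTELO_API_KEY')` at the top of your route module only verifies that the variable is present; it does not validate the key itself.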

Install Montelo

Back in your IDE, install Montelo:

```bash
npm install montelo
```

Replace OpenAI With Montelo

Replace `new OpenAI()` with `new Montelo()`:

```typescript
// import OpenAI from 'openai';
import { Montelo } from 'montelo';

// const openai = new OpenAI();
const montelo = new Montelo();
```

Then change every `openai` call to `montelo.openai`:

```typescript
// ❌
// const response = await openai.chat.completions.create({
//   model: 'gpt-3.5-turbo',
//   messages,
// });

// ✅
const response = await montelo.openai.chat.completions.create({
  model: 'gpt-3.5-turbo',
  messages,
});
```
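The swap works as a one-line change because `montelo.openai` keeps the same `chat.completions.create` call shape as the OpenAI client. As a rough illustration of that general pattern only (Montelo's actual implementation isn't described here), the sketch below wraps any client with that shape and records how long each call took and whether it succeeded. `ChatClient`, `LogEntry`, and `withLogging` are hypothetical names.

```typescript
// Minimal slice of an OpenAI-style client: just chat.completions.create.
interface ChatClient<Params, Result> {
  chat: { completions: { create(params: Params): Promise<Result> } };
}

// One recorded call: how long it took and whether it succeeded.
interface LogEntry {
  durationMs: number;
  ok: boolean;
}

// Wrap a client so every create() call is timed and logged, while callers
// keep using the exact same method path and arguments.
function withLogging<P, R>(client: ChatClient<P, R>, log: LogEntry[]): ChatClient<P, R> {
  return {
    chat: {
      completions: {
        async create(params: P): Promise<R> {
          const start = Date.now();
          try {
            const result = await client.chat.completions.create(params);
            log.push({ durationMs: Date.now() - start, ok: true });
            return result;
          } catch (err) {
            // Record the failure, then rethrow so callers still see the error.
            log.push({ durationMs: Date.now() - start, ok: false });
            throw err;
          }
        },
      },
    },
  };
}
```

One caveat with this naive approach: with `stream: true`, the OpenAI client's promise resolves once the stream is returned, so the recorded duration covers the time until streaming starts, not until the last token arrives.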

And you're done! You should now be able to see all your logs on the dashboard 🚀

Montelo currently supports OpenAI, Mistral, and Anthropic.

Here's the complete example:

```typescript
// src/app/api/chat/route.ts
import OpenAI from 'openai';
import { Montelo } from 'montelo';
import { OpenAIStream, StreamingTextResponse } from 'ai';
import { NextResponse } from 'next/server';

// Create a Montelo client (that's edge friendly!)
const montelo = new Montelo();

// IMPORTANT! Set the runtime to edge
export const runtime = 'edge';

export async function POST(req: Request) {
  try {
    const { messages } = await req.json();

    // Ask Montelo for a streaming chat completion given the prompt
    const response = await montelo.openai.chat.completions.create({
      name: 'chat',
      model: 'gpt-3.5-turbo',
      stream: true,
      messages,
    });

    // Convert the response into a friendly text-stream
    const stream = OpenAIStream(response);

    // Respond with the stream
    return new StreamingTextResponse(stream);
  } catch (error) {
    // OpenAI.APIError is defined in the openai package, hence the import above
    if (error instanceof OpenAI.APIError) {
      const { name, status, headers, message } = error;
      return NextResponse.json({ name, status, headers, message }, { status });
    } else {
      throw error;
    }
  }
}
```
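On the client you would normally use the SDK's `useChat` hook from `ai/react`. To show what the route sends back, here is a framework-free sketch that reads the response body chunk by chunk. It assumes the body is plain text, which is what `StreamingTextResponse` sends by default in the SDK versions this post targets; `readTextStream` is a hypothetical helper name.

```typescript
// Read a streamed text response chunk by chunk, calling onChunk as text
// arrives, and return the full text once the stream ends.
async function readTextStream(
  res: Response,
  onChunk: (text: string) => void = () => {},
): Promise<string> {
  if (!res.ok || !res.body) {
    throw new Error(`Request failed with status ${res.status}`);
  }
  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let full = '';
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // { stream: true } keeps multi-byte characters intact across chunk boundaries
    const text = decoder.decode(value, { stream: true });
    full += text;
    onChunk(text);
  }
  // Flush any bytes still buffered in the decoder.
  const rest = decoder.decode();
  if (rest) {
    full += rest;
    onChunk(rest);
  }
  return full;
}

// Example call against the route above (commented out; needs a running app):
// const res = await fetch('/api/chat', {
//   method: 'POST',
//   headers: { 'Content-Type': 'application/json' },
//   body: JSON.stringify({ messages: [{ role: 'user', content: 'Hello!' }] }),
// });
// const text = await readTextStream(res, (chunk) => console.log(chunk));
```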

For the full repo:
