Getting Started with Architecture for AI Apps: A Founder’s Guide

Were Samson Bruno

Introduction

I assume you already have a keen interest in artificial intelligence and are now eager to build your own AI app. It could be a ChatGPT wrapper or something entirely new that has never been applied in any industry before. I have walked the path you are about to take, and in this article I will take you where I have been.

For reference, I will use the initial architecture of our product, Intelli. Our team is made up of four people, each holding a CTO title with a prefix attached, which means everyone’s opinion influences the technical decisions we make. When we envisioned Intelli (fondly referred to as Elli), we broke it down into movable parts that would come together to hit the sweet spot of data and intelligence, solving our customers’ problems in the easiest and most cost-effective way.

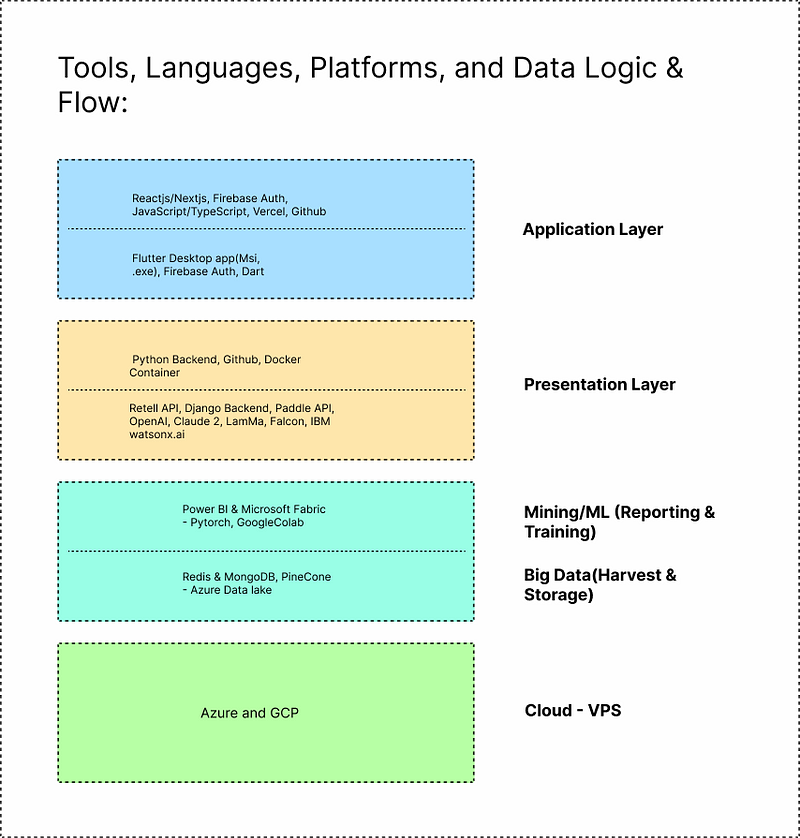

OSI Model Diagram

Bear in mind that our platform choices were based on cost, performance, ease of use, our team’s familiarity with each tool, and the community built around it. For example, we had $1,000 in Azure credits but only $200 in Google Cloud credits, so it made financial sense to rely on Azure, even though we acknowledge it was not the friendliest cloud platform available.

For databases, we split our needs into two use cases. The first was basic storage and retrieval. Here we went for a vector database, because vector databases allow fast similarity searches, which is what we needed since, at one point, our architecture included a RAG (retrieval-augmented generation) model. We knew we would be indexing data, and a vector database was the best fit for storing it.
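To make "fast similarity search" concrete, here is a minimal sketch of the idea in plain Python. It does not use Pinecone's API; it scores every stored vector against a query with cosine similarity and keeps the best matches. The document ids and the tiny 3-dimensional vectors are made up for illustration (real embeddings come from an embedding model and have hundreds of dimensions), and vector databases typically replace this linear scan with an approximate nearest-neighbour index so lookups stay fast at scale.

```python
import math

# Toy in-memory "index": document id -> embedding vector.
# These ids and 3-dimensional values are invented for illustration only.
INDEX: dict[str, list[float]] = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.8, 0.2],
    "store-hours": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbour search: score every vector, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # A query embedding pointing mostly along the first dimension.
    for doc_id, score in top_k([1.0, 0.2, 0.0], INDEX):
        print(f"{doc_id}: {score:.3f}")
```

In a RAG setup, the text behind the top-scoring ids would then be passed to the LLM as context for answering the user's question.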

Once again, the choice came down to available resources and familiarity with the tech. We found documentation on how to set up indexes in Pinecone, as well as LangChain documentation tailored specifically to Pinecone. Add to that the errors we ran into while trying to use Redis, our first choice for a vector DB, and we quickly switched to Pinecone.

MongoDB and Azure Data Lake didn’t see the light of day, for different reasons. The former didn’t offer the easiest path to entry despite the plentiful documentation out there; its learning curve was steep, so we chose to forgo it, even though we acknowledge how robust MongoDB Atlas semantic search is, especially when coupled with a RAG model.

The latter came too early for us. We are not yet at the scale of big data, so running this service before we have outgrown our current databases would simply waste resources. We will still keep it on our rack for when we get there and our needs warrant that kind of storage capacity.

This brings me to the second segment of our databases: data mining and machine learning. We saw the value in making our system better at answering questions by fine-tuning a foundation model into a proprietary, enterprise LLM built specifically for our business use case and fully decoupled from, and independent of, its source (parent) model.

When we started, we only considered PyTorch as our library for natural language processing (NLP), with Google Colab as the playground for our training. We were later introduced to what we considered better options, such as TensorFlow, an end-to-end open-source platform for machine learning that would serve our needs adequately and ticks most of the boxes we needed.

Power BI (in Microsoft Fabric) was essentially our reporting tool, used to visualize the progress of our training and machine learning procedures.

Moving up the stack, we chose a Python back end built with the Django framework. Django is structured, organized, and works out of the box ("batteries included"), which greatly increases our speed of building. We also had people nearby who were proficient with it, which made it a sound decision. The most important reason, however, was that we considered the intricacies of handling data for ML in any language other than Python, and it made sense to work in Python from day 0.

We host our codebase on GitHub and initially planned to ship it to production in a Docker container. As we proceeded, however, we found alternative ways to ship it that were easier for us to execute. Vercel offered a serverless option for launching our Django app, and we jumped at it (I love all things serverless!).

For APIs, we use Retell for voice and Paddle for payments. Our LLMs are OpenAI and Llama 2, and we hope to soon have our own LLM, Epsilon, join their ranks.
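With more than one model in play (OpenAI and Llama 2 today, Epsilon later), one common way to keep things manageable is a thin provider-agnostic layer, so the rest of the app never depends on a specific vendor SDK. The sketch below is hypothetical and is not Intelli's actual code: `LLMProvider`, `EchoProvider` and `ModelRouter` are illustrative names, and `EchoProvider` deliberately makes no network calls. A real backend would wrap the vendor's client inside `complete`.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Hypothetical interface that every model backend implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Hypothetical stand-in for a real backend (OpenAI, Llama 2, Epsilon).

    It only echoes the prompt; a real implementation would call the
    provider's SDK or HTTP API here.
    """

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Keeps providers in a registry keyed by name."""

    def __init__(self) -> None:
        self._providers: dict[str, LLMProvider] = {}

    def register(self, provider: LLMProvider) -> None:
        self._providers[provider.name] = provider

    def complete(self, model: str, prompt: str) -> str:
        try:
            provider = self._providers[model]
        except KeyError:
            raise ValueError(f"unknown model: {model!r}") from None
        return provider.complete(prompt)


if __name__ == "__main__":
    router = ModelRouter()
    router.register(EchoProvider("openai"))
    router.register(EchoProvider("llama-2"))
    print(router.complete("llama-2", "Hello"))
```

Under this design, bringing a new in-house model online would mean registering one more provider rather than changing every call site.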

For the Presentation Layer, we chose the Next.js framework for the web app and Flutter for the desktop app, because our team already had experience with both, which made them a natural fit.

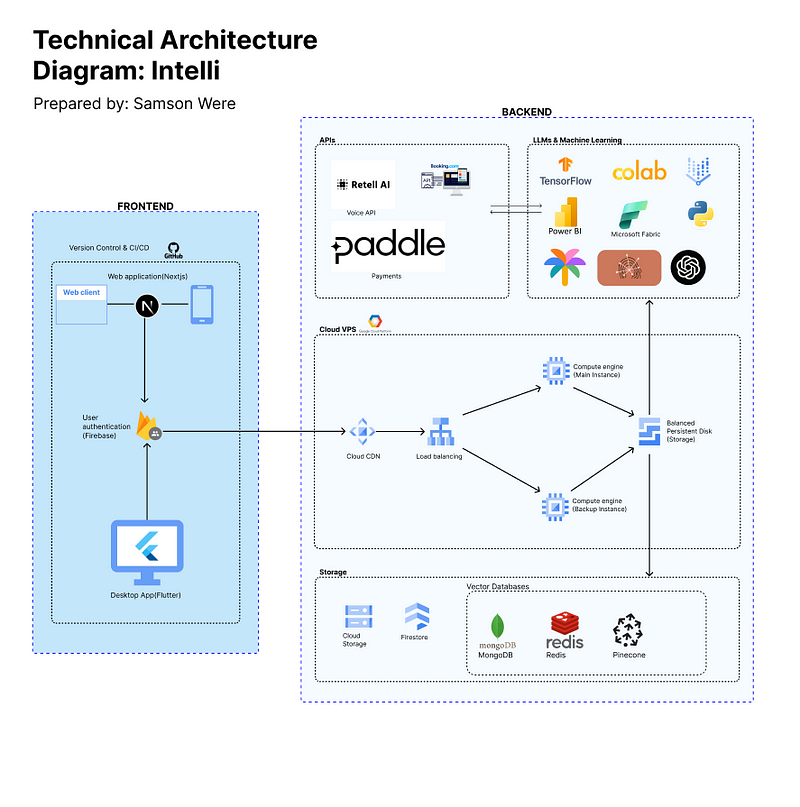

Technical Architecture Diagram

Now that I have walked through the OSI model, the architecture diagram should make sense at a glance. You will notice elements in it that were not part of the OSI model; that is because we kept refining and changing our architecture as we learned along the way.

Additional Notes

Here are some of the components we switched out entirely:

Cloud service provider: Much as we love GCP, and even though we had crafted our entire cloud infrastructure around it, we had to go where the credits flowed, to make our building azurier (easier).

Claude and PaLM 2: We thought we would start with these two models, but things turned out in OpenAI’s favor, so we took that path.

OTAs: We had missed this crucial part, so we had to look for OTAs that offered developer platforms and provided API access.

Conclusion

If you have followed this article to this point, I believe you can now see things as they are: plain. I hope this has been an insightful read and that it inspires you to come up with an even more optimised architecture, and subsequently a better product.

I wrote this to help you see AI app architecture the same way you see any other software you have built before; what changes are the tools, the application of the software, and the paradigm for building it.

You can build anything you set your mind to, so go forth and build.

Written by

Were Samson Bruno

I am a developer from Uganda, passionate about sharing my knowledge.