How to install AWX on AWS EKS

Brian Gaber

What is AWX

AWX is the free, open source version of Ansible Automation Platform, formerly known as Ansible Tower. AWX is an open source community project, sponsored by Red Hat, that enables users to better control their community Ansible project use in IT environments. AWX is the upstream project from which the automation controller component is ultimately derived. For more information on AWX look here.

Objective

The purpose of this document is to explain how to install AWX 23.9.0 on an AWS EKS cluster using AWX Operator. I recommend you read the entire document before executing any of these steps so that you can decide ahead of time how you will handle DNS and how you will expose AWX. Three options are presented for exposing AWX. DNS can be handled automatically by ExternalDNS or manually. If you do not want your AWX deployment exposed on the Internet then you probably don't want to use ExternalDNS. I show how to deploy AWX on a two node EKS cluster using the AWX Operator. My objective was to make AWX securely accessible from an AWS ALB.


Environment

Tested on:

- AWS EKS Cluster

Products that will be deployed:

AWS CSI Storage Add-on

AWS CNI Network Add-on

AWS Load Balancer Controller add-on (optional, required for Application Load Balancer)

ExternalDNS (optional, required for automatic creation of Route 53 DNS Zone record)

AWX 23.9.0

PostgreSQL 13

References

Prerequisite Instructions

Computing resources

EKS Management Console installed on Amazon Linux v2 AMI on t3a.micro with AWS CLI v2 and eksctl.

Worker Nodes on t3a.xlarge and 200 GB storage minimum.

It's recommended to add more CPUs and RAM (for example 4 CPUs and 8 GiB RAM or more) to avoid performance and job scheduling issues.

The files in this repository are configured to ignore resource requirements which are specified by AWX Operator by default.

Storage resources

- to be added

Setup EKS Management Console

The purpose of the EKS Management Console (EMC) is to manage the EKS Cluster and EKS worker nodes. The EMC is built on a small EC2 instance. I used a t3a.micro. Among many functions it is the EMC that creates, updates and deletes all Clusters and Node Groups. The EMC is created by installing the AWS CLI, kubectl, eksctl and helm software packages.

Create IAM User

Create an IAM user with programmatic access only and attach the AdministratorAccess policy. Copy the Access Key ID and Secret Access Key; they will be needed when setting up the AWS CLI.

Install Prerequisite Software

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install --bin-dir /usr/bin --install-dir /usr/bin/aws-cli --update

Configure the AWS CLI using the Access Key ID and Secret Access Key.

aws configure

curl -O https://s3.us-west-2.amazonaws.com/amazon-eks/1.29.0/2024-01-04/bin/linux/amd64/kubectl

sudo chmod +x ./kubectl

sudo mv kubectl /usr/local/bin

kubectl version

curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

sudo mv /tmp/eksctl /usr/local/bin

eksctl version

Install helm

Helm is a Kubernetes deployment tool for automating creation, packaging, configuration, and deployment of applications and services to Kubernetes clusters. In these instructions Helm will be used for the AWS Load Balancer Controller installation.

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

which helm

helm version
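Before moving on, it can help to confirm that all four tools are on the PATH. The following is a minimal sketch, not part of the original procedure; the function name missing_tools is a hypothetical helper introduced here for illustration.

```shell
# Report which of the given commands are not found on PATH.
# Prints one missing name per line; prints nothing when all are present.
# (missing_tools is a hypothetical helper name, not part of any AWS tooling.)
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
    fi
  done
}

# The four tools this guide installs on the EMC.
missing=$(missing_tools aws kubectl eksctl helm)
if [ -n "$missing" ]; then
  # Unquoted on purpose so the names are joined on one line.
  echo "Not installed yet:" $missing
else
  echo "All prerequisite tools are installed"
fi
```

If anything is reported as missing, repeat the corresponding install step above before creating the cluster.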

Create EKS Cluster

There are many options for creating an EKS cluster using eksctl as shown in this eksctl schema document. It can be created using a k8s manifest file or from the command line. From the CLI there are also many options as shown by running the eksctl create cluster --help command. I will just show two variations: one that creates a new VPC and required components (subnets, etc) and one that uses an existing VPC and subnets.

Deploy Cluster To New VPC From CLI

The following command creates a new EKS cluster in a new VPC and required components (subnets, etc):

eksctl create cluster --name awx --version 1.29 --region us-east-1 --zones us-east-1a,us-east-1b,us-east-1c,us-east-1d,us-east-1f --nodegroup-name standard-workers --node-type t3a.xlarge --nodes 2 --nodes-min 1 --nodes-max 4 --managed

Deploy Cluster To Existing VPC From CLI

The following command creates a new EKS cluster in an existing VPC and subnets with the worker nodes only having private IP addresses:

eksctl create cluster --name awx --version 1.29 --region us-east-1 --vpc-public-subnets=subnet-42267f68,subnet-c2565fb4 --vpc-private-subnets=subnet-2a590000,subnet-63696015 --nodegroup-name standard-workers --node-type t3a.xlarge --nodes 2 --nodes-min 1 --nodes-max 4 --node-private-networking --managed

Deploy Cluster To Existing VPC With eksctl Manifest

The following k8s manifest performs the same EKS deployment as the eksctl command above. That is, it creates a new EKS cluster in an existing VPC and subnets with the worker nodes only having private IP addresses:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: awx
  region: us-east-1
  version: "1.29"
managedNodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 2
  instanceType: t3a.xlarge
  labels:
    alpha.eksctl.io/cluster-name: awx
    alpha.eksctl.io/nodegroup-name: standard-workers
  maxSize: 3
  minSize: 1
  name: standard-workers
  privateNetworking: true
  tags:
    alpha.eksctl.io/nodegroup-name: standard-workers
    alpha.eksctl.io/nodegroup-type: managed
vpc:
  id: vpc-87e65ae0
  manageSharedNodeSecurityGroupRules: true
  nat:
    gateway: Disable
  subnets:
    private:
      us-east-1b:
        id: subnet-2a590000
      us-east-1c:
        id: subnet-63696015
    public:
      us-east-1b:
        id: subnet-42267f68
      us-east-1c:
        id: subnet-c2565fb4

The EKS cluster is created with this manifest using this command:

eksctl create cluster -f awx-cluster.yaml

You can monitor the build from CloudFormation in the AWS Console. As well you will see output to the eksctl command similar to the following:

2022-05-30 09:13:24 [ℹ] eksctl version 0.173.0

2022-05-30 09:13:24 [ℹ] using region us-east-1

2022-05-30 09:13:24 [✔] using existing VPC (vpc-87e65ae0) and subnets (private:map[us-east-1b:{subnet-2a590000 us-east-1b 10.251.36.0/22 0} us-east-1c:{subnet-63696015 us-east-1c 10.251.40.0/22 0}] public:map[us-east-1b:{subnet-42267f68 us-east-1b 10.251.32.0/23 0} us-east-1c:{subnet-c2565fb4 us-east-1c 10.251.34.0/23 0}])

2022-05-30 09:13:24 [!] custom VPC/subnets will be used; if resulting cluster doesn't function as expected, make sure to review the configuration of VPC/subnets

2022-05-30 09:13:24 [ℹ] nodegroup "standard-workers" will use "" [AmazonLinux2/1.29]

2022-05-30 09:13:24 [ℹ] using Kubernetes version 1.29

2022-05-30 09:13:24 [ℹ] creating EKS cluster "awx" in "us-east-1" region with managed nodes

2022-05-30 09:13:24 [ℹ] 1 nodegroup (standard-workers) was included (based on the include/exclude rules)

2022-05-30 09:13:24 [ℹ] will create a CloudFormation stack for cluster itself and 0 nodegroup stack(s)

2022-05-30 09:13:24 [ℹ] will create a CloudFormation stack for cluster itself and 1 managed nodegroup stack(s)

2022-05-30 09:13:24 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-east-1 --cluster=awx'

2022-05-30 09:13:24 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "awx" in "us-east-1"

2022-05-30 09:13:24 [ℹ] CloudWatch logging will not be enabled for cluster "awx" in "us-east-1"

2022-05-30 09:13:24 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=us-east-1 --cluster=awx'

2022-05-30 09:13:24 [ℹ]

2 sequential tasks: { create cluster control plane "awx",

2 sequential sub-tasks: {

wait for control plane to become ready,

create managed nodegroup "standard-workers",

}

}

2022-05-30 09:13:24 [ℹ] building cluster stack "eksctl-awx-cluster"

2022-05-30 09:13:25 [ℹ] deploying stack "eksctl-awx-cluster"

2022-05-30 09:13:55 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:14:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:15:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:16:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:17:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:18:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:19:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:20:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:21:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:22:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:23:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:24:25 [ℹ] waiting for CloudFormation stack "eksctl-awx-cluster"

2022-05-30 09:26:26 [ℹ] building managed nodegroup stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:26:26 [ℹ] deploying stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:26:26 [ℹ] waiting for CloudFormation stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:26:56 [ℹ] waiting for CloudFormation stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:27:28 [ℹ] waiting for CloudFormation stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:28:07 [ℹ] waiting for CloudFormation stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:29:46 [ℹ] waiting for CloudFormation stack "eksctl-awx-nodegroup-standard-workers"

2022-05-30 09:29:46 [ℹ] waiting for the control plane availability...

2022-05-30 09:29:46 [✔] saved kubeconfig as "/home/awsadmin/bg216063/.kube/config"

2022-05-30 09:29:46 [ℹ] no tasks

2022-05-30 09:29:46 [✔] all EKS cluster resources for "awx" have been created

2022-05-30 09:29:46 [ℹ] nodegroup "standard-workers" has 2 node(s)

2022-05-30 09:29:46 [ℹ] node "ip-10-251-39-154.ec2.internal" is ready

2022-05-30 09:29:46 [ℹ] node "ip-10-251-41-159.ec2.internal" is ready

2022-05-30 09:29:46 [ℹ] waiting for at least 1 node(s) to become ready in "standard-workers"

2022-05-30 09:29:46 [ℹ] nodegroup "standard-workers" has 2 node(s)

2022-05-30 09:29:46 [ℹ] node "ip-10-251-39-154.ec2.internal" is ready

2022-05-30 09:29:46 [ℹ] node "ip-10-251-41-159.ec2.internal" is ready

2022-05-30 09:29:49 [ℹ] kubectl command should work with "/home/awsadmin/bg216063/.kube/config", try 'kubectl get nodes'

2022-05-30 09:29:49 [✔] EKS cluster "awx" in "us-east-1" region is ready

Only Deploy Nodegroup With eksctl Manifest

There may be occasions where you only want to deploy the Nodegroup on an existing EKS Cluster and not deploy the cluster. Two possibilities are: one, you want to change one of the Nodegroup parameters, like instanceType; and two, the build of the cluster was interrupted by loss of Internet or VPN while the eksctl create cluster command was running, resulting in the cluster being deployed but not the Nodegroup.

In the first case, to change one of the Nodegroup parameters, you first have to remove the existing Nodegroup. To do this you first need to get the Nodegroup name. This can be done with the eksctl get nodegroup --cluster <CLUSTER NAME> command. For example, in our case, the following commands will delete the Nodegroup:

eksctl get nodegroup --cluster awx

CLUSTER  NODEGROUP         STATUS  CREATED               MIN SIZE  MAX SIZE  DESIRED CAPACITY  INSTANCE TYPE  IMAGE ID    ASG NAME                                                   TYPE
awx      standard-workers  ACTIVE  2022-05-30T13:26:53Z  1         4         2                 t3a.xlarge     AL2_x86_64  eks-standard-workers-a2c08a92-eda6-425e-048c-d1b5f786301c  managed

eksctl delete nodegroup --cluster awx --name standard-workers

To only deploy the Nodegroup and not the EKS Cluster you need an eksctl manifest file similar to the one shown above and run the command: eksctl create nodegroup -f awx-cluster.yaml

Notice the command this time is eksctl create nodegroup and not eksctl create cluster.
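When scripting the delete step, the Nodegroup name can be pulled out of the eksctl get nodegroup table instead of being copied by hand. The sketch below is illustrative only: nodegroup_names is a hypothetical helper, and it assumes the table layout shown earlier in this section (header row first, Nodegroup name in the second column) is the only thing written to standard output.

```shell
# Print the NODEGROUP column (second field) of `eksctl get nodegroup` output,
# skipping the header row and any blank lines. Reads the table from stdin.
# (nodegroup_names is a hypothetical helper name.)
nodegroup_names() {
  awk 'NR > 1 && NF > 0 { print $2 }'
}

# Sample input copied from the output shown in this section.
sample='CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE
awx standard-workers ACTIVE 2022-05-30T13:26:53Z 1 4 2 t3a.xlarge AL2_x86_64 eks-standard-workers-a2c08a92-eda6-425e-048c-d1b5f786301c managed'

printf '%s\n' "$sample" | nodegroup_names

# Against a live cluster (not run here):
#   eksctl get nodegroup --cluster awx | nodegroup_names
```

Each name printed this way can then be passed to eksctl delete nodegroup --cluster awx --name.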

AWS CSI Storage add-on

The Amazon Elastic Block Store (Amazon EBS) Container Storage Interface (CSI) driver manages the lifecycle of Amazon EBS volumes as storage for the Kubernetes Volumes that you create. The Amazon EBS CSI driver makes Amazon EBS volumes for these types of Kubernetes volumes: generic ephemeral volumes and persistent volumes

Install the CSI Storage add-on

AWS CNI Network Add-on

The Amazon VPC CNI plugin for Kubernetes is the networking plugin for Pod networking in Amazon EKS clusters. The plugin is responsible for allocating VPC IP addresses to Kubernetes nodes and configuring the necessary networking for Pods on each node.

Install the CNI Network add-on

Create the Amazon VPC CNI plugin for Kubernetes IAM role

Optional DNS

An ACM certificate will be required for the DNS Domain you will be using for AWX. If the DNS Domain does not already exist then it will need to be created.

Once the DNS Hosted Zone and ACM certificate are created we can move on to ExternalDNS if public access is required.

ExternalDNS will require an IAM policy for permissions to perform its functions. The recommended IAM policy can be found here

Here is a CloudFormation template that will create the ExternalDNS IAM Policy:

{
  "AWSTemplateFormatVersion": "2010-09-09",
  "Description": "The IAM Resources for External DNS",
  "Parameters": {
    "DeployRole": {
      "Description": "Decide whether deploy or not the IAM Role",
      "Type": "String",
      "Default": "no",
      "AllowedValues": [
        "yes",
        "no"
      ]
    }
  },
  "Conditions": {
    "CreateIamRole": { "Fn::Equals": [ { "Ref": "DeployRole" }, "yes"] }
  },
  "Resources": {
    "ExternalDnsIamPolicy": {
      "Type": "AWS::IAM::ManagedPolicy",
      "Properties": {
        "PolicyDocument" : {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Action": [
                "route53:ChangeResourceRecordSets"
              ],
              "Resource": [
                "arn:aws:route53:::hostedzone/*"
              ]
            },
            {
              "Effect": "Allow",
              "Action": [
                "route53:ListHostedZones",
                "route53:ListResourceRecordSets"
              ],
              "Resource": [
                "*"
              ]
            }
          ]
        },
        "ManagedPolicyName" : "ExternalDNSPolicy"
      }
    }
  }
}

Further on in the Setting up ExternalDNS for Services on AWS document you will find the recommended Kubernetes manifest for clusters with RBAC enabled. Read this manifest carefully and deploy ExternalDNS using this manifest.

For example, if the Manifest (for clusters with RBAC enabled) is copied into a file named external-dns.yaml then it is deployed with this command:

kubectl apply -f external-dns.yaml

Optional AWS Load Balancer Controller add-on

The AWS Load Balancer Controller requires subnets with specific tags. Read this document for information on the subnet tags

Public subnets are used for internet-facing load balancers. These subnets must have the following tags:

Key: kubernetes.io/role/elb, Value: 1 or empty

Private subnets are used for internal load balancers. These subnets must have the following tags:

Key: kubernetes.io/role/internal-elb, Value: 1 or empty
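If the subnets are missing these tags, they can be added with the AWS CLI. The following sketch only prints the aws ec2 create-tags commands so they can be reviewed before being run; subnet_tag_cmd is a hypothetical helper, and the subnet IDs are the example IDs used for the existing-VPC cluster earlier in this document.

```shell
# Print the `aws ec2 create-tags` command that applies the load balancer role
# tag to one subnet. kind is "public" (internet-facing) or "private" (internal).
# (subnet_tag_cmd is a hypothetical helper name; nothing is executed against AWS.)
subnet_tag_cmd() {
  kind=$1
  subnet_id=$2
  case "$kind" in
    public)  key="kubernetes.io/role/elb" ;;
    private) key="kubernetes.io/role/internal-elb" ;;
    *) echo "unknown subnet kind: $kind" >&2; return 1 ;;
  esac
  printf 'aws ec2 create-tags --resources %s --tags Key=%s,Value=1\n' "$subnet_id" "$key"
}

# Example subnet IDs from the cluster definitions earlier in this document.
for id in subnet-42267f68 subnet-c2565fb4; do subnet_tag_cmd public "$id"; done
for id in subnet-2a590000 subnet-63696015; do subnet_tag_cmd private "$id"; done
```

Printing rather than executing keeps the step reviewable; the printed lines can be pasted into the shell once the subnet IDs have been checked.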

In order to use an ALB, referred to as an Ingress in Kubernetes, you must first install the AWS Load Balancer Controller add-on following these AWS instructions.

Ensure you create the AmazonEKSLoadBalancerControllerRole IAM Role described in Steps 1 and 2.

In step 5 of these instructions I recommend using the Helm 3 or later instructions, rather than the Kubernetes manifest instructions.

Here is the helm install command I used:

helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=awx \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller

As shown in step 6 of the AWS instructions, I confirmed the deployment with this command:

kubectl get deployment -n kube-system aws-load-balancer-controller

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
aws-load-balancer-controller   2/2     2            2           3m23s

If the READY column reports 0/2 for a long time then the AmazonEKSLoadBalancerControllerRole IAM Role is probably not set up correctly.
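The READY check can also be scripted. This is a minimal sketch under the assumption that the READY value has the ready/desired form shown above (for example 2/2); is_fully_ready is a hypothetical helper name.

```shell
# Succeed when a READY value such as "2/2" shows every replica ready
# and at least one replica desired. Non-numeric input is treated as not ready.
# (is_fully_ready is a hypothetical helper name.)
is_fully_ready() {
  ready=${1%/*}     # text before the slash
  desired=${1#*/}   # text after the slash
  [ "$desired" -gt 0 ] 2>/dev/null && [ "$ready" -eq "$desired" ] 2>/dev/null
}

for value in 2/2 0/2; do
  if is_fully_ready "$value"; then
    echo "$value: controller is ready"
  else
    echo "$value: not ready yet, check the IAM role if this persists"
  fi
done

# Against a live cluster (not run here):
#   ready=$(kubectl get deployment -n kube-system aws-load-balancer-controller --no-headers | awk '{print $2}')
#   is_fully_ready "$ready"
```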

To undo the helm install command use the following command:

helm uninstall aws-load-balancer-controller -n kube-system

Install AWX Application on EKS Cluster

Classic Load Balancer (CLB) Without SSL

Prepare required files

Modification of awx-clb-without-tls.yaml and/or kustomization.yaml may be required depending on your requirements. Here are mine:

awx-clb-without-tls.yaml

---
apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx
spec:
  # These parameters are designed for use with:
  # - AWX Operator: 2.12.2
  #   https://github.com/ansible/awx-operator/blob/2.12.2/README.md
  # - AWX: 23.9.0
  #   https://github.com/ansible/awx/blob/23.9.0/INSTALL.md
  admin_user: admin
  admin_password_secret: awx-admin-password
  # create Classic Load Balancer
  service_type: LoadBalancer
  postgres_configuration_secret: awx-postgres-configuration

kustomization.yaml

---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generatorOptions:
  disableNameSuffixHash: true
secretGenerator:
  - name: awx-postgres-configuration
    type: Opaque
    literals:
      - host=awx-postgres-13
      - port=5432
      - database=awx
      - username=awx
      - password=Ansible123!
      - type=managed
  - name: awx-admin-password
    type: Opaque
    literals:
      - password=Ansible123!
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=2.12.2
  - awx-clb-without-tls.yaml
# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 2.12.2
# Specify a custom namespace in which to install AWX
namespace: awx
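The password literals in kustomization.yaml are example values, and you will probably want your own before the first deployment. As one possible approach, the sketch below generates a random alphanumeric value; gen_password is a hypothetical helper, and it assumes /dev/urandom, tr and head are available, as they are on Amazon Linux.

```shell
# Print a random alphanumeric password of the requested length (default 24),
# followed by a newline. (gen_password is a hypothetical helper name.)
gen_password() {
  length=${1:-24}
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
  echo
}

# Print a line in the form used under "literals:" in kustomization.yaml.
pw=$(gen_password 24)
echo "- password=$pw"
```

Restricting the output to letters and digits avoids characters that would need quoting in the YAML literals.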

Classic Load Balancer (CLB) With SSL

An AWS ACM certificate is required for a CLB with SSL. This ACM certificate should be associated with a Route 53 record in a hosted zone.

Prepare required files

Modification of awx-clb-with-tls.yaml and/or kustomization.yaml may be required depending on your requirements. Here are mine:

awx-clb-with-tls.yaml

---
apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx
spec:
  # These parameters are designed for use with:
  # - AWX Operator: 2.12.2
  #   https://github.com/ansible/awx-operator/blob/2.12.2/README.md
  # - AWX: 23.9.0
  #   https://github.com/ansible/awx/blob/23.9.0/INSTALL.md
  admin_user: admin
  admin_password_secret: awx-admin-password
  # create Classic Load Balancer
  service_type: LoadBalancer
  loadbalancer_protocol: https
  loadbalancer_port: 443
  service_annotations: |
    external-dns.alpha.kubernetes.io/hostname: your.domain-name.com
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:{region}:{account}:certificate/{id}
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: https
  postgres_configuration_secret: awx-postgres-configuration
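The {region}, {account} and {id} placeholders in the certificate ARN must be replaced with real values before deploying. The sketch below is a simple shape check that catches a placeholder left in by mistake. It is illustrative only: is_acm_cert_arn is a hypothetical helper, the example ARN is made up, and the pattern assumes the standard aws partition with a 12-digit account number.

```shell
# Succeed when the argument looks like an ACM certificate ARN:
#   arn:aws:acm:{region}:{12-digit account}:certificate/{id}
# (is_acm_cert_arn is a hypothetical helper; only the standard "aws"
# partition is accepted, which is an assumption of this sketch.)
is_acm_cert_arn() {
  printf '%s\n' "$1" |
    grep -Eq '^arn:aws:acm:[a-z0-9-]+:[0-9]{12}:certificate/[A-Za-z0-9-]+$'
}

# Made-up ARN used only to exercise the check.
example_arn='arn:aws:acm:us-east-1:123456789012:certificate/12345678-1234-1234-1234-123456789012'

for arn in "$example_arn" 'arn:aws:acm:{region}:{account}:certificate/{id}'; do
  if is_acm_cert_arn "$arn"; then
    echo "looks valid: $arn"
  else
    echo "placeholder or malformed: $arn"
  fi
done

# The real ARN can be looked up with (not run here):
#   aws acm list-certificates --region us-east-1
```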

kustomization.yaml

---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generatorOptions:
  disableNameSuffixHash: true
secretGenerator:
  - name: awx-postgres-configuration
    type: Opaque
    literals:
      - host=awx-postgres-13
      - port=5432
      - database=awx
      - username=awx
      - password=Ansible123!
      - type=managed
  - name: awx-admin-password
    type: Opaque
    literals:
      - password=Ansible123!
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=2.12.2
  - awx-clb-with-tls.yaml
# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 2.12.2
# Specify a custom namespace in which to install AWX
namespace: awx

Application Load Balancer (ALB) With SSL

In order to use an ALB, referred to as an Ingress in Kubernetes, you must first install the AWS Load Balancer Controller add-on. This procedure was described above in the Optional AWS Load Balancer Controller add-on section.

An AWS ACM certificate is required for an ALB with SSL. This ACM certificate should be associated with a Route 53 record in a hosted zone.

Run the command below to check whether the AWS Load Balancer Controller add-on is installed.

kubectl get deployment -n kube-system aws-load-balancer-controller

If the AWS Load Balancer Controller add-on is installed then the output should be similar to:

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
aws-load-balancer-controller   2/2     2            2           3m23s

Prepare required files

Modification of awx-ingress.yaml and/or kustomization.yaml may be required depending on your requirements. Here are mine:

awx-ingress.yaml

---
apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx
spec:
  # These parameters are designed for use with:
  # - AWX Operator: 2.12.2
  #   https://github.com/ansible/awx-operator/blob/2.12.2/README.md
  # - AWX: 23.9.0
  #   https://github.com/ansible/awx/blob/23.9.0/INSTALL.md
  admin_user: admin
  admin_password_secret: awx-admin-password
  ingress_type: ingress
  ingress_path: "/"
  ingress_path_type: Prefix
  # hostname value is used in the ALB Listener rules
  # if host is equal to <hostname value> then traffic will be forwarded to Target Group
  hostname: your.domain-name.com
  ingress_annotations: |
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}]'
    alb.ingress.kubernetes.io/actions.redirect: "{\"Type\": \"redirect\", \"RedirectConfig\": {\"Protocol\": \"HTTPS\", \"Port\": \"443\", \"StatusCode\": \"HTTP_301\"}}"
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:{region}:{account}:certificate/{id}
    alb.ingress.kubernetes.io/load-balancer-attributes: "idle_timeout.timeout_seconds=360"
  postgres_configuration_secret: awx-postgres-configuration

kustomization.yaml

---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generatorOptions:
  disableNameSuffixHash: true
secretGenerator:
  - name: awx-postgres-configuration
    type: Opaque
    literals:
      - host=awx-postgres-13
      - port=5432
      - database=awx
      - username=awx
      - password=Ansible123!
      - type=managed
  - name: awx-admin-password
    type: Opaque
    literals:
      - password=Ansible123!
resources:
  # Find the latest tag here: https://github.com/ansible/awx-operator/releases
  - github.com/ansible/awx-operator/config/default?ref=2.12.2
  - awx-ingress.yaml
# Set the image tags to match the git version from above
images:
  - name: quay.io/ansible/awx-operator
    newTag: 2.12.2
# Specify a custom namespace in which to install AWX
namespace: awx

Install AWX Operator and Deploy AWX

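Now that the manifest files have been prepared based on how you have decided to access AWX it is time to deploy AWX. The deployment is done in two passes. First, remove the AWX manifest (awx-clb-without-tls.yaml, awx-clb-with-tls.yaml or awx-ingress.yaml) from the resources list in kustomization.yaml so that only the AWX Operator is installed, then run the following command: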

kubectl apply -k .

This command should produce output similar to the following:

namespace/awx created

customresourcedefinition.apiextensions.k8s.io/awxbackups.awx.ansible.com created

customresourcedefinition.apiextensions.k8s.io/awxrestores.awx.ansible.com created

customresourcedefinition.apiextensions.k8s.io/awxs.awx.ansible.com created

serviceaccount/awx-operator-controller-manager created

role.rbac.authorization.k8s.io/awx-operator-awx-manager-role created

role.rbac.authorization.k8s.io/awx-operator-leader-election-role created

clusterrole.rbac.authorization.k8s.io/awx-operator-metrics-reader created

clusterrole.rbac.authorization.k8s.io/awx-operator-proxy-role created

rolebinding.rbac.authorization.k8s.io/awx-operator-awx-manager-rolebinding created

rolebinding.rbac.authorization.k8s.io/awx-operator-leader-election-rolebinding created

clusterrolebinding.rbac.authorization.k8s.io/awx-operator-proxy-rolebinding created

configmap/awx-operator-awx-manager-config created

secret/awx-admin-password created

secret/awx-postgres-configuration created

service/awx-operator-controller-manager-metrics-service created

deployment.apps/awx-operator-controller-manager created

To monitor the progress of the deployment, check the logs of deployments/awx-operator-controller-manager:

kubectl logs -f deployments/awx-operator-controller-manager -c awx-manager -n awx

Second, in kustomization.yaml put back awx-clb-without-tls.yaml, awx-clb-with-tls.yaml or awx-ingress.yaml under resources and then run this command:

kubectl apply -k .

You should get output similar to the following:

namespace/awx unchanged

customresourcedefinition.apiextensions.k8s.io/awxbackups.awx.ansible.com unchanged

customresourcedefinition.apiextensions.k8s.io/awxrestores.awx.ansible.com unchanged

customresourcedefinition.apiextensions.k8s.io/awxs.awx.ansible.com unchanged

serviceaccount/awx-operator-controller-manager unchanged

role.rbac.authorization.k8s.io/awx-operator-awx-manager-role configured

role.rbac.authorization.k8s.io/awx-operator-leader-election-role unchanged

clusterrole.rbac.authorization.k8s.io/awx-operator-metrics-reader unchanged

clusterrole.rbac.authorization.k8s.io/awx-operator-proxy-role unchanged

rolebinding.rbac.authorization.k8s.io/awx-operator-awx-manager-rolebinding unchanged

rolebinding.rbac.authorization.k8s.io/awx-operator-leader-election-rolebinding unchanged

clusterrolebinding.rbac.authorization.k8s.io/awx-operator-proxy-rolebinding unchanged

configmap/awx-operator-awx-manager-config unchanged

secret/awx-admin-password unchanged

secret/awx-postgres-configuration unchanged

service/awx-operator-controller-manager-metrics-service unchanged

deployment.apps/awx-operator-controller-manager unchanged

awx.awx.ansible.com/awx created

When the awx-ingress.yaml deployment completes successfully, the log output ends with:

kubectl logs -f deployments/awx-operator-controller-manager -c awx-manager -n awx

...

...

----- Ansible Task Status Event StdOut (awx.ansible.com/v1beta1, Kind=AWX, awx/awx) -----

PLAY RECAP *********************************************************************

localhost : ok=65 changed=0 unreachable=0 failed=0 skipped=43 rescued=0 ignored=0
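The PLAY RECAP line is what tells you whether the operator run succeeded. As a rough sketch, the check can be automated by looking for failed=0 and unreachable=0 on the last recap line in the log; recap_ok is a hypothetical helper, and the sample text is the recap shown above.

```shell
# Read operator log text on stdin and succeed only if the last PLAY RECAP
# host line reports failed=0 and unreachable=0. Fails if no recap line is found.
# (recap_ok is a hypothetical helper name.)
recap_ok() {
  awk '
    /ok=[0-9]+/ && /failed=[0-9]+/ { last = $0 }
    END {
      if (last == "") exit 1
      if (last ~ /failed=0( |$)/ && last ~ /unreachable=0( |$)/) exit 0
      exit 1
    }'
}

# Sample recap copied from the log output shown above.
sample='PLAY RECAP *********************************************************************
localhost : ok=65 changed=0 unreachable=0 failed=0 skipped=43 rescued=0 ignored=0'

if printf '%s\n' "$sample" | recap_ok; then
  echo "deployment run succeeded"
else
  echo "deployment run reported failures"
fi

# Against a live cluster (not run here; note there is no -f so the command returns):
#   kubectl logs deployments/awx-operator-controller-manager -c awx-manager -n awx | recap_ok
```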

Required objects have been deployed next to AWX Operator in awx namespace.

$ kubectl -n awx get awx,all,ingress,secrets

NAME                      AGE
awx.awx.ansible.com/awx   44m

NAME                                                   READY   STATUS    RESTARTS   AGE
pod/awx-74bdc7c78d-z94zn                               4/4     Running   0          26m
pod/awx-operator-controller-manager-557589c5f4-78zqq   2/2     Running   0          50m
pod/awx-postgres-0                                     1/1     Running   0          44m

NAME                                                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/awx-operator-controller-manager-metrics-service   ClusterIP   10.100.82.115    <none>        8443/TCP   50m
service/awx-postgres                                      ClusterIP   None             <none>        5432/TCP   44m
service/awx-service                                       ClusterIP   10.100.242.202   <none>        80/TCP     26m

NAME                                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/awx                               1/1     1            1           26m
deployment.apps/awx-operator-controller-manager   1/1     1            1           50m

NAME                                                         DESIRED   CURRENT   READY   AGE
replicaset.apps/awx-74bdc7c78d                               1         1         1       26m
replicaset.apps/awx-operator-controller-manager-557589c5f4   1         1         1       50m

NAME                            READY   AGE
statefulset.apps/awx-postgres   1/1     44m

NAME                                    CLASS    HOSTS                  ADDRESS                                                             PORTS   AGE
ingress.networking.k8s.io/awx-ingress   <none>   your.domain-name.com   k8s-awx-awxingre-b00bcd36ae-545180207.us-east-1.elb.amazonaws.com   80      44m

NAME                                                 TYPE                                  DATA   AGE
secret/awx-admin-password                            Opaque                                1      50m
secret/awx-app-credentials                           Opaque                                3      44m
secret/awx-broadcast-websocket                       Opaque                                1      44m
secret/awx-operator-controller-manager-token-gc8g9   kubernetes.io/service-account-token   3      50m
secret/awx-postgres-configuration                    Opaque                                6      50m
secret/awx-secret-key                                Opaque                                1      44m
secret/awx-token-dtxw4                               kubernetes.io/service-account-token   3      44m
secret/default-token-srctw                           kubernetes.io/service-account-token   3      50m

DNS

Now your AWX deployment is available at the host name shown in the HOSTS column for ingress.networking.k8s.io/awx-ingress, in this example your.domain-name.com, which is served by the AWS ALB listed in the ADDRESS column. If ExternalDNS was installed then the Route 53 record should have been created and you should be able to use the URL in a browser. Otherwise, you will need to create the DNS record manually.
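For the manual case, one option is to create the record with the AWS CLI. The sketch below only prints a Route 53 change batch that points the AWX host name at the ALB address with a CNAME record. It is an assumption-laden example: route53_cname_batch is a hypothetical helper, the TTL of 300 is arbitrary, and a CNAME only works when the host name is not the zone apex (an alias record would be needed there).

```shell
# Print a Route 53 change batch that upserts a CNAME from the AWX host name
# to the ALB DNS name. (route53_cname_batch is a hypothetical helper name;
# the TTL value is an arbitrary choice for this sketch.)
route53_cname_batch() {
  record_name=$1
  target=$2
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$record_name",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [ { "Value": "$target" } ]
      }
    }
  ]
}
EOF
}

# Host name and ALB address taken from the example output in this document.
route53_cname_batch your.domain-name.com \
  k8s-awx-awxingre-b00bcd36ae-545180207.us-east-1.elb.amazonaws.com

# To apply it, save the output to a file such as awx-dns.json and run
# (not run here; <ZONE_ID> is a placeholder for your hosted zone ID):
#   aws route53 change-resource-record-sets --hosted-zone-id <ZONE_ID> --change-batch file://awx-dns.json
```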

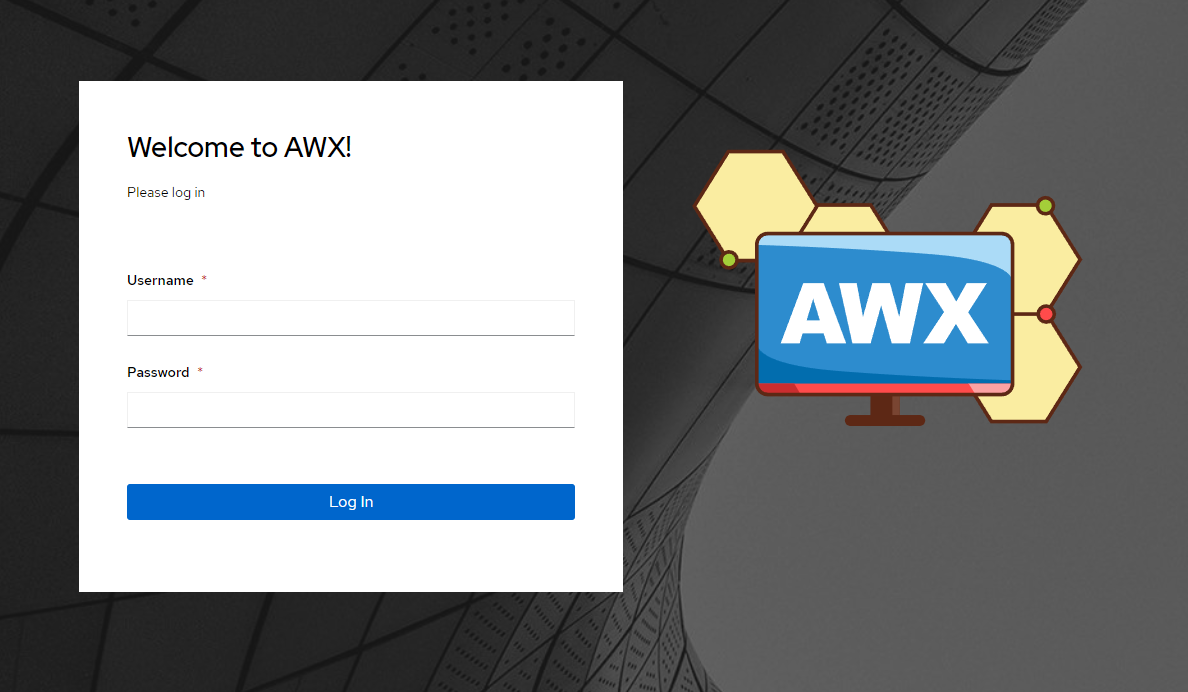

Here is what a successful launch of AWX looks like:

and what the "About" will show:
