Docker storage drivers

Megha Sharma

Docker storage drivers are components responsible for managing the way Docker containers and images are stored on disk. Different storage drivers offer varying performance characteristics, capabilities, and compatibility with different storage technologies.

To use storage drivers effectively, it’s important to know how Docker builds and stores images, and how these images are used by containers. You can use this information to make informed choices about the best way to persist data from your applications and avoid performance problems along the way.

👉 Storage drivers vs. Docker volumes

Storage drivers and Docker volumes are both integral components of Docker containers, but they serve different purposes and operate at different levels within the Docker architecture.

Storage Drivers:

Storage drivers in Docker are responsible for managing the interface between the container runtime and the underlying storage system. They handle tasks such as reading and writing data to containerized file systems, managing image layers, and optimizing storage operations.

Storage drivers are crucial for achieving performance, scalability, and reliability in Docker containers. They determine how data is stored, retrieved, and managed within containers, impacting aspects like speed, efficiency, and compatibility with various storage backends.

Docker Volumes:

Docker volumes provide a way to persist data generated by containers or share data between containers and the host system. Volumes are separate entities from container file systems and can exist independently, allowing for data persistence even if containers are stopped or removed.

Volumes enable data sharing and persistence across container lifecycles. They can be managed and manipulated independently of containers, making them suitable for scenarios like database storage, configuration files, log files, and other persistent data needs.

👉 Images and layers

A Docker image is built up from a series of layers. Each layer represents an instruction in the image’s Dockerfile. Each layer except the very last one is read-only. Consider the following Dockerfile:

# syntax=docker/dockerfile:1

FROM ubuntu:22.04

LABEL org.opencontainers.image.authors="org@example.com"

COPY . /app

RUN make /app

RUN rm -r $HOME/.cache

CMD python /app/app.py

This Dockerfile contains six instructions, but only those that modify the filesystem create a layer. The FROM statement starts by creating a layer from the ubuntu:22.04 image. The LABEL instruction only modifies the image's metadata and doesn't produce a new layer. The COPY instruction adds some files from your Docker client's current directory. The first RUN instruction builds your application using the make command and writes the result to a new layer. The second RUN instruction removes a cache directory and writes the result to a new layer. Finally, the CMD instruction specifies what command to run within the container; it only modifies the image's metadata and doesn't produce an image layer.

Each layer is only a set of differences from the layer before it. Note that both adding and removing files result in a new layer. In the example above, the $HOME/.cache directory is removed, but it is still present in the previous layer and still counts toward the image's total size.
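To make the "set of differences" idea concrete, here is a minimal Python sketch that models each layer as the files it adds and the paths it removes. This is a toy model, not how any real storage driver is implemented; the `Layer` class, the file paths, and the byte sizes are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One image layer: a set of differences from the layer below it."""
    instruction: str
    added: dict = field(default_factory=dict)    # path -> size in bytes (made-up sizes)
    removed: set = field(default_factory=set)    # paths hidden by this layer


def visible_files(layers):
    """Merge layers bottom-up into the filesystem a container would see."""
    view = {}
    for layer in layers:
        for path in layer.removed:
            view.pop(path, None)
        view.update(layer.added)
    return view


def image_size(layers):
    """Bytes stored on disk: files added by every layer still count."""
    return sum(sum(layer.added.values()) for layer in layers)


# Hypothetical contents for the layer-creating instructions of the Dockerfile above.
layers = [
    Layer("FROM ubuntu:22.04", added={"/bin/sh": 100}),
    Layer("COPY . /app", added={"/app/app.py": 10}),
    Layer("RUN make /app", added={"/app/build.out": 50, "/root/.cache/obj": 40}),
    Layer("RUN rm -r $HOME/.cache", removed={"/root/.cache/obj"}),
]

print(sorted(visible_files(layers)))        # the cache file is no longer visible
print(sum(visible_files(layers).values()))  # 160 bytes visible in the final filesystem
print(image_size(layers))                   # 200 bytes stored, cache file included
```

In this model the cache file disappears from the merged view, yet the stored size does not shrink, because the layer that originally added it is unchanged.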

The layers are stacked on top of each other. When you create a new container, you add a new writable layer on top of the underlying layers. This layer is often called the “container layer”. All changes made to the running container, such as writing new files, modifying existing files, and deleting files, are written to this thin writable container layer. The diagram below shows a container based on an ubuntu:15.04 image.

A storage driver handles the details about the way these layers interact with each other. Different storage drivers are available, which have advantages and disadvantages in different situations.

👉 Container and layers

The major difference between a container and an image is the top writable layer. All writes to the container that add new or modify existing data are stored in this writable layer. When the container is deleted, the writable layer is also deleted. The underlying image remains unchanged.

Because each container has its own writable container layer, and all changes are stored in this container layer, multiple containers can share access to the same underlying image and yet have their own data state. The diagram below shows multiple containers sharing the same Ubuntu 15.04 image.

Docker uses storage drivers to manage the contents of the image layers and the writable container layer. Each storage driver handles the implementation differently, but all drivers use stackable image layers and the copy-on-write (CoW) strategy.
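The sharing behaviour described above can be sketched in a few lines of Python. This is a simplified illustration of the copy-on-write idea, assuming whole-file writes and ignoring deletions; the `Container` class and the file contents are hypothetical and do not mirror any particular storage driver.

```python
class Container:
    """A container: shared read-only image layers plus a private writable layer."""

    def __init__(self, image_layers):
        self.image_layers = image_layers  # shared between containers, never modified
        self.writable = {}                # the thin "container layer"

    def read(self, path):
        # Look in the writable layer first, then search image layers top-down.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        # Copy-on-write: the change lands only in this container's writable layer.
        self.writable[path] = data


# Two containers started from the same (hypothetical) two-layer image.
image = [{"/etc/motd": "hello"}, {"/app/app.py": "print('v1')"}]
a = Container(image)
b = Container(image)

a.write("/etc/motd", "changed in a")

print(a.read("/etc/motd"))   # changed in a
print(b.read("/etc/motd"))   # hello
print(image[0]["/etc/motd"])  # hello (the image itself is untouched)
```

Dropping a container in this model means discarding its `writable` dictionary, which leaves the image layers exactly as they were.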

👉 Container size on disk

To view the approximate size of a running container, you can use the docker ps -s command. Two different columns relate to size.

size: the amount of data (on disk) that's used for the writable layer of each container.

virtual size: the amount of data used for the read-only image data used by the container plus the container's writable layer size. Multiple containers may share some or all read-only image data. Two containers started from the same image share 100% of the read-only data, while two containers with different images which have layers in common share those common layers. Therefore, you can't just total the virtual sizes. This over-estimates the total disk usage by a potentially non-trivial amount.

The total disk space used by all of the running containers is some combination of each container's size and virtual size values. If multiple containers were started from the exact same image, the total size on disk for these containers would be the sum of the containers' size values plus one image size (virtual size - size).
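As a worked example of that arithmetic, the following Python sketch computes the total for several containers started from the same image. The byte counts are invented for illustration, and the function assumes every container shares one image, as in the case described above.

```python
def total_disk_usage(containers):
    """Estimate disk usage for containers that all share the same image.

    containers: list of (size, virtual_size) pairs in bytes.
    """
    if not containers:
        return 0
    writable_total = sum(size for size, _ in containers)
    size, virtual_size = containers[0]
    image_size = virtual_size - size  # read-only image data, stored only once
    return writable_total + image_size


# Three hypothetical containers from one 70 MB image.
containers = [
    (2_000_000, 72_000_000),
    (5_000_000, 75_000_000),
    (0, 70_000_000),
]

print(total_disk_usage(containers))             # 77000000
print(sum(virtual for _, virtual in containers))  # 217000000 (naive total of virtual sizes)
```

The gap between the two numbers is the shared image data being counted once per container instead of once overall.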

This also doesn’t count the following additional ways a container can take up disk space:

Disk space used for log files stored by the logging-driver. This can be non-trivial if your container generates a large amount of logging data and log rotation isn’t configured.

Volumes and bind mounts used by the container.

Disk space used for the container’s configuration files, which are typically small.

Memory written to disk (if swapping is enabled).

Checkpoints, if you’re using the experimental checkpoint/restore feature.

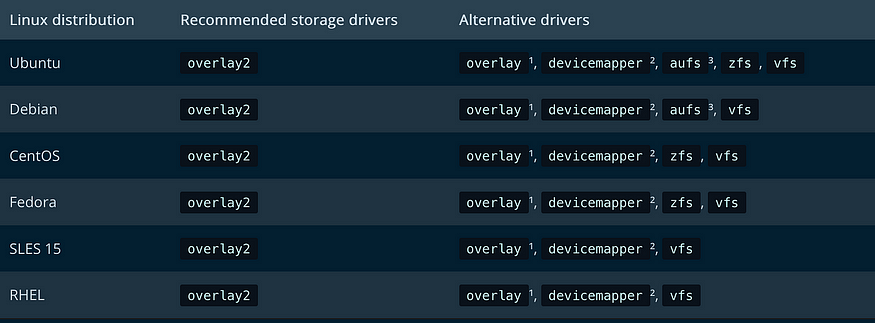

The Docker Engine provides the following storage drivers on Linux:

overlay2: The overlay2 storage driver is the preferred storage driver for all currently supported Linux distributions, and requires no extra configuration.

fuse-overlayfs: The fuse-overlayfs storage driver is preferred only for running Rootless Docker on a host that does not provide support for rootless overlay2. On Ubuntu and Debian 10, the fuse-overlayfs driver does not need to be used, and overlay2 works even in rootless mode.

btrfs and zfs: The btrfs and zfs storage drivers allow for advanced options, such as creating "snapshots", but require more maintenance and setup. Each of these relies on the backing filesystem being configured correctly.

vfs: The vfs storage driver is intended for testing purposes, and for situations where no copy-on-write filesystem can be used. Its performance is poor, and it is not generally recommended for production use.

If no storage driver is explicitly configured, the Docker Engine works through a prioritized list of storage drivers and automatically selects a compatible one, that is, a driver whose prerequisites the host meets.
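The selection logic can be pictured with a short Python sketch. The ordering in `PRIORITY` and the `host_supports` table are assumptions made up for this example; the real list and the real prerequisite checks live inside the Docker Engine and may differ.

```python
def select_storage_driver(priority, is_usable):
    """Return the first driver in priority order whose prerequisites are met."""
    for name in priority:
        if is_usable(name):
            return name
    raise RuntimeError("no usable storage driver found")


# Hypothetical priority order, for illustration only.
PRIORITY = ["overlay2", "fuse-overlayfs", "btrfs", "zfs", "vfs"]

# Hypothetical host where overlay2 is unavailable.
host_supports = {"overlay2": False, "fuse-overlayfs": True, "vfs": True}

chosen = select_storage_driver(PRIORITY, lambda name: host_supports.get(name, False))
print(chosen)  # fuse-overlayfs
```

An explicitly configured driver would bypass this fallback entirely, which is why the text above only applies when nothing is configured.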

👉 Check your current storage driver

To see what storage driver Docker is currently using, use docker info and look for the Storage Driver line:

$ docker info

Containers: 0

Images: 0

Storage Driver: overlay2

Backing Filesystem: xfs

<...>
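If you want to pick the driver out of that output in a script, a small parser is enough. The sketch below is one possible approach in Python; `storage_driver_from_info` is a hypothetical helper name, and the sample text simply mirrors the lines shown above rather than real output from your host.

```python
def storage_driver_from_info(info_text):
    """Return the value of the 'Storage Driver' line, or None if it is absent."""
    for line in info_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key == "Storage Driver":
            return value.strip()
    return None


# Sample text copied from the example output above.
sample = """\
Containers: 0
Images: 0
Storage Driver: overlay2
Backing Filesystem: xfs
"""

print(storage_driver_from_info(sample))  # overlay2
```

In practice you would feed the function the captured output of docker info, for example via Python's subprocess module.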

To change the storage driver, see the specific instructions for the new storage driver. Some drivers require additional configuration, including configuration to physical or logical disks on the Docker host.
