An Exploration of CPU Performance: Multitasking, Multiprocessing, and Multithreading

Nisha Singhal

You might have heard someone say, "I have x cores and y threads in my CPU," and that the higher those numbers are, the more performant the CPU is. But why do they matter so much for CPU performance? Let's dig into it in the context of multithreading, multiprocessing, and multitasking!

Before we proceed, it's essential to clarify some key concepts used in this article.

Every time you launch an application on your computer (let's take Chrome as an example), a new running instance of the application is launched. This running instance is referred to as a process. If you open another window in Chrome, a separate process is typically created. Similarly, if you launch Notepad, it spawns yet another process. In essence, each process is a running instance of a program, and a single application such as Chrome may be made up of several processes.

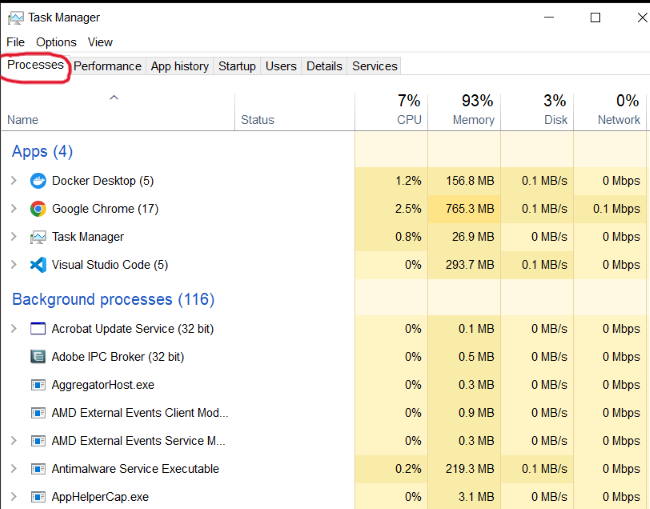

Hot tip: You can view all the currently running processes on your computer using Task Manager on Windows, System Monitor on Linux, or Activity Monitor on Mac. Here's a quick peek at mine:

When you fire up Chrome, the process does its work through multiple smaller units, each executing its own sequence of instructions. Each such unit of execution is called a thread. For instance, within Chrome, there's an I/O thread handling your search queries and results, a networking thread making requests to servers for data retrieval, and so forth. While a process can have multiple threads, it always has at least one.
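To make the relationship between a process and its threads concrete, here is a minimal Python sketch. Python is my choice for illustration, and the helper names `describe_worker` and `spawn_workers` are made up for this example. It starts a few threads and records the process ID each one sees, which should be the same for all of them because they live inside one process.

```python
import os
import threading


def describe_worker(results, index):
    # Every thread reports the ID of the process it belongs to and its own name.
    results[index] = (os.getpid(), threading.current_thread().name)


def spawn_workers(count=3):
    results = [None] * count
    threads = [
        threading.Thread(target=describe_worker, args=(results, i), name=f"worker-{i}")
        for i in range(count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


main_pid = os.getpid()
workers = spawn_workers()
for pid, name in workers:
    print(f"{name} runs inside process {pid}")
print(f"The main thread is called {threading.main_thread().name}")
```

Even before any worker is started, the process already has its main thread, which matches the "at least one thread" rule above.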

Multitasking

Multitasking involves a single processor handling multiple processes or tasks. These tasks are executed one at a time in small time slices, giving the user the illusion that they are all running at once.

The crucial aspect of multitasking is that the processor executes only one process at any given instant. For instance, if a single core is tasked with executing two processes, A and B, it juggles between them, executing a portion of A, then switching to B, and subsequently returning to A, and so forth. This technique enables a single-core processor to make progress on multiple processes concurrently. Since the CPU time is distributed among different processes, this approach is often referred to as time-sharing.
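The juggling between A and B can be sketched as a toy round-robin scheduler. This is only a simulation under simple assumptions: each "process" is a Python generator, one `next()` call stands in for one time slice, and the names `task` and `round_robin` are hypothetical helpers, not a real operating system API.

```python
from collections import deque


def task(name, steps):
    # Each yield marks the end of one time slice of work.
    for i in range(1, steps + 1):
        yield f"{name}{i}"


def round_robin(tasks):
    ready = deque(tasks)
    timeline = []
    while ready:
        current = ready.popleft()
        try:
            timeline.append(next(current))  # run one time slice
            ready.append(current)           # back of the queue
        except StopIteration:
            pass                            # task finished, drop it
    return timeline


timeline = round_robin([task("A", 3), task("B", 2)])
print(timeline)  # ['A1', 'B1', 'A2', 'B2', 'A3']
```

Only one task runs at any moment, yet both make progress, which is the essence of time-sharing.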

When the processor saves the state of the currently executing process and loads the state of a different process, it is called context switching. While the switching time between processes is typically very short (on the order of microseconds), it's important to note that the CPU is not executing multiple processes simultaneously. It rapidly switches between processes, giving the appearance that they run at the same time. Context switching can be triggered by preemptive actions initiated by the operating system scheduler, as well as by blocking operations within a process.

Preemption occurs when the operating system scheduler decides to pause the execution of the current process and allocate CPU time to another process, either because that process has higher priority or for fairness reasons.

Blocking occurs when a process voluntarily relinquishes the CPU, typically because it is waiting for some event (such as user input or I/O operations) to occur. This blocking behavior allows other processes to execute while the current process is inactive.

It's important to note that blocking operations may also be non-voluntary, such as when a process is waiting for a resource to become available.
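Here is a small, hedged illustration of why blocking helps utilization. It uses Python threads and `time.sleep` as a stand-in for waiting on I/O; the function names and the 0.3 second wait are arbitrary choices for this sketch. Because each waiting thread gives up the CPU, three waits overlap instead of adding up.

```python
import threading
import time


def wait_for_io(seconds, done, index):
    # time.sleep blocks this thread, so the CPU is free for other work.
    time.sleep(seconds)
    done[index] = True


def run_blocking_tasks(count=3, seconds=0.3):
    done = [False] * count
    threads = [
        threading.Thread(target=wait_for_io, args=(seconds, done, i))
        for i in range(count)
    ]
    start = time.perf_counter()
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return done, time.perf_counter() - start


done, elapsed = run_blocking_tasks()
print(f"all finished: {all(done)}, elapsed: {elapsed:.2f}s")
```

Run one after another, the three waits would take about 0.9 seconds; with blocking and switching, the total should stay close to a single wait.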

Though context switching is key to improving the resource utilization of a system, it comes with overhead. This overhead arises because the operating system must save and restore the state of each process during a context switch. Doing so involves storing and reloading various components of the CPU's state, such as register values, the program counter, and the stack pointer. Additionally, transitioning between different execution contexts consumes CPU cycles and incurs additional memory accesses, contributing to the performance cost.
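The save-and-restore step can be modeled with a toy CPU. This is a deliberately simplified sketch: `ToyCPU`, `Context`, and `context_switch` are invented for this example, and a single `accumulator` field stands in for the whole register set.

```python
from dataclasses import dataclass


@dataclass
class Context:
    program_counter: int = 0
    accumulator: int = 0  # stands in for the general-purpose registers


class ToyCPU:
    def __init__(self):
        self.program_counter = 0
        self.accumulator = 0
        self.switches = 0

    def save(self):
        return Context(self.program_counter, self.accumulator)

    def restore(self, context):
        self.program_counter = context.program_counter
        self.accumulator = context.accumulator

    def run(self, steps, increment):
        for _ in range(steps):
            self.accumulator += increment
            self.program_counter += 1


def context_switch(cpu, saved, outgoing, incoming):
    saved[outgoing] = cpu.save()   # store the state of the paused process
    cpu.restore(saved[incoming])   # reload the state of the next process
    cpu.switches += 1


cpu = ToyCPU()
saved = {"A": Context(), "B": Context()}
cpu.restore(saved["A"])
cpu.run(3, increment=1)             # process A runs three steps
context_switch(cpu, saved, "A", "B")
cpu.run(2, increment=10)            # process B runs two steps
context_switch(cpu, saved, "B", "A")
cpu.run(1, increment=1)             # A resumes exactly where it stopped
saved["A"] = cpu.save()
print(saved, cpu.switches)
```

Every call to `context_switch` is work that does not advance either process, which is where the overhead comes from.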

Multiprocessing

Multiprocessing refers to a scenario where a CPU incorporates multiple cores, with each core capable of independently executing its own set of instructions. In this setup, each core handles a separate process concurrently, enabling parallel processing. This means that multiple processes can run simultaneously, each utilizing a distinct core for execution.

For example, consider a quad-core CPU, which can execute up to four processes at the same instant, one process per core. This parallel execution can significantly improve system performance by distributing the workload across multiple cores.

It's important to note that multiprocessing relies on the operating system's ability to efficiently allocate processes to available CPU cores, ensuring optimal resource utilization.
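As a rough sketch of spreading work across cores, the example below splits a prime-counting job into four chunks and hands them to four worker processes using Python's standard `concurrent.futures.ProcessPoolExecutor`. The workload and the helper names (`count_primes`, `split_range`, `parallel_count_primes`) are assumptions made for illustration, and the default of four workers simply mirrors the quad-core example. Which core each process lands on is decided by the operating system, not by this code.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds):
    # Count primes in the half-open range [start, stop) by trial division.
    start, stop = bounds
    total = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            total += 1
    return total


def split_range(limit, parts):
    # Cut [0, limit) into at most `parts` contiguous chunks (limit must be > 0).
    step = -(-limit // parts)  # ceiling division
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def parallel_count_primes(limit, workers=4):
    chunks = split_range(limit, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))


if __name__ == "__main__":
    # The guard keeps worker processes from re-running this line on import.
    print(parallel_count_primes(50_000))
```

On a machine with fewer than four cores the code still works, but some worker processes will have to share a core through time-slicing.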

Below is an image depicting a 12-core processor, showcasing the hardware architecture that enables multiprocessing:

Multithreading

Multithreading, in the hardware sense used here (simultaneous multithreading, which Intel markets as Hyper-Threading), is a powerful concept in computer architecture that allows a single CPU core to execute multiple threads concurrently. Unlike multiprocessing, where multiple cores handle separate processes simultaneously, multithreading lets a single core keep more than one thread in flight by sharing its execution resources among them.

For instance, my computer's processor has 6 cores and 12 threads. This means that each core can handle up to 2 threads concurrently. If it had 6 cores and only 6 threads, each core could handle only one thread at a time, so there would be no hardware multithreading. The operating system could still run many software threads on it by time-slicing them, as described in the multitasking section.

Each core in a CPU is a physical hardware component with its own Control Unit, Arithmetic Logic Unit, and registers. Threads, on the other hand, are not separate physical components: the thread count in a CPU specification indicates the total number of threads that the CPU can execute concurrently (often called logical processors).
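The arithmetic behind a "cores and threads" specification is simple enough to sketch. The helper `hardware_threads_per_core` below is hypothetical and assumes every core exposes the same number of hardware threads, which does not hold for every CPU design. Python's `os.cpu_count()` reports the number of logical processors the system sees; the standard library does not report physical cores, so those are passed in by hand.

```python
import os


def hardware_threads_per_core(physical_cores, logical_processors):
    # Assumes all cores are identical and expose the same number of threads.
    if physical_cores <= 0 or logical_processors % physical_cores != 0:
        raise ValueError("expected a whole number of threads per core")
    return logical_processors // physical_cores


print(hardware_threads_per_core(6, 12))  # 2
print(hardware_threads_per_core(6, 6))   # 1

logical = os.cpu_count()  # may be None if it cannot be determined
print(f"Logical processors on this machine: {logical}")
```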

While multithreading offers improved resource utilization, it introduces complexities in software development. Managing edge cases and potential issues such as deadlocks and data races becomes challenging.

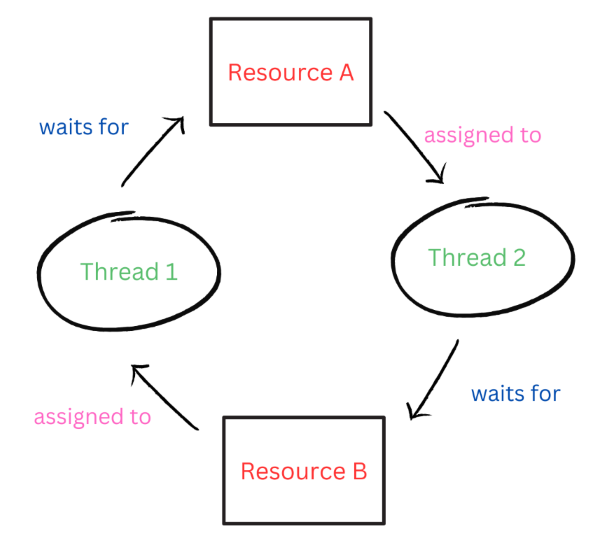

Deadlock occurs when two or more threads each wait for a resource (such as a lock) that another one holds, so none of them can proceed, resulting in unresponsiveness or freezing of the process. The image below illustrates the scenario where threads 1 and 2 each depend on the other, creating the deadlock situation.

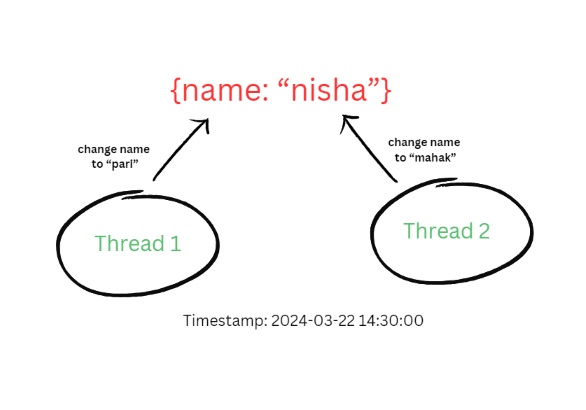
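The circular wait can be reproduced safely in a few lines of Python. This is a sketch, not a recipe: the function name `simulate_deadlock` is made up, the two barriers exist only to force the unlucky timing, and a timeout on the second `acquire` is used so the demo reports the stuck state instead of hanging forever.

```python
import threading


def simulate_deadlock(timeout=0.2):
    lock_a = threading.Lock()
    lock_b = threading.Lock()
    both_hold_first = threading.Barrier(2)
    both_gave_up = threading.Barrier(2)
    got_second = {}

    def worker(name, first, second):
        with first:
            both_hold_first.wait()  # make sure each thread holds one lock
            acquired = second.acquire(timeout=timeout)
            got_second[name] = acquired
            if acquired:
                second.release()
            both_gave_up.wait()     # keep holding `first` until both have tried

    threads = [
        threading.Thread(target=worker, args=("thread-1", lock_a, lock_b)),
        threading.Thread(target=worker, args=("thread-2", lock_b, lock_a)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return got_second


result = simulate_deadlock()
print(result)  # {'thread-1': False, 'thread-2': False} (key order may vary)
```

Without the timeout, both threads would wait forever. A common way to avoid this situation is to have every thread acquire the locks in the same order.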

Data races occur when two or more threads access the same data at the same time without synchronization and at least one of them modifies it. This can lead to inconsistencies and non-deterministic behavior in the program's execution. The illustration below depicts a scenario where both threads 1 and 2 are concurrently attempting to modify the same data, resulting in a data race.
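Real data races are timing-dependent and hard to reproduce, so the sketch below forces the bad interleaving with a barrier to show a lost update, then shows one common fix using a lock. The function names are hypothetical and the barrier is purely a teaching device; it would not appear in real code.

```python
import threading


def lost_update_demo():
    shared = {"value": 0}
    both_have_read = threading.Barrier(2)

    def unsafe_increment():
        current = shared["value"]      # read
        both_have_read.wait()          # force both reads before any write
        shared["value"] = current + 1  # write based on a stale read

    threads = [threading.Thread(target=unsafe_increment) for _ in range(2)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return shared["value"]


def locked_counter_demo(thread_count=4, increments=1000):
    shared = {"value": 0}
    lock = threading.Lock()

    def safe_increment():
        for _ in range(increments):
            with lock:  # only one thread at a time may read and write
                shared["value"] += 1

    threads = [threading.Thread(target=safe_increment) for _ in range(thread_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return shared["value"]


print(lost_update_demo())     # 1, although two increments ran
print(locked_counter_demo())  # 4000
```

In the first function one increment is silently lost because both threads wrote a value computed from the same old read. The lock in the second function makes each read-modify-write step indivisible.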

And here we reach the end of the article. We've delved into the intricacies of CPU architecture, exploring concepts such as multitasking, multiprocessing, and multithreading. Stay tuned for more insightful blogs! Feel free to share any feedback or suggestions you may have :)
