11 Best Ways to Optimize Kubernetes Resources and Reduce Costs

Luc Cao

Reference: https://overcast.blog/11-best-ways-to-optimize-kubernetes-resources-and-reduce-costs-3c342fa3b71b

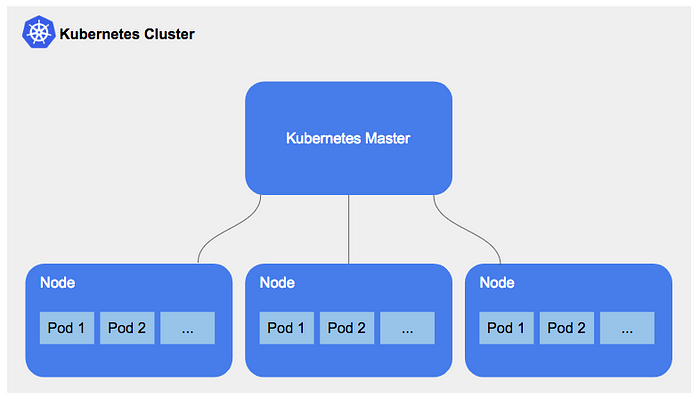

Kubernetes has become the de facto standard for container orchestration, offering a powerful platform for deploying, scaling, and managing containerized applications. However, as organizations scale their Kubernetes deployments, managing costs and optimizing resource allocation become critical challenges. For cloud engineering executives and engineering leaders, running Kubernetes efficiently without overspending requires strategic planning and consistent use of best practices. This blog post outlines eleven strategies to optimize Kubernetes costs and resources so that your deployments run efficiently and economically.

1. Implementing Cluster Autoscaling

Cluster autoscaling dynamically adjusts the number of nodes in your cluster to meet the needs of your workloads. By scaling your cluster in and out based on demand, you can ensure that you’re only paying for the resources you actually need.

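The Cluster Autoscaler project is maintained in the kubernetes/autoscaler repository on GitHub.

Cluster Autoscaler adds nodes when pods cannot be scheduled and removes nodes that stay underutilized, so it is typically paired with a Horizontal Pod Autoscaler (HPA): when the HPA adds replicas that no longer fit on the existing nodes, Cluster Autoscaler provisions more capacity.

Code Example: Horizontal Pod Autoscaler Paired with Cluster Autoscaling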

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: example-application-autoscaler
      namespace: default
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: example-application
      minReplicas: 3
      maxReplicas: 10
      targetCPUUtilizationPercentage: 50

This YAML configuration file creates a Horizontal Pod Autoscaler for an application, ensuring that it scales out when the CPU utilization goes above 50% and scales in when the usage drops, between a minimum of 3 and a maximum of 10 replicas.
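The manifest above scales pods; Cluster Autoscaler itself runs as a Deployment inside the cluster and is configured through command-line flags. The following is a minimal sketch of that Deployment for EKS, not a complete installation. It assumes that a service account named cluster-autoscaler with the required IAM permissions already exists, that the Auto Scaling groups behind your node groups carry the auto-discovery tags shown, and that my-cluster stands in for your cluster name. The image tag is illustrative; use the release that matches your cluster's Kubernetes minor version.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
    spec:
      # Assumed to exist already, with IAM permissions to modify Auto Scaling groups
      serviceAccountName: cluster-autoscaler
      containers:
        - name: cluster-autoscaler
          # Illustrative tag; match it to your cluster's Kubernetes minor version
          image: registry.k8s.io/autoscaling/cluster-autoscaler:v1.29.0
          command:
            - ./cluster-autoscaler
            - --cloud-provider=aws
            # Discover node groups by ASG tag; "my-cluster" is a placeholder
            - --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/my-cluster
            # Prefer the node group that leaves the least unused capacity after scale-up
            - --expander=least-waste
            - --balance-similar-node-groups
          resources:
            requests:
              cpu: 100m
              memory: 600Mi
            limits:
              cpu: 100m
              memory: 600Mi
```

Cluster Autoscaler bases its decisions on pod resource requests rather than live usage, so accurate requests (covered in the next section) directly affect how many nodes it provisions.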

2. Optimizing Container Resource Requests and Limits

Kubernetes allows you to specify CPU and memory (RAM) requests and limits for each container. Properly configuring these parameters ensures that your applications have enough resources to run efficiently while preventing them from consuming excessive resources.

Code Example: Setting Resource Requests and Limits

    apiVersion: v1
    kind: Pod
    metadata:
      name: example-pod
    spec:
      containers:
        - name: example-container
          image: example/image
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "1000m"

This configuration requests 256Mi of memory and 0.5 CPU cores for the container; the scheduler uses these requests to pick a node with enough free capacity. The limits cap the container at 512Mi of memory and 1 CPU core: CPU use above the limit is throttled, while exceeding the memory limit gets the container terminated (OOM-killed).

3. Rightsizing Workloads

Rightsizing involves analyzing the resource utilization of your workloads and adjusting their allocations to better match their actual needs. Over-provisioned workloads waste money, while under-provisioned workloads may lead to performance issues.

Tools and Practices

Use monitoring tools like Prometheus and Grafana to gather data on resource usage.

Regularly review resource metrics and adjust requests and limits accordingly.
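One way to make this review repeatable is to record the ratio of actual usage to requested resources in Prometheus. The rule file below is a sketch under a few assumptions: Prometheus scrapes the kubelet's cAdvisor metrics and kube-state-metrics v2 (which exposes kube_pod_container_resource_requests), and the group and rule names are made up for illustration.

```yaml
groups:
  - name: rightsizing
    rules:
      # CPU actually used divided by CPU requested, per container.
      # Values well below 1 point to over-provisioning; values near or above 1
      # point to requests that are too low.
      - record: namespace_pod_container:cpu_usage_to_request:ratio
        expr: |
          sum by (namespace, pod, container) (
            rate(container_cpu_usage_seconds_total{container!=""}[5m])
          )
          /
          sum by (namespace, pod, container) (
            kube_pod_container_resource_requests{resource="cpu"}
          )
      # Memory working set divided by memory requested, per container.
      - record: namespace_pod_container:memory_working_set_to_request:ratio
        expr: |
          sum by (namespace, pod, container) (
            container_memory_working_set_bytes{container!=""}
          )
          /
          sum by (namespace, pod, container) (
            kube_pod_container_resource_requests{resource="memory"}
          )
```

Graphing these ratios in Grafana over a week or more, rather than a single day, helps avoid sizing requests around an unrepresentative quiet or busy period.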

4. Using Spot Instances

Spot instances are available at a significant discount compared to standard pricing. By using spot instances for stateless or flexible workloads, you can significantly reduce your cluster costs.

Implementation Tip

Ensure your workloads are fault-tolerant and can handle the possible termination of spot instances.

Use your cloud provider’s node pool features, such as node groups that mix several instance types, to run spot instances alongside on-demand capacity.
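As a sketch of how a stateless workload can be steered onto spot capacity, the Deployment below selects spot nodes and tolerates a taint placed on them. It assumes EKS managed node groups, which label spot nodes with eks.amazonaws.com/capacityType: SPOT. The spot=true:NoSchedule taint and the example-worker name are hypothetical and only apply if you configure your spot node group that way.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-worker   # hypothetical workload name
spec:
  # Several replicas so that losing one spot node does not take the service down
  replicas: 4
  selector:
    matchLabels:
      app: example-worker
  template:
    metadata:
      labels:
        app: example-worker
    spec:
      # Schedule only onto spot nodes (label applied by EKS managed node groups)
      nodeSelector:
        eks.amazonaws.com/capacityType: SPOT
      # Tolerate the (hypothetical) taint that keeps other workloads off spot nodes
      tolerations:
        - key: spot
          operator: Equal
          value: "true"
          effect: NoSchedule
      # Time allowed for a clean shutdown after the pod receives SIGTERM
      terminationGracePeriodSeconds: 60
      containers:
        - name: worker
          image: example/image
```

Tainting spot nodes is a design choice: it keeps workloads that have not opted in, such as stateful services, from landing on capacity that can be reclaimed at short notice.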

5. Implementing Namespace Quotas and Limits

By setting quotas and limits at the namespace level, you can control resource allocation and usage across different teams or projects, preventing any single team from consuming more than its fair share of resources.

Code Example: Setting a Namespace Resource Quota

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: team-a-quota
      namespace: team-a
    spec:
      hard:
        requests.cpu: "10"
        requests.memory: 20Gi
        limits.cpu: "20"
        limits.memory: 40Gi

This configuration caps the combined CPU and memory requests and limits of all pods in the team-a namespace, so no single team can claim more than its allotted share of the cluster.
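Once a quota covers CPU and memory requests and limits, pods that omit those values are rejected in that namespace. A LimitRange can supply defaults so that such pods are still admitted. The following is a minimal sketch; the object name and all of the numbers are illustrative assumptions rather than recommendations.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: team-a-defaults   # hypothetical name
  namespace: team-a
spec:
  limits:
    - type: Container
      # Applied when a container does not declare its own requests
      defaultRequest:
        cpu: 250m
        memory: 256Mi
      # Applied when a container does not declare its own limits
      default:
        cpu: 500m
        memory: 512Mi
      # Upper bound that any single container in the namespace may ask for
      max:
        cpu: "2"
        memory: 2Gi
```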

6. Leverage Horizontal Pod Autoscaling

Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods in a Deployment or ReplicaSet based on observed CPU utilization or other selected metrics. This gives your applications the capacity they need during peak times and releases it during off-peak times.

Code Example: Horizontal Pod Autoscaler

    # autoscaling/v2 is the stable API; autoscaling/v2beta2 was removed in Kubernetes 1.26
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: example-hpa
      namespace: default
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: example-app
      minReplicas: 1
      maxReplicas: 100
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 60

This HPA configuration scales the example-app deployment up or down to maintain an average CPU utilization of 60%, ensuring efficient resource use.
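CPU utilization is measured relative to each container's CPU request, so this HPA only works if the target pods set CPU requests. To keep the replica count from flapping, and with it the node count, the same HPA can also carry a behavior section. The variant below is a sketch with illustrative values: the 300-second window matches the default for scale-down, while the 50%-per-minute policy is an assumption chosen for the example.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: example-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-app
  minReplicas: 1
  maxReplicas: 100
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 60
  behavior:
    scaleDown:
      # Act on the highest recommendation from the last 5 minutes before shrinking
      stabilizationWindowSeconds: 300
      policies:
        # Remove at most half of the current replicas per minute
        - type: Percent
          value: 50
          periodSeconds: 60
```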

7. Optimize Storage Costs

Persistent storage can be a significant part of your Kubernetes costs. By optimizing your storage strategy, such as using appropriate storage classes and dynamically provisioning storage only as needed, you can reduce costs.

Tips for Storage Optimization

Use dynamic provisioning to automatically create storage only when it’s needed.

Regularly review and delete unneeded persistent volume claims (PVCs) to free up resources.
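These tips can be sketched with a StorageClass and a claim that uses it. The example assumes a cluster on AWS with the EBS CSI driver installed (provisioner ebs.csi.aws.com); the class name, claim name, and size are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard-gp3   # hypothetical class name
provisioner: ebs.csi.aws.com
parameters:
  type: gp3
# Delete the backing volume when its claim is deleted, so it stops incurring cost
reclaimPolicy: Delete
# Allow starting small and growing the claim later instead of over-allocating
allowVolumeExpansion: true
# Create the volume only once a pod that uses the claim is scheduled
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-data   # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard-gp3
  resources:
    requests:
      storage: 10Gi
```

With reclaimPolicy: Delete, removing an unneeded PVC also removes the underlying volume. With Retain, the volume would keep incurring cost until someone cleans it up manually.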

8. Adopt Multi-tenancy

Running multiple applications or services within the same Kubernetes cluster can maximize resource utilization and reduce overhead costs. Multi-tenancy requires careful management of resources and security, but it can significantly increase efficiency.

Best Practices for Multi-tenancy

Implement role-based access control (RBAC) to ensure secure access control.

Use namespaces to isolate resources and manage quotas effectively.
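A namespace-scoped Role and RoleBinding are one way to combine these two practices. The sketch below gives a group of developers access only to common workload resources inside the team-a namespace. The role name, group name, and chosen resource list are assumptions for illustration, and the group must match whatever your cluster's authentication layer reports.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: team-a-developer   # hypothetical role name
  namespace: team-a
rules:
  # "" is the core API group (pods, services, configmaps); "apps" covers deployments
  - apiGroups: ["", "apps"]
    resources: ["pods", "services", "configmaps", "deployments"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: team-a-developer-binding
  namespace: team-a
subjects:
  # Hypothetical group name supplied by the cluster's identity provider
  - kind: Group
    name: team-a-developers
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: team-a-developer
  apiGroup: rbac.authorization.k8s.io
```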

9. Implement Network Policies

Efficiently managing network traffic can reduce costs by ensuring resources are used optimally. Implementing network policies helps prevent unnecessary cross-talk between pods and can reduce the load on your network infrastructure.

Implementing Network Policies

Define network policies that control the flow of traffic between pods and namespaces, ensuring that only necessary communications occur, which can also enhance security.
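A common pattern is to deny all ingress traffic in a namespace by default and then allow only the flows an application needs. The two policies below sketch that pattern; the namespace, the frontend and backend labels, and port 8080 are hypothetical. Network policies are only enforced when the cluster's network plugin supports them.

```yaml
# Deny all ingress traffic to every pod in the namespace by default
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: team-a
spec:
  podSelector: {}
  policyTypes:
    - Ingress
---
# Then allow only frontend pods to reach backend pods on one port
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
  namespace: team-a
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```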

10. Utilize Pod Disruption Budgets

Pod Disruption Budgets (PDBs) help ensure that a minimum number of pods are available during voluntary disruptions, such as node maintenance. This ensures high availability without over-provisioning resources.

Code Example: Pod Disruption Budget

    apiVersion: policy/v1
    kind: PodDisruptionBudget
    metadata:
      name: example-pdb
    spec:
      minAvailable: 2
      selector:
        matchLabels:
          app: example-app

This PDB keeps at least two example-app pods running during voluntary disruptions such as node drains, so nodes can be removed or replaced without an outage. It does not protect against involuntary failures such as node crashes.

11. Monitor and Analyze Costs

Regular monitoring and analysis of your Kubernetes spending are crucial. Use tools and services that provide insights into where costs are incurred and identify opportunities for optimization.

Tools for Cost Monitoring

Use cloud provider cost management tools to track Kubernetes-related expenses.

Implement third-party tools specifically designed for Kubernetes cost monitoring and optimization.

Conclusion

Optimizing Kubernetes costs and resources is an ongoing process that requires regular monitoring, analysis, and adjustment. By implementing cluster and pod autoscaling, tuning container resource requests and limits, rightsizing workloads, using spot instances, setting namespace quotas, and keeping storage, multi-tenancy, network policies, and disruption budgets under review, engineering leaders can significantly reduce costs while ensuring their applications run efficiently. Adopting these strategies will help your organization make the most of its Kubernetes investments.
