🚀Day 75 - Sending Docker Logs to Grafana😃

Trushid Hatmode

Table of contents

- 🔶 Task-01: Install Docker and start the Docker service on a Linux EC2 instance through user data.

- 🔶 Task-02: Create 2 Docker containers and run any basic application on those containers (A simple todo app will work).

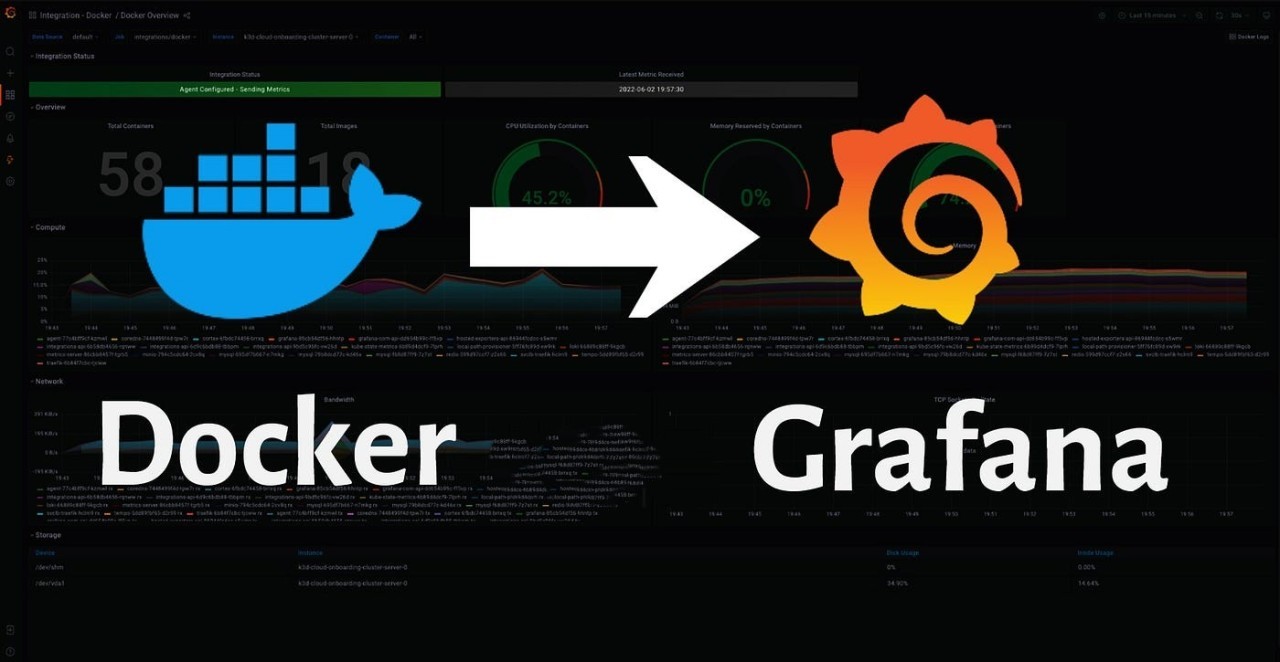

- 🔶 Task-03: Now integrate the docker containers and share the real-time logs with Grafana (Your Instance should be connected to Grafana and the Docker plugin should be enabled on Grafana).

- 🔶 Task-04: Check the logs or docker container names on Grafana UI.

🔶 Task-01: Install Docker and start the Docker service on a Linux EC2 instance through user data.

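```shell
#!/bin/bash
# EC2 runs user data as root, so no sudo is needed.

apt-get update
apt-get install -y apt-transport-https ca-certificates curl software-properties-common

# Add Docker's GPG key and apt repository
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine and the Compose v2 plugin (provides "docker compose")
apt-get update
apt-get install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin

# Start Docker now and on every boot
systemctl start docker
systemctl enable docker

# $USER is not the login user inside user data; add the default Ubuntu AMI user
usermod -aG docker ubuntu

reboot
```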
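User data only runs as a shell script when its first line is a `#!` shebang, and EC2 caps raw user data at 16 KB. The sketch below checks both before you paste the script into the launch wizard. It assumes you saved the script locally; the file name `userdata.sh` in the usage comment is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a user-data script before launching the instance.
# Checks: the file exists, starts with "#!" (required for cloud-init to
# run it as a script), and is within EC2's 16 KB raw user-data limit.

check_userdata() {
  local file="$1"
  local max_bytes=16384   # EC2 limit on raw (pre-base64) user data
  local size

  if [ ! -f "$file" ]; then
    echo "missing: $file"
    return 1
  fi

  # The first two bytes must be the shebang "#!"
  if [ "$(head -c 2 "$file")" != "#!" ]; then
    echo "no shebang: $file"
    return 1
  fi

  # tr strips the padding some wc implementations add
  size=$(wc -c < "$file" | tr -d ' ')
  if [ "$size" -gt "$max_bytes" ]; then
    echo "too large: ${size} bytes"
    return 1
  fi

  echo "ok"
}

# Usage (hypothetical file name):
#   check_userdata userdata.sh
```

Once the instance is running, `cloud-init status --wait` and `/var/log/cloud-init-output.log` show whether the script finished and what it printed.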

🔶 Task-02: Create 2 Docker containers and run any basic application on those containers (A simple todo app will work).

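Connect to the EC2 instance and create a docker-compose.yml file. It runs Grafana, Prometheus, and cAdvisor for monitoring, with code-server and Jenkins as the two application containers.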

```yaml
version: "3"

volumes:
  prometheus-data:
    driver: local
  grafana-data:
    driver: local

services:
  # Grafana service
  grafana:
    image: grafana/grafana-oss:latest
    container_name: grafana
    ports:
      - "3000:3000"
    volumes:
      - grafana-data:/var/lib/grafana
    restart: unless-stopped

  # Prometheus service
  prometheus:
    image: prom/prometheus:latest
    container_name: prometheus
    ports:
      - "9090:9090"
    volumes:
      - /etc/prometheus:/etc/prometheus
      - prometheus-data:/prometheus
    command: "--config.file=/etc/prometheus/prometheus.yml"
    restart: unless-stopped

  # Code-Server service
  code-server:
    image: lscr.io/linuxserver/code-server:latest
    container_name: code-server
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
    volumes:
      - /etc/opt/docker/code-server/config:/config
    ports:
      - 8443:8443
    restart: unless-stopped

  # Jenkins service
  jenkins:
    image: jenkins/jenkins:lts
    container_name: jenkins
    privileged: true
    user: root
    volumes:
      - /etc/opt/docker/jenkins/config:/var/jenkins_home
      - /var/run/docker.sock:/var/run/docker.sock
      # the apt-installed Docker CLI lives in /usr/bin, not /usr/local/bin
      - /usr/bin/docker:/usr/bin/docker
    ports:
      - 8081:8080
      - 50000:50000
    restart: unless-stopped

  # cAdvisor service
  cadvisor:
    image: gcr.io/cadvisor/cadvisor:v0.47.0
    container_name: cadvisor
    # host networking: cAdvisor listens on the host's port 8080 directly,
    # so a ports: mapping is not needed (Docker discards it in this mode)
    network_mode: host
    volumes:
      - /:/rootfs:ro
      - /var/run:/var/run:ro
      - /sys:/sys:ro
      - /var/lib/docker/:/var/lib/docker:ro
      - /dev/disk/:/dev/disk:ro
    devices:
      - /dev/kmsg
    privileged: true
    restart: unless-stopped
```

Now start the containers:

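```shell
# Compose v2 plugin (docker-compose-plugin); with the standalone v1 binary, run: docker-compose up -d
docker compose up -d
```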
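To confirm that every service actually came up, the sketch below compares the running container names against the `container_name` values in docker-compose.yml. It reads names on stdin so it can be fed from `docker ps`; the expected list is an assumption that mirrors this compose file and must be edited if you rename services.

```shell
#!/usr/bin/env bash
# Sketch: print each expected container that is NOT in the list of
# running container names read from stdin (one name per line).

# Assumed to match the container_name values in docker-compose.yml
EXPECTED_CONTAINERS="grafana prometheus code-server jenkins cadvisor"

missing_containers() {
  local running name
  running=$(cat)   # all running names from stdin
  for name in $EXPECTED_CONTAINERS; do
    # -x: whole-line match, -F: fixed string, -q: no output
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      echo "$name"
    fi
  done
}

# Usage on the instance:
#   docker ps --format '{{.Names}}' | missing_containers
```

Empty output means all five containers are running; any printed name is worth checking with `docker logs <name>`.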

Configure Prometheus to scrape metrics.

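Create /etc/prometheus/prometheus.yml on the host (the compose file mounts /etc/prometheus into the Prometheus container) and set the targets to your instance's IP address: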


```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# Scrape configurations: Prometheus itself and cAdvisor.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090'] # Prometheus scraping itself inside its container
  - job_name: "cadvisor"
    static_configs:
      - targets: ['3.89.110.158:8080'] # use the IP of your instance (the private IP also works)
```
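Instead of editing the IP by hand, the file can be generated. This is a minimal sketch, assuming the output goes to /etc/prometheus/prometheus.yml (the host path the compose file bind-mounts) and that cAdvisor is reachable on port 8080 at the IP you pass. It keeps only the `global` and `scrape_configs` sections and points the self-scrape job at `localhost:9090`, which resolves inside the Prometheus container.

```shell
#!/usr/bin/env bash
# Sketch: render a minimal prometheus.yml for the given instance IPv4 address.

render_prometheus_config() {
  local ip="$1"
  # Reject anything that is not a dotted IPv4 address
  if ! printf '%s' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    echo "invalid IPv4 address: $ip" >&2
    return 1
  fi
  cat <<EOF
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  - job_name: "prometheus"
    static_configs:
      - targets: ['localhost:9090']   # Prometheus scraping itself
  - job_name: "cadvisor"
    static_configs:
      - targets: ['${ip}:8080']       # cAdvisor on the host network
EOF
}

# Usage (write the file, then validate it with promtool, which ships in the image):
#   render_prometheus_config 3.89.110.158 | sudo tee /etc/prometheus/prometheus.yml
#   docker exec prometheus promtool check config /etc/prometheus/prometheus.yml
```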

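Restart Prometheus (`docker restart prometheus`) and open the Prometheus UI at `http://<instance-public-IP>:9090`; the instance's security group must allow inbound traffic on port 9090. Under Status > Targets, both the prometheus and cadvisor targets should show as UP.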
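The same check can be done from a terminal through Prometheus' `/api/v1/targets` endpoint. This sketch reads that JSON on stdin and prints `up/total` for the active targets. It assumes the compact JSON Prometheus returns, so a `grep` on the `health` field is enough and `jq` is not required.

```shell
#!/usr/bin/env bash
# Sketch: summarize scrape-target health from /api/v1/targets JSON on stdin.

target_health_summary() {
  local json up total
  json=$(cat)
  # grep -o prints one match per line, so wc -l counts matches;
  # "|| true" keeps a zero-match grep from failing under pipefail
  up=$(printf '%s' "$json" | { grep -o '"health":"up"' || true; } | wc -l | tr -d ' ')
  total=$(printf '%s' "$json" | { grep -o '"health":"[a-z]*"' || true; } | wc -l | tr -d ' ')
  echo "${up}/${total}"
}

# Usage on the instance:
#   curl -s http://localhost:9090/api/v1/targets | target_health_summary
```

With the two jobs configured, `2/2` means both targets are UP.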

🔶 Task-03: Now integrate the docker containers and share the real-time logs with Grafana (Your Instance should be connected to Grafana and the Docker plugin should be enabled on Grafana).

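Open Grafana at `http://<instance-public-IP>:3000` (allow port 3000 in the security group) and log in; the default credentials are admin / admin. Before importing a dashboard, add Prometheus as a data source: under Connections > Data sources (Configuration > Data sources in older Grafana versions), choose Prometheus, set the URL to `http://prometheus:9090` (this name resolves because both containers share the default Compose network), and click Save & test.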
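The data source can also be created through Grafana's HTTP API (`POST /api/datasources`) rather than the UI. This is a sketch under two assumptions: Grafana reaches Prometheus at `http://prometheus:9090` over the Compose network, and the admin user and password are placeholders you replace with your own.

```shell
#!/usr/bin/env bash
# Sketch: create a Prometheus data source via Grafana's HTTP API.

# Build the JSON body; the URL defaults to the Compose service name
datasource_payload() {
  local url="${1:-http://prometheus:9090}"
  printf '{"name":"Prometheus","type":"prometheus","access":"proxy","url":"%s","isDefault":true}' "$url"
}

# POST the payload using basic auth (user/password are placeholders)
add_prometheus_datasource() {
  local grafana_url="$1" user="$2" password="$3"
  curl -s -u "${user}:${password}" \
    -H "Content-Type: application/json" \
    -X POST "${grafana_url}/api/datasources" \
    -d "$(datasource_payload)"
}

# Usage on the instance (replace the password with the one you set):
#   add_prometheus_datasource http://localhost:3000 admin 'your-password'
```

`access: proxy` makes Grafana's backend issue the queries, which is why the container-network name works even though your browser cannot resolve it.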

Select Dashboards, click the + sign at the top right, and choose Import dashboard.

Go to the Grafana dashboards library (grafana.com/grafana/dashboards) and search for cAdvisor. Choose a dashboard and copy its ID. Here we use the "Cadvisor exporter" dashboard.

Paste the ID into the Import dashboard page and click Load. Then select the Prometheus data source and click Import.

🔶 Task-04: Check the logs or docker container names on Grafana UI.
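Grafana's cAdvisor panels read the container names from the `name` label that cAdvisor attaches to each Docker container's metrics, so the same list can be pulled straight from Prometheus to cross-check the UI. This sketch parses a `/api/v1/query` response on stdin; the query in the usage comment (`container_last_seen{name!=""}`) is one reasonable choice, not the only one.

```shell
#!/usr/bin/env bash
# Sketch: print the distinct container names found in a Prometheus
# /api/v1/query JSON response read from stdin.

list_container_names() {
  # The leading quote in '"name"' keeps "__name__" from matching;
  # sed strips the key, grep drops empty names, sort de-duplicates.
  grep -o '"name":"[^"]*"' \
    | sed 's/^"name":"\(.*\)"$/\1/' \
    | grep -v '^$' \
    | sort -u
}

# Usage on the instance:
#   curl -sG http://localhost:9090/api/v1/query \
#     --data-urlencode 'query=container_last_seen{name!=""}' \
#     | list_container_names
```

The printed names should match the containers you see in the dashboard's container selector.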

In conclusion, Day 75's task set up end-to-end container monitoring: cAdvisor collects per-container metrics, Prometheus scrapes and stores them, and Grafana visualizes them on an imported dashboard. With this in place you can spot resource problems early, troubleshoot faster, and keep containerized applications performing well. Observability is a foundational skill in DevOps, so keep exploring and expanding your expertise!

Happy Learning :)


Written by

Trushid Hatmode

As a DevOps Engineer, I'm passionate about building and maintaining robust, efficient, and scalable infrastructure to enable seamless software delivery. With a strong foundation in C/C++, Linux, and a toolkit that spans AWS, Jenkins, Docker, Nagios, Kubernetes, YAML, Ansible, Terraform, Bash Scripting, Git, and GitHub, I'm well-equipped to tackle the challenges of modern software development and deployment.