How to install Prometheus in Kubernetes using Helm charts for monitoring and installation troubleshooting

Anant Saraf


Prerequisites: a running Kubernetes cluster, with helm and kubectl installed and configured to reach it.
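Before running anything against the cluster, it can help to confirm that the client tools are on the PATH. The following is a minimal sketch; `missing_tools` is a hypothetical helper name and is not part of helm or kubectl.

```shell
# Print the name of every tool in the argument list that is not on the PATH.
# missing_tools is a hypothetical helper, not part of helm or kubectl.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}
```

Running `missing_tools helm kubectl` prints nothing when both tools are installed. After that, `kubectl cluster-info` is one way to confirm that the cluster itself is reachable.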

Run the following commands against your cluster. All resources will be deployed in the default namespace.

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install prometheus prometheus-community/kube-prometheus-stack

NAME: prometheus

LAST DEPLOYED: Thu Mar 21 22:06:48 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace default get pods -l "release=prometheus"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.
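The install above puts everything in the default namespace. If you would rather keep the monitoring stack separate, Helm can create a dedicated namespace during the install. This is a sketch under assumptions: `install_stack` is a hypothetical wrapper, and `monitoring` is only an example namespace name.

```shell
# Install the chart into its own namespace instead of "default".
# install_stack is a hypothetical wrapper; "monitoring" is an assumed namespace name.
install_stack() {
  release="${1:-prometheus}"
  namespace="${2:-monitoring}"
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts &&
  helm repo update &&
  helm install "$release" prometheus-community/kube-prometheus-stack \
    --namespace "$namespace" --create-namespace
}
```

Call it as `install_stack prometheus monitoring`. Every later kubectl command then needs `--namespace monitoring` as well.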

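Check what was deployed. The output below was captured from a release named prometheus-stack, so the resource names carry that prefix; with the release name prometheus used in the install command above, the names on your cluster will differ.

$ kubectl.exe get all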

NAME READY STATUS RESTARTS AGE

pod/alertmanager-prometheus-stack-kube-prom-alertmanager-0 2/2 Running 0 7m31s

pod/prometheus-prometheus-stack-kube-prom-prometheus-0 2/2 Running 0 7m31s

pod/prometheus-stack-grafana-5ddbdbb457-b49hp 3/3 Running 0 7m51s

pod/prometheus-stack-kube-prom-operator-6c8f7c8976-hx4nz 1/1 Running 0 7m52s

pod/prometheus-stack-kube-state-metrics-6d555c6cb9-gtbrx 1/1 Running 0 7m51s

pod/prometheus-stack-prometheus-node-exporter-64wh8 1/1 Running 0 7m51s

pod/prometheus-stack-prometheus-node-exporter-xldb9 1/1 Running 0 7m51s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 7m32s

service/prometheus-operated ClusterIP None <none> 9090/TCP 7m31s

service/prometheus-stack-grafana ClusterIP 10.107.159.14 <none> 80/TCP 7m52s

service/prometheus-stack-kube-prom-alertmanager ClusterIP 10.108.200.153 <none> 9093/TCP,8080/TCP 7m52s

service/prometheus-stack-kube-prom-operator ClusterIP 10.104.249.6 <none> 443/TCP 7m52s

service/prometheus-stack-kube-prom-prometheus ClusterIP 10.101.197.82 <none> 9090/TCP,8080/TCP 7m52s

service/prometheus-stack-kube-state-metrics ClusterIP 10.105.177.133 <none> 8080/TCP 7m52s

service/prometheus-stack-prometheus-node-exporter ClusterIP 10.98.86.189 <none> 9100/TCP 7m52s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-stack-prometheus-node-exporter 2 2 2 2 2 kubernetes.io/os=linux 7m52s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus-stack-grafana 1/1 1 1 7m52s

deployment.apps/prometheus-stack-kube-prom-operator 1/1 1 1 7m52s

deployment.apps/prometheus-stack-kube-state-metrics 1/1 1 1 7m52s

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-stack-grafana-5ddbdbb457 1 1 1 7m52s

replicaset.apps/prometheus-stack-kube-prom-operator-6c8f7c8976 1 1 1 7m52s

replicaset.apps/prometheus-stack-kube-state-metrics-6d555c6cb9 1 1 1 7m52s

NAME READY AGE

statefulset.apps/alertmanager-prometheus-stack-kube-prom-alertmanager 1/1 7m32s

statefulset.apps/prometheus-prometheus-stack-kube-prom-prometheus 1/1 7m31s
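Rather than re-running `get all` until every pod shows Running, you can ask kubectl to block until the pods are Ready. A minimal sketch, assuming the pods carry the `release=<name>` label mentioned in the chart's install notes; `wait_for_release` is a hypothetical helper, and pods without that label are not waited on.

```shell
# Block until every pod labelled with the release reports Ready, or fail after the timeout.
# wait_for_release is a hypothetical helper; the label selector comes from the chart's install notes.
wait_for_release() {
  release="${1:-prometheus}"
  namespace="${2:-default}"
  kubectl --namespace "$namespace" wait pods \
    --selector "release=$release" \
    --for=condition=Ready --timeout=300s
}
```

For the release shown in the listing above, the call would be `wait_for_release prometheus-stack default`.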

The following custom resource definitions (CRDs) were deployed:

$ kubectl.exe get crd

NAME CREATED AT

alertmanagerconfigs.monitoring.coreos.com 2024-03-19T17:34:09Z

alertmanagers.monitoring.coreos.com 2024-03-19T17:34:09Z

podmonitors.monitoring.coreos.com 2024-03-19T17:34:09Z

probes.monitoring.coreos.com 2024-03-19T17:34:09Z

prometheusagents.monitoring.coreos.com 2024-03-19T17:34:10Z

prometheuses.monitoring.coreos.com 2024-03-19T17:34:10Z

prometheusrules.monitoring.coreos.com 2024-03-19T17:34:11Z

scrapeconfigs.monitoring.coreos.com 2024-03-19T17:34:11Z

servicemonitors.monitoring.coreos.com 2024-03-19T17:34:11Z

thanosrulers.monitoring.coreos.com 2024-03-19T17:34:12Z

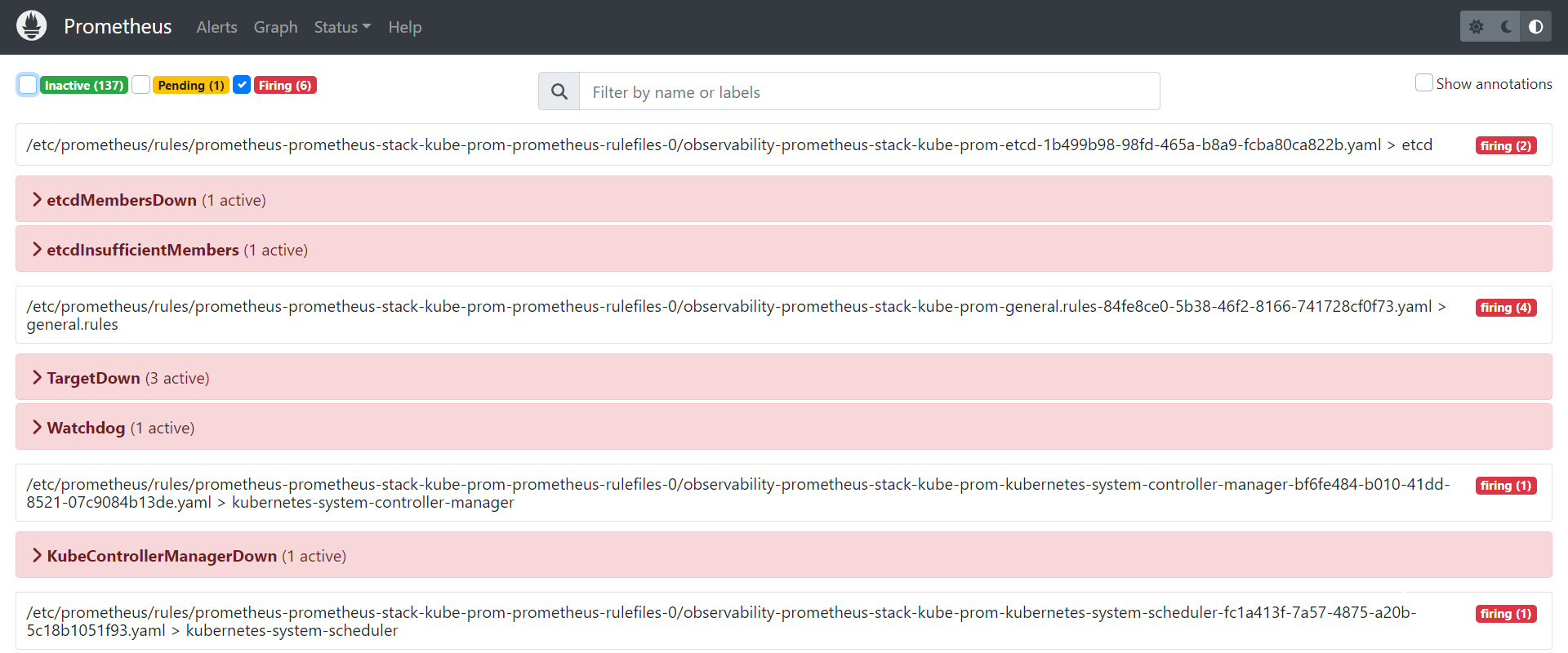
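On a cluster that already has other CRDs installed, the plain listing is longer than the one shown here. One way to see only the Prometheus Operator CRDs is to filter on their API group, `monitoring.coreos.com`. `list_operator_crds` is a hypothetical helper name.

```shell
# List only the CRDs that belong to the Prometheus Operator API group.
# list_operator_crds is a hypothetical helper name.
list_operator_crds() {
  kubectl get crd -o name | grep 'monitoring\.coreos\.com$'
}
```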

These are the Prometheus rules that the chart installs:

$ kubectl.exe get prometheusrule

NAME AGE

prometheus-stack-kube-prom-alertmanager.rules 17m

prometheus-stack-kube-prom-config-reloaders 17m

prometheus-stack-kube-prom-etcd 17m

prometheus-stack-kube-prom-general.rules 17m

prometheus-stack-kube-prom-k8s.rules.container-cpu-usage-second 17m

prometheus-stack-kube-prom-k8s.rules.container-memory-cache 17m

prometheus-stack-kube-prom-k8s.rules.container-memory-rss 17m

prometheus-stack-kube-prom-k8s.rules.container-memory-swap 17m

prometheus-stack-kube-prom-k8s.rules.container-memory-working-s 17m

prometheus-stack-kube-prom-k8s.rules.container-resource 17m

prometheus-stack-kube-prom-k8s.rules.pod-owner 17m

prometheus-stack-kube-prom-kube-apiserver-availability.rules 17m

prometheus-stack-kube-prom-kube-apiserver-burnrate.rules 17m

prometheus-stack-kube-prom-kube-apiserver-histogram.rules 17m

prometheus-stack-kube-prom-kube-apiserver-slos 17m

prometheus-stack-kube-prom-kube-prometheus-general.rules 17m

prometheus-stack-kube-prom-kube-prometheus-node-recording.rules 17m

prometheus-stack-kube-prom-kube-scheduler.rules 17m

prometheus-stack-kube-prom-kube-state-metrics 17m

prometheus-stack-kube-prom-kubelet.rules 17m

prometheus-stack-kube-prom-kubernetes-apps 17m

prometheus-stack-kube-prom-kubernetes-resources 17m

prometheus-stack-kube-prom-kubernetes-storage 17m

prometheus-stack-kube-prom-kubernetes-system 17m

prometheus-stack-kube-prom-kubernetes-system-apiserver 17m

prometheus-stack-kube-prom-kubernetes-system-controller-manager 17m

prometheus-stack-kube-prom-kubernetes-system-kube-proxy 17m

prometheus-stack-kube-prom-kubernetes-system-kubelet 17m

prometheus-stack-kube-prom-kubernetes-system-scheduler 17m

prometheus-stack-kube-prom-node-exporter 17m

prometheus-stack-kube-prom-node-exporter.rules 17m

prometheus-stack-kube-prom-node-network 17m

prometheus-stack-kube-prom-node.rules 17m

prometheus-stack-kube-prom-prometheus 17m

prometheus-stack-kube-prom-prometheus-operator 17m

Let's look at one of them:

$ kubectl.exe get prometheusrule/prometheus-stack-kube-prom-kubernetes-system -o yaml

apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  annotations:
    meta.helm.sh/release-name: prometheus-stack
    meta.helm.sh/release-namespace: default
  creationTimestamp: "2024-03-19T17:43:44Z"
  generation: 1
  labels:
    app: kube-prometheus-stack
    app.kubernetes.io/instance: prometheus-stack
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: kube-prometheus-stack
    app.kubernetes.io/version: 57.0.3
    chart: kube-prometheus-stack-57.0.3
    heritage: Helm
    release: prometheus-stack
  name: prometheus-stack-kube-prom-kubernetes-system
  namespace: default
  resourceVersion: "2674"
  uid: d9969ed5-d81e-4803-a017-ef6b87a98ae3
spec:
  groups:
  - name: kubernetes-system
    rules:
    - alert: KubeVersionMismatch
      annotations:
        description: There are {{ $value }} different semantic versions of Kubernetes
          components running.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/kubernetes/kubeversionmismatch
        summary: Different semantic versions of Kubernetes components running.
      expr: count by (cluster) (count by (git_version, cluster) (label_replace(kubernetes_build_info{job!~"kube-dns|coredns"},"git_version","$1","git_version","(v[0-9]*.[0-9]*).*")))
        > 1
      for: 15m
      labels:
        severity: warning
    - alert: KubeClientErrors
      annotations:
        description: Kubernetes API server client '{{ $labels.job }}/{{ $labels.instance
          }}' is experiencing {{ $value | humanizePercentage }} errors.'
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/kubernetes/kubeclienterrors
        summary: Kubernetes API server client is experiencing errors.
      expr: |-
        (sum(rate(rest_client_requests_total{job="apiserver",code=~"5.."}[5m])) by (cluster, instance, job, namespace)
        /
        sum(rate(rest_client_requests_total{job="apiserver"}[5m])) by (cluster, instance, job, namespace))
        > 0.01
      for: 15m
      labels:
        severity: warning

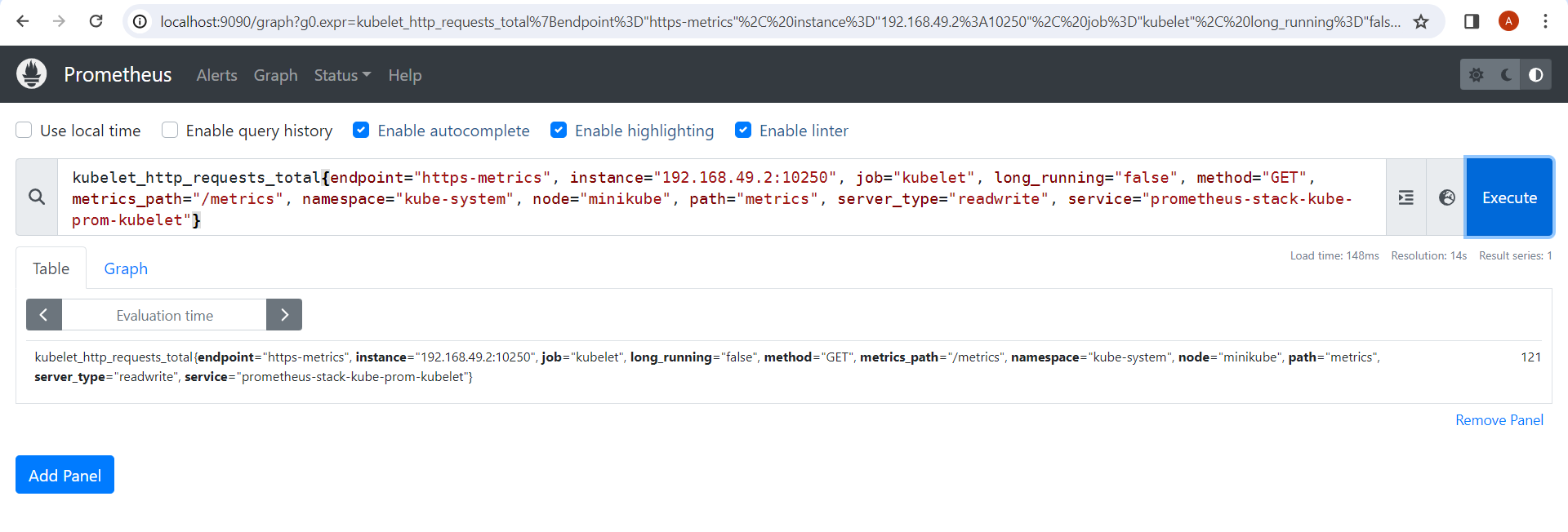
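The full YAML is verbose when you only want to know which alerts a rule object defines. A sketch using kubectl's JSONPath output to print one alert name per line; `list_alerts_in_rule` is a hypothetical helper, and entries that are recording rules have no `alert` field, so they would show up as empty lines.

```shell
# Print the alert name of every rule in a PrometheusRule object, one per line.
# list_alerts_in_rule is a hypothetical helper; pass the PrometheusRule name as $1.
list_alerts_in_rule() {
  kubectl get prometheusrule "$1" \
    -o jsonpath='{range .spec.groups[*].rules[*]}{.alert}{"\n"}{end}'
}
```

For the rule shown above, the call would be `list_alerts_in_rule prometheus-stack-kube-prom-kubernetes-system`.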

To check the metrics, port-forward the Prometheus pod and open http://localhost:9090 in a browser:

$ kubectl.exe port-forward pod/prometheus-prometheus-stack-kube-prom-prometheus-0 9090:9090

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090

Handling connection for 9090

Handling connection for 9090

Handling connection for 9090

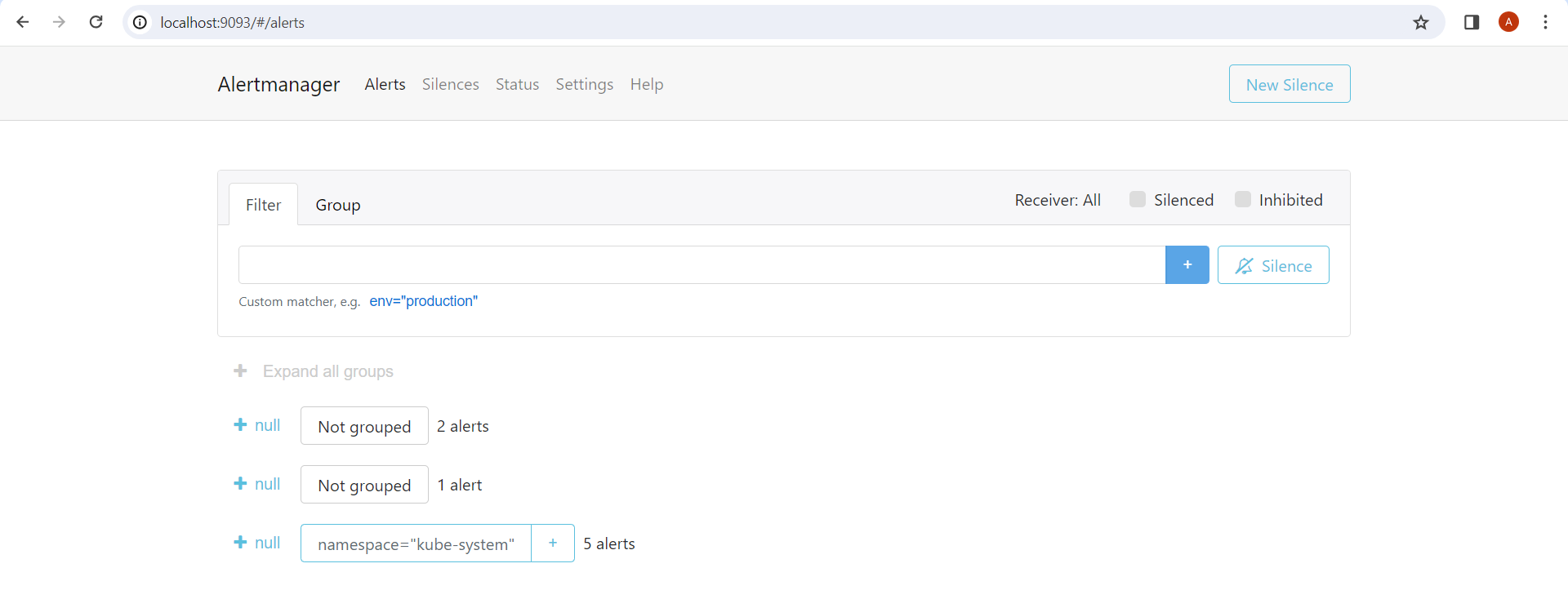
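While the port-forward is running, the same data is also available from the command line through the Prometheus HTTP API. A minimal sketch, assuming the forward listens on localhost:9090 as above and that curl is installed; `prom_query` is a hypothetical helper name.

```shell
# Run an instant PromQL query against the port-forwarded Prometheus.
# prom_query is a hypothetical helper; localhost:9090 matches the port-forward above.
prom_query() {
  curl --silent --get "http://localhost:9090/api/v1/query" \
    --data-urlencode "query=$1"
}
```

For example, `prom_query 'up'` returns a JSON document listing every scrape target and whether its last scrape succeeded.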

The Alertmanager UI can be reached the same way on http://localhost:9093:

$ kubectl.exe port-forward pod/alertmanager-prometheus-stack-kube-prom-alertmanager-0 9093:9093

Forwarding from 127.0.0.1:9093 -> 9093

Forwarding from [::1]:9093 -> 9093

Handling connection for 9093

Handling connection for 9093
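With this port-forward active, the alerts currently held by Alertmanager can also be fetched as JSON from its v2 API. A sketch assuming the forward listens on localhost:9093 and that curl is installed; `am_alerts` is a hypothetical helper name.

```shell
# Fetch the alerts currently known to the port-forwarded Alertmanager.
# am_alerts is a hypothetical helper; the optional argument overrides the local port.
am_alerts() {
  curl --silent "http://localhost:${1:-9093}/api/v2/alerts"
}
```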
