A Dagger module to run my unit tests locally

Emmanuel Sibanda

I built a platform that helps people prepare for data structures and algorithms interviews, guided by AI.

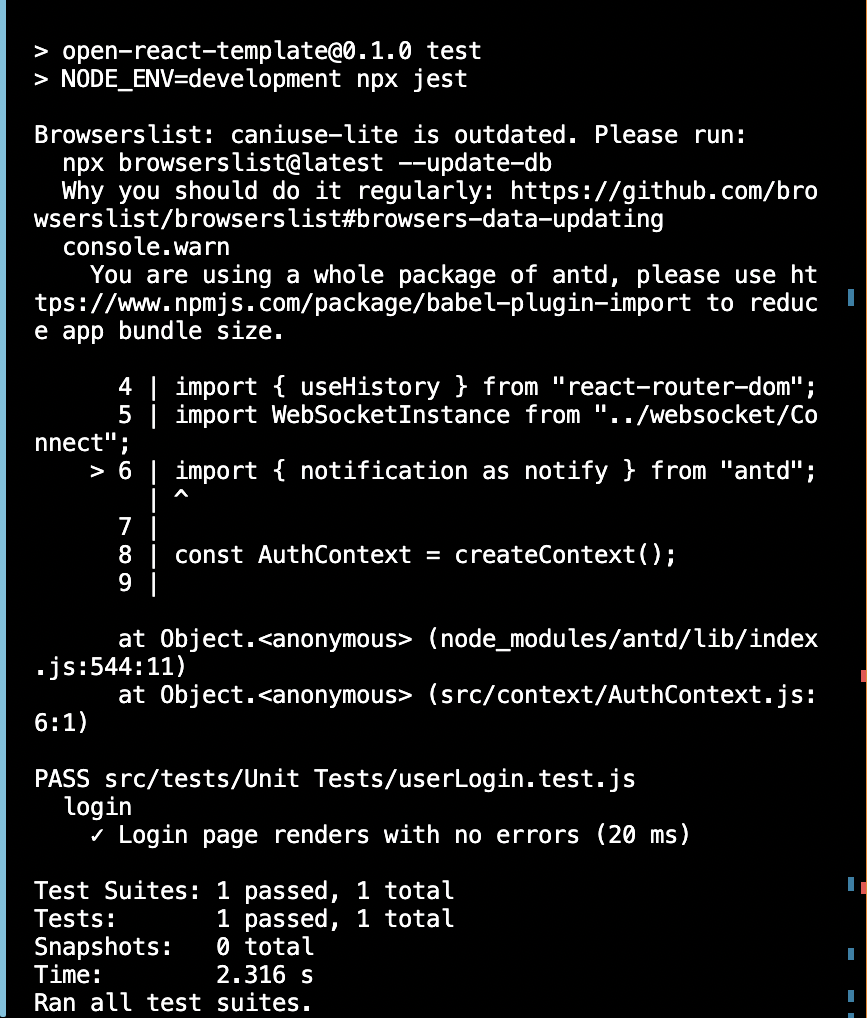

I set up a CI/CD pipeline using Dagger, triggered by a GitHub Actions workflow. This pipeline runs tests, a linter and a formatter, and builds Docker images.

Problem

I kept running into the same problem: because my CI process is only triggered remotely by a GitHub Actions workflow, feedback is slow. I wanted to reduce the time it takes to catch and fix issues by running my unit tests locally, in an environment-agnostic manner.

What do I mean by this?

My unit tests only run when I commit and push my code to GitHub. While I could write a script to run these tests locally,

"scripts": {
  ...
  "test": "jest",
  "coverage": "jest --coverage"
},

this could result in the "works on my machine" problem. I could use act to run my GitHub Actions workflows locally, so I don't have to commit and push code to GitHub to run my tests. However:

act doesn’t replicate the GitHub Actions environment by default. It simulates the workflow runs but doesn’t provide exact replicas of the GitHub-hosted runner environments. This can lead to discrepancies when actions rely on specific runner configurations or dependencies

My workflow includes a job to run unit tests for my application. There could be a scenario where this job relies on a specific version of Node.js and has dependencies installed globally on the runner. When I run my tests locally with act, if the local Docker container doesn't have the exact Node.js version or the other dependencies my tests require, I still have the same problem.

Justifying using Dagger

Dagger presents a potential solution. To paraphrase a video I found that discusses this and other problems and suggests Dagger as a potential solution:

I need a tool to customize CI tasks in a way that enables running these tests both locally and remotely. Data orchestration of tasks should be context-aware so that behavior is appropriate for the environment. The solution should make minimal assumptions about which tools are preinstalled, and should use containers to run the tasks.
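One small piece of that context awareness is simply knowing where a task is running. Here is a minimal sketch, assuming the pipeline only needs to tell a GitHub Actions run apart from a local run. GitHub Actions sets the GITHUB_ACTIONS environment variable to true on its runners; the helper name detect_context is hypothetical and is not part of Dagger.

```python
import os
from typing import Mapping, Optional


def detect_context(env: Optional[Mapping[str, str]] = None) -> str:
    """Return "ci" when running under GitHub Actions, otherwise "local".

    detect_context is a hypothetical helper for illustration, not a Dagger API.
    GitHub Actions sets GITHUB_ACTIONS=true on its runners.
    """
    env = os.environ if env is None else env
    return "ci" if env.get("GITHUB_ACTIONS") == "true" else "local"


if __name__ == "__main__":
    print(f"Running in context: {detect_context()}")
```

A task could use a check like this to decide, for example, whether to publish an image or only run the tests.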

Defining the task

Before defining the task, I dug deeper into Dagger and the Daggerverse, a searchable index of all public Dagger Functions. Dagger introduced Dagger Functions to allow users to extend what they can do with the Dagger API using custom code. I can package these functions into reusable modules and call the functions directly from the CLI.

With this added context I defined my task:

building a Dagger Function to be packaged as a module. This function will build Docker images and run my unit tests, resolving the "it works on my machine" problem and ensuring that when tests pass locally they will also pass in development

packaging it into a reusable module

Diving into the Dagger Documentation

I started off by installing the Dagger CLI

brew install dagger/tap/dagger

and called the test function provided in the documentation

dagger -m github.com/shykes/daggerverse/hello@v0.1.2 call hello

It's important to understand the difference between a Dagger Module and a Dagger Function. A Dagger Function is self-explanatory: it is regular code written in Go, Python or TypeScript. Functions can be packaged, shared and reused using Dagger Modules. I am building a Dagger Function that I package into a module. Once I have successfully tested the module, I will upload it to the Daggerverse.

It is conceivable that I would need to add dependencies to my module. These dependencies can be added to the module's dagger.json.

Problem

Logging output from running test in container

When Dagger runs my image, this occurs inside a container. In this scenario:

output = await (
    dag.container()
    .from_(image_address)
    .with_mounted_directory("/app", src)
    .with_workdir("/app")
    .with_exec(["sh", "-c", "npm ci --legacy-peer-deps"])
    .with_exec(["sh", "-c", "npm run test"])
    .stdout()
)

dag.container() returns an "OCI-compatible container, also known as a Docker container." When I chain stdout() onto it, I get back the output of the last executed command, so output ends up holding that text. This sounds like what I want. However:

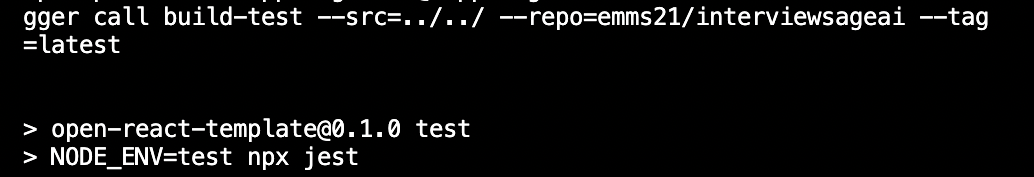

Running npm run test should trigger this script from the docker image of my project:

"test": "npx jest",

Let's go deeper...

I use npx to trigger Jest to execute all test files in my project. I am using npx rather than npm because I need to execute a package that is not installed in my image: when I created this Docker image, I pruned all devDependencies.

# Remove development dependencies
RUN npm prune --omit=dev --legacy-peer-deps

But why?

Let's start with the creation of my Docker image. My Docker image is "built up from a series of layers. Each layer represents an instruction in the image's Dockerfile... each layer is only a set of differences from the layer before it". When the Docker image is built, if a layer changes, it needs to be rebuilt along with all the layers that come after it. To optimize the speed of my build, I defined a multi-stage build process.

There are 3 stages in my build:

A base stage: setting up a Node.js environment with a specified version for consistency.

A build stage: installing all dependencies, copying the application source code and running build scripts.

A final stage: ensuring that only the built application and the necessary runtime environment are installed, keeping the final image size down.

In my build stage specifically, I pruned devDependencies to further reduce the size of my image. I did this because these packages are not necessary to run my app. I don't need Jest to run my app, and that is my rationale for continuing to prune devDependencies.
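To make the layer caching rule quoted earlier concrete, here is a simplified sketch. It assumes a layer is identified only by its instruction text (real Docker builds also take the contents of copied files into account), and the function name layers_to_rebuild and the example Dockerfile instructions are hypothetical.

```python
from typing import List


def layers_to_rebuild(previous: List[str], current: List[str]) -> List[str]:
    """Return the instructions in `current` that must be rebuilt.

    Cached layers are reused until the first instruction that differs from
    the previous build; that layer and every layer after it are rebuilt.
    """
    for index, instruction in enumerate(current):
        if index >= len(previous) or previous[index] != instruction:
            return current[index:]
    return []


# Hypothetical instructions, for illustration only.
previous_build = ["FROM node:20", "COPY package*.json ./", "RUN npm ci", "COPY . ."]
current_build = [
    "FROM node:20",
    "COPY package*.json ./",
    "RUN npm ci --legacy-peer-deps",
    "COPY . .",
]

print(layers_to_rebuild(previous_build, current_build))
# ['RUN npm ci --legacy-peer-deps', 'COPY . .']
```

This is why instructions that change rarely are best placed early in a stage: a change near the top invalidates everything below it.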

All this to say: I need to use npx because it "allows you to run an arbitrary command from an npm package (either one installed locally, or fetched remotely)". Since Jest will not be available in the image, npx will install this package.
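A quick way to confirm whether a package such as Jest survived pruning is to look for its binary in the project's node_modules/.bin directory, which is where npm links the executables of locally installed packages. The helper below is a hypothetical sketch of that check, not a reimplementation of how npx resolves commands.

```python
from pathlib import Path


def is_installed_locally(project_dir: str, command: str) -> bool:
    """Return True if `command` has an executable linked in node_modules/.bin.

    is_installed_locally is a hypothetical helper for illustration only.
    """
    return (Path(project_dir) / "node_modules" / ".bin" / command).exists()


if __name__ == "__main__":
    # "/app" matches the working directory used in this article.
    print(is_installed_locally("/app", "jest"))
```

If this returns False inside the image, npx has to fetch the package before it can run it.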

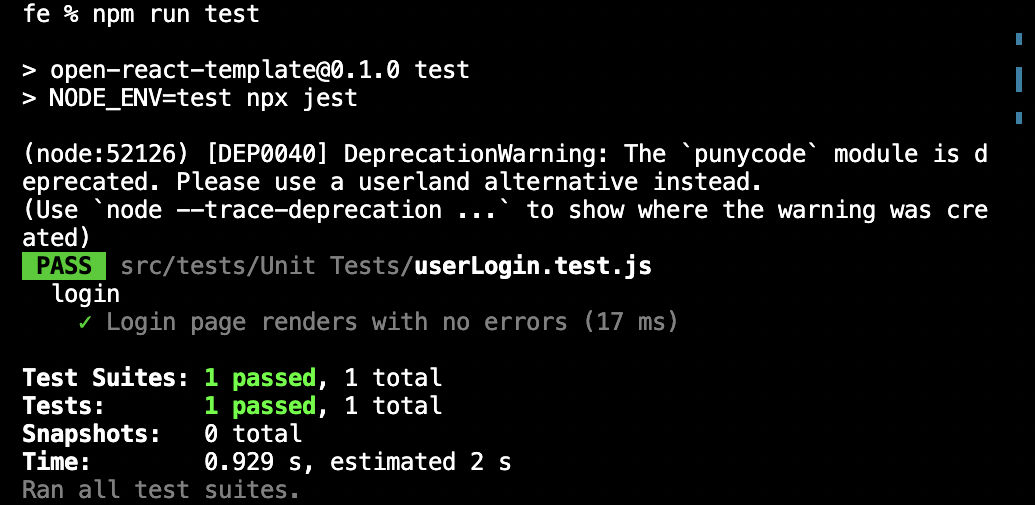

Going back to the problem at hand: Jest sets the Node environment (NODE_ENV) to test by default when it is not already set, so the environment is initially set to test. stdout() is capturing this output specifically.

Let's dive in deeper again...

.with_exec(["sh", "-c", "npm run test"])

I am running npm run test as a shell command. Three standard streams are established when this command is executed: stdin, stdout and stderr.
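To see these streams separately, here is a minimal sketch that uses Python's subprocess module rather than Dagger. The shell command is a made-up stand-in for npm run test that writes one line to stdout and one line to stderr; the messages are invented for illustration.

```python
import subprocess

# Stand-in for "npm run test": one line on stdout, one line on stderr.
command = 'echo "environment: test"; echo "Tests: 3 passed" >&2'

result = subprocess.run(
    ["sh", "-c", command],
    stdin=subprocess.DEVNULL,  # the command reads nothing from stdin
    capture_output=True,       # capture stdout and stderr separately
    text=True,
    check=True,
)

print("stdout:", result.stdout.strip())
print("stderr:", result.stderr.strip())
```

Anything that reads only result.stdout sees the first line and never sees the second.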

The Dagger stdout() implementation specifically gets output from the stdout stream:

ctx = self.select("stdout", _args)

This makes sense and is self-explanatory. The problem is that, in my case, when I run npx jest locally I get a warning along with the test results.

I expected Jest to write its test results, including passes and failures, to the stdout stream. In practice, Jest's default reporter writes most of its report to stderr, which would explain why stdout() was not capturing the full output from running Jest.

Redirection...

"Before a command is executed, its input and output may be redirected." I used redirection to send the output from stderr to stdout. By doing this, I ensure all output is captured in a single place.

.with_exec(["sh", "-c", "npm run test 2>&1"])

In the above, I am redirecting output from stderr to stdout, consolidating both streams so that stdout() captures the full output from executing Jest to run all my unit tests.
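The effect of 2>&1 can be reproduced outside Dagger. This is a minimal sketch using Python's subprocess module, in which a made-up shell command stands in for a test runner that reports on stderr. The helper name run_and_capture_stdout and the messages are hypothetical.

```python
import subprocess


def run_and_capture_stdout(command: str) -> str:
    """Run `command` through sh -c and return only what reaches stdout."""
    result = subprocess.run(
        ["sh", "-c", command],
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,  # whatever stays on stderr is discarded
        text=True,
        check=True,
    )
    return result.stdout


# Stand-in for a test runner that writes its report to stderr.
fake_test_run = 'echo "environment: test"; echo "Tests: 3 passed" >&2'

# Without redirection, only the stdout line is captured.
without_redirect = run_and_capture_stdout(fake_test_run)

# Grouping with { ...; } applies 2>&1 to both echo commands, not just the last.
with_redirect = run_and_capture_stdout(f"{{ {fake_test_run}; }} 2>&1")

print(without_redirect.splitlines())
print(with_redirect.splitlines())
```

The braces are only needed here because the stand-in is two commands; npm run test 2>&1 is a single command, so the redirection covers everything it prints.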

And now I have a Dagger Module to run my unit tests.

