How to Set Up Dynamic NFS Provisioning in a Kubernetes Cluster

Hakan Bayraktar

Dynamic NFS storage provisioning in Kubernetes automates the creation and management of NFS volumes for your applications, so you no longer need to pre-provision storage or create volumes by hand. When an application creates a persistent volume claim (PVC), the NFS provisioner creates a subdirectory on the NFS share and a matching persistent volume (PV) for it, which is then bound to the claim. If you already have an external NFS share and want to use it from a pod or deployment, the nfs-subdir-external-provisioner lets you set up a storage class that manages these persistent volumes automatically.

Prerequisites

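A running Kubernetes cluster

A regular user with admin rights on the Kubernetes cluster

An Ubuntu/Debian host for the NFS server (10.124.0.9 in this guide), reachable from the cluster nodes on the 10.124.0.0/24 network

Internet connectivity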

Step 1: Installing the NFS Server

Run the following on the host that will serve the share (10.124.0.9 in this guide):

sudo apt-get update

sudo apt-get install nfs-common nfs-kernel-server -y

Create a directory to export:

sudo mkdir -p /data/nfs

sudo chown nobody:nogroup /data/nfs

sudo chmod 2770 /data/nfs
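Before exporting the directory, you can confirm that its owner and mode came out as intended. The helper below is a minimal sketch; check_export_dir is a hypothetical name, and it assumes GNU stat, which is the default on Ubuntu/Debian.

```bash
#!/usr/bin/env bash
# check_export_dir DIR [EXPECTED]
# Hypothetical helper: compares DIR's "owner:group mode" (GNU stat format)
# with EXPECTED, which defaults to the nobody:nogroup / 2770 set above.
check_export_dir() {
  local dir="$1" expected="${2:-nobody:nogroup 2770}" actual
  actual=$(stat -c '%U:%G %a' "$dir") || return 1
  if [ "$actual" != "$expected" ]; then
    echo "$dir: expected '$expected', found '$actual'" >&2
    return 1
  fi
  echo "$dir: $actual"
}
```

Running check_export_dir /data/nfs after the commands above should succeed and echo the owner and mode back; a non-zero exit status means the chown or chmod did not take effect.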

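Add the directory to /etc/exports so that hosts on the 10.124.0.0/24 network can mount it read-write, then export it and restart the NFS service: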

echo -e "/data/nfs\t10.124.0.0/24(rw,sync,no_subtree_check,no_root_squash)" | sudo tee -a /etc/exports

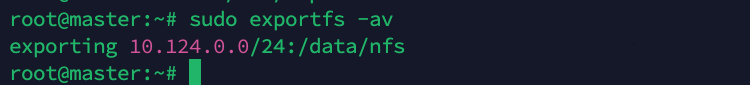
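Appending with tee -a adds a duplicate line every time the command is re-run. A minimal idempotent alternative, sketched here as a hypothetical add_export function, only appends when the directory is not already listed. It writes to /etc/exports by default, so it has to run as root (for example inside sudo -i).

```bash
#!/usr/bin/env bash
# add_export DIR CLIENT OPTIONS [EXPORTS_FILE]
# Hypothetical helper: appends "DIR<TAB>CLIENT(OPTIONS)" to the exports file
# unless an entry for DIR already exists. Must run as root for /etc/exports.
add_export() {
  local dir="$1" client="$2" opts="$3" file="${4:-/etc/exports}"
  # awk exits 0 only if some line's first field is exactly DIR.
  if [ -f "$file" ] && awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file"; then
    echo "$dir is already exported in $file" >&2
    return 0
  fi
  printf '%s\t%s(%s)\n' "$dir" "$client" "$opts" >> "$file"
}
```

For this guide the call would be add_export /data/nfs 10.124.0.0/24 rw,sync,no_subtree_check,no_root_squash, followed by the exportfs command below.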

sudo exportfs -av

# Restart the NFS service and check its status

sudo systemctl restart nfs-kernel-server

sudo systemctl status nfs-kernel-server

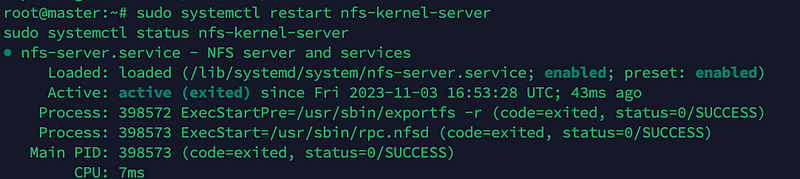

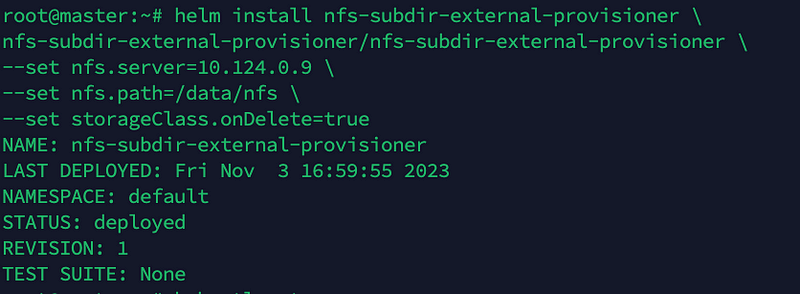

Verify that the export is published:

/sbin/showmount -e 10.124.0.9
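To script this check, the showmount output can be piped into a small filter. export_listed is a hypothetical name, and the sketch assumes showmount's usual layout: an "Export list for ..." header line followed by one exported path per line.

```bash
#!/usr/bin/env bash
# export_listed DIR
# Hypothetical filter: reads `showmount -e` output on stdin and succeeds only
# if DIR appears as an exported path (line 1 is the "Export list" header).
export_listed() {
  awk -v d="$1" 'NR > 1 && $1 == d { found = 1 } END { exit !found }'
}
```

Usage: /sbin/showmount -e 10.124.0.9 | export_listed /data/nfs && echo "export is visible".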

Step 2: Install NFS Client Packages on the Kubernetes Nodes

Every Kubernetes node that may run a pod using these volumes needs the NFS client utilities, because the kubelet mounts the share on the node itself. On Ubuntu-based nodes, install nfs-common:

sudo apt update

sudo apt install nfs-common -y
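To confirm a node is ready, the sketch below checks the package status through dpkg-query and looks for the mount.nfs helper that nfs-common installs, which the node's mount -t nfs call relies on. nfs_client_ready is a hypothetical name; run it on each node, for example over SSH.

```bash
#!/usr/bin/env bash
# nfs_client_ready
# Hypothetical check for a Debian/Ubuntu node: nfs-common must be installed
# and its mount.nfs helper present.
nfs_client_ready() {
  local status
  status=$(dpkg-query -W -f='${Status}' nfs-common 2>/dev/null)
  if [ "$status" != "install ok installed" ]; then
    echo "nfs-common is not installed (status: ${status:-none})" >&2
    return 1
  fi
  if [ ! -x /sbin/mount.nfs ] && [ ! -x /usr/sbin/mount.nfs ]; then
    echo "mount.nfs helper not found" >&2
    return 1
  fi
  echo "NFS client ready on ${HOSTNAME:-this node}"
}
```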

Step 3: Install and Configure the NFS Subdir External Provisioner

Deploy the NFS Subdir External Provisioner in your cluster. It watches for PVCs that request its storage class and automatically creates NFS-backed Persistent Volumes (PVs) for them.

Install Helm 3 on Debian/Ubuntu with the following commands:

curl https://baltocdn.com/helm/signing.asc | sudo apt-key add -

sudo apt-get install apt-transport-https --yes

echo "deb https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list

sudo apt-get update

sudo apt-get install helm

Add the Helm repository for nfs-subdir-external-provisioner:

helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner

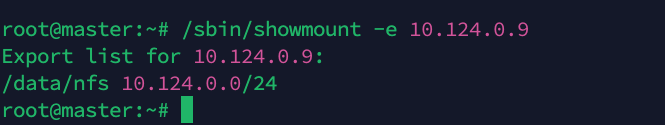

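Install the Helm chart, pointing it at the NFS server and export path:

helm install nfs-subdir-external-provisioner \
  nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
  --set nfs.server=10.124.0.9 \
  --set nfs.path=/data/nfs \
  --set storageClass.onDelete=true

Note that the chart documents storageClass.onDelete as accepting delete or retain. With any other value, including true, the provisioner falls back to storageClass.archiveOnDelete (true by default), so a deleted claim's directory is renamed with an archived- prefix rather than removed.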

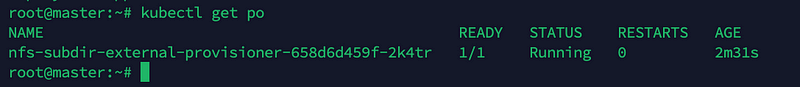

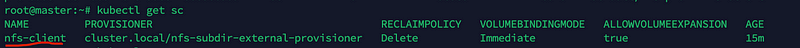
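Re-running helm install fails once the release exists. A minimal sketch of an idempotent variant uses the same release name and chart with helm upgrade --install, which installs the release when it is missing and upgrades it otherwise; helm repo add --force-update overwrites an existing repository entry instead of failing. install_nfs_provisioner is a hypothetical name, and any extra arguments are passed to Helm unchanged.

```bash
#!/usr/bin/env bash
# install_nfs_provisioner SERVER EXPORT_PATH [EXTRA_HELM_ARGS...]
# Hypothetical wrapper: adds/refreshes the chart repository, then installs or
# upgrades the nfs-subdir-external-provisioner release.
install_nfs_provisioner() {
  local server="$1" path="$2"
  shift 2
  helm repo add --force-update nfs-subdir-external-provisioner \
    https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner || return 1
  helm repo update || return 1
  helm upgrade --install nfs-subdir-external-provisioner \
    nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
    --set nfs.server="$server" \
    --set nfs.path="$path" \
    "$@"
}
```

Calling install_nfs_provisioner 10.124.0.9 /data/nfs --set storageClass.onDelete=true passes the same values as the command above.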

# Check pods and storage classes:

kubectl get pod

kubectl get sc

The provisioner pod is running, and a dynamic storage class named nfs-client has been created.

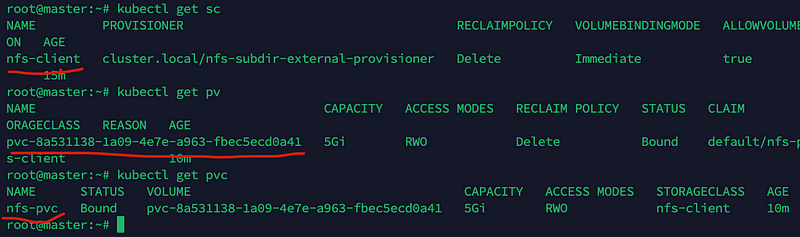
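For a scripted version of this check, kubectl rollout status blocks until the provisioner Deployment is ready, after which the storage class can be queried. This sketch assumes the Deployment is named nfs-subdir-external-provisioner (the name the chart is expected to derive from the release name used above) and lives in the current namespace; check_nfs_provisioner is a hypothetical name.

```bash
#!/usr/bin/env bash
# check_nfs_provisioner [STORAGE_CLASS]
# Hypothetical check: waits up to 120s for the provisioner Deployment (name
# assumed from the release above), then prints the StorageClass's provisioner.
check_nfs_provisioner() {
  local sc="${1:-nfs-client}"
  kubectl rollout status deployment/nfs-subdir-external-provisioner --timeout=120s >/dev/null || return 1
  kubectl get storageclass "$sc" -o jsonpath='{.provisioner}{"\n"}'
}
```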

Step 4: Testing Dynamic PVC Creation

Now test dynamic provisioning by creating a PVC that requests the nfs-client storage class:

nfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-client
  resources:
    requests:
      storage: 5Gi
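If you need more claims than this one, a small shell function can render the same manifest with a different name, size, or access mode and pipe it to kubectl apply -f -. pvc_manifest and the shared-data claim in the usage line are hypothetical. NFS volumes also support ReadWriteMany, which lets pods on different nodes mount the same claim.

```bash
#!/usr/bin/env bash
# pvc_manifest NAME SIZE [ACCESS_MODE] [STORAGE_CLASS]
# Hypothetical helper: prints a PVC manifest shaped like nfs-pvc.yaml.
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ${3:-ReadWriteOnce}
  storageClassName: ${4:-nfs-client}
  resources:
    requests:
      storage: $2
EOF
}
```

Usage: pvc_manifest shared-data 1Gi ReadWriteMany | kubectl apply -f -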

Next, create an NGINX Deployment that mounts this claim as its web directory:

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nfs-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: nfs-nginx
          persistentVolumeClaim:
            claimName: nfs-pvc
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: nfs-nginx
              mountPath: /usr/share/nginx/html

Apply both manifests, the PVC first and then the Deployment, to create an NGINX pod with the NFS-backed volume mounted at /usr/share/nginx/html:

kubectl apply -f nfs-pvc.yaml

kubectl apply -f deployment.yaml

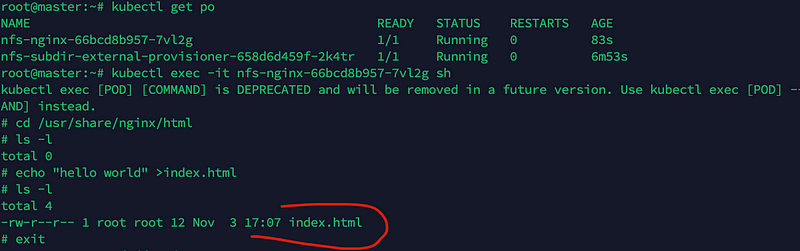
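Provisioning happens asynchronously, so a script that applies these manifests may want to wait until the claim is Bound before continuing. A minimal polling sketch; wait_for_pvc_bound is a hypothetical name.

```bash
#!/usr/bin/env bash
# wait_for_pvc_bound NAME [TIMEOUT_SECONDS]
# Hypothetical helper: polls the claim's status.phase once per second until it
# reports Bound or the timeout (default 60s) expires.
wait_for_pvc_bound() {
  local name="$1" timeout="${2:-60}" elapsed=0 phase=""
  while [ "$elapsed" -lt "$timeout" ]; do
    phase=$(kubectl get pvc "$name" -o jsonpath='{.status.phase}' 2>/dev/null)
    if [ "$phase" = "Bound" ]; then
      echo "PVC $name is Bound"
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "PVC $name not Bound after ${timeout}s (phase: ${phase:-unknown})" >&2
  return 1
}
```

Recent kubectl releases can do the same in one command: kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/nfs-pvc --timeout=60s.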

Now find the pod name with kubectl get po (yours will differ from the one below), open a shell in the pod, and create an index.html file under /usr/share/nginx/html:

kubectl get po

kubectl exec -it nfs-nginx-66bcd8b957-7vl2g -- sh

# now we are inside the pod

# cd /usr/share/nginx/html

# ls -l

# echo "hello world" >index.html

# ls -l

# exit

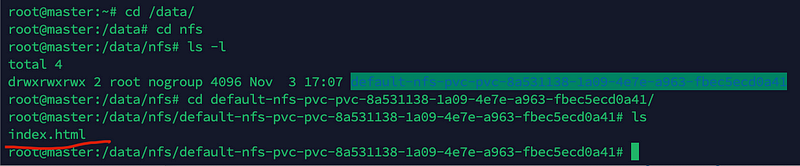
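The interactive session can also be replaced with two non-interactive kubectl exec calls, which is handy in a script. kubectl exec accepts deploy/NAME and picks one of the Deployment's pods, so the generated pod name is not needed. web_roundtrip is a hypothetical name.

```bash
#!/usr/bin/env bash
# web_roundtrip DEPLOYMENT TEXT
# Hypothetical helper: writes TEXT to index.html on the NFS-backed web root of
# one of the Deployment's pods, then reads the file back.
web_roundtrip() {
  local deploy="$1" text="$2"
  # TEXT is passed as $1 of the inner sh -c script to avoid quoting problems.
  kubectl exec "deploy/$deploy" -- \
    sh -c 'printf "%s\n" "$1" > /usr/share/nginx/html/index.html' sh "$text" || return 1
  kubectl exec "deploy/$deploy" -- cat /usr/share/nginx/html/index.html
}
```

For this guide, web_roundtrip nfs-nginx "hello world" should print the text back from the pod.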

Back on the NFS server, the provisioner has created a subdirectory for the claim under /data/nfs, named <namespace>-<PVC name>-<PV name>:

cd /data/nfs

ls -l

cd default-nfs-pvc-pvc-8a531138-1a09-4e7e-a963-fbec5ecd0a41/

cat index.html

The index.html file created inside the pod is present in this directory on the server, which confirms that the pod reads and writes the NFS share through the dynamically provisioned volume.
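Rather than guessing the generated directory name, you can ask Kubernetes for it: the claim's spec.volumeName gives the PV name, and the PV's spec.nfs.path is the directory on the server. nfs_path_for_pvc is a hypothetical helper name, and the namespace defaults to default as in this guide.

```bash
#!/usr/bin/env bash
# nfs_path_for_pvc PVC [NAMESPACE]
# Hypothetical helper: prints the NFS server directory backing a bound claim.
nfs_path_for_pvc() {
  local pvc="$1" ns="${2:-default}" pv
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
  if [ -z "$pv" ]; then
    echo "PVC $pvc is not bound to a PV yet" >&2
    return 1
  fi
  # PVs are cluster-scoped, so no namespace flag is needed here.
  kubectl get pv "$pv" -o jsonpath='{.spec.nfs.path}{"\n"}'
}
```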

Written by

Hakan Bayraktar

Hi there 👋 I am Hakan. I work as a DevOps Engineer. I’m looking to collaborate on Python, AWS, CI/CD, and Kubernetes projects. ✅ AWS Certified Solutions Architect with 15+ years of experience in Linux, DevOps, AWS, Google Cloud Platform, cloud infrastructures, cloud migrations, system automation, CI/CD, and much more! ✓ Solution-oriented DevOps expert focused on making your infrastructure optimized, stable, and reliable ✓ Strong experience architecting, running, and managing complex systems and infrastructures ✓ Main focuses: automation, optimization, monitoring, and alerting in cloud or large-scale server environments ✓ I love automation, coding, problem-solving, learning new skills, and implementing them to solve different challenges. ✅ Certifications: AWS Certified Solutions Architect, Certified Kubernetes Administrator (CKA), Red Hat Certified Technician (Red Hat Enterprise Linux 5), Certified Ethical Hacker ✅ Core skills and technologies: Cloud platforms: AWS, GCP, DigitalOcean; Containers: Docker, Kubernetes; Monitoring: Zabbix, Grafana, ELK, Prometheus, New Relic; Automation: Ansible, Terraform, CDK; CI/CD: Jenkins, GitLab, GitHub Actions; Scripting: Bash, Python; Databases: MySQL, PostgreSQL; NoSQL: Redis, Elasticsearch; Web servers: Apache, Nginx, Lighttpd, WildFly, Tomcat; Operating systems: CentOS, Debian, Red Hat, Ubuntu, Amazon Linux