Highly Available Kubernetes Cluster on Hetzner

Fred

As someone who plays around a lot with Kubernetes clusters, and especially with the RKE2 distribution, I thought: "Why shouldn't I write a blog post about it?" So here I am, showing you how to create a Kubernetes cluster on Hetzner Cloud and make it highly available.

Hetzner offers affordable infrastructure services that are easy to use and suitable for both hobby and professional projects. In this guide, we'll focus on their cloud servers in particular.

Rancher Kubernetes Engine 2 (RKE2) is a Kubernetes distribution developed by Rancher. What makes this distribution special is its focus on security and compliance within the U.S. Federal Government sector. You can read more about it on their homepage.

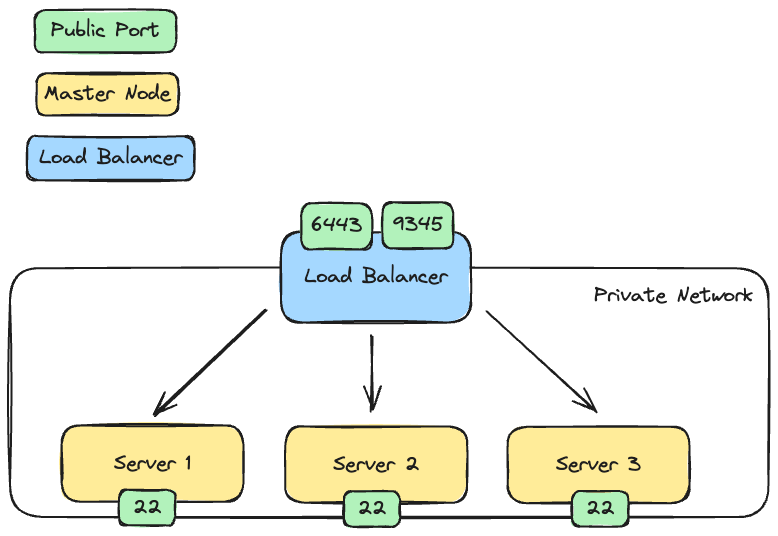

The following diagram shows what our infrastructure is going to look like:

Prerequisites

A Hetzner account

kubectl installed on your local machine

An SSH key

Basic knowledge of Kubernetes

We are going to create everything in an imperative way to clearly illustrate our actions. Normally you would do that in a declarative way using Terraform or similar tools.

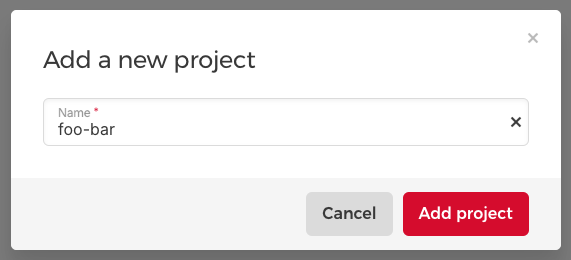

Project

Let's start by creating a new project in the Hetzner Cloud Console:

From now on every resource will be created inside of that project.

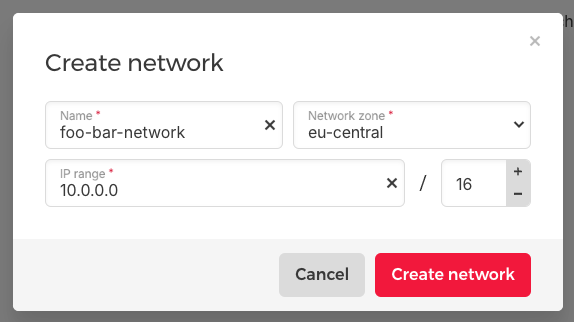

Network

Next, we create a new network. This setup ensures that our nodes communicate with each other exclusively over the private network. Because this network is considered secure, all ports on each server's network adapter attached to it remain open.

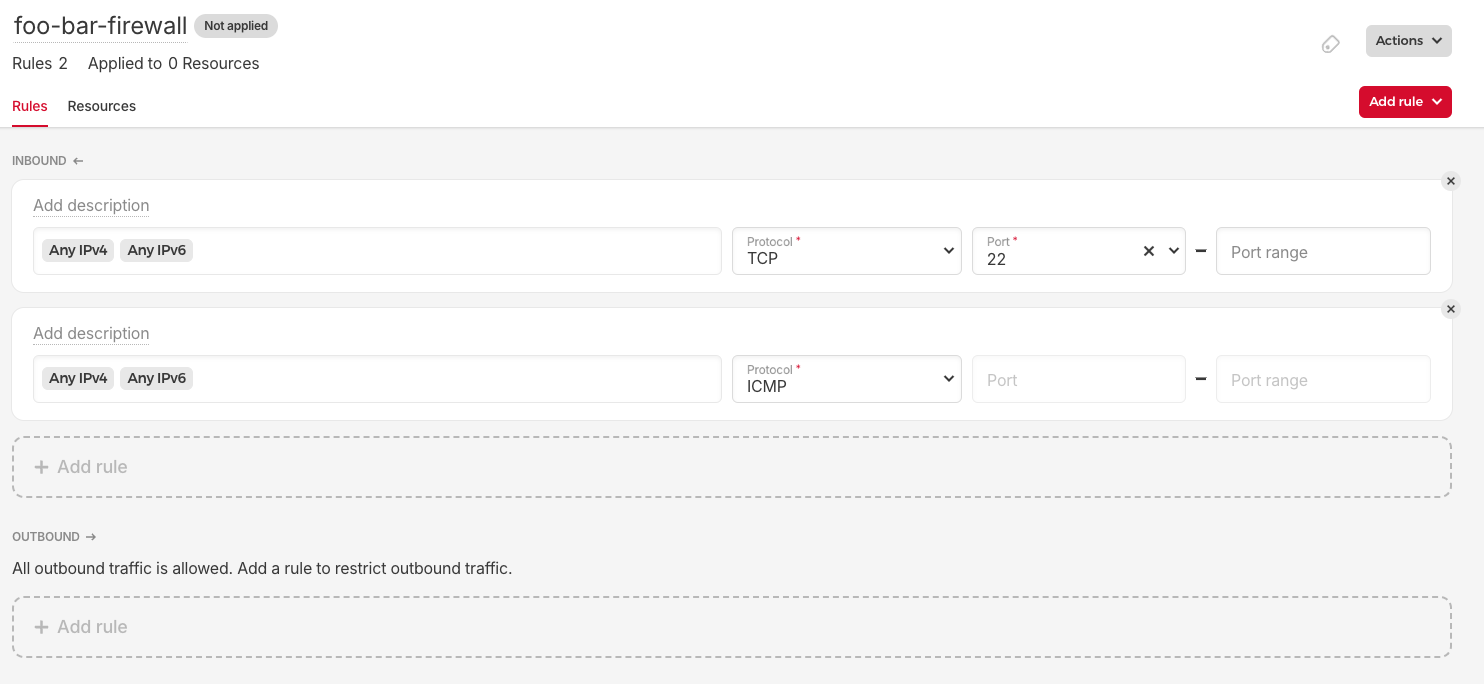

Firewall

We create a firewall to restrict access to our nodes. We only allow inbound traffic on port 22 so that we can reach our nodes via SSH. As mentioned above, we don't have to open any additional ports because our cluster communicates over the private network.

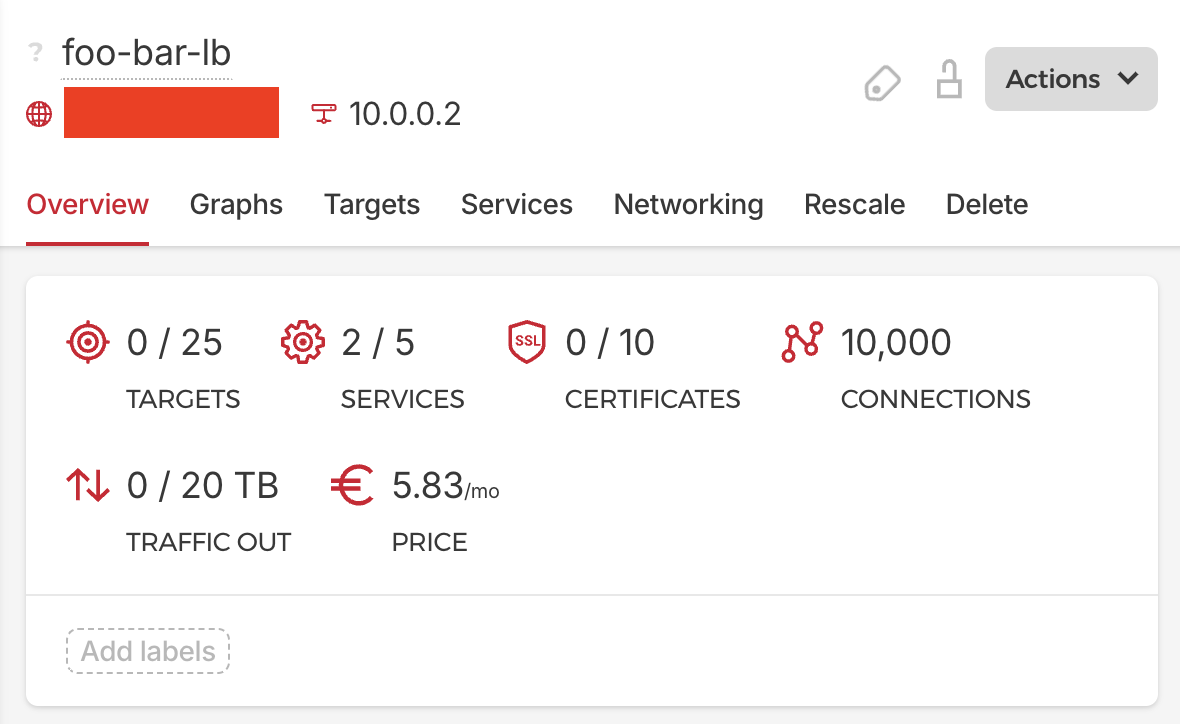

Load Balancer

To be able to register new nodes with the cluster, we need a fixed registration address. We are going to use a load balancer for that, so we create one with the following configuration:

Services:

TCP 6443 --> 6443

TCP 9345 --> 9345

Networking:

- Select the created private network "foo-bar-network"

We expose port 6443 so that we can connect to our cluster with kubectl through the load balancer. Port 9345 is used so that other master nodes can register with the cluster. This port can be closed once we have added all nodes to the cluster.
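For readers who prefer the command line over the Cloud Console, the same load balancer could be sketched with Hetzner's hcloud CLI. This is only an illustration and not what this guide uses: it assumes the hcloud CLI is installed and authenticated, and the load balancer name foo-bar-lb, the type lb11, the location fsn1 and the helper names are example values chosen here. By default the sketch only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch only: assumes the hcloud CLI is installed and authenticated.
# foo-bar-lb, lb11 and fsn1 are example values, not taken from the article.

# Print the command unless DRY_RUN=0 is set, in which case it is executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper. Arguments: <load_balancer_name> <private_network_name>
create_registration_lb() {
  local lb_name="$1"
  local network="$2"

  run hcloud load-balancer create --name "$lb_name" --type lb11 --location fsn1
  run hcloud load-balancer attach-to-network "$lb_name" --network "$network"
  # 6443: Kubernetes API (kubectl), 9345: RKE2 node registration
  run hcloud load-balancer add-service "$lb_name" --protocol tcp --listen-port 6443 --destination-port 6443
  run hcloud load-balancer add-service "$lb_name" --protocol tcp --listen-port 9345 --destination-port 9345
}
```

Calling `create_registration_lb foo-bar-lb foo-bar-network` prints the four commands for review; prefixing the call with `DRY_RUN=0` would actually run them.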

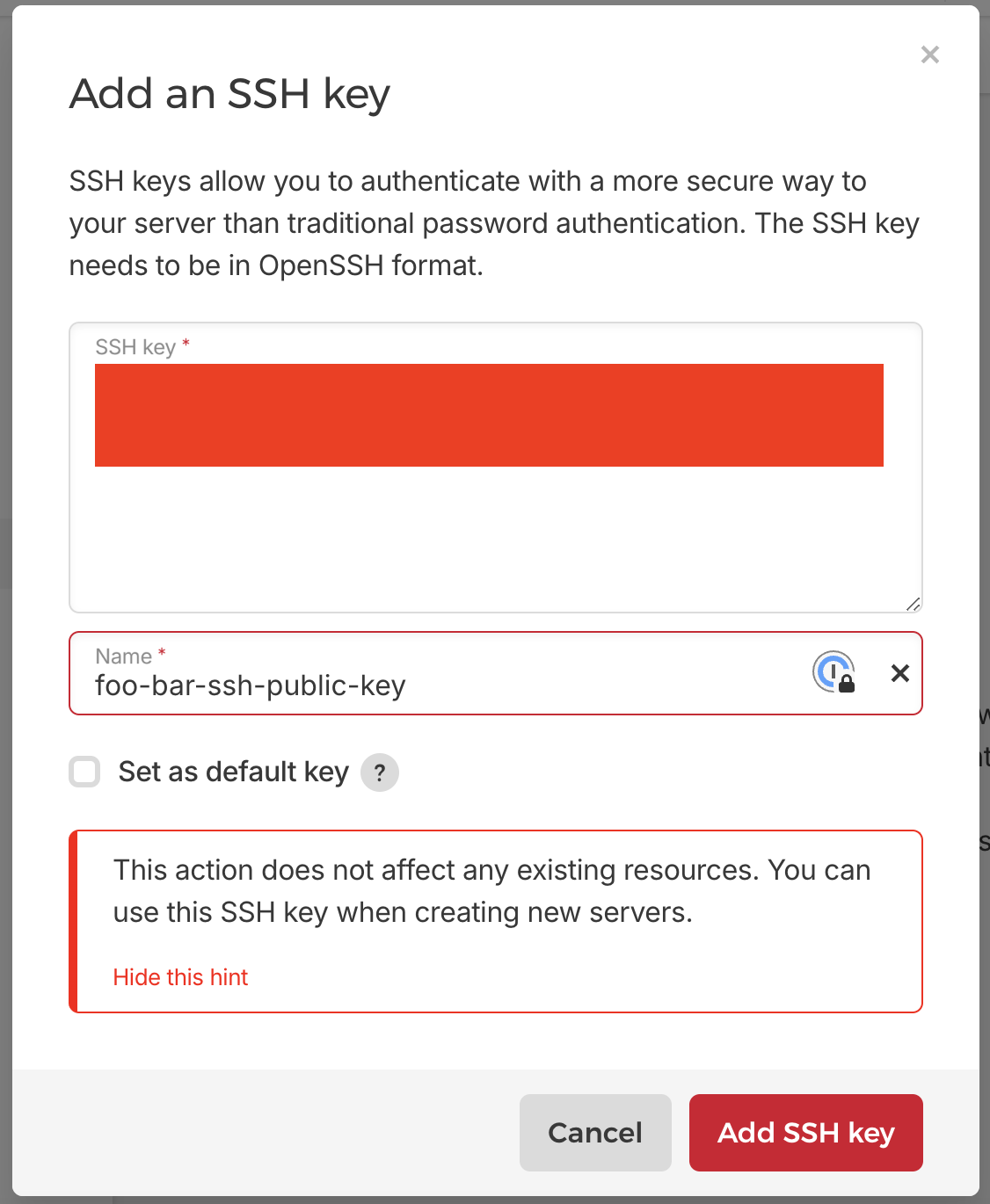

SSH Key

Add your SSH key in the "Security" section.

First Master Node

Now let's create our first master node by creating a new server. Use the following server configuration:

OS Image: Ubuntu 22.04

CPU Architecture: x86 (Intel/AMD)

Server Type: CPX21

Networking:

Select Public IPv4

Private networks: Select our foo-bar-network

Add your own SSH key

Firewalls: foo-bar-firewall

Name: master-1

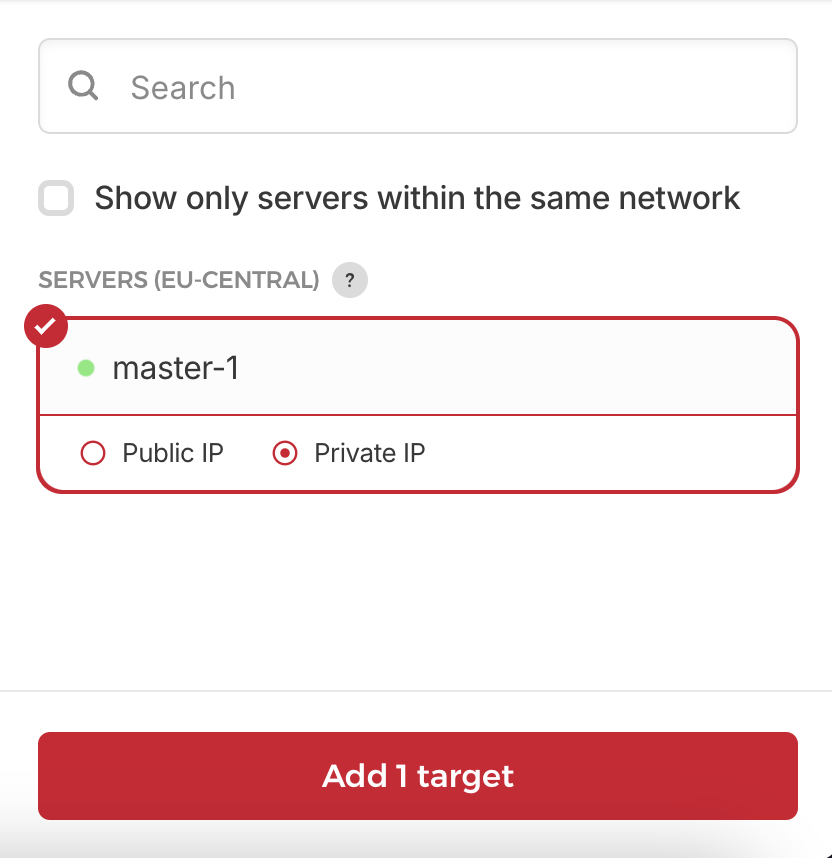

Now we have to configure our load balancer to target our node. We go to the load balancer we created and add the server as a new target using its private IP address.

We are now ready to start with the installation of Kubernetes.

Installation of RKE2

Let's connect to our server via SSH and prepare a few things.

ssh root@<MASTER_NODE_1_IP>

Update and upgrade packages:

apt-get update && apt-get upgrade

Create the RKE2 config file:

mkdir -p /etc/rancher/rke2 && touch /etc/rancher/rke2/config.yaml

The config file for our first master node should look like this:

# IP Address of the server in the private network

node-ip: <PRIVATE_IP_OF_SERVER>

# To avoid certificate errors with the fixed registration address

tls-san:

- <PUBLIC_IP_OF_LOADBALANCER>
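If you would rather not edit the file by hand, it can be written in one step with a here-document. The following is a minimal sketch under assumptions: the function name `write_first_server_config` is made up for this example, and the IP addresses in the usage note are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the RKE2 config for the first master node.
# Arguments: <config_dir> <private_ip_of_server> <public_ip_of_loadbalancer>
write_first_server_config() {
  local config_dir="$1"
  local node_ip="$2"
  local lb_public_ip="$3"

  mkdir -p "$config_dir"
  cat > "$config_dir/config.yaml" <<EOF
# IP address of the server in the private network
node-ip: ${node_ip}
# To avoid certificate errors with the fixed registration address
tls-san:
  - ${lb_public_ip}
EOF
}
```

On the node this could be called as `write_first_server_config /etc/rancher/rke2 10.0.0.2 203.0.113.10`, with both addresses replaced by your own values.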

Now we proceed with the installation. First, install the binary:

curl -sfL https://get.rke2.io | sh -

Enable the service:

systemctl enable rke2-server.service

Start the service:

systemctl start rke2-server.service

Check the logs to see whether anything went wrong:

journalctl -u rke2-server -f

Check if the nodes are available:

/var/lib/rancher/rke2/bin/kubectl get nodes --kubeconfig /etc/rancher/rke2/rke2.yaml

NAME STATUS ROLES AGE VERSION

master-1 Ready control-plane,etcd,master 11m v1.27.12+rke2r1

Looks good! Now let's see whether we can also access our cluster through our load balancer. Let's switch back to our notebook and copy the kubeconfig file onto it.

scp root@<PUBLIC_IP_OF_MASTER_NODE>:/etc/rancher/rke2/rke2.yaml ~/.kube/foo-bar-config.yaml

We have to replace the server address in our kubeconfig file with the public IP address of our load balancer. Otherwise kubectl would try to connect to the cluster on localhost:

# foo-bar-config.yaml

yada yada yada..

server: https://<PUBLIC_IP_OF_LOADBALANCER>:6443

yada yada yada..
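The same change can be scripted. The sketch below assumes the copied kubeconfig still contains the default `server: https://127.0.0.1:6443` line that RKE2 writes; the function name `point_kubeconfig_at_lb` and the IP address in the usage note are placeholders invented for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point a copied RKE2 kubeconfig at the load balancer.
# Arguments: <kubeconfig_file> <public_ip_of_loadbalancer>
point_kubeconfig_at_lb() {
  local kubeconfig="$1"
  local lb_public_ip="$2"
  local tmp_file
  tmp_file="$(mktemp)"

  # Replace only the host part of the "server:" line; the port stays 6443.
  sed "s#server: https://127\.0\.0\.1:6443#server: https://${lb_public_ip}:6443#" \
    "$kubeconfig" > "$tmp_file" && mv "$tmp_file" "$kubeconfig"
}
```

For example: `point_kubeconfig_at_lb ~/.kube/foo-bar-config.yaml 203.0.113.10`. Writing to a temporary file first avoids relying on `sed -i`, which behaves differently on Linux and macOS.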

Now let's check the connection.

export KUBECONFIG=~/.kube/foo-bar-config.yaml

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready control-plane,etcd,master 21m v1.27.12+rke2r1

Amazing!

Second and Third Master Nodes

The additional servers can be prepared in the same way as the first one:

Server provisioning

Add firewall

Add to private network

Add server as target in load-balancer on private address

Only the config file for RKE2 is going to be different and should look like this:

# /etc/rancher/rke2/config.yaml

# Private IP of your master-n server

node-ip: <PRIVATE_IP>

# Private IP of our Load Balancer

server: https://<PRIVATE_IP_OF_LOADBALANCER>:9345

token: <YOUR_SERVER_TOKEN>

tls-san:

- <PUBLIC_IP_OF_LOADBALANCER>

The server token is available on the master-1 node under /var/lib/rancher/rke2/server/node-token:

cat /var/lib/rancher/rke2/server/node-token
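Because this config file contains the cluster token, it is worth writing it with restrictive permissions. Here is a minimal sketch under assumptions: `write_joining_server_config` is a made-up helper name, and it expects that you have already copied the token from master-1 into a local file whose path you pass as the last argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the RKE2 config for an additional master node.
# Arguments: <config_dir> <private_ip_of_node> <private_ip_of_loadbalancer>
#            <public_ip_of_loadbalancer> <token_file>
write_joining_server_config() {
  local config_dir="$1"
  local node_ip="$2"
  local lb_private_ip="$3"
  local lb_public_ip="$4"
  local token
  # The token file is expected to hold the content of
  # /var/lib/rancher/rke2/server/node-token copied from master-1.
  token="$(cat "$5")" || return 1

  mkdir -p "$config_dir"
  cat > "$config_dir/config.yaml" <<EOF
node-ip: ${node_ip}
server: https://${lb_private_ip}:9345
token: ${token}
tls-san:
  - ${lb_public_ip}
EOF
  # The file contains a secret, so make it readable by its owner only.
  chmod 600 "$config_dir/config.yaml"
}
```

The function returns a non-zero status without writing anything if the token file cannot be read.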

Now we can proceed with the installation like before by downloading and installing the binary etc.

The end result should then look like this:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-1 Ready control-plane,etcd,master 21m v1.27.12+rke2r1

master-2 Ready control-plane,etcd,master 10m v1.27.12+rke2r1

master-3 Ready control-plane,etcd,master 5m v1.27.12+rke2r1
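If you want to check this from a script instead of by eye, you can count the nodes that report Ready. This is a small sketch; `count_ready_nodes` is a name invented for this example, and it simply parses the second column of the kubectl output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` from standard input.
count_ready_nodes() {
  awk '$2 == "Ready" { ready++ } END { print ready + 0 }'
}
```

Usage: `kubectl get nodes --no-headers | count_ready_nodes`. For the cluster above, the expected count is 3. Nodes with a combined status such as `Ready,SchedulingDisabled` are deliberately not counted.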

It's also worth mentioning that the master nodes are schedulable, as they don't carry the taint that would keep regular workloads off them. If you need that, just extend the config file on the respective node as follows:

node-taint:

- "CriticalAddonsOnly=true:NoExecute"

Conclusion

That's it! We have set up a highly available cluster that is ready for deploying our applications. It can serve as a playground for testing and experimentation. Note that we haven't configured a node autoscaler, so if a node goes down, manual intervention is required to bring it back or replace it. Until then, the cluster remains available on the two remaining nodes.

Feel free to share feedback and ideas on how to extend this approach :)
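Why three master nodes? Each of them also runs etcd (see the etcd role in the node list), and etcd stays writable only while a majority of its members is up. The arithmetic can be sketched as follows; the function names are made up for this example.

```shell
#!/usr/bin/env bash
# etcd needs a majority (quorum) of its members to keep accepting writes.
# quorum = floor(n / 2) + 1, tolerated failures = n - quorum

# Argument: <number_of_etcd_members>
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Argument: <number_of_etcd_members>
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}
```

With three members the quorum is 2, so `etcd_fault_tolerance 3` prints 1: the cluster survives the loss of one node. A fourth node would not help, since `etcd_fault_tolerance 4` also prints 1, which is why control planes are usually sized with an odd number of nodes.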
