Multiclass Classification in Python: Techniques, Examples, and Best Practices

Prakhar Kumar

Introduction: Multiclass classification is a fundamental machine learning task: given an input, predict exactly one class label from three or more possible classes. This post covers common techniques for implementing multiclass classification in Python, works through practical examples, and discusses best practices for evaluating and improving models.

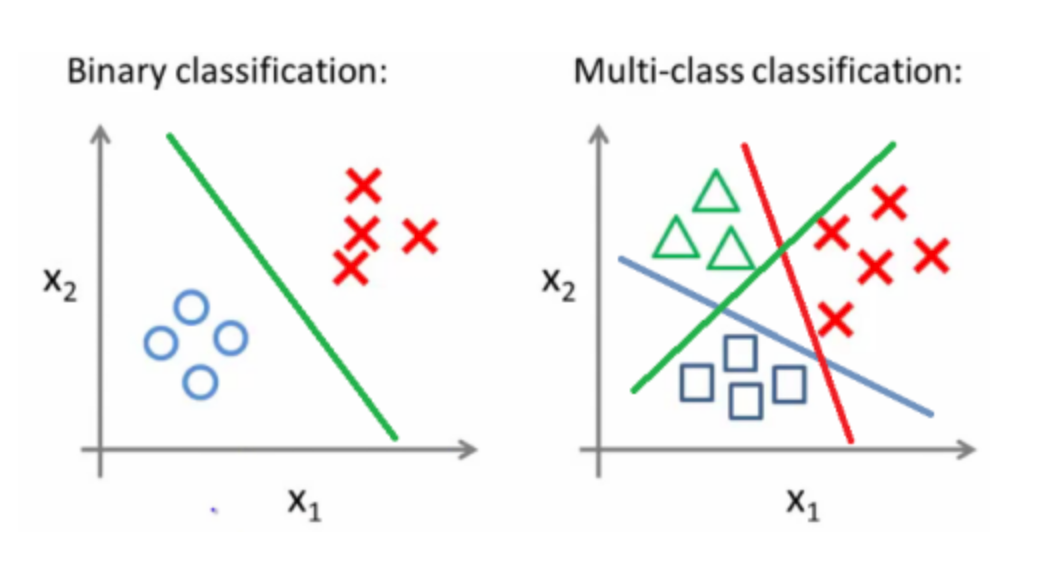

Understanding Multiclass Classification: Binary classification chooses between two classes, while multiclass classification chooses exactly one label from three or more. It is commonly used in applications such as image recognition, sentiment analysis (for example negative, neutral, or positive), and document categorization, where each input belongs to one of several categories.

Python Libraries for Multiclass Classification: Python offers a wide range of libraries and tools for implementing multiclass classification algorithms:

scikit-learn: scikit-learn provides a comprehensive set of algorithms and tools for multiclass classification, including Support Vector Machines (SVM), Random Forests, Gradient Boosting, and more.

TensorFlow and Keras: TensorFlow and Keras offer deep learning frameworks with built-in support for multiclass classification using neural network architectures like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs).

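XGBoost: XGBoost is a popular gradient boosting library. It builds an ensemble of decision trees sequentially, with each new tree correcting the errors of the trees before it, and it supports multiclass objectives directly (multi:softmax and multi:softprob).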

NLTK: NLTK is a natural language processing toolkit that can be used for text classification tasks involving multiple classes, with classifiers such as Naive Bayes and Maximum Entropy.

Techniques for Multiclass Classification:

One-vs-Rest (OvR) Strategy: Decomposes the multiclass problem into one binary classification problem per class. Each binary classifier learns to separate its class from all the others, and the class whose classifier gives the highest score is predicted.

One-vs-One (OvO) Strategy: Trains one binary classifier for each pair of classes, which is K(K-1)/2 classifiers for K classes, and uses majority voting across them to determine the final class label.
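
To make the difference between the two decomposition strategies concrete, here is a minimal sketch using scikit-learn's OneVsRestClassifier and OneVsOneClassifier wrappers. The synthetic four-class dataset and the choice of logistic regression as the base classifier are assumptions made for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

# Synthetic 4-class dataset (illustrative assumption, not real data).
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=6,
    n_classes=4, random_state=0,
)

base = LogisticRegression(max_iter=1000)

# One-vs-Rest: one binary classifier per class (class k vs. everything else).
ovr = OneVsRestClassifier(base).fit(X, y)

# One-vs-One: one binary classifier per pair of classes, combined by voting.
ovo = OneVsOneClassifier(base).fit(X, y)

n_classes = len(ovr.classes_)
print("Classes:", n_classes)
print("OvR classifiers:", len(ovr.estimators_))  # K
print("OvO classifiers:", len(ovo.estimators_))  # K * (K - 1) / 2
```

OvO trains more classifiers, but each one sees only the samples of two classes, so the trade-off depends on the number of classes and on how the base classifier scales with training set size.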

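Multinomial Logistic Regression: Models the probability distribution over all classes directly with a single model, using the softmax function to turn per-class scores into probabilities. It is also known as softmax regression.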
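
The softmax step can be checked by hand. The sketch below assumes a recent scikit-learn version, where LogisticRegression fits a multinomial model by default when there are more than two classes and the default solver is used. It computes P(y = k | x) = exp(z_k) / sum_j exp(z_j) from the model's raw scores and compares the result with predict_proba. The softmax helper is written here for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Assumption: default settings give a multinomial (softmax) model for 3 classes.
clf = LogisticRegression(max_iter=1000).fit(X, y)


def softmax(scores):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)


scores = clf.decision_function(X[:5])    # one linear score per class
manual_proba = softmax(scores)           # probabilities computed by hand
library_proba = clf.predict_proba(X[:5])

print(manual_proba.round(3))
print("Rows sum to 1:", np.allclose(manual_proba.sum(axis=1), 1.0))
print("Matches predict_proba:", np.allclose(manual_proba, library_proba))
```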

Ensemble Methods: Combine multiple base models to improve classification accuracy. Random Forests, Gradient Boosting, and Voting classifiers are common examples.
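
As a rough sketch of the ensemble idea, the following combines three different scikit-learn models with a soft-voting VotingClassifier on the Iris dataset. The choice of base models and their settings is an assumption for illustration, not a recommendation.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    VotingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Soft voting averages the predicted class probabilities of the base models.
ensemble = VotingClassifier(
    estimators=[
        ("lr", LogisticRegression(max_iter=1000)),
        ("rf", RandomForestClassifier(n_estimators=100, random_state=42)),
        ("gb", GradientBoostingClassifier(random_state=42)),
    ],
    voting="soft",
)
ensemble.fit(X_train, y_train)

ensemble_accuracy = accuracy_score(y_test, ensemble.predict(X_test))
print("Voting ensemble accuracy:", ensemble_accuracy)
```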

Python Implementation Examples:

Example 1: Multiclass Classification with scikit-learn and SVM

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

# Load the Iris dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train an SVM classifier
svm_classifier = SVC(kernel='linear')
svm_classifier.fit(X_train, y_train)

# Make predictions on the test set
y_pred = svm_classifier.predict(X_test)

# Evaluate accuracy
accuracy = accuracy_score(y_test, y_pred)
print("Accuracy:", accuracy)

Example 2: Multiclass Text Classification with NLTK and Naive Bayes

import nltk
from nltk.corpus import movie_reviews
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.metrics import classification_report

# Load movie reviews dataset from NLTK
# Note: movie_reviews has only two categories ('neg' and 'pos'); the same
# code works unchanged on a corpus with three or more categories.
nltk.download('movie_reviews')
documents = [(list(movie_reviews.words(fileid)), category)
             for category in movie_reviews.categories()
             for fileid in movie_reviews.fileids(category)]

# Preprocess text data and extract bag-of-words features
vectorizer = CountVectorizer()
X = vectorizer.fit_transform([" ".join(doc) for doc, _ in documents])
y = [category for _, category in documents]

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a Multinomial Naive Bayes classifier
nb_classifier = MultinomialNB()
nb_classifier.fit(X_train, y_train)

# Make predictions on the test set
y_pred = nb_classifier.predict(X_test)

# Evaluate classification report
print("Classification Report:")
print(classification_report(y_test, y_pred))

Example 3: Multiclass Image Classification with TensorFlow and CNN

import tensorflow as tf
from tensorflow.keras.datasets import cifar10
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Load the CIFAR-10 dataset (10 classes, integer labels 0-9)
(X_train, y_train), (X_test, y_test) = cifar10.load_data()

# Normalize pixel values to the range [0, 1]
X_train, X_test = X_train / 255.0, X_test / 255.0

# Define a Convolutional Neural Network (CNN) model
model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(10, activation='softmax')  # one output probability per class
])

# Compile the model (sparse loss because labels are integers, not one-hot)
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train the model, holding out 10% of the training data for validation
# so that the test set is only used for the final evaluation
model.fit(X_train, y_train, epochs=10, validation_split=0.1)

# Evaluate accuracy on test set
test_loss, test_acc = model.evaluate(X_test, y_test)
print("Test Accuracy:", test_acc)

Best Practices for Multiclass Classification:

Data Preprocessing: Normalize and preprocess input features, handle missing values, and encode categorical variables appropriately.
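
A minimal sketch of these preprocessing steps, assuming pandas is available alongside scikit-learn. The toy table, its column names (length_cm, weight_g, color), and the labels are all hypothetical and exist only to show imputation, scaling, and one-hot encoding inside a single pipeline.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data: two numeric columns with gaps and one categorical column.
X = pd.DataFrame({
    "length_cm": [5.1, 6.2, np.nan, 4.8, 7.0, 6.5, 5.0, np.nan, 6.9],
    "weight_g": [12.0, np.nan, 30.5, 11.2, 33.1, 21.7, 12.4, 20.9, 31.8],
    "color": ["red", "green", "blue", "red", "blue", "green", "red", "green", "blue"],
})
y = ["small", "medium", "large", "small", "large", "medium", "small", "medium", "large"]

# Numeric columns: fill missing values with the median, then standardize.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Categorical column: one-hot encode, ignoring categories unseen during fit.
preprocess = ColumnTransformer([
    ("num", numeric_steps, ["length_cm", "weight_g"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["color"]),
])

model = Pipeline([
    ("preprocess", preprocess),
    ("clf", LogisticRegression(max_iter=1000)),
])
model.fit(X, y)

n_features_out = model.named_steps["preprocess"].transform(X).shape[1]
print("Columns after preprocessing:", n_features_out)
print("Predictions:", model.predict(X.head(3)))
```

Keeping the preprocessing inside the pipeline means the imputation and scaling statistics are learned from the training data only, which avoids leaking information from the test set.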

Feature Engineering: Select relevant features, perform dimensionality reduction if necessary, and extract meaningful features from text or image data.
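
The sketch below illustrates two of these ideas with scikit-learn on the Wine dataset: univariate feature selection with SelectKBest and dimensionality reduction with PCA. The values k=5 and n_components=2 are arbitrary choices for illustration.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)  # 13 numeric features, 3 classes
X_scaled = StandardScaler().fit_transform(X)

# Feature selection: keep the 5 features with the highest ANOVA F-score.
selector = SelectKBest(score_func=f_classif, k=5)
X_selected = selector.fit_transform(X_scaled, y)

# Dimensionality reduction: project onto the first 2 principal components.
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X_scaled)

print("Original shape:", X.shape)
print("After SelectKBest:", X_selected.shape)
print("After PCA:", X_reduced.shape)
print("Variance explained by 2 components:", pca.explained_variance_ratio_.sum())
```

In a real project these transformers would normally be fitted on the training split only, for example as steps in a Pipeline, rather than on the full dataset as in this short demonstration.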

Model Selection: Choose appropriate algorithms and models based on the nature of the data and problem, considering factors like scalability, interpretability, and computational resources.
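
One simple way to compare candidate models is to cross-validate each of them on the same data and look at both accuracy and training time. The three candidates below are an assumed shortlist for illustration, evaluated on the Wine dataset with scikit-learn's cross_validate.

```python
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_wine(return_X_y=True)

# Assumed shortlist of candidates; scaling is included where the model needs it.
candidates = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "svm_rbf": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

results = {}
for name, model in candidates.items():
    cv = cross_validate(model, X, y, cv=5, scoring="accuracy")
    results[name] = {
        "mean_accuracy": cv["test_score"].mean(),
        "mean_fit_time_s": cv["fit_time"].mean(),
    }
    print(f"{name}: accuracy={results[name]['mean_accuracy']:.3f}, "
          f"fit time={results[name]['mean_fit_time_s']:.4f}s")
```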

Hyperparameter Tuning: Fine-tune model hyperparameters using techniques like grid search, random search, or Bayesian optimization to improve model performance.
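
Grid search can be sketched with scikit-learn's GridSearchCV as follows. The parameter grid is an assumption chosen to keep the example small, not a set of tuned recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

pipeline = Pipeline([("scale", StandardScaler()), ("svm", SVC())])

# Illustrative grid; "svm__" routes each parameter to the pipeline's SVC step.
param_grid = {
    "svm__kernel": ["linear", "rbf"],
    "svm__C": [0.1, 1, 10],
}

# Every combination is scored with 5-fold cross-validation on the training set.
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
print("Best cross-validated accuracy:", search.best_score_)
print("Held-out test accuracy:", search.score(X_test, y_test))
```

For larger search spaces, RandomizedSearchCV follows the same pattern but samples a fixed number of combinations instead of trying all of them.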

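Model Evaluation: Evaluate model performance using appropriate metrics such as accuracy, precision, recall, F1-score, and the confusion matrix. Accuracy alone can be misleading when classes are imbalanced, so report per-class metrics or macro-averaged scores as well. Consider cross-validation for a more robust estimate.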
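
A short sketch of these evaluation tools with scikit-learn on the Iris dataset. The classifier and the split settings are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix, f1_score
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_pred = clf.predict(X_test)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_test, y_pred)
print(cm)

# Per-class precision, recall, and F1, plus macro and weighted averages.
print(classification_report(y_test, y_pred))

# Macro F1 weights every class equally, regardless of how many samples it has.
macro_f1 = f1_score(y_test, y_pred, average="macro")
print("Macro F1:", macro_f1)

# Stratified 5-fold cross-validation gives an estimate that depends less on one split.
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
cv_scores = cross_val_score(
    LogisticRegression(max_iter=1000), X, y, cv=folds, scoring="f1_macro"
)
print("Cross-validated macro F1: %.3f +/- %.3f" % (cv_scores.mean(), cv_scores.std()))
```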

Conclusion: Multiclass classification is a crucial machine learning task with diverse applications. Python provides powerful libraries and techniques for implementing multiclass classification algorithms effectively. By understanding different strategies, experimenting with examples, and following best practices, developers can build accurate and robust multiclass classification models for various real-world scenarios.
